Upload and inference pre-trained ONNX models in MLTK

Splunk Machine Learning Toolkit (MLTK) version 5.4.0 and higher lets you upload pre-trained ONNX models for inferencing in MLTK. You can train models in your preferred third-party environment, save each model in the .onnx file format, upload the model file to MLTK, and then retrieve and inference that model in MLTK. This option lets you perform process-heavy model training outside of the Splunk platform while still benefiting from model operationalization within the Splunk platform.

About ONNX

ONNX stands for Open Neural Network Exchange, an open file format for representing machine learning models. Models in this format can be created and used with a variety of frameworks, tools, runtimes, and compilers. ONNX supports many ML libraries and offers language support for loading models and running inference in Python, C++, and Java. To learn more, see https://onnx.ai/

About ONNX model sizes

ONNX models are natively binary files. When you upload a model, the file is converted to a base64 encoded entry to create the lookup entry. This encoding increases the file size by approximately 33% to 37%, so take it into consideration when setting your model upload file size limit.

The maximum model file upload size is defined by the max_model_size_mb setting in the mlspl.conf file. To learn how to change this setting, see Edit default settings in the mlspl.conf file.
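As a rough pre-upload check, the following Python sketch estimates the base64-encoded size of a model file. Standard base64 turns every 3 input bytes into 4 output characters, an increase of about 33%; line breaks in the encoded output, if any, push the overhead higher. The function names are hypothetical, and so are the assumptions that the limit is compared against the encoded size and that 1 MB equals 1,048,576 bytes.

```python
from typing import Optional


def base64_size(raw_bytes: int, line_length: Optional[int] = None, newline_bytes: int = 1) -> int:
    """Return the length of standard base64 output (with '=' padding) for raw_bytes of input.

    If line_length is given, add newline_bytes for every (possibly partial) line of
    encoded output. With line_length=76 this matches Python's base64.encodebytes().
    """
    encoded = 4 * ((raw_bytes + 2) // 3)  # 4 output characters per started 3-byte group
    if line_length:
        lines = (encoded + line_length - 1) // line_length
        encoded += lines * newline_bytes
    return encoded


def fits_upload_limit(raw_bytes: int, max_model_size_mb: float) -> bool:
    """Hypothetical check: does the encoded model fit under max_model_size_mb?

    Assumes the limit applies to the base64-encoded size and that 1 MB = 1024 * 1024 bytes.
    """
    return base64_size(raw_bytes) <= max_model_size_mb * 1024 * 1024
```

For example, `base64_size(os.path.getsize("model.onnx"))` estimates the encoded size of a local file named `model.onnx` (a placeholder name).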

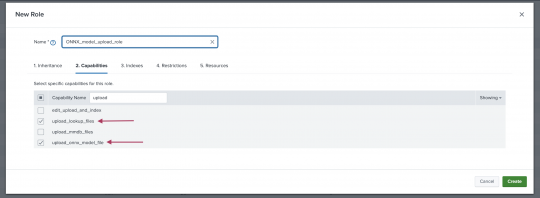

ONNX file upload permissions

By default, the ONNX model upload capability is turned off for all users. Your Splunk admin can turn on the upload capability by creating a custom role and assigning that role the following two required permissions:

- upload_lookup_files
- upload_onnx_model_file

Your Splunk admin can also add these permissions to any existing role so that users with that role can upload ONNX models.

To learn more, see Set permission granularity with custom roles in the Securing Splunk Enterprise manual.

As an alternative, you can use the mltk_model_admin role that ships with MLTK. This role includes the two required role permissions.
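If your admin prefers to script the role setup, the following Python sketch builds a request to the Splunk REST API `authorization/roles` endpoint that creates a custom role with both required permissions. The role name `onnx_uploader`, the choice to inherit the built-in `user` role, and the helper names are hypothetical, and the sketch assumes multi-valued parameters are sent as repeated keys; check the REST API reference for your Splunk version before relying on it.

```python
import base64
import ssl
import urllib.parse
import urllib.request
from typing import Sequence

# The two permissions this page lists as required for ONNX model upload.
REQUIRED_CAPABILITIES = ("upload_lookup_files", "upload_onnx_model_file")


def role_payload(role_name: str, imported_roles: Sequence[str] = ("user",)) -> bytes:
    """Build the form body for POST /services/authorization/roles.

    Multi-valued parameters (capabilities, imported_roles) are sent as repeated keys.
    """
    fields = [("name", role_name)]
    fields += [("capabilities", cap) for cap in REQUIRED_CAPABILITIES]
    fields += [("imported_roles", role) for role in imported_roles]
    return urllib.parse.urlencode(fields).encode("ascii")


def create_role(base_url: str, username: str, password: str, role_name: str,
                verify_tls: bool = True) -> int:
    """POST the new role to a Splunk management port, for example https://localhost:8089.

    Returns the HTTP status code; urlopen raises HTTPError on 4xx/5xx responses.
    """
    request = urllib.request.Request(
        base_url.rstrip("/") + "/services/authorization/roles",
        data=role_payload(role_name),
        method="POST",
    )
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    request.add_header("Authorization", f"Basic {credentials}")
    context = ssl.create_default_context()
    if not verify_tls:
        # Only for test instances that still use a self-signed certificate.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return response.status
```

For example, `create_role("https://localhost:8089", "admin", "<password>", "onnx_uploader")` would create the role, which you then assign to users as usual.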

Requirements

To use the ONNX model upload feature, you need the following:

- You must be running MLTK version 5.4.0 or higher and a compatible version of the Python for Scientific Computing (PSC) add-on. See Splunk Machine Learning Toolkit version dependencies.

- Use the correct ONNX opset value: Opset values are important when converting models from sklearn, TensorFlow, or PyTorch to the ONNX format. You must use the correct opset value when creating your models in order to successfully upload them, and incorrect opset values lead to inference errors. Use the latest target opset value officially supported by onnx.ai. To learn more, see http://onnx.ai/sklearn-onnx/auto_tutorial/plot_cbegin_opset.html#what-is-the-opset-number
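Before uploading, you can confirm which opset a converted model declares. ONNX models record their operator set versions in the `opset_import` field, where the default operator domain appears as an empty string. The following Python sketch reads those entries with the `onnx` package and compares the default-domain version against a maximum you supply. The helper names are hypothetical, and `max_supported_opset` is a value you choose from the opset guidance linked above, not one this page specifies. If you convert with sklearn-onnx, its `to_onnx` and `convert_sklearn` functions accept a `target_opset` argument that controls this value.

```python
from typing import Iterable, List, Optional, Tuple

# The default ONNX operator domain is written as "" and is also known as "ai.onnx".
DEFAULT_DOMAINS = ("", "ai.onnx")


def read_opset_imports(path: str) -> List[Tuple[str, int]]:
    """Return (domain, version) pairs from a model file; requires the onnx package."""
    import onnx  # imported here so the pure helpers below work without onnx installed

    model = onnx.load(path)
    return [(entry.domain, entry.version) for entry in model.opset_import]


def default_opset(opset_imports: Iterable[Tuple[str, int]]) -> Optional[int]:
    """Return the opset version of the default ONNX domain, or None if it is absent."""
    for domain, version in opset_imports:
        if domain in DEFAULT_DOMAINS:
            return version
    return None


def opset_is_supported(opset_imports: Iterable[Tuple[str, int]], max_supported_opset: int) -> bool:
    """False if the default-domain opset is newer than max_supported_opset or cannot be found."""
    version = default_opset(opset_imports)
    return version is not None and version <= max_supported_opset
```

For example, `opset_is_supported(read_opset_imports("model.onnx"), 15)` checks a placeholder file against an illustrative maximum of 15.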

Upload and inference a new ONNX model

Perform the following steps to upload a new ONNX model file and inference that model file in MLTK:

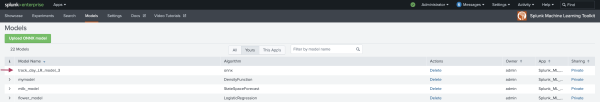

- From the MLTK main navigation bar, select the Models tab.

- Click the Upload ONNX model button.

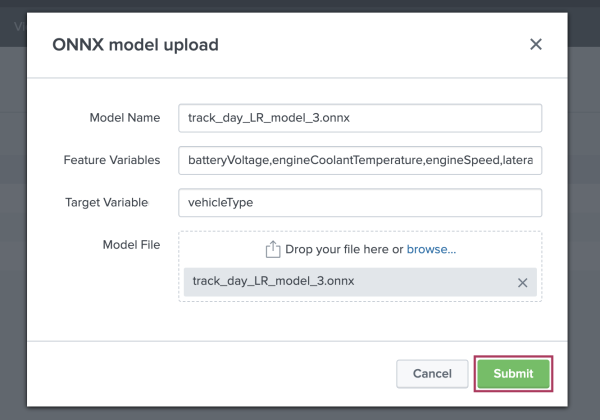

- Fill in the fields as follows. All fields are required:

- Enter the Model Name including the .onnx file format extension. The model name must be alphanumeric and can include underscores.

- Input one or more Feature Variables. Values must be comma-separated.

- Input one Target Variable.

- Upload the Model File. The Model File name must match the Model Name, and the model must be in the .onnx file format.

- Click Submit when done.

- The uploaded model goes through a series of validation steps, including checks that the user has the required model upload capabilities and that the model is in the correct file format. A success message appears on screen.

A successful upload means only that the model has been uploaded and stored. It does not mean that the model will give you good results when you run it on new data.

- The model is now visible under the Models tab and stored as an MLTK lookup entry.

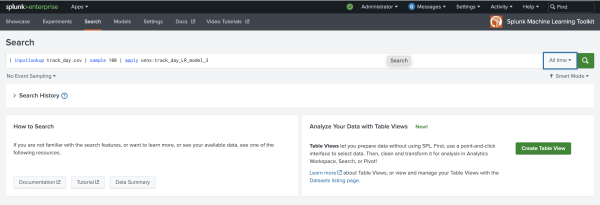

- Open the Search tab and compose an SPL search query to inference the uploaded ONNX model on new data as shown in the following example:

| inputlookup track_day.csv | sample 100 | apply onnx:track_day_LR_model_3

This SPL query uses the ML-SPL apply command in combination with the onnx keyword.

If you want to use the ONNX model under the app namespace, you must change the model permissions to App or Global. In the search, you must prefix the ONNX model call with the app keyword, as shown in the following example:

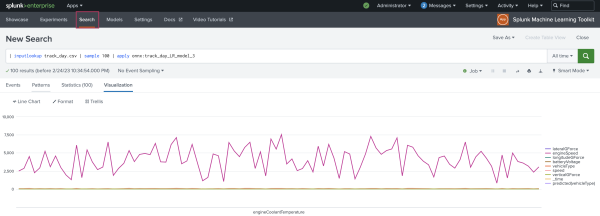

| inputlookup track_day.csv | sample 100 | apply app:onnx:track_day_LR_model_3

- View the on-screen results from running the query. If the results look good, you can put the model into production.

If the results are not what you need or expect, one or both of the following might be true:

- The ONNX model might need further tuning and iteration. After you finish tuning the model, re-upload it using the same Model Name to overwrite the previous upload of the same name.

- The Feature Variables you chose during model upload might not align with the column names of the new data. You must re-upload the model with the correct Feature Variables. Re-uploading using the same Model Name overwrites the previous upload of the same name.
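To catch field mistakes before submitting, you can run a local pre-check that mirrors the field rules in the upload steps and compares the Feature Variables with the column names of your new data. The following Python sketch is hypothetical: MLTK performs its own validation on upload, and the sketch assumes that "alphanumeric" means ASCII letters and digits.

```python
import csv
import re
from typing import List, Sequence

ONNX_SUFFIX = ".onnx"


def parse_features(value: str) -> List[str]:
    """Split a comma-separated Feature Variables value into trimmed, non-empty names."""
    return [name.strip() for name in value.split(",") if name.strip()]


def check_upload_fields(model_name: str, file_name: str, features: str, target: str) -> List[str]:
    """Return a list of problems with the upload form values (empty if none are found)."""
    problems = []
    has_suffix = model_name.endswith(ONNX_SUFFIX)
    stem = model_name[: -len(ONNX_SUFFIX)] if has_suffix else model_name
    if not has_suffix:
        problems.append("Model Name should include the .onnx extension")
    if not re.fullmatch(r"[A-Za-z0-9_]+", stem):
        problems.append("Model Name must be alphanumeric and may include underscores")
    if file_name != model_name:
        problems.append("Model File name must match the Model Name")
    if not parse_features(features):
        problems.append("Enter at least one Feature Variable")
    if len(parse_features(target)) != 1:
        problems.append("Enter exactly one Target Variable")
    return problems


def csv_header(path: str) -> List[str]:
    """Read the column names from the first row of a CSV file."""
    with open(path, newline="") as handle:
        return [column.strip() for column in next(csv.reader(handle), [])]


def missing_features(features: str, columns: Sequence[str]) -> List[str]:
    """Return the Feature Variables that do not appear among the data's column names."""
    available = set(columns)
    return [name for name in parse_features(features) if name not in available]
```

For example, `missing_features("f1, f2", csv_header("new_data.csv"))` lists any Feature Variables missing from a placeholder `new_data.csv`; a non-empty result suggests re-uploading the model with corrected Feature Variables.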

Troubleshoot ONNX model uploads

The following table lists errors you might encounter when using the ONNX model upload feature and how to troubleshoot them:

| Error message | Troubleshoot |
|---|---|
| ONNX user validation error | Check that the required capabilities or roles are assigned to the user. |
| ONNX model validation error | Invalid ONNX schema found in the model file. Make sure the model passes validation with the onnx.checker module and try again. See https://github.com/onnx/onnx/blob/main/onnx/bin/checker.py |
| Model size limit exceeded | Increase the max_model_size_mb value on the settings page to allow larger model uploads. |
| Unknown model format | Error while encoding the model file to base64. Validate that the model file is an ONNX binary and try again. |
| Opset 17 is under development and support for this is limited. The operator schemas and or other functionality may change before next ONNX release and in this case ONNX Runtime will not guarantee backward compatibility. | Use the latest target opset value officially supported by onnx.ai. See http://onnx.ai/sklearn-onnx/auto_tutorial/plot_cbegin_opset.html#what-is-the-opset-number |
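The model validation and model format errors in this table can often be reproduced locally before you retry an upload. The following Python sketch runs basic file checks, then loads the model with the `onnx` package and runs `onnx.checker.check_model`. The function name and messages are hypothetical, and passing these checks does not guarantee that MLTK accepts the model.

```python
import os
from typing import List


def validate_onnx_file(path: str) -> List[str]:
    """Return a list of problems found in an ONNX model file (empty if none are found)."""
    if not os.path.isfile(path):
        return [f"{path}: file not found"]
    if os.path.getsize(path) == 0:
        return [f"{path}: file is empty"]

    import onnx  # deferred so the basic file checks above work without onnx installed

    try:
        model = onnx.load(path)
    except Exception as exc:  # typically a protobuf decode error for non-ONNX binaries
        return [f"{path}: could not be parsed as an ONNX model ({exc})"]
    try:
        onnx.checker.check_model(model)
    except onnx.checker.ValidationError as exc:
        return [f"{path}: invalid ONNX schema ({exc})"]
    return []
```

For example, `validate_onnx_file("track_day_LR_model_3.onnx")` returns an empty list when a local copy of the model passes both checks.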


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 5.4.0, 5.4.1, 5.4.2, 5.5.0
