Set up LLM-RAG

Complete the following steps to set up and begin using large language model retrieval-augmented generation (LLM-RAG).

Only Docker deployments are supported for running LLM-RAG on the Splunk App for Data Science and Deep Learning (DSDL).

Prerequisites

If you have not done so already, install or upgrade to DSDL version 5.2.0 and its dependencies. See Install or upgrade the Splunk App for Data Science and Deep Learning.

After installation, go to the setup page and configure Docker. See Configure the Splunk App for Data Science and Deep Learning.

Have Docker installed. See https://docs.docker.com/engine/install

Have Docker Compose installed. See https://docs.docker.com/compose/install

Make sure that the Splunk search head can access the Docker host on port 5000 for API communication, and port 2375 for the Docker agent.
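The port requirement above can be checked before you continue. The following is a minimal sketch, not part of DSDL, that assumes it runs on the Splunk search head and that a plain TCP connection is a good enough reachability test. The function names port_is_open and check_docker_host are hypothetical helpers introduced for illustration.

```python
import socket

# Ports named in the prerequisites: 5000 for API communication,
# 2375 for the Docker agent.
REQUIRED_PORTS = (5000, 2375)


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False


def check_docker_host(host: str, ports=REQUIRED_PORTS) -> dict:
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_is_open(host, port) for port in ports}
```

Calling check_docker_host with the address of your Docker host returns a dictionary such as {5000: True, 2375: False}, which shows which port still needs a firewall or routing change. A successful connection only shows that the port is reachable, not that the expected service is listening on it.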

Set up container environment

Follow these steps to configure a Docker host with the required container images. If you use an air-gapped environment, see Set up LLM-RAG in an air-gapped environment.

- Run the following command to pull the LLM-RAG image to your Docker host:

docker pull splunk/mltk-container-ubi-llm-rag:5.2.0

- Get the Docker Compose files from the GitHub public repository as follows:

wget https://raw.githubusercontent.com/splunk/splunk-mltk-container-docker/v5.2/beta_content/passive_deployment_prototypes/prototype_ollama_example/compose_files/milvus-docker-compose.yml
wget https://raw.githubusercontent.com/splunk/splunk-mltk-container-docker/v5.2/beta_content/passive_deployment_prototypes/prototype_ollama_example/compose_files/ollama-docker-compose.yml
wget https://raw.githubusercontent.com/splunk/splunk-mltk-container-docker/v5.2/beta_content/passive_deployment_prototypes/prototype_ollama_example/compose_files/ollama-docker-compose-gpu.yml
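If wget is not available on the Docker host, the same three files can be fetched with the Python standard library. This is a sketch under that assumption only. The names compose_file_urls and download_compose_files are hypothetical, and the URLs are the ones listed in the step above.

```python
from pathlib import Path
from urllib.request import urlretrieve

# Common prefix of the three URLs given in the documentation.
BASE_URL = (
    "https://raw.githubusercontent.com/splunk/splunk-mltk-container-docker/"
    "v5.2/beta_content/passive_deployment_prototypes/"
    "prototype_ollama_example/compose_files/"
)

COMPOSE_FILES = (
    "milvus-docker-compose.yml",
    "ollama-docker-compose.yml",
    "ollama-docker-compose-gpu.yml",
)


def compose_file_urls() -> list:
    """Return the full download URL for each Docker Compose file."""
    return [BASE_URL + name for name in COMPOSE_FILES]


def download_compose_files(dest_dir: str = ".") -> list:
    """Download every Compose file into dest_dir and return the local paths."""
    target = Path(dest_dir)
    target.mkdir(parents=True, exist_ok=True)
    saved = []
    for name, url in zip(COMPOSE_FILES, compose_file_urls()):
        local_path = target / name
        urlretrieve(url, str(local_path))  # requires outbound HTTPS access
        saved.append(local_path)
    return saved
```

Running download_compose_files() in the directory where you plan to run Docker Compose leaves the three .yml files in place, so the commands in the next section can be used unchanged.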

Run Docker Compose command

Follow these steps to run the Docker Compose command:

- Install Ollama Module.

For CPU machines, run this command:

docker compose -f ollama-docker-compose.yml up --detach

For GPU machines with the NVIDIA driver installed, run this command:

docker compose -f ollama-docker-compose-gpu.yml up --detach

- Install Milvus Module:

docker compose -f milvus-docker-compose.yml up --detach

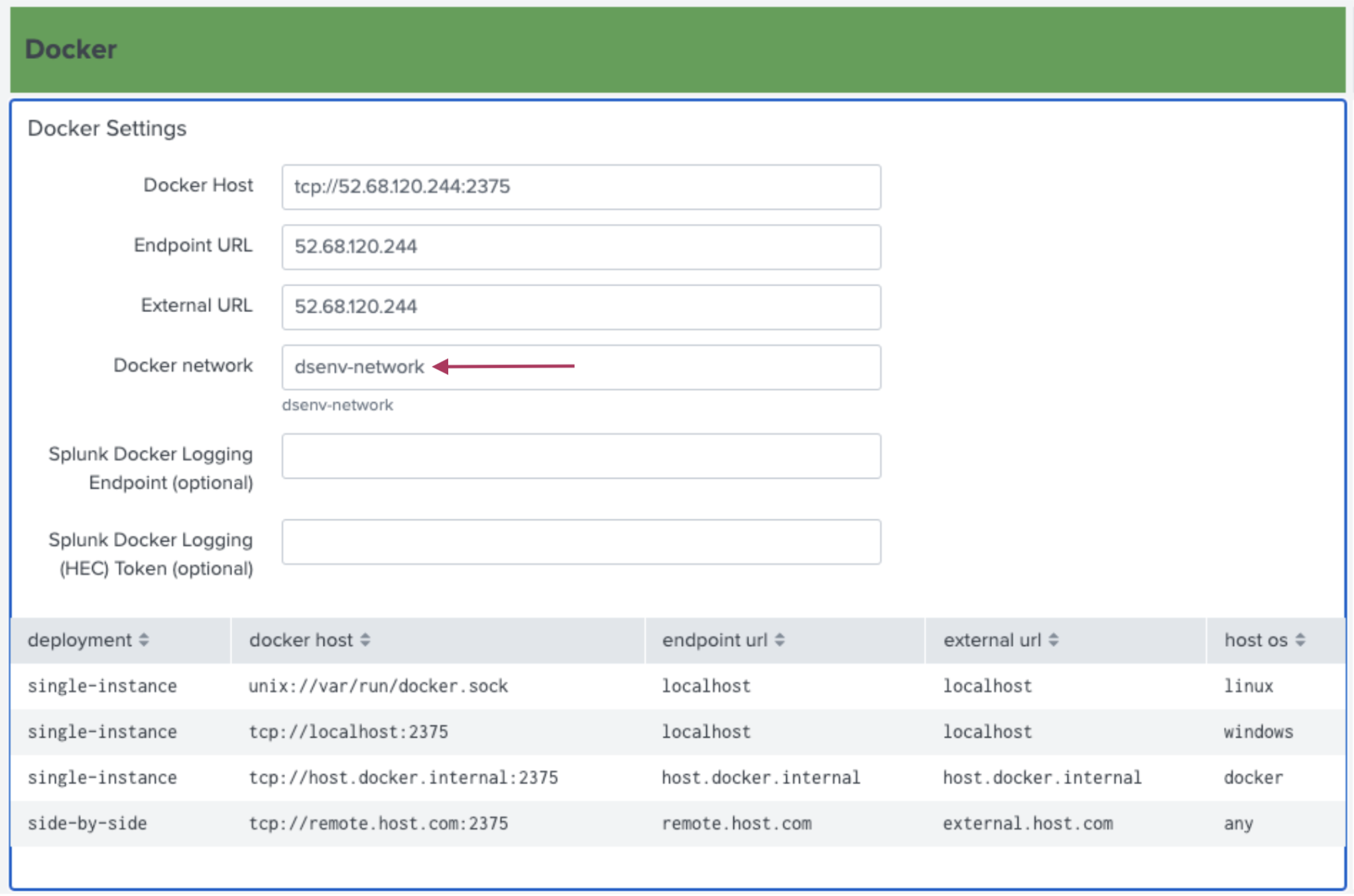

- As a final check, make sure the Ollama container has been spun up and is under the Docker network named dsenv-network:

docker ps
docker inspect <Container ID of Ollama>

- Pull an LLM to your local machine by running the following command. Replace MODEL_NAME with the specific model name listed in the Ollama library at https://ollama.com/library:

curl http://localhost:11434/api/pull -d '{ "name": "MODEL_NAME"}'

You can also pull models at a later stage using the Splunk DSDL command or dashboard.
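The network check in the steps above relies on reading the docker inspect output by eye. As a hedged sketch, the check can be automated by parsing that JSON output, which lists attached networks under NetworkSettings.Networks. The helper names container_networks and is_on_network are hypothetical, and the second one assumes the docker CLI is on the PATH.

```python
import json
import subprocess

EXPECTED_NETWORK = "dsenv-network"


def container_networks(inspect_json: str) -> set:
    """Return the network names found in `docker inspect` JSON output."""
    names = set()
    # `docker inspect` prints a JSON array with one object per container.
    for container in json.loads(inspect_json):
        networks = container.get("NetworkSettings", {}).get("Networks") or {}
        names.update(networks)
    return names


def is_on_network(container_id: str, network: str = EXPECTED_NETWORK) -> bool:
    """Run `docker inspect` for one container and test its network membership."""
    result = subprocess.run(
        ["docker", "inspect", container_id],
        capture_output=True,
        text=True,
        check=True,  # raise if the container ID is unknown
    )
    return network in container_networks(result.stdout)
```

Pass the container ID that docker ps reports for Ollama to is_on_network. A False result suggests the container was started outside the provided Compose files or on a different network.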

Configure DSDL for LLM-RAG

Complete these steps to configure DSDL for LLM-RAG:

- Input dsenv-network in the Docker network box, as shown in the following image. This step ensures communication between your DSDL container and the other containers created by Docker Compose.

- (Optional) If you prefer to remain on DSDL version 5.1.2 or 5.1.1, you can hardcode this network option into DSDL:

- Open SPLUNK_HOME/etc/apps/mltk-container/bin/start_handler.py.

- On line 127, add network='dsenv-network' to the docker_client.containers.run function as follows:

c = self.docker_client.containers.run(
    repo + image_name,
    labels={
        "mltk_container": "",
        "mltk_model": model,
    },
    runtime=runtime,
    detach=True,
    ports=docker_ports,
    volumes={
        'mltk-container-data': {'bind': '/srv', 'mode': 'rw'},
        'mltk-container-app': {'bind': '/srv/backup/app', 'mode': 'ro'},
        'mltk-container-notebooks': {'bind': '/srv/backup/notebooks', 'mode': 'ro'}
    },
    remove=True,
    log_config=docker_log_config,
    environment=environment_vars,
    network='dsenv-network')

- In the Splunk App for Data Science and Deep Learning (DSDL), navigate to the Containers page and spin up the Red Hat LLM RAG CPU (5.2) container.

- (Optional) If you did not pull the LLM on your Docker host, you can use either of the following methods to do so:

- Run an SPL command: Run the following SPL command on the Splunk search head to download model files of your choice. Replace <model_name> with the model name listed here: https://ollama.com/library:

| makeresults | fit MLTKContainer algo=llm_rag_ollama_model_manager task=pull model=<model_name> _time into app:llm_rag_ollama_model_manager as Pulled

To delete a certain model, replace task=pull with task=delete.

To list all existing models, replace task=pull with task=list.

- Use LLM dashboard: Use the LLM Management dashboard on DSDL to download models. From the Assistants menu choose LLM-RAG.
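The pull, delete, and list variants of the SPL command differ only in the task value. The following sketch builds the search string for each variant so it can be pasted into the search bar or passed to whatever tooling you use to run searches. The function model_manager_spl is a hypothetical helper, and it keeps the model argument for every task because the documentation only describes swapping the task value.

```python
# Task values named in the documentation for the model manager algorithm.
VALID_TASKS = ("pull", "delete", "list")


def model_manager_spl(task: str, model: str) -> str:
    """Build the documented SPL search for the given task and model name."""
    if task not in VALID_TASKS:
        raise ValueError(f"task must be one of {VALID_TASKS}, got {task!r}")
    if not model or any(ch.isspace() for ch in model):
        # A blank or space-containing name would break the SPL argument.
        raise ValueError("model must be a non-empty name without whitespace")
    return (
        "| makeresults "
        "| fit MLTKContainer algo=llm_rag_ollama_model_manager "
        f"task={task} model={model} _time "
        "into app:llm_rag_ollama_model_manager as Pulled"
    )
```

For example, model_manager_spl("delete", "<model_name>") yields the same search as the pull command with task=delete substituted, which is the change the documentation describes.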


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0
