LLM-RAG use cases

The large language model retrieval-augmented generation (LLM-RAG) functionality, with its assistive guidance dashboards, handles the following use cases:

Standalone LLM

Use Standalone LLM when you want to query the LLM directly for Q&A inference or chat. For additional details, see Using Standalone LLM.

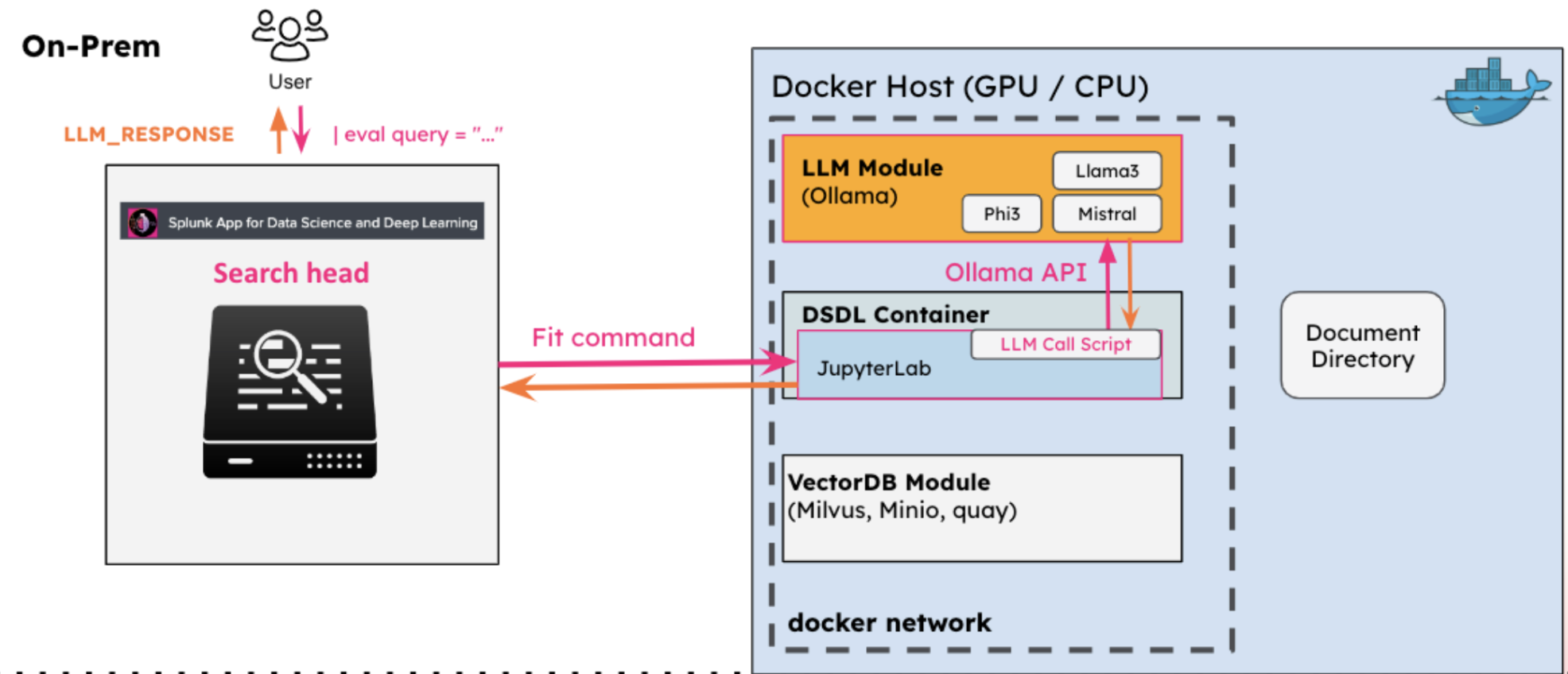

As shown in the preceding image, when using Standalone LLM, you initialize a search with a prompt and text data retrieved from the Splunk platform. The prompt is sent to the DSDL container, where the LLM module's API is called to generate responses. The responses are then returned to the Splunk platform search as search results.
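The Standalone LLM flow can be sketched in a few lines of Python. This is a minimal illustration only, not the DSDL implementation: `fake_llm`, `run_standalone_llm`, and the `text` and `response` field names are hypothetical, and the stand-in model returns a placeholder string instead of calling a real LLM API.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the LLM module's API (assumption, not a real call)."""
    return f"[model reply to {len(prompt)} characters of input]"


def run_standalone_llm(
    rows: List[Dict[str, str]],
    prompt: str,
    llm: Callable[[str], str] = fake_llm,
    text_field: str = "text",
) -> List[Dict[str, str]]:
    """Combine the prompt with each row's text, call the LLM, and return
    the rows with an added 'response' field, mimicking search results."""
    results = []
    for row in rows:
        # The prompt and the searched text data travel together to the model.
        full_prompt = f"{prompt}\n\n{row[text_field]}"
        results.append({**row, "response": llm(full_prompt)})
    return results


if __name__ == "__main__":
    search_rows = [{"text": "ERROR disk quota exceeded on host-01"}]
    for result in run_standalone_llm(search_rows, "Explain this log line:"):
        print(result["response"])
```

Passing the model as a callable keeps the sketch independent of any particular LLM service, so a real client could be swapped in without changing the loop.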

Standalone VectorDB

Use Standalone VectorDB when you want to encode machine data and conduct similarity search. For additional details, see Using Standalone VectorDB.

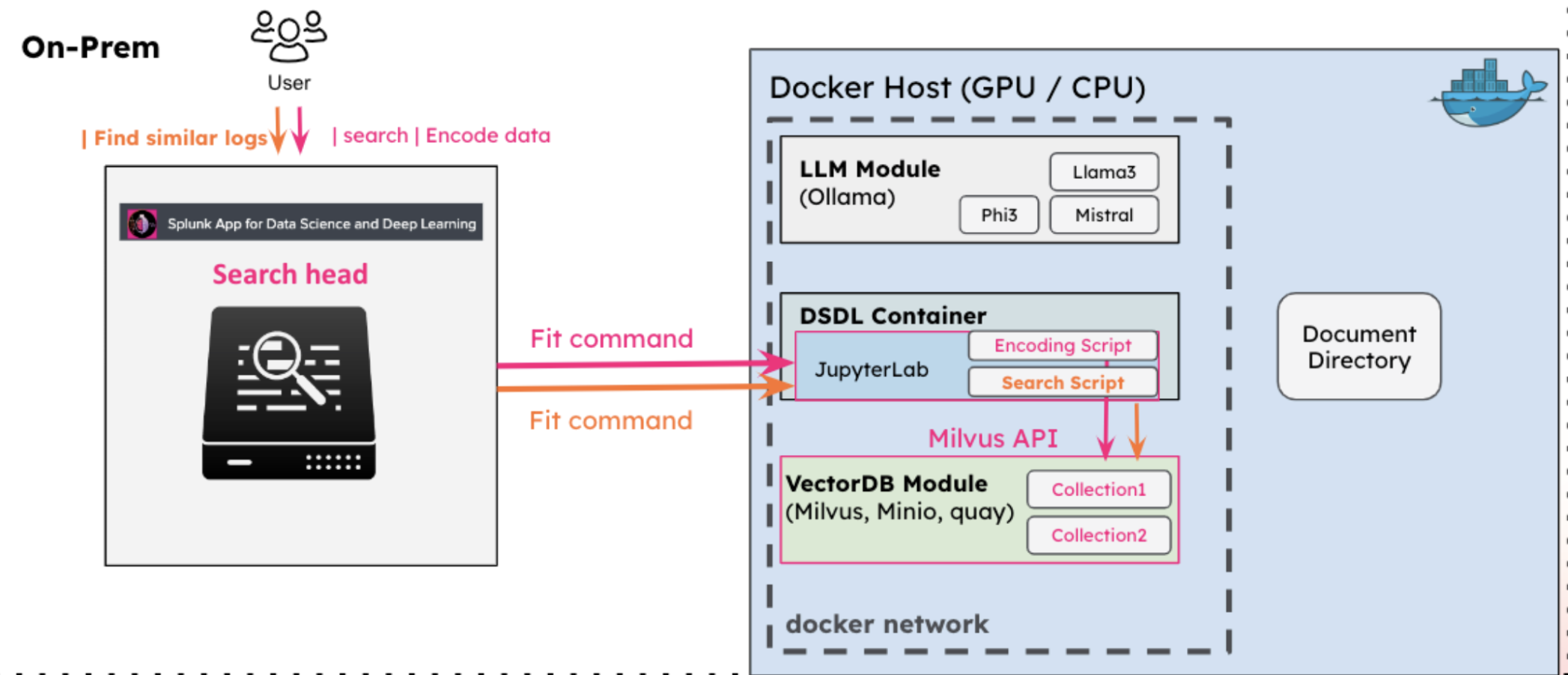

As shown in the preceding image, when using Standalone VectorDB, you first encode Splunk log data into a collection within the vector database. When unknown log data occurs, you can conduct a vector search against the pre-encoded data to find similar recorded log data.
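The encode-then-search pattern can be illustrated with a toy in-memory collection. This sketch makes several assumptions: `ToyCollection`, `embed`, and `cosine` are hypothetical names, and the bag-of-words vector is a deliberately simple substitute for the embedding model and vector database that a real deployment would use.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyCollection:
    """Hypothetical in-memory collection holding (text, vector) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Encoding step: store the log line together with its vector.
        self.items.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> List[Tuple[float, str]]:
        # Search step: rank stored lines by similarity to the query.
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    logs = ToyCollection()
    logs.add("connection timeout to database host")
    logs.add("user login failed invalid password")
    print(logs.search("login failed for user admin"))
```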

Document-based LLM-RAG

Use Document-based LLM-RAG when you want to encode arbitrary documents and use them as additional context when prompting the LLM. Document-based LLM-RAG provides results based on an internal knowledge database. For additional details, see Using Document-based LLM-RAG.

Document-based LLM-RAG has two steps:

- Document encoding

- Document pieces appended

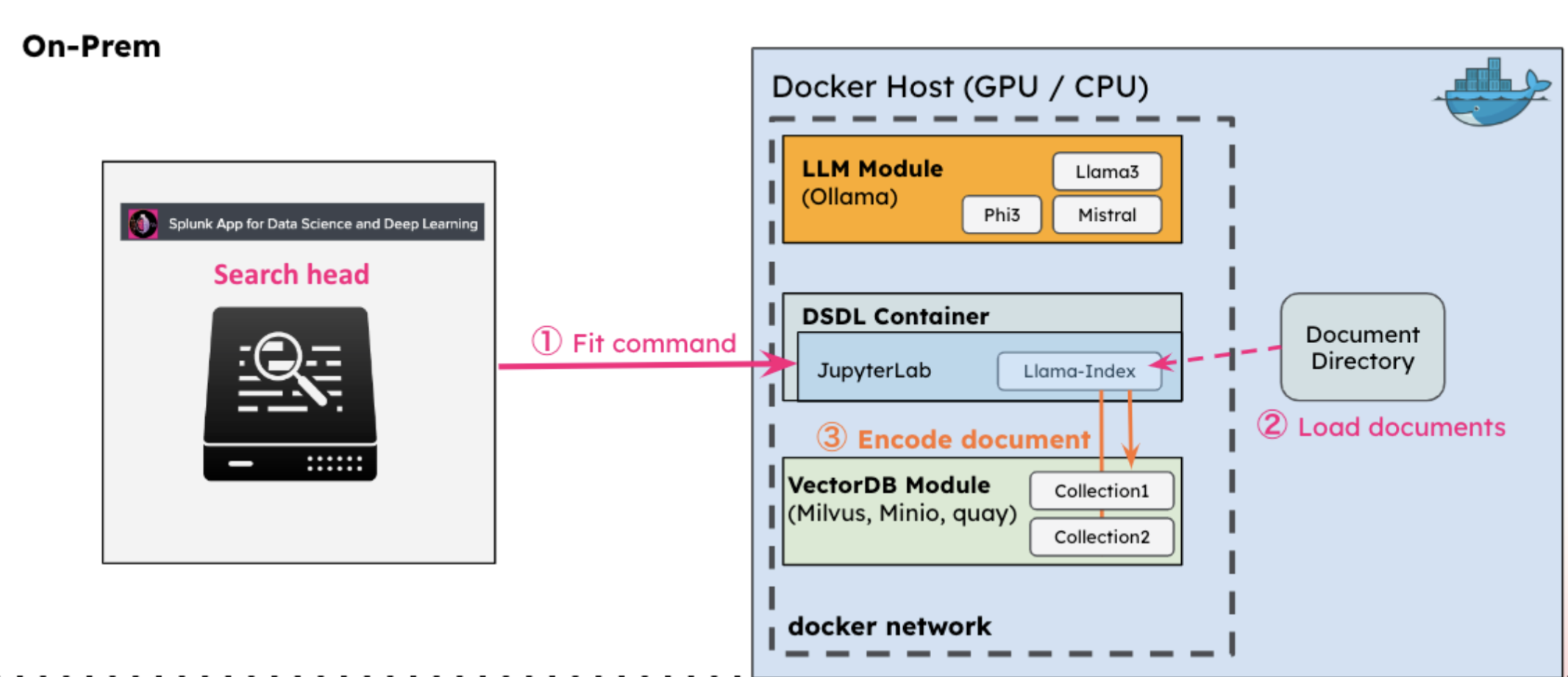

In the first step, you encode any documents stored in a directory of the Docker host into a vectorDB collection.

The preceding image shows the document encoding step.

When you initialize a search that requires the knowledge from the documents, the DSDL container conducts a vector search on the encoded document collection to find related pieces of those documents.

In the second step, the related pieces of documents are appended to the original search as additional context, and the search is sent to the LLM. The LLM responses are then returned to the Splunk platform search as search results.
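The two steps can be sketched as follows. This is an illustration under stated assumptions rather than the DSDL implementation: `chunk`, `overlap`, `retrieve`, and `build_prompt` are hypothetical helpers, word-overlap scoring replaces a real vector search, and the prompt layout is one possible way to append the retrieved pieces.

```python
from typing import List


def chunk(text: str, size: int = 8) -> List[str]:
    """Split a document into pieces of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def overlap(a: str, b: str) -> float:
    """Jaccard word overlap, used here in place of vector similarity."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def retrieve(query: str, pieces: List[str], top_k: int = 2) -> List[str]:
    """Return the pieces most related to the query."""
    return sorted(pieces, key=lambda p: overlap(query, p), reverse=True)[:top_k]


def build_prompt(query: str, contexts: List[str]) -> str:
    """Append the retrieved pieces to the original question."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"Context:\n{context_block}\n\nQuestion: {query}"


if __name__ == "__main__":
    document = (
        "Restart the forwarder service after changing outputs. "
        "License usage is shown on the monitoring page."
    )
    pieces = chunk(document)
    question = "Where is license usage shown"
    print(build_prompt(question, retrieve(question, pieces, top_k=1)))
```

The augmented prompt produced by `build_prompt` is what would be sent to the LLM in place of the bare question.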

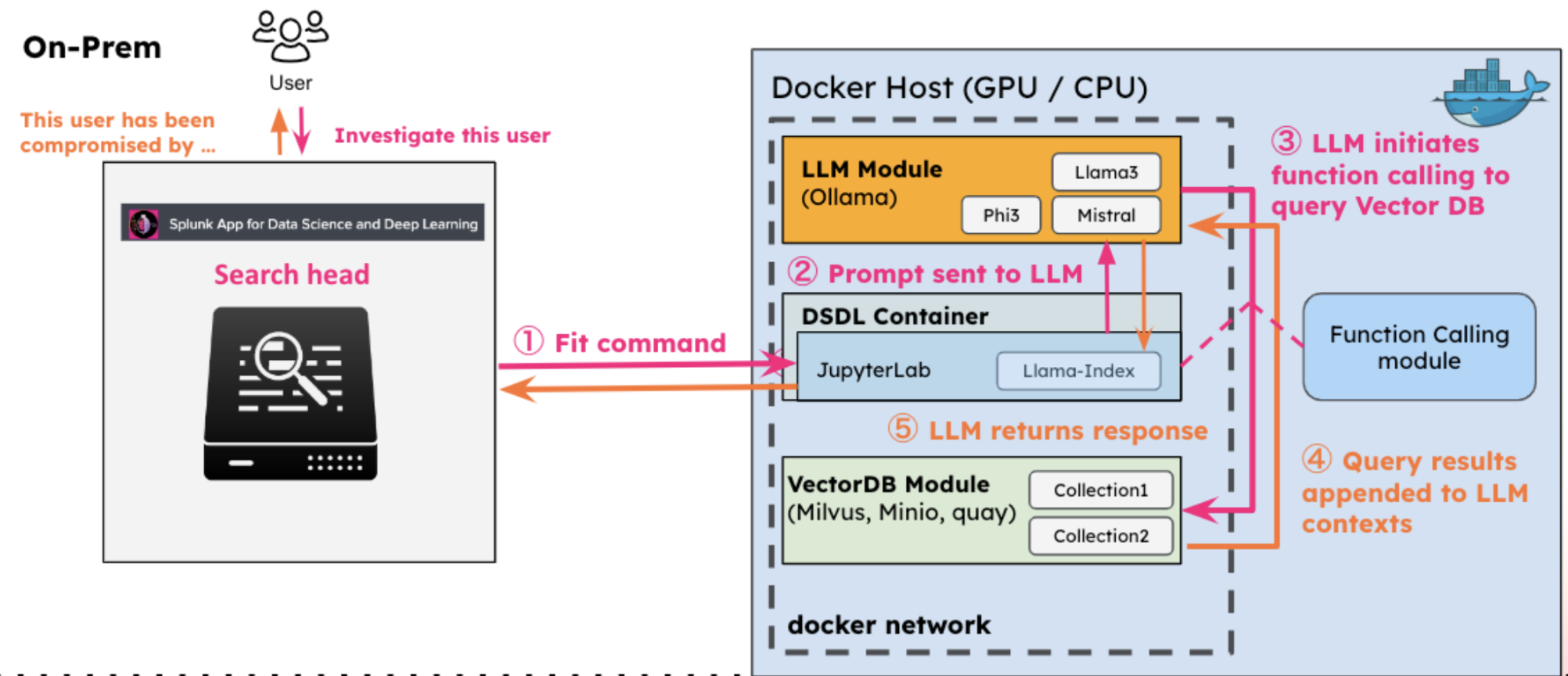

The preceding image shows the DSDL container vector search of the encoded documents.

Function Calling LLM-RAG

Use Function Calling LLM-RAG when you want the LLM to run customizable function tools to obtain contextual information for response generation. Function Calling LLM-RAG provides example tools for searching Splunk data and searching VectorDB collections. For additional details, see Using Function Calling LLM-RAG.

Similar to Document-based LLM-RAG, Function Calling LLM-RAG also obtains additional information before generating the final response. The difference is that with function calling, a set of function tools is made accessible to the LLM.

When additional context is needed, such as how many error logs are in a Splunk platform instance, the LLM automatically runs the functions to obtain the information and uses it to generate responses.
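A function-calling loop of this kind might look like the following sketch. Everything here is an assumption made for illustration: `count_error_logs` is a hypothetical tool that returns a made-up placeholder count instead of running a Splunk search, `fake_llm` is a scripted stand-in for a model that supports tool calls, and the message format is simplified.

```python
import json
from typing import Any, Callable, Dict, List


def count_error_logs(index: str) -> int:
    """Hypothetical tool. A real tool would run a Splunk search; this one
    returns a made-up placeholder count for illustration only."""
    placeholder_counts = {"main": 42}
    return placeholder_counts.get(index, 0)


TOOLS: Dict[str, Callable[..., Any]] = {"count_error_logs": count_error_logs}


def fake_llm(messages: List[Dict[str, str]]) -> Dict[str, Any]:
    """Scripted stand-in model: requests a tool on the first turn, then
    answers once a tool result is present in the conversation."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "count_error_logs",
                              "arguments": json.dumps({"index": "main"})}}
    return {"content": f"There are {tool_results[-1]['content']} error logs."}


def answer(question: str,
           llm: Callable[[List[Dict[str, str]]], Dict[str, Any]] = fake_llm,
           tools: Dict[str, Callable[..., Any]] = TOOLS,
           max_turns: int = 3) -> str:
    """Run the model, executing any requested tool and feeding the result
    back, until the model returns a final answer."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = llm(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]
        args = json.loads(call["arguments"])
        result = tools[call["name"]](**args)
        messages.append({"role": "tool", "name": call["name"],
                         "content": str(result)})
    raise RuntimeError("model did not produce a final answer")


if __name__ == "__main__":
    print(answer("How many error logs are in the main index?"))
```

The `max_turns` limit is a design choice in this sketch that prevents an endless loop if the model keeps requesting tools.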

The following image shows Function Calling LLM-RAG architecture:


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0
