All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Processing data in motion using the Splunk Data Stream Processor

The Splunk Data Stream Processor (DSP) is a data stream processing solution that collects data in real time, processes it, and provides at-least-once delivery of that data to one or more destinations of your choice.
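The at-least-once guarantee mentioned above can be illustrated with a generic sketch. This is not how the Splunk Data Stream Processor implements delivery; `FlakyDestination` and `deliver_at_least_once` are hypothetical names used only to show the idea that a sender keeps resending a record until it is acknowledged, which means a destination might occasionally see the same record more than once.

```python
class FlakyDestination:
    """Hypothetical destination that stores every record it is sent
    but loses the acknowledgement for the first one."""

    def __init__(self):
        self.received = []
        self._lose_next_ack = True

    def send(self, record):
        self.received.append(record)  # the record is stored either way
        if self._lose_next_ack:
            self._lose_next_ack = False
            return False  # simulate a lost acknowledgement
        return True


def deliver_at_least_once(record, destination, max_attempts=5):
    """Resend the record until the destination acknowledges it.

    Returns the number of attempts that were needed.
    """
    for attempt in range(1, max_attempts + 1):
        if destination.send(record):
            return attempt
    raise RuntimeError("record was never acknowledged")
```

In this sketch the record is never lost, but the destination ends up with a duplicate after the retry. Destinations that need exactly one copy typically deduplicate on a record identifier.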

As a user, you can enrich, transform, and analyze your data during the processing stage, gaining increased control and visibility into your data before it reaches its destination. If your data contains noisy or sensitive information, you can use the Splunk Data Stream Processor to remove or sanitize that data before it is indexed, which reduces security risks and lets you focus on only the data that you care about. You can enrich your data with data found in CSV files or even with machine learning. When your data looks the way that you want it to look, you can use the Splunk Data Stream Processor to route that data to the destination where it has the most value.

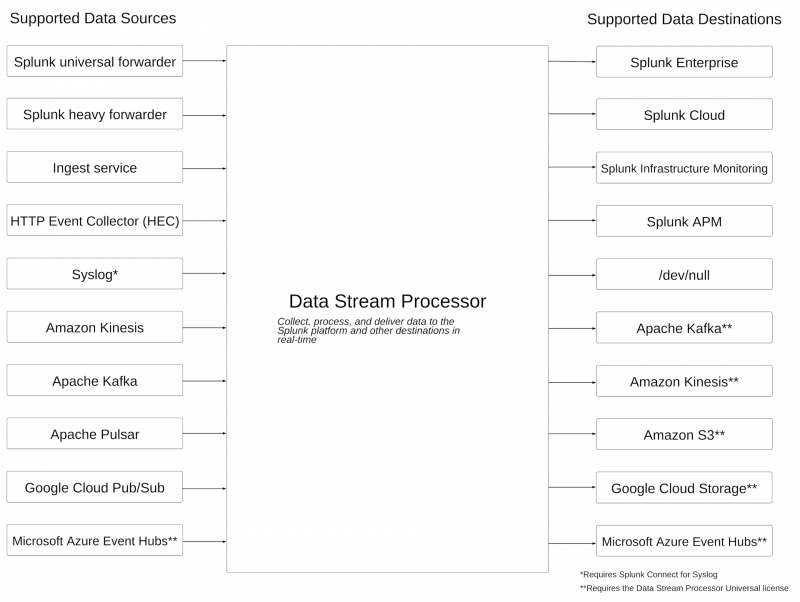
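The filter, sanitize, enrich, and route stages described above can be sketched in plain Python. This is a conceptual illustration only, not DSP pipeline syntax: the field names (`host`, `level`, `body`), the lookup contents, and the destination names `index` and `archive` are all assumptions made up for the example.

```python
import csv
import io
import re

# Hypothetical lookup data; in practice this would be read from a CSV file.
LOOKUP_CSV = "host,owner\nweb-01,team-a\ndb-01,team-b\n"

# Matches card-like numbers such as 1234-5678-9012-3456 and captures the last group.
CARD_PATTERN = re.compile(r"\b\d{4}-\d{4}-\d{4}-(\d{4})\b")


def load_lookup(text):
    """Build a host -> owner mapping from CSV text."""
    return {row["host"]: row["owner"] for row in csv.DictReader(io.StringIO(text))}


def process(records, lookup):
    """Drop noisy records, mask sensitive values, and enrich with lookup data."""
    for record in records:
        if record["level"] == "DEBUG":
            continue  # filter: remove noisy data
        masked = CARD_PATTERN.sub(r"XXXX-XXXX-XXXX-\1", record["body"])  # sanitize
        owner = lookup.get(record["host"], "unknown")  # enrich
        yield {**record, "body": masked, "owner": owner}


def route(record):
    """Pick a destination name for a processed record."""
    return "archive" if record["level"] == "INFO" else "index"
```

Each stage is a small, independent step, so the order and the number of stages can change without affecting the others. That mirrors the idea of shaping the data first and choosing its destination last.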

You can also use the Splunk Data Stream Processor to design custom data pipelines that transform data and route it between a wide variety of data sources and data destinations, even if they support different data formats. For example, you can route data from a Splunk forwarder to an Amazon S3 bucket. The following diagram summarizes the data sources that you can collect data from and the data destinations that you can send your data to when using the Splunk Data Stream Processor:

Get started with the Splunk Data Stream Processor

Before you can get started with the Splunk Data Stream Processor, you might need to perform a few setup steps. See the Install and administer the Splunk Data Stream Processor manual for instructions.

To get familiar with basic features and workflows, check out the Tutorial.

To start routing and processing your data, you need to create connections to your data sources and destinations of choice, and then build data pipelines that define how to move the data and how to transform it along the way. The following table lists the documentation that you can refer to for more information about common workflows and use cases:

| For information on how to do this | Refer to this documentation |
|---|---|
| Connect to specific data sources and destinations | Connect to Data Sources and Destinations with the Splunk Data Stream Processor |
| Summarize data based on specific conditions | Summarize records with the stats function |
| Format and organize data | |
| Filter and remove unwanted data | |
| Mask or obfuscate sensitive data | Masking sensitive data in the Splunk Data Stream Processor |
| Enrich your streaming data with data from a CSV file or a Splunk Enterprise KV Store | About lookups |
| Send data to multiple destinations | |
| terminology | |

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
