All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Create a pipeline with multiple data sources

When creating a data pipeline, you can connect multiple data sources to it. For example, you can create a single pipeline that gets data from a Splunk forwarder, an Apache Kafka broker, and Microsoft Azure Event Hubs concurrently. You can apply transformations to the data from all three data sources as it passes through the pipeline, and then send the transformed data to a destination of your choosing.

If you want to create a pipeline with multiple data sources, in most cases, you can use the Splunk DSP Firehose source function. See the Data sources supported by Splunk DSP Firehose topic in the Connect to Data Sources and Destinations with manual.

However, if you want to use multiple data sources that the Splunk DSP Firehose function doesn't support, or if you want to apply specific transformations to each data stream before combining them, do the following tasks:

- From the Pipelines page, select a data source.

- (Optional) From the Canvas View of your pipeline, click the + icon and add any desired transformation functions to the pipeline.

- Once you have added all the desired transformation functions to your pipeline, click the + icon and add a union function to your pipeline.

- Click the + icon immediately to the left of the Union function, and then add a second source function to your pipeline. You can optionally union more data sources, if desired.

- (Optional) All of your data streams must have the same schema before you can union them. If your data streams don't have the same schema, use the select streaming function to make the schemas match.

- After unioning your data streams, continue building your pipeline by clicking the + icon immediately to the right of the Union function.
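DSP builds these pipelines in the Canvas View and SPL2, not in Python. As a rough conceptual model only, the following Python sketch treats each record as a dict and each data stream as a list of records, and shows why schemas must be aligned before a union. The names `select_fields` and `union_streams` are hypothetical and do not correspond to any DSP API. The schema check here compares field names only; DSP's own check may also consider field types.

```python
from itertools import chain
from typing import Any, Dict, List

Record = Dict[str, Any]


def select_fields(stream: List[Record], fields: List[str]) -> List[Record]:
    """Hypothetical stand-in for a select step: keep only the named fields."""
    return [{name: record[name] for name in fields} for record in stream]


def union_streams(*streams: List[Record]) -> List[Record]:
    """Hypothetical stand-in for Union: merge streams that share one schema.

    A schema is modeled as the set of field names in a record. DSP
    interleaves records as they arrive; this model simply concatenates.
    """
    schemas = {frozenset(record) for stream in streams for record in stream}
    if len(schemas) > 1:
        raise ValueError(f"streams have different schemas: {sorted(map(sorted, schemas))}")
    return list(chain.from_iterable(streams))


# Sample data (hypothetical): two sources whose records carry different extra fields.
forwarder = [{"body": "a", "source_type": "syslog", "host": "h1"}]
azure = [{"body": "b", "source_type": "azure", "partition": 0}]

# Projecting both streams onto the same fields makes the union succeed.
merged = union_streams(
    select_fields(forwarder, ["body", "source_type"]),
    select_fields(azure, ["body", "source_type"]),
)
```

Calling `union_streams(forwarder, azure)` directly would raise `ValueError`, because the `host` and `partition` fields give the two streams different schemas.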

Create a pipeline with two data sources: Kafka and Splunk DSP Firehose

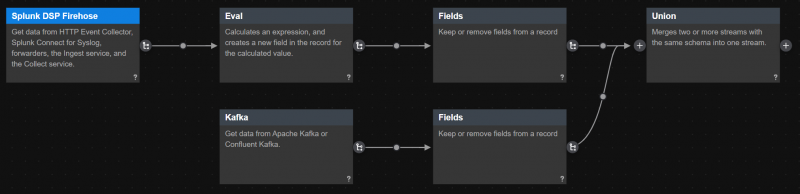

In this example, create a pipeline with two data sources, Kafka and Splunk DSP Firehose, and union the two data streams by normalizing them to fit the expected Kafka schema.

The following screenshot shows two data streams from two different data sources being unioned together into one data stream in a pipeline.

Prerequisites

Steps

- From the Pipelines page, select the Splunk DSP Firehose data source.

- From the Canvas View of your pipeline, add a union function to your pipeline.

- Click the + icon immediately to the left of the Union function, and then select the Kafka source function.

- With the Kafka source function selected, on the View Configurations tab, provide your connection ID and topic name.

- Normalize the schemas so that they match. Hover over the circle between the Splunk DSP Firehose and Union functions, click the + icon, and add an Eval function.

- In the Eval function, type the following SPL2 expression, which converts the event schema to the default Kafka schema:

  value=to_bytes(cast(body, "string")), topic=source_type, key=to_bytes(time())

- Hover over the circle between the Eval and Union functions, click the + icon, and add a Fields function.

- To make the schema of the records from Splunk DSP Firehose match the Kafka record schema, drop all fields from these records except for the value, topic, and key fields. In the fields_list parameter of the Fields function, do the following:
  - Type value.
  - Click + Add, and then type topic.
  - Click + Add, and then type key.

- Now, let's normalize the other data stream. Hover over the circle between the Kafka and Union functions, click the + icon, and add another Fields function.

- In the fields_list parameter of this Fields function, do the following:
  - Type value.
  - Click + Add, and then type topic.
  - Click + Add, and then type key.

- Validate your pipeline.

You now have a pipeline that reads from two data sources, Kafka and Splunk DSP Firehose, and merges the data from both sources into one data stream.
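The normalization in this example can be modeled conceptually in Python. This is not DSP code: it is a sketch assuming each record is a dict, that `cast(body, "string")` behaves like Python's `str()`, that `to_bytes()` encodes strings as UTF-8, and that the key is the 8-byte big-endian encoding of the current epoch time in milliseconds. These byte encodings are assumptions and may differ from DSP's actual behavior. The function names and the `now_ms` parameter are hypothetical; `now_ms` stands in for `time()` so the output is deterministic.

```python
import struct
from typing import Any, Dict, List

Record = Dict[str, Any]
KAFKA_FIELDS = ["value", "topic", "key"]


def eval_to_kafka_schema(record: Record, now_ms: int) -> Record:
    """Models the Eval step: value=to_bytes(cast(body, "string")),
    topic=source_type, key=to_bytes(time()). Encodings are assumptions."""
    out = dict(record)  # Eval adds or overwrites fields; it does not drop any
    out["value"] = str(record["body"]).encode("utf-8")
    out["topic"] = record["source_type"]
    out["key"] = struct.pack(">q", now_ms)  # assumed 8-byte big-endian long
    return out


def keep_fields(record: Record, fields: List[str]) -> Record:
    """Models the Fields step: drop every field not listed in fields_list."""
    return {name: record[name] for name in fields}


def run_pipeline(firehose: List[Record], kafka: List[Record], now_ms: int) -> List[Record]:
    """Normalizes both streams to {value, topic, key}, then unions them."""
    left = [keep_fields(eval_to_kafka_schema(r, now_ms), KAFKA_FIELDS) for r in firehose]
    right = [keep_fields(r, KAFKA_FIELDS) for r in kafka]
    return left + right  # DSP interleaves records as they arrive; this concatenates


# Sample records (hypothetical values and extra fields).
firehose = [{"body": "login ok", "source_type": "auth", "host": "web01"}]
kafka = [{"value": b"disk full", "topic": "alerts", "key": b"k1", "partition": 0, "offset": 42}]
merged = run_pipeline(firehose, kafka, now_ms=1_700_000_000_000)
```

After both branches pass through their Fields step, every record carries exactly the value, topic, and key fields, which is what allows the Union function to accept them.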


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.3.0, 1.3.1, 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
