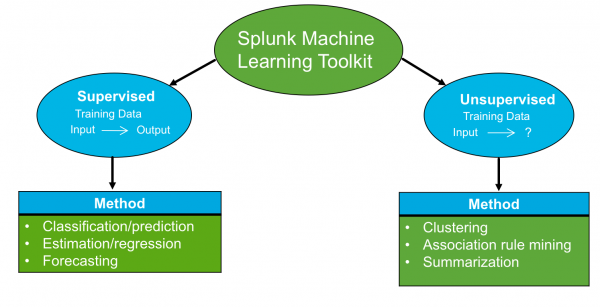

Splunk Machine Learning Toolkit workflow

Machine learning is a process of generalizing from examples. You can use these generalizations, typically called models, to perform a variety of tasks. Example tasks include predicting the value of a field, forecasting future values, and detecting anomalies.

In a supervised task, you create a model from training data that maps known inputs to known outputs. In an unsupervised task, you create a model from training data that detects patterns within known inputs. The workflow diagram shows the steps of the Splunk Machine Learning Toolkit workflow and lists the appropriate methods for supervised and unsupervised tasks.

Clean and transform the data

Many analytics have explicit requirements, such as timestamps or fields with numeric values. Other analytics perform better when the data meets certain criteria, such as the values of a field not being arbitrarily large. Before applying machine learning, you should clean or transform your data to meet these explicit requirements. Later, after fitting a machine learning model and validating it, you might need to apply additional transformations to your data to improve the quality of the model.

Some of the assistants in the Splunk Machine Learning Toolkit provide the ability to apply multiple sequential transformations to your data. See Preprocessing for information.
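As a minimal sketch of this kind of preparation, the following search keeps only events with a numeric temperature value and then standardizes a field with the StandardScaler preprocessing algorithm. The humidity field is hypothetical and stands in for any numeric field with a wide range of values. By default, the scaled values are expected in a new field prefixed with SS_.

```spl
... | where isnum(temperature)
    | fit StandardScaler humidity
    | ...
```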

Fit the model

To create a model in the Splunk Machine Learning Toolkit, select an algorithm and fit a model on the training data. For more information, see Models.

In the following example, the model uses the linear regression algorithm to predict a numeric field.

... | fit LinearRegression temperature from date_month date_hour into temperature_model | ...

Use the apply command to apply the trained model to new data.

... | apply temperature_model ...
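By default, the apply command writes its output to a field named after the predicted field, in this case predicted(temperature). As a sketch, the as clause renames that output so it is easier to reference in later commands. The name predicted_temperature is only an illustration.

```spl
... | apply temperature_model as predicted_temperature
    | table _time temperature predicted_temperature
```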

For a list of all supported algorithms, see Algorithms.

The Splunk Machine Learning Toolkit also features the following assistants to perform analysis. See Assistants.

- Predict Numeric Fields

- Predict Categorical Fields

- Detect Numeric Outliers

- Detect Categorical Outliers

- Forecast Time Series

- Cluster Numeric Results

Validate the model

Validation involves training a model with a portion of your data (the training set) and then testing it with a different portion (the test set). For prediction tasks, validation often involves randomly partitioning events into one set or the other. For forecasting tasks, the training set is a prefix of the data, and the test set is a suffix of the data that is withheld to compare against the forecasts.
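For a prediction task, a random split can be sketched with the sample command that ships with the toolkit. The example assumes that sample adds a partition_number field with values from 0 to 9 when partitions=10, and that both searches return the same events in the same order so that the same seed yields the same partitions. The 70/30 split and the seed value are arbitrary choices. The first search trains on roughly 70% of the events:

```spl
... | sample partitions=10 seed=42
    | where partition_number < 7
    | fit LinearRegression temperature from date_month date_hour into temperature_model
```

The second search applies the model to the remaining events, which were withheld from training:

```spl
... | sample partitions=10 seed=42
    | where partition_number >= 7
    | apply temperature_model
```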

You can validate a trained model with the test set in several ways, depending on the type of model. Each assistant provides methods in the Validate section, which is displayed after you train a model.

For example, when predicting a numeric field, you want to know by how much the predictions vary from the actual values. The Predict Numeric Fields assistant provides validation methods, including statistical methods such as root mean squared error, and visual methods such as a scatterplot. For other analytics or other applications of those analytics, alternate methods might be more appropriate.
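Outside the assistant, root mean squared error can also be computed directly in a search. The following is a sketch that assumes the test events contain the actual temperature value and that apply writes its output to the default predicted(temperature) field. The field names squared_error, mse, and rmse are illustrative.

```spl
... | apply temperature_model
    | eval squared_error = pow(temperature - 'predicted(temperature)', 2)
    | stats avg(squared_error) as mse
    | eval rmse = sqrt(mse)
```

The single quotes are needed because the field name contains parentheses. A lower rmse means that the predictions are closer to the actual values on average.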

Refine the model

If the validation step reveals weaknesses in your model, or if the number of errors is too high, you can adjust the parameters to improve the relevant metrics. To review a history of the parameter combinations you have tried along with their corresponding validation metrics (and more), choose the Experiments History tab in any experiment.

Deploy the model

A model is ready to be deployed after passing the validation step. You can operationalize a model in different ways, depending on its type and the intended application.

Generally, operationalization actions fall into the following categories.

| Deployment action | Application |
|---|---|
| Make predictions or forecasts | The prediction or forecast made by a model might be useful directly or as the input to another analytic. |
| Detect outliers and anomalies | A model encodes expectations. When the model's predictions or forecasts do not match reality, especially when they otherwise usually do, the result is an anomaly. Detecting outliers and other anomalies in data is an extremely common use case for machine learning. |
| Trigger or inform an action | If the model detects an anomaly, it can trigger an alert. In response to this alert, you can find a solution. Similarly, a prediction or forecast can trigger an alert that informs a strategic business decision. |
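
As one possible sketch of the outlier detection action, the following search flags events where the actual value is far from the model's prediction. The threshold of three standard deviations is an assumption chosen for illustration, not a recommended setting, and the field names residual and residual_stdev are hypothetical. Saving a search like this as an alert is one way to trigger an action when anomalies appear.

```spl
... | apply temperature_model
    | eval residual = temperature - 'predicted(temperature)'
    | eventstats stdev(residual) as residual_stdev
    | where abs(residual) > 3 * residual_stdev
```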

This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 3.2.0, 3.3.0