Splunk App for Data Science and Deep Learning advanced architecture and workflow

The Splunk App for Data Science and Deep Learning (DSDL) processes data through containerized environments, manages logs and metrics, and scales to support enterprise-level model training and inference.

The following are some key concepts for working with DSDL:

| DSDL concept | Description |
|---|---|
| ML-SPL search commands | The fit, apply, and summary commands that trigger container-based operations in DSDL. |
| DSDL container | A Docker, Kubernetes, or OpenShift pod running advanced libraries such as TensorFlow or PyTorch. |
| Data exchange | The process where data flows from the Splunk search head to the container through HTTPS, with results returning the same way. |
| Notebook workflows | JupyterLab notebooks that define custom code for fit and apply functions, exported into Python modules at runtime. |
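As a rough illustration of the notebook workflow concept, the following sketch shows what a minimal exported module might look like. The function names mirror the fit, apply, and summary commands, but the signatures, the list-of-dictionaries data format, and the trivial mean-based "model" are assumptions made for this example. They are not the signatures used by the DSDL notebook templates.

```python
from statistics import mean


def fit(rows, params):
    """Train a trivial 'model': the mean of one numeric feature.

    `rows` is a list of dicts and `params` a dict; both shapes are
    assumptions for this sketch, not the DSDL notebook interface.
    """
    feature = params["feature"]
    values = [float(row[feature]) for row in rows]
    return {"feature": feature, "mean": mean(values)}


def apply(model, rows):
    """Score each row as its deviation from the trained mean."""
    feature = model["feature"]
    return [float(row[feature]) - model["mean"] for row in rows]


def summary(model):
    """Return a small description of the trained model."""
    return {"feature": model["feature"], "mean": model["mean"]}


# Hypothetical training and scoring data.
training_rows = [{"feature_0": "1.0"}, {"feature_0": "3.0"}]
model = fit(training_rows, {"feature": "feature_0"})
deviations = apply(model, [{"feature_0": "5.0"}])
```

Keeping training and scoring in separate functions means a stored model can be re-applied to new data without retraining.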

Overview

When you use DSDL, the Splunk platform connects to an external container environment, such as Docker, Kubernetes, or OpenShift, to offload compute-intensive machine learning and deep learning workloads. This design allows you to achieve the following outcomes:

- Use GPUs for faster training and inference.

- Scale horizontally by running multiple containers concurrently.

- Isolate resource-heavy tasks from the main Splunk platform processes.

- Monitor container logs, performance metrics, and model lifecycle within the Splunk platform or other observability platforms.

Architecture

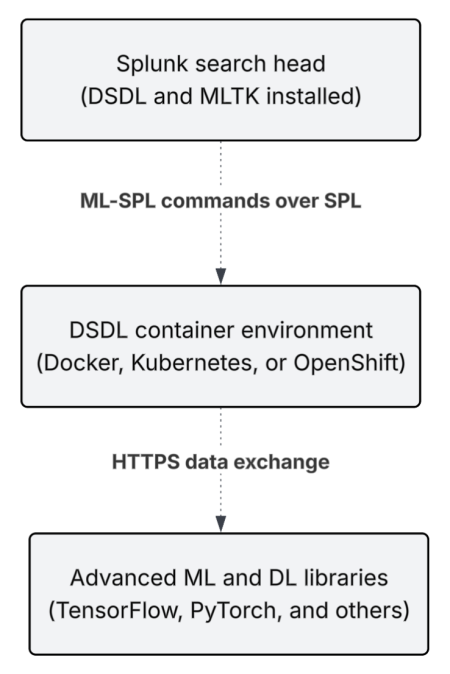

The following diagram illustrates a typical DSDL setup in a production environment.

While the specifics differ slightly across Docker, Kubernetes, or OpenShift platforms, the core components remain the same.

| Architecture component | Description |
|---|---|
| Splunk search head | Hosts the Splunk App for Data Science and Deep Learning, the Machine Learning Toolkit (MLTK), and the Python for Scientific Computing (PSC) add-on. Allows users to run the SPL searches that trigger container-based training or inference. |
| Container environment | Can be a single Docker host, or a cluster orchestrated by Kubernetes or OpenShift. DSDL automatically starts and stops containers or pods based on user requests or scheduled searches. |
| Machine learning libraries | Python, TensorFlow, PyTorch, and other libraries packaged in Docker images or pods. Can optionally use GPUs for acceleration if the environment supports it. |
| Routes, services, and ports | Allow communication to flow over HTTPS from the Splunk search head to the container's API endpoint, then back to the Splunk platform with results. |

Data lifecycle

DSDL data undergoes the following lifecycle:

| Lifecycle stage | Description |
|---|---|
| Run an SPL search | A user runs a search in the Splunk platform with an ML-SPL command. Example: index=my_data \| fit MLTKContainer algo=my_notebook epochs=50 ... into app:my_custom_model |
| Prepare data | The Splunk platform retrieves the relevant data from indexes. For example, the _time field and feature_* columns. |
| Call container API | The DSDL app packages the data and metadata, such as features and parameters, into CSV and JSON, then sends them to the container over HTTPS. In Kubernetes or OpenShift, this is typically a route or service endpoint. In Docker, it might be a TCP port such as port 5000. |
| Run model training or inference | The container reads the data, loads the relevant Python code as defined in a Jupyter notebook or .py module, and runs training or inference using TensorFlow, PyTorch, or other libraries. |
| Send results and logs | When complete, the container sends metrics and model output back to the Splunk platform. You can also stream logs through the HTTP Event Collector (HEC), or ingest container logs for debugging. |
| Store model | If you used into app:<model_name>, the model artifacts such as weights and config are persisted so you can re-apply them later or track training metrics in the Splunk platform. |
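The packaging step in the lifecycle above, where data becomes CSV and metadata becomes JSON, can be sketched as follows. This is only an illustration of the idea. The function name package_payload, the payload keys data and meta, and the sample rows are hypothetical, and the actual wire format used by DSDL is not shown in this document.

```python
import csv
import io
import json


def package_payload(rows, feature_fields, params):
    """Serialize rows to CSV and metadata to JSON (hypothetical format)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=feature_fields,
        extrasaction="ignore",   # drop fields that are not selected features
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    metadata = {"features": feature_fields, "params": params}
    return {"data": buffer.getvalue(), "meta": json.dumps(metadata, sort_keys=True)}


# Hypothetical search results: only _time and feature_0 are sent on.
rows = [
    {"_time": "1700000000", "feature_0": "0.5", "host": "web01"},
    {"_time": "1700000060", "feature_0": "0.7", "host": "web02"},
]
payload = package_payload(
    rows,
    ["_time", "feature_0"],
    {"algo": "my_notebook", "epochs": "50"},
)
```

In this sketch the search parameters, such as algo and epochs, travel in the JSON metadata alongside the feature list, so the container can reconstruct both the data and the training options.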

Container lifecycle

Review the following descriptions of a container lifecycle:

| Lifecycle stage | Description |
|---|---|
| Launch container | In developer (DEV) mode run |
| Stop and clean up container | In many orchestration platforms such as Kubernetes, DSDL container management automatically stops or scales down pods after idle time, or when tasks complete. In Docker, containers might remain running for certain user sessions. You can stop them manually in DSDL or in the Splunk platform. |
| Log containers and collect metrics | If you use the HTTP Event Collector (HEC), the container can push logs or custom metrics back to the Splunk platform. If you use Splunk Observability Cloud or other monitoring solutions, you can track CPU, GPU, and memory usage for each container or pod. |

Multi-container scaling and GPU usage options

DSDL includes the following options for container scaling and GPU:

| Option | Description |
|---|---|
| Horizontal scaling | Kubernetes: Kubernetes supports scaling from the container management interface. You can define multiple replicas of the DSDL container if your workload is concurrency-based with many inference jobs. DSDL might spawn multiple pods in parallel for large scheduled searches. Docker: Docker is typically limited to a single container per host unless you orchestrate multiple Docker hosts. |
| Acceleration | Docker: If your Docker host has GPUs and uses the NVIDIA runtime, DSDL can run GPU-enabled images. Kubernetes: Kubernetes supports node labeling for GPU nodes, which allows GPU-based pods to schedule automatically. |
| Tuning resource requests | CPU and memory limits: In Kubernetes and OpenShift, specify CPU and memory requests and limits in your container image or deployment. GPU requests: For GPU usage, define nvidia.com/gpu in the container resource spec file, letting the scheduler handle GPU allocation. |
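To make the resource tuning option more concrete, the following sketch builds the resources section of a Kubernetes container spec as a Python dictionary and renders it as JSON. The nvidia.com/gpu key under limits follows the standard Kubernetes convention for requesting GPUs. The helper name, container name, image name, and the CPU and memory values are hypothetical, and how DSDL itself consumes such settings is not covered here.

```python
import json


def build_container_resources(cpu_request, memory_request,
                              cpu_limit, memory_limit, gpu_count=0):
    """Build a Kubernetes-style resources block (illustrative helper)."""
    resources = {
        "requests": {"cpu": cpu_request, "memory": memory_request},
        "limits": {"cpu": cpu_limit, "memory": memory_limit},
    }
    if gpu_count > 0:
        # GPUs are requested under limits; the scheduler then places the
        # pod on a node that exposes that many GPUs.
        resources["limits"]["nvidia.com/gpu"] = gpu_count
    return resources


container = {
    "name": "dsdl-container",                     # hypothetical name
    "image": "example.registry/dsdl-gpu:latest",  # hypothetical image
    "resources": build_container_resources("2", "8Gi", "4", "16Gi", gpu_count=1),
}
manifest_fragment = json.dumps(container, indent=2)
```

Leaving gpu_count at 0 omits the GPU key entirely, which keeps CPU-only inference pods schedulable on nodes without GPUs.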

Monitoring and logging options

DSDL integrates with Splunk platform logging and observability to facilitate troubleshooting, performance analysis, and real-time metrics capture.

| Option | Description |
|---|---|
| Container logs in the Splunk platform | You can aggregate the standard container stdout and stderr logs with Splunk Connect for Kubernetes, a Docker logging driver, or a manually configured HEC endpoint. Check container logs to diagnose training or inference failures such as Python exceptions and out-of-memory errors. |
| Metrics and observability | Using Splunk Observability Cloud, integrate your container environment for real-time dashboards of metrics such as CPU, memory, disk I/O, and network. Track your metrics with built-in tools. For Docker, use docker stats. For Kubernetes, use kubectl top pods/nodes. For OpenShift, the web console provides metrics. |
| Training and inference telemetry | Use notebooks to push custom metrics, like training loss or accuracy per epoch, back into the Splunk platform. You can create dashboards or alerts in the Splunk platform to track these metrics and detect model drift or performance issues. |
| Checking pre-container startup logs in the Splunk platform | If you suspect network timeouts or container launch failures before the container becomes fully available, search for error messages in Splunk internal logs: By reviewing |
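As one possible way to implement the training telemetry option, the sketch below builds an HTTP Event Collector request that carries per-epoch metrics. The /services/collector/event path and the "Splunk <token>" authorization header are standard HEC conventions. The host name, the placeholder token, the dsdl:training sourcetype, and the helper name are assumptions for this example. The request is only constructed, not sent.

```python
import json
import urllib.request


def build_hec_request(hec_url, token, epoch, loss, accuracy):
    """Build (but do not send) an HEC request with per-epoch metrics."""
    event = {
        "sourcetype": "dsdl:training",  # hypothetical sourcetype
        "event": {"epoch": epoch, "loss": loss, "accuracy": accuracy},
    }
    return urllib.request.Request(
        url=hec_url,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_hec_request(
    "https://splunk.example.com:8088/services/collector/event",  # hypothetical host
    "00000000-0000-0000-0000-000000000000",                      # placeholder token
    epoch=1,
    loss=0.42,
    accuracy=0.91,
)
# Calling urllib.request.urlopen(request) from the training loop would send it.
```

Sending one event per epoch keeps each data point independently searchable, which suits dashboards and alerts that track loss over time.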

Architecture best practices

See the following best practices for your DSDL architecture:

| Best practice | Details |
|---|---|
| Separate your development and production environments | Maintain a development container environment for notebook exploration, and a separate, minimal environment for production inference. |
| Use GPU nodes for deep learning tasks | Ensure GPU scheduling is configured so you can significantly reduce training time. |
| Log everything | Route container logs, for example stdout, stderr, and training logs, to the Splunk platform for quick debugging and performance monitoring. Monitor the _internal logs for mltk-container to spot pre-startup or network issues. |
| Manage the container lifecycle | Clean up or scale down containers after tasks finish to avoid unnecessary resource usage. |
| Secure data flows | Use TLS or HTTPS for traffic between the Splunk platform and containers. Set up firewall rules to restrict traffic to authorized IPs. |
| Version your notebooks | Maintain a Git or CI/CD pipeline for your Jupyter notebooks to keep track of changes and ensure reproducibility. |

See also

Learn how to create custom notebook code for specialized algorithms and integrated ML-SPL commands. See Extend the Splunk App for Data Science and Deep Learning with custom notebooks.


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.1
