Use Document-based LLM-RAG

Use Document-based large language model retrieval-augmented generation (LLM-RAG) through a set of dashboards.

The following processes are covered:

- Configure LLM and Embedding services

- Encode documents into VectorDB

- Use LLM-RAG

All the dashboards are powered by the fit command. The dashboards showcase Document-based LLM-RAG functionalities. You are not limited to the options provided on the dashboards. You can tune the parameters on each dashboard, or embed a scheduled search that runs automatically.

Configure LLM and Embedding services

Make sure that you have enabled the LLM services and Embedding services of your choice on the Setup LLM-RAG (optional) page prior to starting the container. If you have not, finish the configuration and restart the container. See Set up LLM-RAG additional configurations.

For downloading local LLMs or embedding models, refer to the relevant sections in the Use Standalone LLM and Use Standalone VectorDB documents.

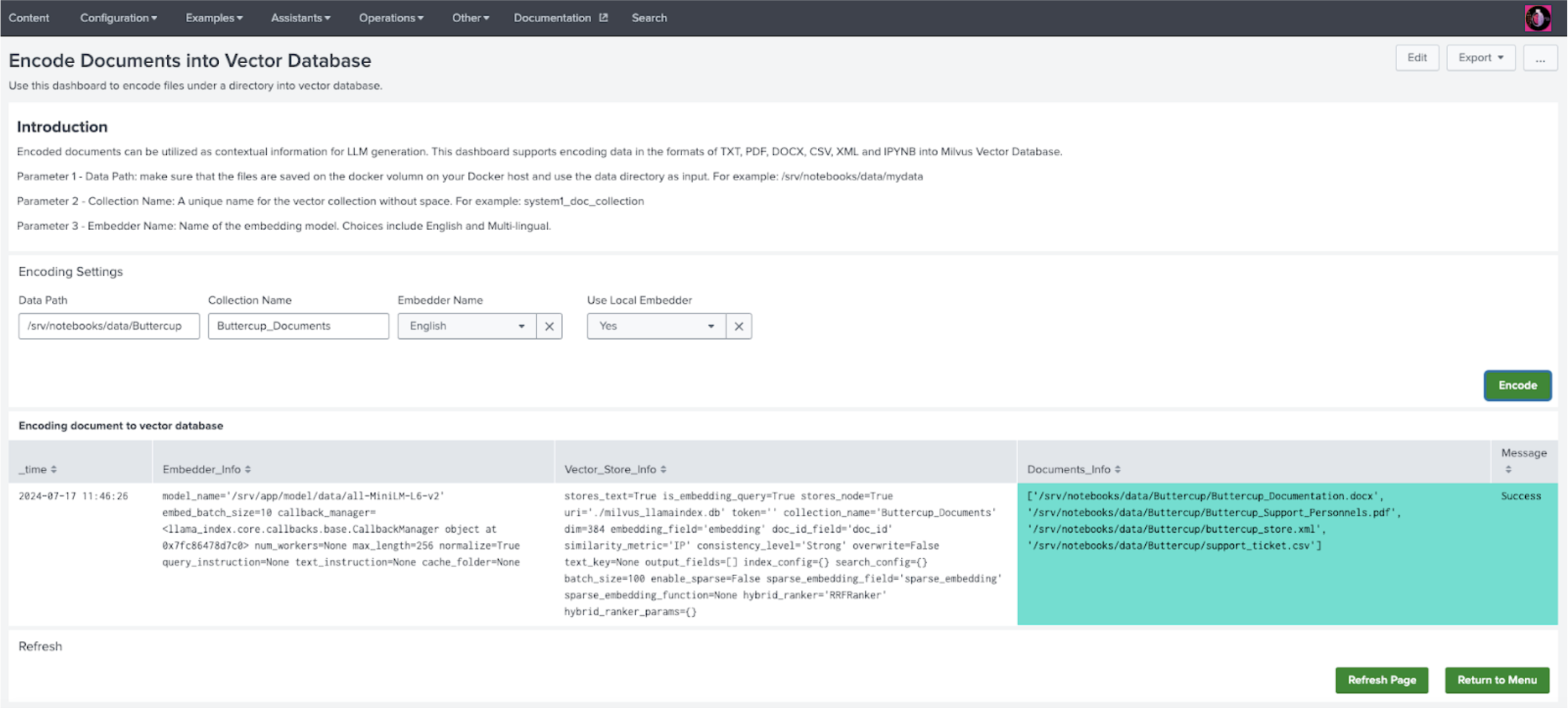

Encode documents into VectorDB

Complete the following steps:

- Gather the documents you want to encode into a single collection. The supported document extensions are TXT, PDF, DOCX, CSV, XML, and IPYNB.

- Upload the documents through JupyterLab or add the documents to the Docker volume:

| Upload option | Description |
| --- | --- |
| JupyterLab | Create a folder at any location in JupyterLab, for example notebooks/data/MyData, and upload all the files into the folder. |
| Add the documents to the Docker volume | Your files must exist on your Docker host. The Docker volume must be at /var/lib/docker/volumes/mltk-container-data/_data. Create a folder under this example path: /var/lib/docker/volumes/mltk-container-data/_data/notebooks/data/MyData. Copy the documents into this path. |

- In DSDL, navigate to Assistants, then LLM-RAG, then Encoding data into Vector Database, and then select Encode documents.

- On the dashboard, enter the data path with the prefix /srv. For example, if you have a JupyterLab folder at notebooks/data/Buttercup, enter /srv/notebooks/data/Buttercup.

- In Collection Name, enter a unique name to create a new collection. To add more data to an existing collection, enter the name of that collection.

- Choose the configured VectorDB service and Embedding service.

- Select Encode to start encoding. A list of messages is shown in the associated panel after the encoding finishes.

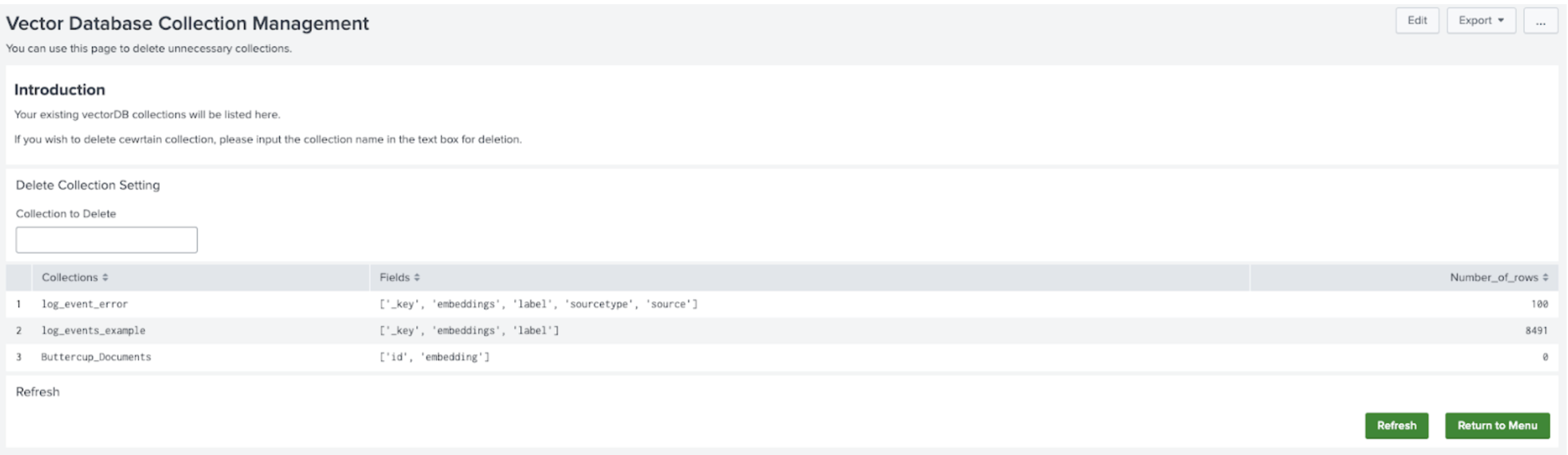

- Select Return to Menu and then select Manage and Explore your Vector Database. You will see the collection listed on the main panel.

It might take a few minutes for the complete number of rows to display.

On this page you can also delete any collection.

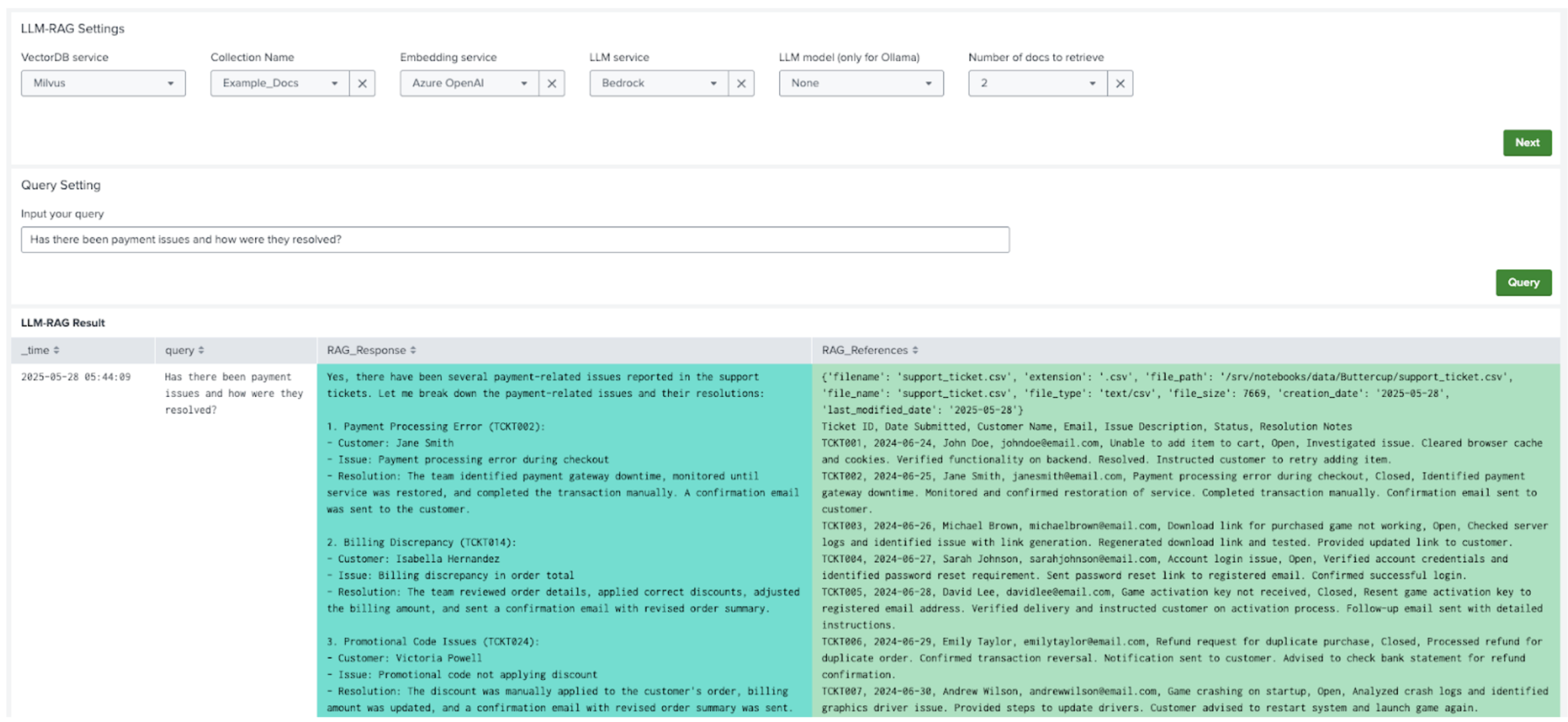
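
The preparation steps before encoding can be sketched in Python. This is a minimal sketch and not part of DSDL: the helper names `find_supported_documents`, `stage_documents`, and `dashboard_data_path` are hypothetical, and the sketch only reads the top level of the source folder. It filters files by the supported extensions listed in the steps, copies them into a target folder, and builds the /srv-prefixed path to enter on the dashboard.

```python
from pathlib import Path, PurePosixPath
import shutil

# Extensions listed as supported in the encoding steps above.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".docx", ".csv", ".xml", ".ipynb"}


def find_supported_documents(source_dir):
    """Return top-level files in source_dir with a supported extension, sorted by path."""
    source = Path(source_dir)
    return sorted(
        p for p in source.iterdir()
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )


def stage_documents(source_dir, target_dir):
    """Copy supported documents into target_dir and return the copied file names."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for doc in find_supported_documents(source_dir):
        shutil.copy2(doc, target / doc.name)
        copied.append(doc.name)
    return copied


def dashboard_data_path(jupyter_relative_path):
    """Prefix a JupyterLab-relative folder with /srv for the dashboard data path field."""
    return str(PurePosixPath("/srv") / jupyter_relative_path.strip("/"))


if __name__ == "__main__":
    # Hypothetical local folder; replace with the folder that holds your documents.
    source = Path("my_documents")
    if source.is_dir():
        print(stage_documents(source, "notebooks/data/MyData"))
    print(dashboard_data_path("notebooks/data/MyData"))
```

When you use the Docker volume option, you could run `stage_documents` on the Docker host with the example volume path as the target. The sketch assumes that a folder created under the volume is reachable from the dashboard under the same /srv prefix as a JupyterLab folder; confirm this in your own deployment.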

Use LLM-RAG

Complete the following steps:

- In DSDL, navigate to Assistants, then LLM-RAG, then Querying LLM, and then select RAG-based LLM.

- In Collection Name, select the existing collection that you want to search. Select the same VectorDB service and Embedding service that you used for encoding.

- Select the LLM service of your choice.

- Select Next to submit the settings.

- An Input your Query field becomes available. Enter your search and select Query as shown in the following image:


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.1
