Query LLM with vector data

After you pull the LLM to your local Docker container and encode document or log data into the vector database, you can run inference with the LLM. See Encode data into a vector database.

Before you begin, make sure you have encoded some documents or log data into the vector database.

To query an LLM with the vector database, use the following options:

- Standalone LLM: A one-shot Q&A agent that answers a user's questions based on prior knowledge within the training data.

- RAG-based LLM: Uses additional knowledge that has been encoded in the vector database.

- LLM with Function Calling: Runs predefined functions to acquire additional information and generate answers.

- Local LLM and Embedding Management: List, pull, and delete LLM models in your on-premises environment.

Standalone LLM

You can use this standalone LLM to conduct text-based classification or summarization by passing the text field to the algorithm along with a prompt that states the task.

Go to the Standalone LLM page:

- In the Splunk App for Data Science and Deep Learning (DSDL), go to Assistants.

- Select LLM-RAG, then Querying LLM with Vector Data, and then Standalone LLM.

Parameters

The Standalone LLM page has the following parameters:

| Parameter name | Description |

|---|---|

| llm_service | Type of LLM service. Choose from ollama, azure_openai, openai, bedrock, and gemini. |

| model_name | (Optional) The name of an LLM model. |

| prompt | A prompt that explains the task to the LLM model. |

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command:

`| makeresults | eval text = "Email text: Click to win prize" | fit MLTKContainer algo=llm_rag_ollama_text_processing llm_service=ollama prompt="You will examine if the following email content is phishing." text into app:llm_rag_ollama_text_processing`

- Run the `compute` command. To use Standalone LLM, make sure you append the `compute` command to a search pipeline that generates a table with a field called `text`:

`| makeresults | eval text = "Email text: Click to win prize" | compute algo:llm_rag_ollama_text_processing llm_service:ollama prompt:"You will examine if the following email content is phishing." text`
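The two commands above differ mainly in argument syntax: `fit` takes `key=value` pairs and ends with an `into app:` clause, while `compute` takes `key:value` pairs. The following Python sketch assembles either search string from the parameters in the table. The helper name `standalone_search` is hypothetical and is not part of DSDL. Because the quoting rules for embedded double quotes are not covered here, the sketch rejects them rather than guessing at an escape sequence.

```python
from typing import Optional

# Algorithm name taken from the commands shown above.
ALGO = "llm_rag_ollama_text_processing"


def _quoted(value: str) -> str:
    """Wrap a value in double quotes; reject embedded quotes (conservative assumption)."""
    if '"' in value:
        raise ValueError("double quotes are not supported in this sketch")
    return f'"{value}"'


def standalone_search(text: str, prompt: str, llm_service: str = "ollama",
                      model_name: Optional[str] = None, command: str = "fit") -> str:
    """Return a Standalone LLM search string (hypothetical helper, not a DSDL API)."""
    if command not in ("fit", "compute"):
        raise ValueError("command must be 'fit' or 'compute'")
    # fit uses key=value arguments, compute uses key:value arguments.
    sep = "=" if command == "fit" else ":"
    args = [f"algo{sep}{ALGO}", f"llm_service{sep}{llm_service}"]
    if model_name:  # model_name is optional, so it is only emitted when given
        args.append(f"model_name{sep}{_quoted(model_name)}")
    args.append(f"prompt{sep}{_quoted(prompt)}")
    # The pipeline must produce a field called text before the command runs.
    head = f"| makeresults | eval text = {_quoted(text)} | "
    if command == "fit":
        return f"{head}fit MLTKContainer {' '.join(args)} text into app:{ALGO}"
    return f"{head}compute {' '.join(args)} text"


if __name__ == "__main__":
    print(standalone_search(
        "Email text: Click to win prize",
        "You will examine if the following email content is phishing.",
        command="compute",
    ))
```

You can paste the printed string into the search bar, or pass it to whatever client you use to submit searches.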

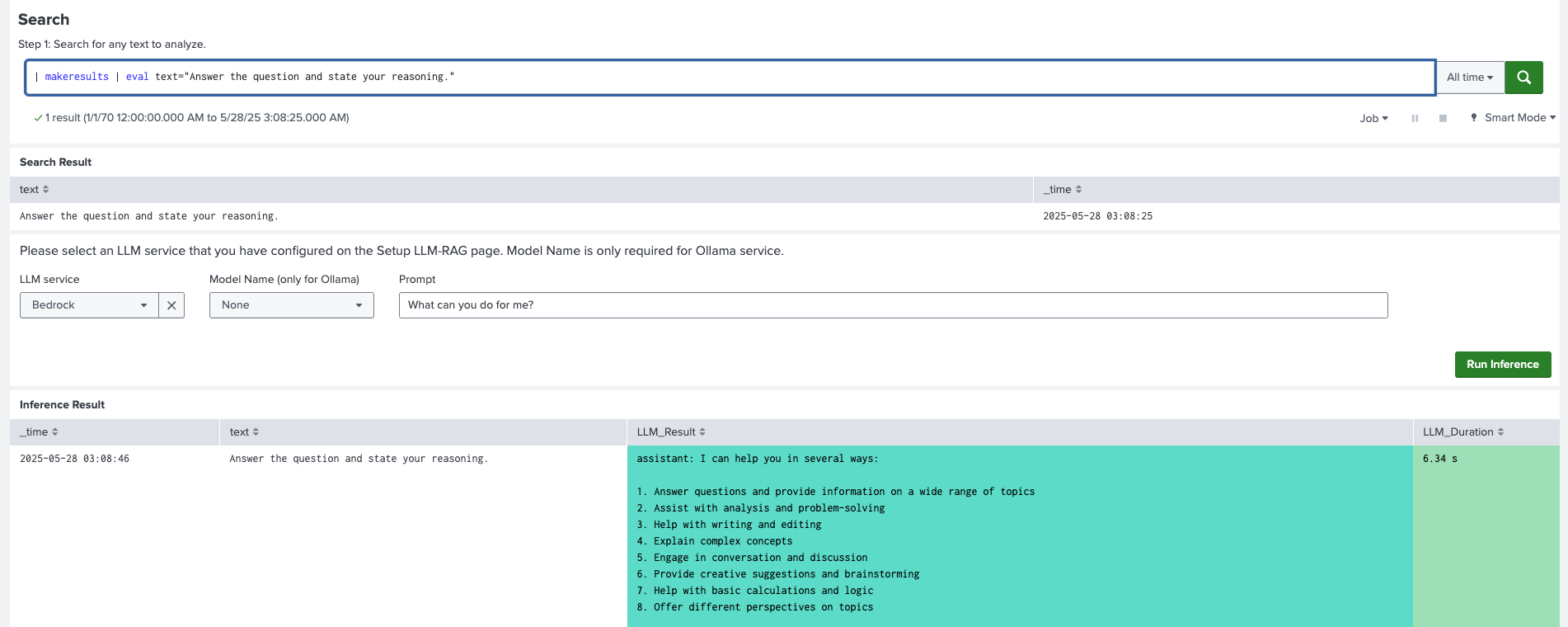

Dashboard view

The following image shows the dashboard view for the Standalone LLM page:

The dashboard view includes the following components:

| Dashboard component | Description |

|---|---|

| Search bar | Create a search pipeline that generates a table with a field called text that contains the text you want to search. |

| Select LLM Service | Choose the LLM service. |

| Select LLM Model | The name of an LLM model that exists in your environment. If no model is shown in the dropdown menu, go to the LLM management page to pull models. |

| Prompt | Write a prompt explaining the task to the LLM. For example, "Is the following email phishing?" |

| Run Inference | Start searching after finishing all the inputs. |

| Refresh Page | Reset all the tokens on this dashboard. |

| Return to Menu | Return to the main menu. |

RAG-based LLM

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Querying LLM with Vector Data, and then RAG-based LLM.

Parameters

The RAG-based LLM page has the following parameters:

| Parameter name | Description |

|---|---|

| llm_service | Type of LLM service. Choose from ollama, azure_openai, openai, bedrock, and gemini. |

| model_name | (Optional) The name of an LLM model. |

| collection_name | The existing collection to conduct similarity search on. |

| vectordb_service | Type of VectorDB service. Choose from milvus, pinecone, and alloydb. |

| embedder_service | Type of embedding service. Choose from huggingface, ollama, azure_openai, openai, bedrock, and gemini. |

| top_k | Number of document pieces to retrieve for generation. |

| embedder_name | Name of embedding model. Optional if configured on the Setup LLM-RAG page. |
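To make the roles of collection_name and top_k more concrete, the following Python sketch shows the general retrieval step of RAG: score every stored piece against the query embedding, keep the top_k best matches, and place them in the prompt. This is a conceptual illustration only, not the DSDL implementation. The function names, the toy two-dimensional vectors, and the choice of cosine similarity are all assumptions, since the actual metric depends on how the vector database is configured.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_top_k(query_vec: Sequence[float],
                   collection: List[Tuple[str, Sequence[float]]],
                   top_k: int) -> List[Tuple[str, float]]:
    """Return the top_k (text, score) pairs, most similar first."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in collection]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Toy collection: (document piece, embedding). Real embeddings come from the embedder.
    collection = [
        ("store architecture", [1.0, 0.0]),
        ("payment logs", [0.0, 1.0]),
        ("web tier", [0.8, 0.6]),
    ]
    hits = retrieve_top_k([1.0, 0.1], collection, top_k=2)
    print(build_prompt("Describe the architecture", [text for text, _ in hits]))
```

A larger top_k gives the LLM more context at the cost of a longer prompt, and a smaller value keeps the prompt focused on the closest matches.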

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command:

`| makeresults | eval query = "Tell me more about the Buttercup online store architecture" | fit MLTKContainer algo=llm_rag_script llm_service=ollama model_name="llama3" embedder_service=huggingface collection_name="document_collection_example" top_k=5 query into app:llm_rag_script as RAG`

- Run the `compute` command:

`| makeresults | eval query = "Tell me more about the Buttercup online store architecture" | compute algo:llm_rag_script llm_service:ollama model_name:"llama3" embedder_service:huggingface collection_name:"document_collection_example" top_k:5 query`

Dashboard view

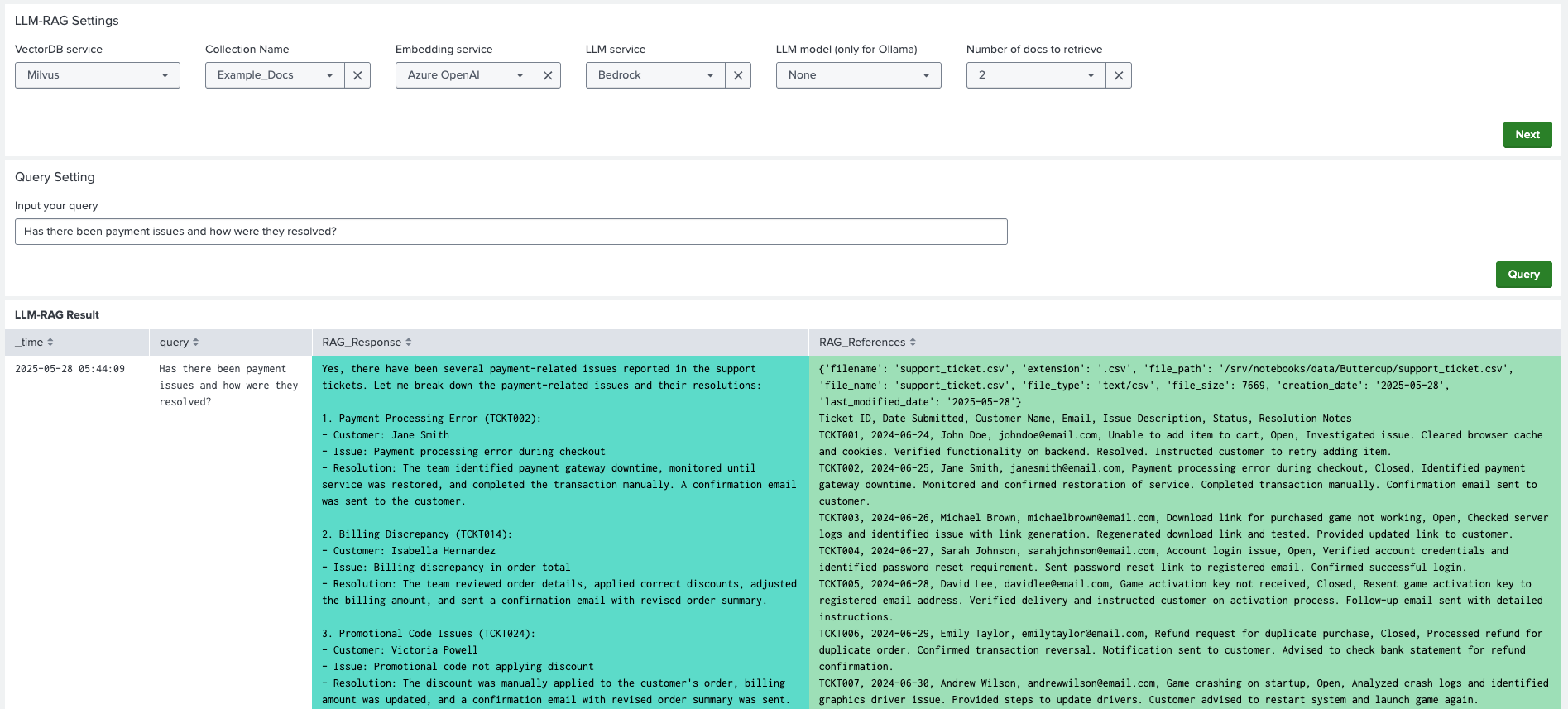

The following image shows the dashboard view for the RAG-based LLM page:

The dashboard view includes the following components:

| Dashboard component | Description |

|---|---|

| VectorDB Service | Type of vectorDB service. |

| Collection Name | An existing collection you want to use for the LLM-RAG. |

| Embedder Service | Type of embedding service. |

| LLM Service | Type of LLM service. |

| LLM Model | LLM model name. Only used for Ollama LLM service. |

| Number of docs to retrieve | Number of document pieces or log messages you wish to use in the RAG. |

| Input your query | Write your search in the text box. |

| Next | Submit the inputs and move on to search input. |

| Query | Select after entering your search and start Retrieval-Augmented Generation (RAG). |

| Refresh Page | Reset all the tokens on this dashboard. |

| Return to Menu | Return to the main menu. |

LLM with Function Calling

The following built-in function tools are available for the model to use:

- Search Splunk

- List indexes

- Get index info

- List saved searches

- List users

- Get indexes and sourcetypes

- Splunk health check

You can customize these function tools for specific use cases.
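As a rough illustration of the function calling pattern, the following Python sketch shows how a tool request emitted by a model could be routed to a predefined function. It is not the DSDL implementation. The tool names mirror two items from the list above, but the function signatures, the JSON request shape, and the stub return values are all hypothetical placeholders.

```python
import json
from typing import Any, Callable, Dict, List


def list_indexes() -> List[str]:
    """Hypothetical stub: a real tool would query Splunk for its indexes."""
    return ["_internal", "main"]  # placeholder data, not real output


def get_index_info(index_name: str) -> Dict[str, Any]:
    """Hypothetical stub: a real tool would look up metadata for the index."""
    return {"index": index_name, "event_count": 0}  # placeholder data


# Registry of tool names the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {
    "list_indexes": list_indexes,
    "get_index_info": get_index_info,
}


def dispatch(tool_call_json: str) -> Any:
    """Run the tool named in a JSON request of the assumed form
    {"name": ..., "arguments": {...}} and return its result."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    request = '{"name": "get_index_info", "arguments": {"index_name": "_internal"}}'
    # The result would normally be passed back to the model to compose the answer.
    print(dispatch(request))
```

Keeping the tools in an explicit registry means the model can only trigger functions you have registered, and adding a custom tool means adding one more entry.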

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Querying LLM with Vector Data, and then LLM with Function Calling.

Parameters

The LLM with Function Calling page has the following parameters:

| Parameter name | Description |

|---|---|

| prompt | The query for the LLM, written in natural language. |

| llm_service | Type of LLM service. Choose from ollama, azure_openai, openai, bedrock, and gemini. |

| model_name | The name of an LLM model that exists in your environment. |

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command:

`| makeresults | fit MLTKContainer algo=llm_rag_function_calling prompt="Search Splunk for index _internal and sourcetype splunkd for events containing keyword error from 60 minutes ago to 30 minutes ago. Tell me how many events occurred" llm_service=bedrock _time into app:llm_rag_function_calling as RAG`

- Run the `compute` command:

`| makeresults | compute algo:llm_rag_function_calling prompt:"Search Splunk for index _internal and sourcetype splunkd for events containing keyword error from 60 minutes ago to 30 minutes ago. Tell me how many events occurred" llm_service:bedrock _time`

Dashboard view

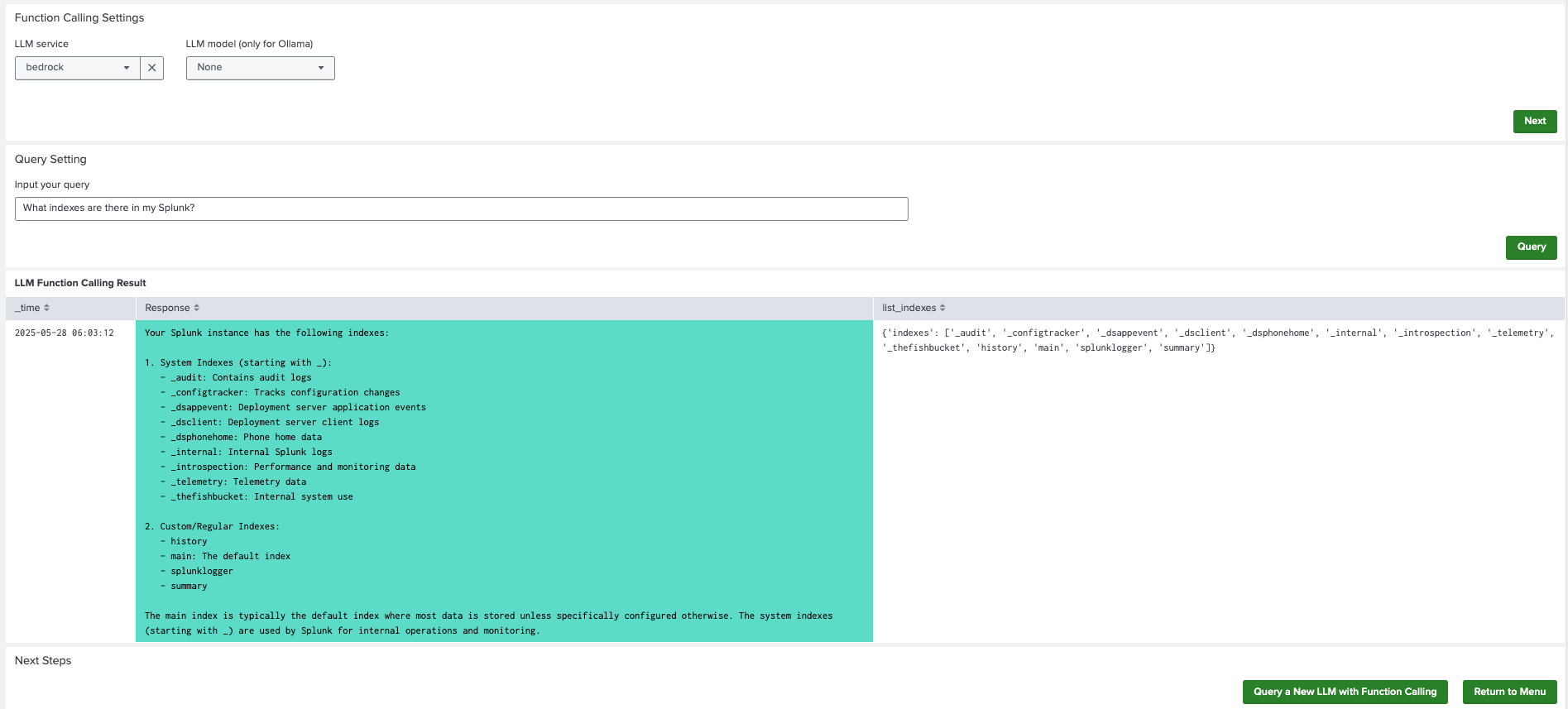

The following image shows the dashboard view for the LLM with Function Calling page:

The dashboard view includes the following components:

| Dashboard component | Description |

|---|---|

| Select LLM Service | Type of LLM service. |

| Select LLM model | (Optional) Name of the Ollama model. |

| Input your query | Write your search in the text box. |

| Next | Submit the inputs and move on to search input. |

| Query | Select after entering your search and start Retrieval-Augmented Generation (RAG). |

| Refresh Page | Reset all the tokens on this dashboard. |

| Return to Menu | Return to the main menu. |

Local LLM and Embedding Management

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Querying LLM with Vector Data, and then Local LLM and Embedding Management.

Parameters

The Local LLM and Embedding Management page has the following parameters:

| Parameter name | Description |

|---|---|

| task | The specific task for management. Choose PULL to download a model and DELETE to delete a model. |

| model_type | Type of model to pull or delete. Choose from LLM and embedder_model. Downloaded embedder models are stored under /srv/app/model/data. |

| model_name | The specific model name. This field is required for downloading or deleting models. |

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command:

`| makeresults | fit MLTKContainer algo=llm_rag_ollama_model_manager task=pull model_type=LLM model_name=mistral _time into app:llm_rag_ollama_model_manager`

- Run the `compute` command:

`| makeresults | compute algo:llm_rag_ollama_model_manager task:list model_type:LLM _time`

Dashboard view

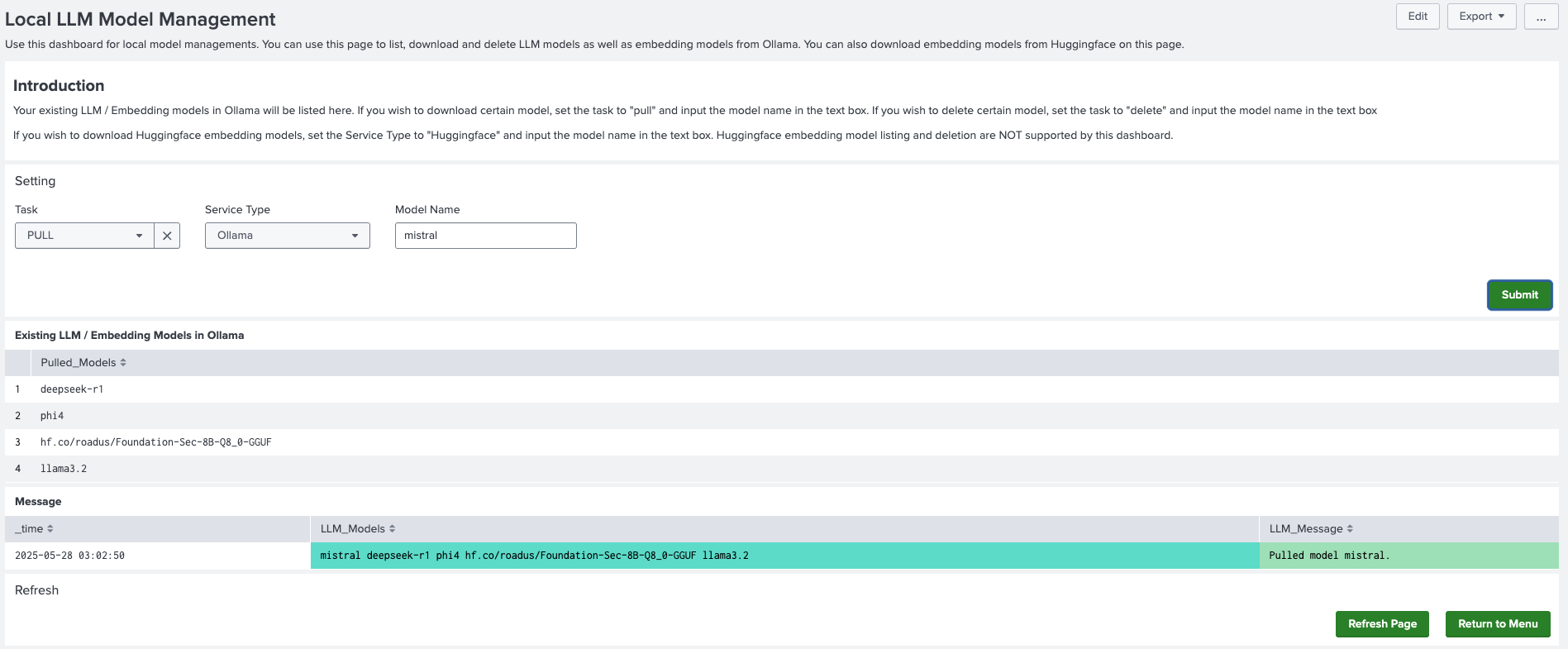

The following image shows the dashboard view for the 'Local LLM and Embedding Management page:

The dashboard view includes the following components:

| Dashboard component | Description |

|---|---|

| Task | The task to perform. Choose PULL to download a model and DELETE to delete a model. |

| Service Type | Type of model to pull or delete. Choose from Ollama or Huggingface. |

| Model Name | The name of the model to perform a certain task on. |

| Submit | Select to run the task. |

| Refresh Page | Reset all the tokens on this dashboard. |

| Return to Menu | Return to the main menu. |


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.1
