Set up additional LLM-RAG configurations

DSDL version 5.2.1 introduces an optional setup page that lets you configure additional LLM-RAG features. On this page, you can tailor the configuration of the Large Language Model (LLM), embedding model, vector database (VectorDB), and graph database (GraphDB) modules.

Version 5.2.1 adds cloud-based options for further customization. You can select from the following options for each module:

| Feature | Options |
|---|---|
| Large Language Models | |
| Embedding models | |
| Vector DB | AlloyDB users must create a table on AlloyDB prior to using the table as a collection. |
| Graph DB | |

You can configure multiple options for the same module and switch between them by specifying the option in the fit command, for example llm_service=bedrock.
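The following Python sketch illustrates keeping several services configured and picking one per search through the llm_service value. It is not DSDL code: the dictionary layout, the `select_llm_service` helper, and every option key other than `bedrock` are hypothetical.

```python
from typing import Dict

# Hypothetical layout: one entry per configured option, keyed by the value you
# would pass as llm_service=<option>. Only "bedrock" comes from this page; the
# other keys and all field values are placeholders.
CONFIGURED_LLM_SERVICES: Dict[str, dict] = {
    "ollama": {"enabled": True, "model": "example-local-model"},
    "bedrock": {"enabled": True, "model": "example-bedrock-model"},
    "azure_openai": {"enabled": False, "model": "example-deployment"},
}


def select_llm_service(llm_service: str, services: Dict[str, dict]) -> dict:
    """Return the configuration for the requested service (hypothetical helper)."""
    # Normalize the value so " Bedrock " and "bedrock" select the same entry.
    key = llm_service.strip().lower()
    if key not in services:
        raise KeyError(f"no configuration found for llm_service={llm_service}")
    config = services[key]
    # Stand-in for the Enable Service setting on the setup page.
    if not config.get("enabled", False):
        raise ValueError(f"llm_service={llm_service} is configured but not enabled")
    return config
```

For example, `select_llm_service("bedrock", CONFIGURED_LLM_SERVICES)` returns the Bedrock entry. In this sketch, an option that is configured but not enabled raises an error, standing in for the Enable Service setting.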

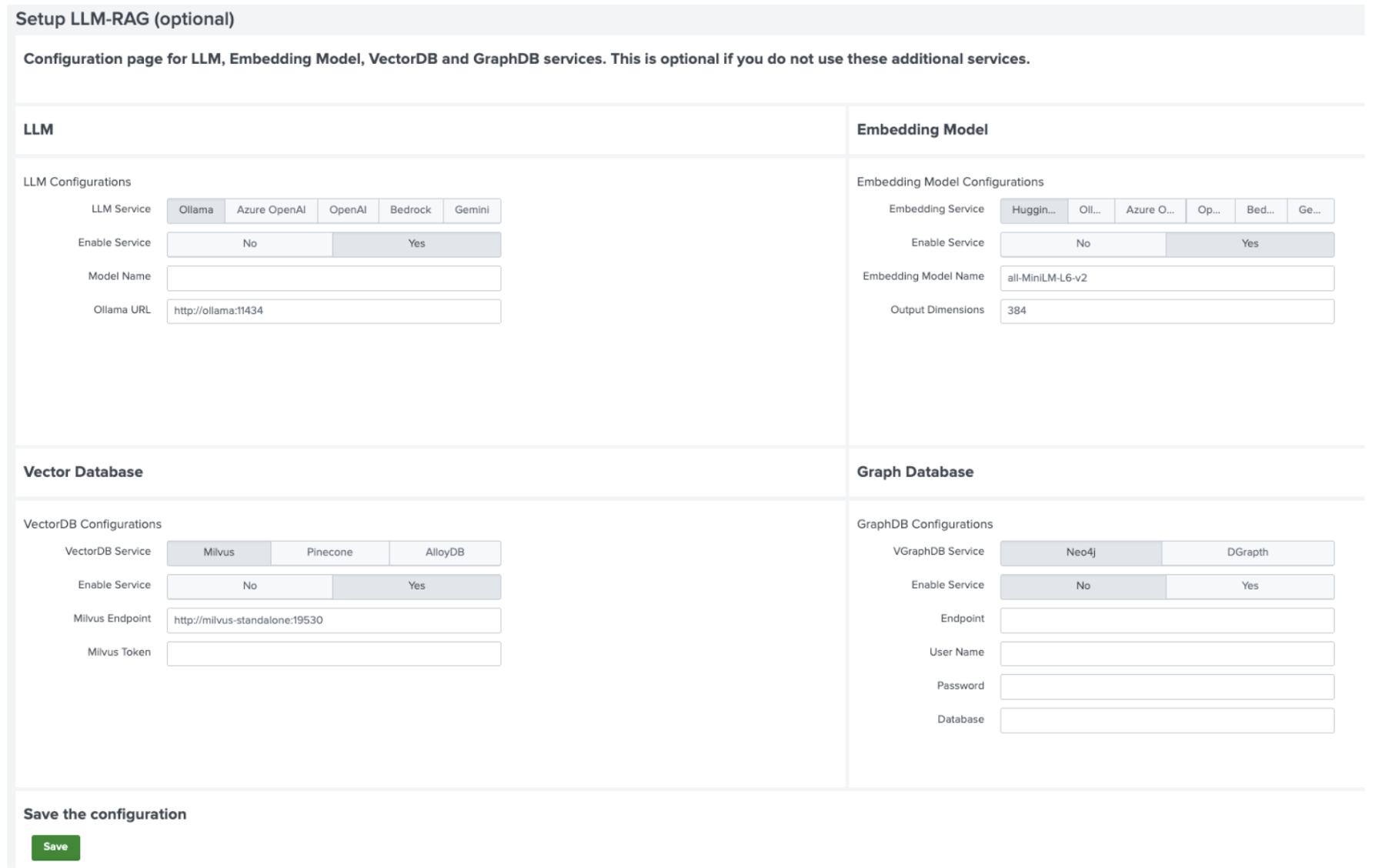

The Setup LLM-RAG (optional) page is where you can input configurations for each service. When you select Save, your customizations are saved into the llm.conf configuration file. Any password-related items are encrypted and kept in secret storage.

These configurations are integrated into the container environment upon startup. To apply changes restart the active LLM-RAG container.

The following image shows the Setup LLM-RAG (optional) page before any settings are configured:

Configure the settings panel

On this setup page, only one configuration is allowed for each option. However, for local LLMs on Ollama and local embedding models on Huggingface, you can override the default model by specifying the model name in the fit command, for example model_name=llama3.3.
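A minimal sketch of this precedence rule, assuming the override applies only to Ollama and Huggingface and that any other option keeps the model name saved on the setup page. The `resolve_model_name` helper and the default model name `llama3` used below are hypothetical, and DSDL's actual handling of a model_name value for other options may differ.

```python
from typing import Optional

# Options that, per this page, accept a model_name override in the fit command.
OVERRIDABLE_OPTIONS = {"ollama", "huggingface"}


def resolve_model_name(option: str, setup_default: str,
                       fit_model_name: Optional[str] = None) -> str:
    """Pick the model name for a request (hypothetical helper).

    A model_name given in the fit command wins for Ollama and Huggingface;
    otherwise the model name saved on the setup page is used.
    """
    if fit_model_name and option.strip().lower() in OVERRIDABLE_OPTIONS:
        return fit_model_name
    # Assumption: other options ignore the override and keep the setup default.
    return setup_default
```

For example, `resolve_model_name("ollama", "llama3", "llama3.3")` returns `"llama3.3"`, and omitting the third argument returns the setup-page default.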

By default, Ollama is enabled for LLMs, Huggingface for embedding models, and Milvus for VectorDB. These defaults follow the DSDL 5.2.0 setup, in which the LLM and VectorDB modules share the same Docker network as the LLM-RAG container.
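If you keep these defaults or change the endpoint URLs, it can help to confirm from inside the LLM-RAG container that each endpoint accepts connections. The sketch below only tests TCP reachability of a host and port parsed from a URL; it does not check that the service behind it is Ollama or Milvus. The `endpoint_reachable` helper is hypothetical, and any example URL is an assumption: host names depend on your Docker network, and 11434 and 19530 are the usual default ports for Ollama and Milvus.

```python
import socket
from urllib.parse import urlparse


def endpoint_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    if parsed.hostname is None or parsed.port is None:
        raise ValueError(f"URL must include a host and an explicit port: {url}")
    try:
        # create_connection resolves the host name and opens a TCP socket.
        with socket.create_connection((parsed.hostname, parsed.port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

For example, `endpoint_reachable("http://ollama:11434")` returns False when the host name does not resolve on the container's network or nothing is listening on that port.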

To configure an option on the Setup LLM-RAG (optional) page, follow these steps:

- Select an option name within the panel of the module you want to configure. For example, select Bedrock in the LLM panel.

- Select Yes for Enable Service.

- Fill out the required fields for the chosen option.

- If needed, modify the endpoint URLs for Ollama and Milvus to match your environment.

The model name you set on this setup page serves as the default model when no model name is specified in the fit command.

For embedding models, you must specify the output dimensionality in the Output Dimensions field.
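Vector databases such as Milvus typically fix the vector dimension when a collection is created, so embeddings whose length differs from the configured Output Dimensions value cannot be stored in that collection. The following check is a hypothetical helper, not part of DSDL, that flags mismatched vectors before they are written.

```python
from typing import List, Sequence


def mismatched_embeddings(vectors: Sequence[Sequence[float]],
                          output_dimensions: int) -> List[int]:
    """Return the indices of vectors whose length differs from output_dimensions."""
    if output_dimensions <= 0:
        raise ValueError("output_dimensions must be a positive integer")
    return [i for i, vector in enumerate(vectors) if len(vector) != output_dimensions]


# Toy vectors; real embedding models produce hundreds or thousands of values.
sample = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7]]
bad = mismatched_embeddings(sample, output_dimensions=4)
if bad:
    print(f"Vectors at positions {bad} do not match Output Dimensions")
```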

Configure LLM or embedding models for DSDL on Splunk Enterprise

If you use DSDL on Splunk Enterprise and want to configure multiple LLM or embedding models under the same option, such as Azure OpenAI, you can use an alternative to the standard setup page.

With this method, password-related items in the llm_config.json file are not encrypted. Choose this approach only if you accept the associated security risks.

To use this method, follow these steps:

- Create an llm_config.json configuration file based on the example template at https://github.com/huaibo-zhao-splunk/mltk-container-docker-521/blob/main/llm_config.json. The example configures two LLMs under Azure OpenAI as a list. You can use the same approach to configure multiple items for the service of your choice.

- Place the llm_config.json file in the $SPLUNK_HOME/etc/apps/mltk-container/local directory.

- Open the $SPLUNK_HOME/etc/apps/mltk-container/bin/start_handler.py file. Uncomment lines 59 through 65 and comment out lines 66 through 71. This change passes the llm_config.json file you created directly into the container, bypassing the default Splunk configuration files.
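The first two steps can be scripted. This Python sketch parses llm_config.json before copying it, so that a JSON syntax error is caught before the container reads the file, and then places the file in $SPLUNK_HOME/etc/apps/mltk-container/local. The `install_llm_config` helper is hypothetical, it does not check the file against the template's structure, and it does not perform the start_handler.py edit, which you still make by hand.

```python
import json
import shutil
from pathlib import Path


def install_llm_config(source: Path, splunk_home: Path) -> Path:
    """Validate llm_config.json as JSON, then copy it into the app's local directory."""
    # Parse first: a syntax error raises json.JSONDecodeError and nothing is copied.
    with source.open("r", encoding="utf-8") as handle:
        json.load(handle)
    target_dir = splunk_home / "etc" / "apps" / "mltk-container" / "local"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "llm_config.json"
    # Overwrites any existing llm_config.json in the local directory.
    shutil.copyfile(source, target)
    return target
```

For example, `install_llm_config(Path("llm_config.json"), Path(os.environ["SPLUNK_HOME"]))` (with `import os`) copies the file from the current directory into the mltk-container app.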


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.1
