Filtering data in DSP

You can filter out unnecessary records in your data pipeline in the Data Stream Processor (DSP). The filter function passes records that match the filter condition to downstream functions in your pipeline. You can also use filter to route your data to downstream branches in your pipeline.

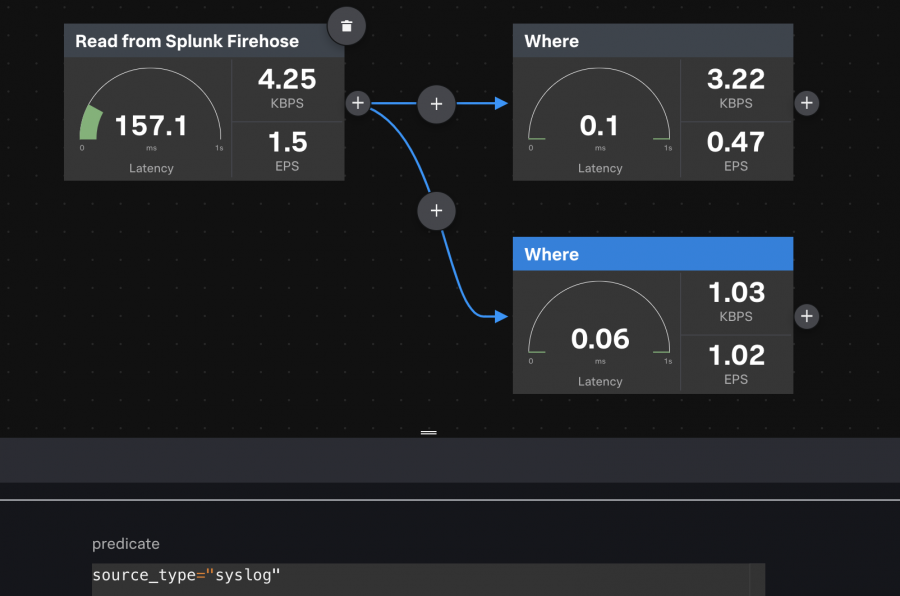

You can also branch your pipeline and apply a different filter to each branch, so that one set of records flows down one branch and a different set flows down the other. In the pipeline shown, one filter passes records where the source field is syslog, and the other passes records where the source field isn't syslog.
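The branching behavior described above can be sketched outside DSP in plain Python. This is only an illustration of the semantics, not DSP syntax: records are modeled as dictionaries, and `filter_records` and the sample records are hypothetical names introduced for this example.

```python
from typing import Callable, Iterable, Iterator

Record = dict  # assumption: a record is modeled as a plain dictionary


def filter_records(records: Iterable[Record],
                   condition: Callable[[Record], bool]) -> Iterator[Record]:
    """Yield only the records for which the condition is true."""
    for record in records:
        if condition(record):
            yield record


# Hypothetical sample records standing in for upstream pipeline data.
records = [
    {"source": "syslog", "body": "link down"},
    {"source": "http", "body": "GET /index.html"},
    {"source": "syslog", "body": "link up"},
]

# Each branch applies its own filter to the same upstream data.
syslog_branch = list(filter_records(records, lambda r: r.get("source") == "syslog"))
other_branch = list(filter_records(records, lambda r: r.get("source") != "syslog"))

print(len(syslog_branch), "records in the syslog branch")
print(len(other_branch), "records in the other branch")
```

Because the two conditions are complements of each other, every record ends up in exactly one branch. If the conditions overlapped, a record could flow down both.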

Filter conditions

The following table lists common filter conditions (predicates) that you can use.

| Predicate | Description |
|---|---|
| eq(get("kind"), "metric"); | If the kind field is equal to metric, the record passes downstream. If not, the record is not passed downstream. |
| like(get("source_type"), "cisco%"); | If the source_type field begins with the string "cisco", the record passes downstream. The % character is a wildcard that matches any trailing characters. If not, the record is not passed downstream. |
| gte(get("ttms"), 5000); | Here, ttms is a custom top-level field that contains latency information. If the record has a ttms value of 5000 or greater (5 seconds or more, assuming ttms is in milliseconds), the record passes downstream. If not, the record is not passed downstream. |
| not(eq(get("timestamp"), null)); | If the timestamp field is not null, the record passes downstream. If timestamp is null, the record is not passed downstream. |
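To make the semantics of these predicates concrete, here is a minimal Python sketch that mimics them on a single record. It is not DSP code: the helper functions `get`, `like`, and `gte` are hypothetical stand-ins written for this example, `None` stands in for null, and `like` is assumed to treat only % as a wildcard.

```python
import re
from typing import Any, Optional


def get(record: dict, field: str) -> Optional[Any]:
    """Return a top-level field, or None (stand-in for null) if it is missing."""
    return record.get(field)


def like(value: Optional[str], pattern: str) -> bool:
    """Match value against a pattern where % matches any run of characters."""
    if value is None:
        return False
    # Escape every literal character and translate % into the regex ".*".
    regex = "".join(".*" if ch == "%" else re.escape(ch) for ch in pattern)
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None


def gte(value: Optional[float], threshold: float) -> bool:
    """Greater than or equal to; a null value never passes."""
    return value is not None and value >= threshold


# Hypothetical record used to evaluate each predicate from the table.
record = {"kind": "metric", "source_type": "cisco:asa", "ttms": 5000, "timestamp": None}

results = [
    get(record, "kind") == "metric",          # eq(get("kind"), "metric")
    like(get(record, "source_type"), "cisco%"),  # like(get("source_type"), "cisco%")
    gte(get(record, "ttms"), 5000),           # gte(get("ttms"), 5000)
    not (get(record, "timestamp") is None),   # not(eq(get("timestamp"), null))
]
print(results)
```

In this sketch a value of exactly 5000 passes the gte check, and a source_type such as "my-cisco" would not match "cisco%" because the wildcard appears only at the end of the pattern.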

Use a filter function

Add a Filter function to allow only the records that match a specified condition to pass to other downstream functions.

- From the Data Pipelines Canvas view, click the + icon and add the Filter function to your pipeline.

- In the Filter function, specify the filter condition. For examples of filter conditions, see the Filter function reference or the Filter conditions section.

- With your Filter function highlighted, click Start Preview to verify that the expression is working as expected.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.0.0
