All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Create a pipeline using the SPL View

The SPL View allows you to quickly create and configure pipelines using SPL2 (Search Processing Language) statements. Normally, you use SPL2 to search through indexed data using SPL2 search syntax. However, in the Data Stream Processor, you use SPL2 statements to configure the functions in your pipeline to transform incoming streaming data. Unlike SPL2 search syntax, SPL2 statements support variable assignments and require a semicolon to terminate each statement. Use the SPL View if you are already experienced with SPL2. In the SPL View, you can also easily copy and share pipelines with other users, or get a quick overview of the configurations in your entire pipeline without having to click through each function on the canvas.

To access the SPL View, from the homepage, click Get Started and select a source. Then, on the Canvas View, click the SPL View toggle to switch over to the SPL View.

The pipeline toggle button is currently a beta feature and repeated toggles between the two views can lead to unexpected results. If you are editing an active pipeline, using the toggle button can lead to data loss.

Add a data source to a pipeline using SPL2

Your pipeline must start with the from function and a data source function. The from function reads data from a designated data source function. The required syntax is:

| from <source_function>;

If you've toggled over to the SPL View from the Canvas View, then this step is done for you.
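As a minimal sketch, assuming the same splunk_firehose source function that the examples later in this topic use, the shortest statement that satisfies this requirement would look like the following. It only reads from the source; functions and a destination are added in the next sections.

```
| from splunk_firehose();
```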

Add functions to a pipeline using SPL2

You can expand on your SPL2 statement to add functions to a pipeline. The pipe ( | ) character is used to separate the syntax of one function from the next function.

The following example reads data from the Data Stream Firehose source function and then pipes that data to the Where function. The where function filters for records with the "syslog" sourcetype.

| from splunk_firehose() | where source_type="syslog";

You can continue to add functions to your pipeline by continuing to pipe from the last function. The following example adds an Apply Timestamp Extraction function to your pipeline to extract a timestamp from the body field to the timestamp field.

| from splunk_firehose() | where source_type="syslog" | apply_timestamp_extraction extraction_type="auto";

As you continue to add functions to your pipeline, click Build to compile your SPL2 statements and validate each function's configuration. Remember that you must terminate each SPL2 statement with a semi-colon ( ; ).

Specify a destination to send data to using SPL2

When you are ready to end your pipeline, end your SPL2 statement with a pipe ( | ), the Into function, and a sink function. The into function sends data to a designated data destination (sink function).

In the following example, we are expanding on the previous SPL2 statements by adding a Send to Splunk HTTP Event Collector sink function. This sink function sends your data to a Splunk Cloud Platform environment.

| from splunk_firehose() | where source_type="syslog" | apply_timestamp_extraction extraction_type="auto" | into splunk_enterprise_indexes(
    "ec8b127d-ac69-4a5c-8b0c-20c971b78b90",
    cast(map_get(attributes, "index"), "string"),
    "main",
    {"hec-enable-ack": "false", "hec-token-validation": "true", "hec-gzip-compression": "true"},
    "100B",
    5000);

Click Build to compile your SPL2 statements and validate each function's configuration. Remember that you must terminate each SPL2 statement with a semi-colon ( ; ).

Branch a pipeline using SPL2

You can also perform different transformations and send your data to multiple destinations by branching your pipeline. To branch your pipeline, declare a variable and assign the functions that you want to share between branches to that variable. Then invoke the declared variable to define each branch in your pipeline, using an SPL2 statement that terminates with a semi-colon ( ; ). Variable names must begin with a dollar sign ($) and can only contain letters, numbers, or underscores.

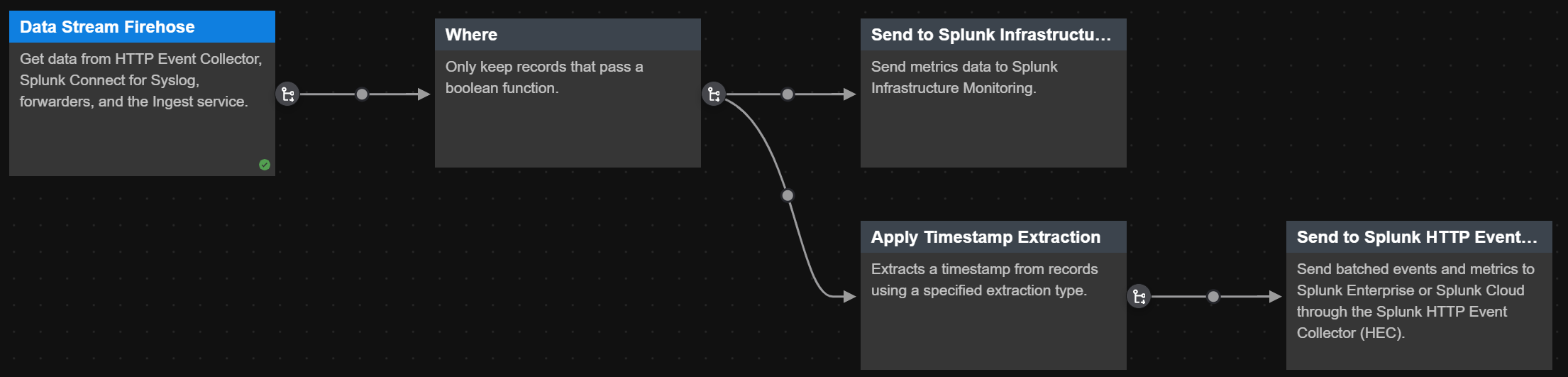

The following example adds a branch to the pipeline from the previous example. The $statement_1 variable is declared and the Data Stream Firehose source function along with the Where function are assigned to the variable. Then the variable is invoked to build the pipeline branches.

$statement_1 = | from splunk_firehose() | where source_type="syslog";

| from $statement_1 | apply_timestamp_extraction fallback_to_auto=false extraction_type="auto" | into splunk_enterprise_indexes("ec8b127d-ac69-4a5c-8b0c-20c971b78b90", cast(map_get(attributes, "index"), "string"), "main", {"hec-gzip-compression": "true", "hec-token-validation": "true", "hec-enable-ack": "false"}, "100B", 5000L);

| from $statement_1 | into signalfx("ad4c327d-ac69-4a5c-8b0c-20c971b78b90", "my_metric_name", 0.33, "COUNTER");

The first branch reads records that have source_type matching syslog from Data Stream Firehose and extracts the timestamp from the records to a top-level field. See Apply Timestamp Extraction for more details. The transformed records are then sent to Splunk Cloud Platform using the Send to Splunk HTTP Event Collector sink function.

The second branch also reads records that have source_type matching syslog from Data Stream Firehose but sends that data to a Splunk Infrastructure Monitoring endpoint.
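The same pattern works with any variable name that follows the naming rules. As a sketch, the following variant renames the shared variable to $syslog_events, a hypothetical name chosen only for illustration, and reuses the functions and sink arguments from the example above unchanged. By the same rules, a name such as $syslog-events would not be accepted because it contains a hyphen, and syslog_events would not be accepted because it lacks the leading dollar sign.

```
$syslog_events = | from splunk_firehose() | where source_type="syslog";

| from $syslog_events | apply_timestamp_extraction fallback_to_auto=false extraction_type="auto" | into splunk_enterprise_indexes("ec8b127d-ac69-4a5c-8b0c-20c971b78b90", cast(map_get(attributes, "index"), "string"), "main", {"hec-gzip-compression": "true", "hec-token-validation": "true", "hec-enable-ack": "false"}, "100B", 5000L);

| from $syslog_events | into signalfx("ad4c327d-ac69-4a5c-8b0c-20c971b78b90", "my_metric_name", 0.33, "COUNTER");
```

Each of the three statements ends with its own semi-colon, and both branches start by invoking the shared variable with the from function.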

Click the Start Preview button to compile your SPL2 statements and validate the pipeline's configuration. Here's what the pipeline from the example looks like:

Differences and inconsistent behaviors between the Canvas View and the SPL View

You can toggle between the Canvas View and the SPL View. There are several differences between the Canvas View and the SPL View.

Differences

| Canvas View | SPL View |
|---|---|
| Accepts SPL2 Expressions as input. | Accepts SPL2 Statements as input. SPL2 statements support variable assignments and must be terminated by a semi-colon. |
| For source and sink functions, optional arguments that are blank are automatically filled in with their default values. | If you want to specify any optional arguments in source or sink functions, you can pass arbitrary arguments using named syntax in any order. All unprovided arguments will continue to use their default values. See SPL2 for DSP for more details. |
| When using a connector, the connection name can be selected as the connection-id argument from a dropdown in the UI. | When using a connector, the connection-id must be explicitly passed as an argument. You can view the connection-id of your connections by going to the Connections Management page. |
| Source and sink functions have implied from and into functions respectively. | Source and sink functions require explicit from and into functions. |

Toggling between the SPL View and the Canvas View (BETA)

The toggle button is currently a beta feature. Repeated toggles between the Canvas View and the SPL View may produce unexpected results. The following table describes specific use cases where unexpected results have been observed.

If you are editing an active pipeline, toggling between the Canvas and the SPL views can lead to data loss as the pipeline state is unable to be restored. Do not use the pipeline toggle if you are editing an active pipeline.

| Pipeline contains... | Behavior |
|---|---|
| Variable assignments | Variable names are changed and statements may be reordered. |
| Renamed function names | Functions are reverted back to their default names. |
| Comments | Comments are stripped out when toggling between builders. |
| A stats or aggregate with trigger function that uses an evaluation scalar function within the aggregation function. | Each function is called separately when toggling from the SPL2 builder to the Canvas builder. For example, if your stats function contained the if scalar function as part of the aggregation function count(if(status="404", true, null)) AS status_code, the Canvas Builder assumes that you are calling two different aggregation functions: count(if(status="404") and if(status="404") AS status_code both of which are invalid. |

Additional resources

- See the Function Reference for a list of available functions and examples of how to use those functions in SPL2.

- See the SPL2 Search Manual to learn more about SPL2.

Even though the Data Stream Processor supports SPL2 statements, there are major differences in terminology, syntax, and supported functions. For more information, see SPL2 for DSP.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.3.0, 1.3.1, 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
