Using multi-GPU computing for highly parallel processing

Use the multi-GPU computing option for highly parallel workloads, such as training deep neural network models. You can use GPU infrastructure if you use NVIDIA GPUs and have the required hardware in place. For more information on NVIDIA GPU management and deployment, see https://docs.nvidia.com/deploy/index.html.

The Splunk App for Data Science and Deep Learning (DSDL) can start containers with GPU resource flags added, so that the container either runs through NVIDIA-docker or has GPU resources from a Kubernetes cluster attached to it.

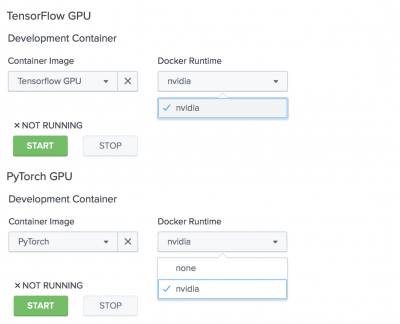

To start your development or production container with GPU support, you must select NVIDIA as the runtime for your chosen image. From the Configurations > Containers dashboard, you can set up the runtime for each container you run.

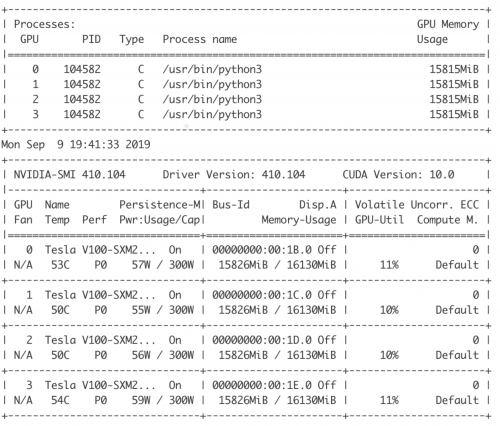
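After a container starts with the NVIDIA runtime, one way to confirm that the GPUs are visible inside it is to query `nvidia-smi` from a notebook cell or terminal. The following is a minimal sketch and not part of DSDL: the helper names `parse_gpu_csv` and `list_gpus` are hypothetical, and the sketch assumes that `nvidia-smi` is on the container's `PATH`. If the tool is missing or fails, it reports no GPUs instead of raising an error.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader` output into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) != 3:
            continue  # skip lines that are not "index, name, memory"
        index, name, memory = fields
        gpus.append({"index": int(index), "name": name, "memory": memory})
    return gpus


def list_gpus():
    """Return the GPUs visible to this process, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=index,name,memory.total",
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    visible = list_gpus()
    if not visible:
        print("No GPUs visible; check the runtime selected for this container.")
    for gpu in visible:
        print(f"GPU {gpu['index']}: {gpu['name']} ({gpu['memory']})")
```

An empty result in a container that should have GPU access usually points back to the runtime setting for that container rather than to the model code.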

The following image shows an example console leveraging four GPU devices for model training.

If you want to use multi-GPU computing, review the strategies provided for your chosen framework:

- For Distributed Training with TensorFlow, see https://www.tensorflow.org/guide/distributed_training

- For Using multiple GPUs with TensorFlow, see https://www.tensorflow.org/guide/gpu#using_multiple_gpus

- For Using multiple GPUs with PyTorch, see https://pytorch.org/tutorials/beginner/former_torchies/parallelism_tutorial.html

- For Data Parallelism with PyTorch, see https://pytorch.org/tutorials/beginner/blitz/data_parallel_tutorial.html
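Most of the strategies linked above are forms of synchronous data parallelism: each GPU receives a slice of the batch, computes gradients on its slice, and the gradients are combined before the weights are updated. The following plain-Python sketch illustrates only that idea. It does not use a GPU or either framework, and the function names and the toy one-parameter model are assumptions made for illustration. In practice, classes such as `tf.distribute.MirroredStrategy` or `torch.nn.DataParallel` handle these steps for you.

```python
def shard(batch, num_devices):
    """Split a batch into num_devices contiguous, near-equal shards."""
    size, extra = divmod(len(batch), num_devices)
    shards, start = [], 0
    for i in range(num_devices):
        end = start + size + (1 if i < extra else 0)
        shards.append(batch[start:end])
        start = end
    return shards


def gradient_sum(w, examples):
    """Sum of d/dw (w*x - y)^2 over the examples held by one simulated device."""
    return sum(2 * (w * x - y) * x for x, y in examples)


def data_parallel_step(w, batch, num_devices, lr=0.01):
    """One synchronous step: shard, compute per-device gradients, combine, update."""
    partial = [gradient_sum(w, s) for s in shard(batch, num_devices)]
    grad = sum(partial) / len(batch)  # combined mean gradient over the full batch
    return w - lr * grad


if __name__ == "__main__":
    # Toy data for y = 3x; four simulated devices share each batch of eight.
    data = [(float(x), 3.0 * x) for x in range(1, 9)]
    w = 0.0
    for _ in range(200):
        w = data_parallel_step(w, data, num_devices=4)
    print(f"learned w = {w:.4f}")
```

Because the per-device gradients are combined into the same mean gradient that a single device would compute on the full batch, adding devices changes how the work is divided, not the update itself.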


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0, 5.2.1
