Use Standalone LLM

You can use Standalone LLM through a set of dashboards. The following processes are covered:

- Download LLM models
- Inference with LLM

All the dashboards are powered by the fit command. The dashboards showcase Standalone LLM functionalities. You are not limited to the options provided on the dashboards. You can tune the parameters on each dashboard, or embed a scheduled search that runs automatically.

Download LLM models

If you want to use local LLMs from Ollama, complete the following steps:

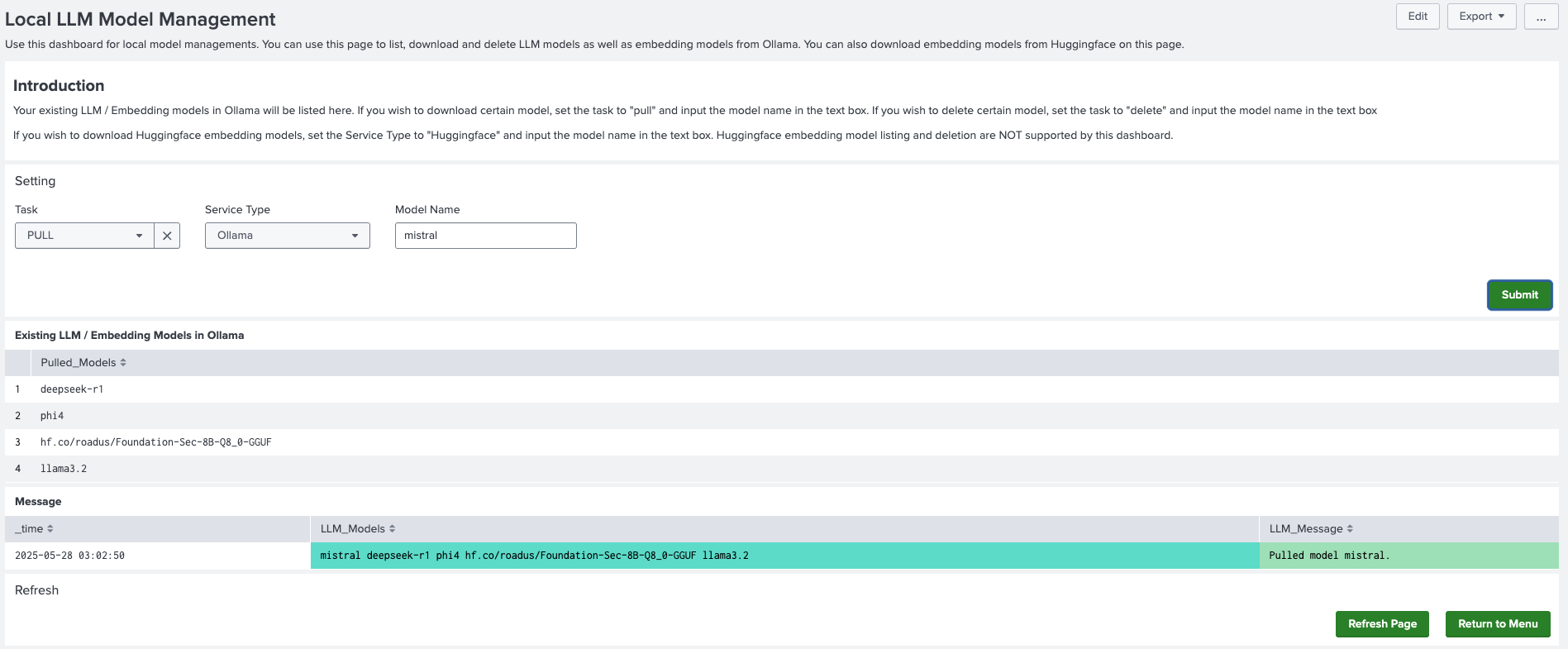

- In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Querying LLM, and select Local LLM and Embedding Management.

- On the settings panel, select PULL from the Task drop-down menu and Ollama from Service Type, as shown in the following image:

- In the Model Name field, enter the name of the LLM you want to download. For model name references, see the Ollama library page at https://ollama.com/library.

- Select Submit to start downloading. You see an on-screen confirmation after the download completes.

- Confirm your download by selecting Refresh Page. Make sure that the LLM model name shows on the Existing LLM models panel.
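For readers who want to see what a pull-then-verify cycle looks like outside the dashboard, the following Python sketch talks to Ollama's REST API directly (`POST /api/pull` to download, `GET /api/tags` to list local models). It is an illustration under stated assumptions, not a description of how DSDL works internally: the base URL is Ollama's default and might differ in your container environment, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama listens on its default address; adjust for your deployment.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model_name: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /api/pull request for model_name."""
    body = json.dumps({"model": model_name, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def model_is_installed(tags_response: dict, model_name: str) -> bool:
    """Check a GET /api/tags response for model_name, defaulting to the ':latest' tag."""
    wanted = model_name if ":" in model_name else f"{model_name}:latest"
    return any(m.get("name") == wanted for m in tags_response.get("models", []))


def pull_and_verify(model_name: str, base_url: str = OLLAMA_URL) -> bool:
    """Pull a model, then confirm that it appears in the local model list."""
    with urllib.request.urlopen(build_pull_request(model_name, base_url)) as resp:
        resp.read()  # with stream=False, Ollama sends a single reply when the pull ends
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return model_is_installed(json.load(resp), model_name)
```

Calling `pull_and_verify("llama3")` against a running Ollama instance mirrors the Submit and Refresh Page steps: it returns `True` only when the model name shows up in the local model list.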

Inference with LLM

Complete the following steps:

- In DSDL, navigate to Assistants, then LLM-RAG, then Querying LLM, and select Standalone LLM.

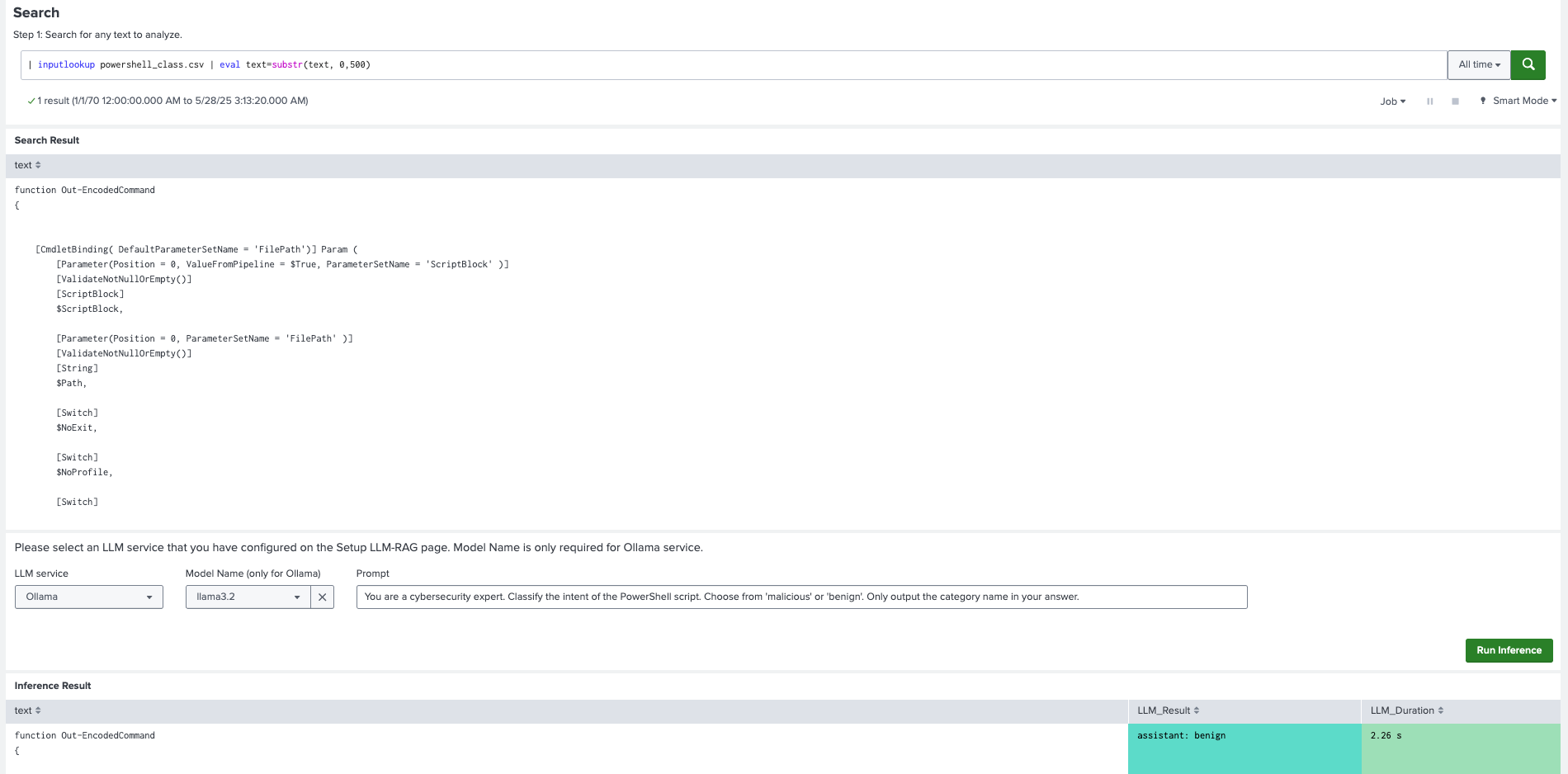

- The dashboard provides a search bar for running inference and natural language processing (NLP) tasks on the text data stored in Splunk. Use the `rename` command in combination with `as text` to search the text data stored in the Splunk platform. For example, appending `| rename _raw as text` to a search places the raw event text in a field named text.

If you do not want to use text data, leave the default search command empty or tune the prompt with the search command.

- After inferencing completes, a panel for inference settings is available. On this panel you can choose LLM models and input your search.

Examples

The following example uses text data and a Standalone LLM search:

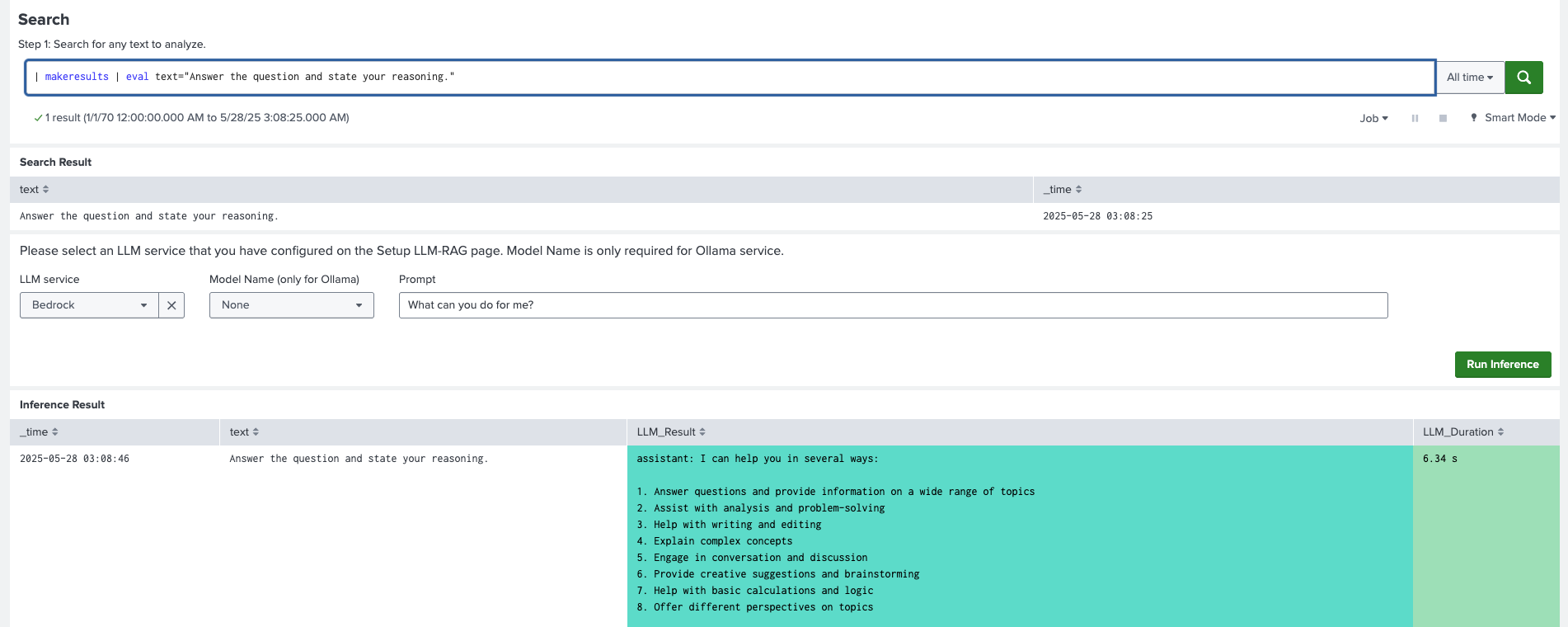

The following example uses a one-shot prompt:
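A one-shot prompt pairs a single worked example with the new input, so the model can infer the expected output format. The following Python sketch shows one way to assemble such a prompt string. The function name, the `Input:`/`Output:` labels, and the sample texts are hypothetical and chosen only for illustration; they are not part of DSDL.

```python
def build_one_shot_prompt(instruction: str, example_input: str,
                          example_output: str, text: str) -> str:
    """Return a prompt with exactly one worked example before the new input."""
    return (
        f"{instruction}\n\n"
        f"Input: {example_input}\n"
        f"Output: {example_output}\n\n"
        f"Input: {text}\n"
        "Output:"
    )


# Hypothetical sample data: one labeled example, then the text to classify.
prompt = build_one_shot_prompt(
    instruction="Classify the sentiment of each log message as positive or negative.",
    example_input="Backup completed successfully.",
    example_output="positive",
    text="Disk failure detected on node 3.",
)
print(prompt)
```

The prompt ends with an open `Output:` label so that the model's completion is the answer for the final input.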


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.1
