Send data to multiple destinations in a pipeline

With the Data Stream Processor, you can send your data to multiple destinations in a single pipeline.

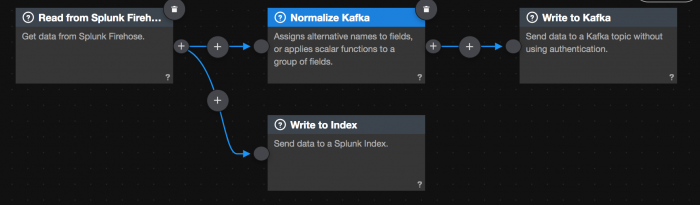

For example, in the pipeline shown above, all of the data from the Splunk Firehose data source passes to both branches of the pipeline for different types of processing. On one branch, data moves from the Splunk Firehose data source, is normalized to match the Kafka schema, and is then sent to Kafka as a destination. On the other branch, data moves from the Splunk Firehose data source directly into a Splunk Enterprise index.
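The branching behavior described above can be modeled outside the product. The following Python sketch is a conceptual illustration only, not the Data Stream Processor API: `run_branched_pipeline`, `normalize_for_kafka`, the record fields, and the branch names are all hypothetical. It shows that every record from the source reaches every branch, and that each branch processes its own copy independently.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]


def normalize_for_kafka(record: Record) -> Record:
    # Hypothetical normalization: reshape the record into key/value fields.
    # The actual Kafka schema expected by the product may differ.
    return {"key": record.get("source"), "value": record.get("body")}


def run_branched_pipeline(
    source: Iterable[Record],
    branches: Dict[str, Callable[[Record], Record]],
) -> Dict[str, List[Record]]:
    # Collect the output of each branch separately.
    outputs: Dict[str, List[Record]] = {name: [] for name in branches}
    for record in source:
        # Every branch receives its own copy of the same upstream record.
        for name, transform in branches.items():
            outputs[name].append(transform(dict(record)))
    return outputs


# Stand-in for data arriving from the upstream source function.
firehose = [
    {"source": "syslog", "body": "disk full"},
    {"source": "nginx", "body": "GET /index.html 200"},
]

results = run_branched_pipeline(
    firehose,
    {
        "kafka": normalize_for_kafka,     # branch 1: normalize, then send
        "splunk_index": lambda r: r,      # branch 2: pass through unchanged
    },
)
```

In this sketch both branches see both records: `results["kafka"]` holds the normalized copies, and `results["splunk_index"]` holds the records unchanged.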

The following steps assume that you want to add a branch to a pre-existing pipeline.

- From the Data Pipelines Canvas view, click the + icon to the immediate right of the function that you want to branch from.

- Select a new function to add to your pipeline. This function is added on a separate branch of your pipeline.

  When you branch a pipeline, the upstream function sends the same data to all of its downstream functions. For example, in the screenshot above, the same data flows from the upstream Splunk Firehose function to the Normalize Kafka function and the Write to Index function.

- Fill out the desired configurations for your function.

- Click Start Preview to verify that your pipeline is valid and data is flowing as expected.

- Save and activate your pipeline. If this is the first time you are activating your pipeline, do not select any of the activation options.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.1.0
