Create a pipeline with multiple data sources

You can send data from multiple data sources in a single pipeline.

- From the Build Pipeline page, select a data source.

- From the Canvas view of your pipeline, click the + icon, and add a union function to your pipeline.

- In the Union function configuration panel, click Add a new function.

- Add a second source function to your pipeline.

- (Optional) Match the schemas of your data streams. Two data streams must have the same schema before you can union them, so if your data streams don't have the same schema, use the select streaming function to match them.

- Continue building your pipeline by clicking the + icon to the immediate right of the union function.
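
The schema requirement in the steps above can be modeled outside DSP. The following Python sketch is a conceptual illustration only, not DSP or SPL2 code. It treats each data stream as a list of dictionaries and treats a record's schema as its set of field names (DSP schemas also carry field types, which this sketch ignores). The `select` and `union` helpers are hypothetical stand-ins that show why a stream with different field names must be renamed before two streams can be merged. A real union interleaves records as they arrive; this sketch simply concatenates them.

```python
from typing import Any, Dict, Iterable, List, Optional

Record = Dict[str, Any]


def select(records: Iterable[Record], mapping: Dict[str, str]) -> List[Record]:
    """Hypothetical stand-in for a select step: build each output record from
    `mapping`, which maps output field name -> input field name."""
    return [{out: rec[src] for out, src in mapping.items()} for rec in records]


def union(*streams: Iterable[Record]) -> List[Record]:
    """Hypothetical stand-in for a union step: merge streams into one list,
    rejecting any record whose field names differ from the first record's."""
    merged: List[Record] = []
    expected: Optional[frozenset] = None
    for stream in streams:
        for rec in stream:
            fields = frozenset(rec)  # the record's field names
            if expected is None:
                expected = fields
            elif fields != expected:
                raise ValueError(
                    f"schema mismatch: {sorted(fields)} vs {sorted(expected)}"
                )
            merged.append(rec)
    return merged


# Two illustrative streams whose field names do not match.
web = [{"host": "web-1", "msg": "ok"}]
db = [{"hostname": "db-1", "message": "slow query"}]

# Rename the second stream's fields so both streams share one schema, then merge.
merged = union(web, select(db, {"host": "hostname", "msg": "message"}))
print(merged)
# [{'host': 'web-1', 'msg': 'ok'}, {'host': 'db-1', 'msg': 'slow query'}]
```

Calling `union(web, db)` without the `select` step raises `ValueError`, which mirrors the requirement that both streams share a schema before they are unioned.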

Create a pipeline with two data sources: Kafka and Read from Splunk Firehose

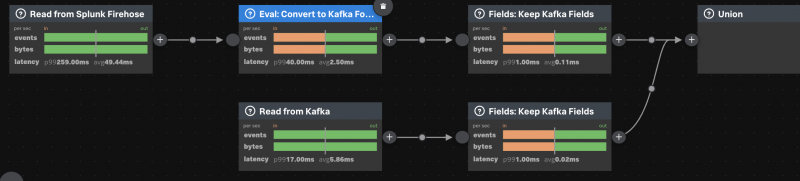

In this example, create a pipeline with two data sources, Kafka and Read from Splunk Firehose, and union the two data streams by normalizing them to fit the expected Kafka schema.

The following screenshot shows two data streams from two different data sources being unioned together into one data stream in a pipeline.

Prerequisites

Steps

- From the Build Pipeline page, select the Read from Splunk Firehose data source.

- From the Canvas view of your pipeline, add a union function to your pipeline.

- In the Union function configuration panel, click Add new function.

- Select the Read from Kafka source function, and provide your connection and topic names.

- Normalize the schemas to match. Hover over the circle between the Read from Splunk Firehose and Union functions, click the + icon, and add an Eval function.

- In the Eval function, type the following SPL2 expression. It converts the DSP event schema to the expected Kafka schema:

  value=to_bytes(cast(body, "string")), topic=source_type, key=to_bytes(time())

- Hover over the circle between the Eval and Union functions, click the + icon, and add a Fields function.

- Because the Kafka record schema contains value, topic, and key fields, drop the other fields in your record. In the Fields function, type value, click + Add, type topic, click + Add, and then type key.

- Now, normalize the other data stream. Hover over the circle between the Read from Kafka and Union functions, click the + icon, and add another Fields function.

- In the Fields function, type value, click + Add, type topic, click + Add, and then type key.

- Validate your pipeline.
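
The Eval and Fields steps above can be modeled the same way. The following Python sketch is a conceptual illustration, not DSP code, and its helper names are hypothetical. It mirrors the SPL2 expression by writing `body` (as a string) into `value` as bytes, `source_type` into `topic`, and a timestamp into `key`, then keeps only `value`, `topic`, and `key` on both branches. Several details are assumptions: the sketch encodes strings as UTF-8 and the timestamp as its decimal digits, which may not match how DSP's `to_bytes` serializes values; it treats `time()` as epoch milliseconds; and the sample records, including the `partition` and `offset` fields on the Kafka record, are illustrative only.

```python
import time
from typing import Any, Dict, Iterable, List, Optional, Sequence

Record = Dict[str, Any]

# Fields in the expected Kafka record schema, as listed in the steps above.
KAFKA_FIELDS = ("value", "topic", "key")


def eval_to_kafka(event: Record, now_ms: Optional[int] = None) -> Record:
    """Hypothetical model of the Eval step:
    value=to_bytes(cast(body, "string")), topic=source_type, key=to_bytes(time())
    Like Eval, it adds fields and leaves the existing ones in place."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)  # assumed: epoch milliseconds
    out = dict(event)
    out["value"] = str(event["body"]).encode("utf-8")
    out["topic"] = event["source_type"]
    # Assumption: the timestamp is encoded as UTF-8 decimal digits.
    out["key"] = str(now_ms).encode("utf-8")
    return out


def keep_fields(records: Iterable[Record],
                names: Sequence[str] = KAFKA_FIELDS) -> List[Record]:
    """Hypothetical model of the Fields step: keep only the listed fields."""
    return [{name: rec[name] for name in names} for rec in records]


# Illustrative records; real DSP events and Kafka records carry more fields.
firehose_events = [
    {"body": "GET /index.html 200", "source_type": "access_combined", "host": "web-1"}
]
kafka_records = [
    {"key": b"order-1", "value": b"payload", "topic": "orders", "partition": 0, "offset": 42}
]

# Firehose branch: Eval, then Fields. Kafka branch: Fields only.
firehose_norm = keep_fields(eval_to_kafka(e, now_ms=1600000000000) for e in firehose_events)
kafka_norm = keep_fields(kafka_records)

# Both branches now have the same field names, so they can be unioned.
assert {frozenset(r) for r in firehose_norm + kafka_norm} == {frozenset(KAFKA_FIELDS)}
print(firehose_norm + kafka_norm)
```

The sketch passes a fixed `now_ms` only so that its output is repeatable; the Eval step itself draws the key from the current time rather than from the event's own timestamp.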

You now have a pipeline that reads from two data sources, Read from Kafka and Read from Splunk Firehose, and merges the data from both sources into one data stream.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.1.0
