Formatting event data in DSP

To use the Splunk Enterprise sink functions, your records must be transformed into a JSON payload that is compatible with the HTTP Event Collector (HEC). Each record must have at least one of the following:

- a non-empty body field
- at least one valid entry in the attributes map, and you've chosen to map attributes to HEC JSON (see the diagrams below)
- at least one valid, non-empty top-level field that is not part of the DSP events schema

If none of the above are true, then your records are dropped by the sink functions.
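The three conditions above can be expressed as a single predicate. The following Python sketch is illustrative only and is not how the sink functions are implemented: it models a DSP record as a plain dict, takes the DSP events schema fields from the field table in this topic, and assumes that a "valid" attributes entry is one whose key doesn't start with an underscore and whose value is non-empty. The names is_indexable and DSP_SCHEMA_FIELDS are hypothetical.

```python
# Assumption: DSP events schema fields, taken from the field table in this topic.
DSP_SCHEMA_FIELDS = {
    "body", "sourcetype", "source_type", "timestamp", "source",
    "host", "attributes", "id", "kind", "nanos",
}


def _non_empty(value):
    """Treat None and zero-length strings, bytes, maps, and lists as empty."""
    if value is None:
        return False
    if isinstance(value, (str, bytes, bytearray, dict, list)):
        return len(value) > 0
    return True


def is_indexable(record, map_attributes):
    """Return True if the record meets at least one of the three conditions."""
    # 1. A non-empty body field (boolean bodies are not valid bodies).
    body = record.get("body")
    if not isinstance(body, bool) and _non_empty(body):
        return True
    # 2. At least one valid attributes entry, but only if attributes are mapped to HEC JSON.
    attributes = record.get("attributes") or {}
    if map_attributes and any(
        not key.startswith("_") and _non_empty(value)
        for key, value in attributes.items()
    ):
        return True
    # 3. At least one non-empty custom top-level field outside the DSP events schema.
    return any(
        key not in DSP_SCHEMA_FIELDS and not key.startswith("_") and _non_empty(value)
        for key, value in record.items()
    )
```

For example, a record whose only content is an attributes entry passes the check when map_attributes is true and fails it when map_attributes is false, which corresponds to the case where the sink functions drop the record.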

Because you can add custom top-level fields to your event schema in DSP, and DSP supports records of varying schemas, your data can end up formatted in a way that is incompatible with Splunk HEC. Use the following diagrams and examples as a guide for formatting your data so that it is indexed appropriately.

Top-level fields and attribute entries that start with an underscore (for example, _index) are ignored when creating HEC event JSON.

Formatting data using the Write to the Splunk platform with Batching or the To Splunk JSON functions

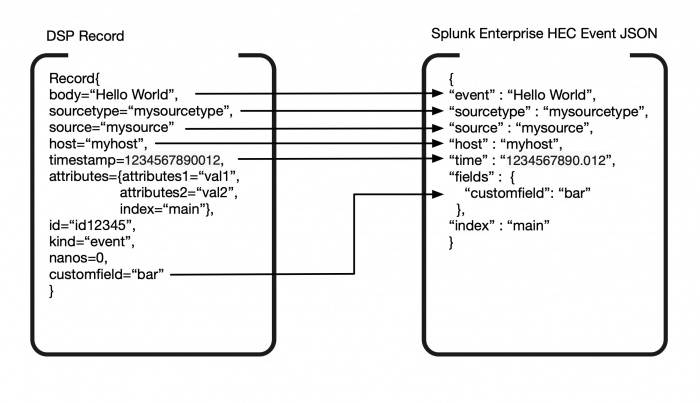

The following diagram shows how your records are transformed into HEC event JSON in two different scenarios:

- If you are using the Write to the Splunk platform with Batching function.
- If you are using the To Splunk JSON function with keep_attributes=false and the Write to the Splunk platform function.

Even though the attributes field is dropped, both the Write to the Splunk platform with Batching and the To Splunk JSON functions have an index argument that allows the index key to be properly extracted. The value of the index key determines which index your data is sent to.

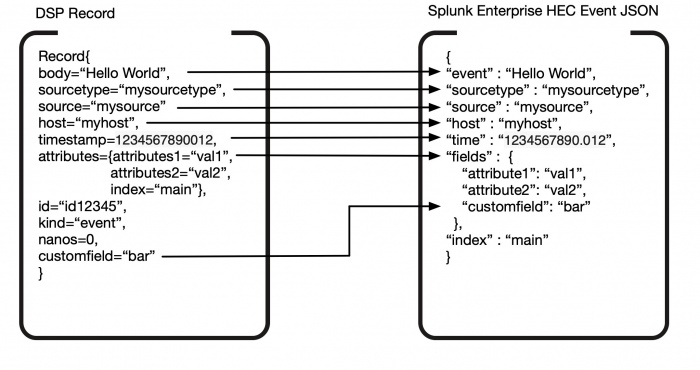

If your pipeline contains the standalone To Splunk JSON function and you've set keep_attributes=true, then your records are transformed to the following HEC event JSON.

| DSP event field | HEC event JSON | Type | Notes |
|---|---|---|---|
| body | event | any, except boolean | The "raw" event indexed in Splunk Enterprise. As a best practice, include a valid, non-empty body field in your DSP record. If your records are missing the body field, have a body of type boolean, or have an empty body value, then: |
| sourcetype or source_type | sourcetype | string | If not present, no sourcetype is included in the HEC event JSON, and Splunk Enterprise uses the default sourcetype httpevent. If both the sourcetype and source_type fields are present, the sourcetype field is used unless it is empty or null. |
| timestamp | time | long integer | The Data Stream Processor uses Unix epoch time in milliseconds. Your timestamp is automatically converted to the Splunk epoch time format <sec>.<ms>. If the timestamp is blank or negative, time is set to now. There is currently a known issue where timestamps that are blank or negative are indexed into the Splunk platform with a |
| source | source | string | If not present, Splunk HEC uses the default http:test source. |
| host | host | string | The host value to assign to the event data. This is typically the hostname of the client from which you're sending data. |
| attributes | fields | map<string, any> | The Write to the Splunk platform with Batching and To Splunk JSON functions drop the attributes field by default. The To Splunk JSON function has an optional argument, keep_attributes, that, when set to true, maps the DSP attributes map directly into the HEC event JSON fields object. These fields are available as index-extracted fields in Splunk Enterprise. The following entries are not included in fields: any key that starts with an underscore (for example, _time) and any of the Splunk HEC metadata fields. |
| id | N/A | string | A DSP event field ignored by HEC. |
| kind | N/A | string | A DSP event field ignored by HEC. |
| nanos | N/A | integer | A DSP event field ignored by HEC. |
| any custom fields | fields | any | All custom top-level fields that are not part of the DSP events schema, that don't start with an underscore, and that have a non-empty value are mapped to the HEC fields JSON object for index extraction. |
| N/A | index | string | To set the index in HEC event JSON, you must pass the index name as an argument in the Write to Splunk Enterprise or Write to Index functions. If no index is selected, your data is sent to the default index associated with your HEC token. |
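The mappings in this table can be summarized in one function. The following Python sketch is a simplified model and not the DSP implementation: it represents a DSP record as a dict, follows the keep_attributes=true behavior described above, and makes assumptions the table doesn't spell out. Specifically, it assumes that a boolean body is handled like an empty one, that time, host, source, sourcetype, and index are the HEC metadata keys excluded from fields, and that the index argument is a plain string. The names to_hec_event, DSP_SCHEMA_FIELDS, and HEC_METADATA_KEYS are hypothetical, and nested attribute maps are not flattened here.

```python
import base64
import time

# Assumption: DSP events schema fields, taken from the table above.
DSP_SCHEMA_FIELDS = {"body", "sourcetype", "source_type", "timestamp", "source",
                     "host", "attributes", "id", "kind", "nanos"}
# Assumption: keys treated as HEC metadata and therefore never copied into fields.
HEC_METADATA_KEYS = {"time", "host", "source", "sourcetype", "index"}


def _splunk_time(epoch_ms):
    """Format epoch milliseconds as the Splunk <sec>.<ms> string."""
    seconds, millis = divmod(epoch_ms, 1000)
    return f"{seconds}.{millis:03d}"


def _is_empty(value):
    """Treat None and zero-length strings, bytes, maps, and lists as empty."""
    return value is None or (
        isinstance(value, (str, bytes, bytearray, dict, list)) and len(value) == 0
    )


def to_hec_event(record, keep_attributes=True, index=None):
    """Map a DSP record (modeled as a dict) to a HEC event JSON dict."""
    hec, fields = {}, {}
    now = _splunk_time(int(time.time() * 1000))

    # body -> event. Missing, empty, or (assumed) boolean bodies become {}.
    body = record.get("body")
    if isinstance(body, bool) or _is_empty(body):
        hec["event"] = {}
    elif isinstance(body, (bytes, bytearray)):
        hec["event"] = base64.b64encode(body).decode("ascii")
    else:
        hec["event"] = body

    # sourcetype is preferred over source_type unless it is empty or null.
    sourcetype = record.get("sourcetype") or record.get("source_type")
    if sourcetype:
        hec["sourcetype"] = sourcetype

    # timestamp -> time. Non-long timestamps are kept in fields and time is set to now.
    ts = record.get("timestamp")
    if isinstance(ts, int) and not isinstance(ts, bool):
        hec["time"] = _splunk_time(ts) if ts >= 0 else now
    else:
        hec["time"] = now
        if not _is_empty(ts):
            fields["timestamp"] = ts

    # source and host are copied only when present and non-empty.
    for key in ("source", "host"):
        if not _is_empty(record.get(key)):
            hec[key] = record[key]

    # attributes -> fields, only when keep_attributes is true.
    if keep_attributes:
        for key, value in (record.get("attributes") or {}).items():
            if not key.startswith("_") and key not in HEC_METADATA_KEYS:
                fields[key] = value

    # Custom top-level fields -> fields (a top-level index field is skipped).
    for key, value in record.items():
        if (key not in DSP_SCHEMA_FIELDS and key not in HEC_METADATA_KEYS
                and not key.startswith("_") and not _is_empty(value)):
            fields[key] = value

    if fields:
        hec["fields"] = fields
    # index comes from the function argument, not from the record itself.
    if index:
        hec["index"] = index
    return hec
```

Under these assumptions, passing the record from Example 2 below to to_hec_event returns a dict equivalent to the HEC event JSON shown in that example.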

Formatting data without the Write to the Splunk platform with Batching or the To Splunk JSON functions

If your pipeline doesn't include a To Splunk JSON function and doesn't use Write to the Splunk platform with Batching as its sink function, use the following examples as a guide for how to format your data so that it is indexed appropriately.

Including a To Splunk JSON function or using Write to the Splunk platform with Batching as the sink function in your pipeline is still highly recommended. Using the Write to the Splunk platform or the Write to the Splunk platform (Default for Environment) sink functions without the To Splunk JSON function is deprecated in DSP 1.1.0. These sink functions no longer support formatting your DSP records into HEC JSON.

Example 1: The event has a non-empty body field

DSP Event:

Event{body="mybody", host="myhost", attributes=null, source_type="mysourcetype", id="id12345", source=null, timestamp=1234567890012}

HEC event JSON:

{"event":"mybody", "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012"}

Explanation: The value of the DSP event's body field becomes the event field of the HEC event JSON, which is the data that gets indexed. The timestamp value is converted to the Splunk epoch time format and written as a string.
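The millisecond-to-<sec>.<ms> conversion in this example can be sketched with integer arithmetic. This Python sketch uses a hypothetical function name, to_splunk_time, and assumes the caller has already handled blank or negative timestamps.

```python
def to_splunk_time(epoch_ms: int) -> str:
    """Convert DSP epoch milliseconds to the Splunk <sec>.<ms> string format.

    divmod keeps exactly three millisecond digits. Plain float division would
    drop trailing zeros (1234567890010 / 1000 prints as 1234567890.01).
    Blank or negative timestamps are assumed to be handled by the caller.
    """
    seconds, millis = divmod(epoch_ms, 1000)
    # Zero-pad the milliseconds so 12 ms becomes ".012", not ".12".
    return f"{seconds}.{millis:03d}"


print(to_splunk_time(1234567890012))  # 1234567890.012
print(to_splunk_time(1234))           # 1.234
```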

Example 2: The event has both body and attributes fields

DSP Event:

Event{body="mybody", host="myhost", attributes={attr1="val1", attr2="val2"}, source_type="mysourcetype", id="id12345", source=null, timestamp=1234567890012}

HEC event JSON:

{"event":"mybody", "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012", "fields":{"attr1":"val1", "attr2":"val2"}}

Explanation: DSP attributes are mapped to HEC event JSON fields, a catch-all for additional metadata in the HEC event.

Example 3: The event has attributes, an empty body, and a custom field.

DSP Event:

Event{host="myhost", attributes={level="INFO", category=["prod", "test"]}, source_type="mysourcetype", id="id12345", source=null, body="", timestamp=1234567890012, newfield="newval"}

HEC event JSON:

{"event":{}, "fields": {"level":"INFO","category":["prod","test"],"newfield":"newval"}, "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012"}

Explanation: The Splunk Enterprise HEC endpoint doesn't support empty event fields, so the empty body is converted to an empty JSON object. The entries in attributes and the custom field newfield are added to the fields object. You can search for index-extracted fields in Splunk Enterprise as follows:

search newfield::newval

Example 4: The event only contains custom top-level fields

DSP Event:

Event{value=12345, newfield="INFO"}

HEC event JSON:

{"event":{}, "fields": {"value":12345,"newfield":"INFO"}, "time":"1567112419.503"}

Explanation: The Splunk Enterprise HEC endpoint doesn't support empty event fields, so the missing body is converted to an empty JSON object. Custom top-level fields are mapped into the fields part of the HEC event JSON. Because the event has no timestamp, a timestamp of "now" is added. The entries in fields become index-extracted fields that you can search in Splunk Enterprise as follows:

search value::12345 AND newfield::INFO

Example 5: The event contains a map object in body

DSP Event:

Event{body={key1="value1", foo="bar"}, kind="event", host="myhost", source_type="mysourcetype", id="id12345", source=null, timestamp=1234567890012, attributes={attr1="val1", attr2="val2"}}

HEC event JSON:

{"event":{"key1":"value1","foo":"bar"}, "fields":{"attr1":"val1","attr2":"val2"}, "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012"}

Explanation: The DSP body field can be of any data type except boolean, and it is mapped to its corresponding JSON representation in the event field of the HEC event JSON.

Example 6: The event's attributes field is a nested map

DSP Event:

Event{nanos=null, kind=null, host="myhost", attributes={foo={bar="baz"}}, source_type="mysourcetype", id="id12345", source=null, body="mybody", timestamp=1234567890012}

HEC event JSON:

{"event":"mybody", "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012", "fields":{"foo.bar":"baz"}}

Explanation: When the attributes map in your DSP event contains a nested map, the nested entries are flattened into the fields object of the HEC event JSON using composite keys, such as foo.bar.
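The flattening in this example can be sketched as a recursive walk that joins nested keys with a dot. This Python sketch is an assumption based on the single level of nesting shown here; it does not cover how DSP handles deeper nesting, lists of maps, or keys that already contain dots. The function name flatten_attributes is hypothetical.

```python
def flatten_attributes(attributes, prefix=""):
    """Flatten nested maps into composite, dot-separated keys.

    Non-map values (strings, numbers, lists) are copied unchanged.
    """
    flat = {}
    for key, value in attributes.items():
        composite_key = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into the nested map, using the composite key as the new prefix.
            flat.update(flatten_attributes(value, composite_key))
        else:
            flat[composite_key] = value
    return flat


print(flatten_attributes({"foo": {"bar": "baz"}}))  # {'foo.bar': 'baz'}
```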

Example 7: The event schema contains index as a top-level field.

DSP Event:

Event{myint=13, body="mybody", index="index123", host="myhost", sourcetype="mysourcetype", attributes={attr1="val1"}, timestamp=1234567890012, id="id12345"}

HEC event JSON:

{"event":"mybody", "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012", "fields":{"myint":13, "attr1":"val1"}, "index":"index123"}

{"event":"mybody", "sourcetype":"mysourcetype", "host":"myhost", "time":"1234567890.012", "fields":{"myint":13, "attr1":"val1"}, "index":"index-from-function-arg"}

Explanation: Because index is not part of the DSP event schema, the Data Stream Processor treats it as a custom top-level field. Unlike other custom top-level fields, however, it is ignored entirely when creating the HEC event JSON and is not copied into fields either. Instead, you must pass the index as an argument to the Write to Splunk Enterprise or Write to Index functions.

In the first HEC event JSON example, the expression get("index") was passed as the function argument in the Write to Splunk Enterprise or Write to Index functions.

In the second HEC event JSON example, the string literal index-from-function-arg was passed in as the function argument.
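The two variants differ only in whether the index argument is evaluated for each record or is a constant. The following Python sketch models that difference with a hypothetical resolve_index helper, where a callable stands in for an expression such as get("index") and a plain string stands in for a string literal. It is not the DSP function API.

```python
from typing import Any, Callable, Dict, Optional, Union

Record = Dict[str, Any]
IndexArg = Union[str, Callable[[Record], Optional[str]], None]


def resolve_index(record: Record, index_arg: IndexArg) -> Optional[str]:
    """Return the index for the HEC event JSON, or None to use the HEC token's default."""
    if index_arg is None:
        return None  # No index argument: the token's default index applies.
    if callable(index_arg):
        # Evaluated per record, like get("index"); empty results fall back to the default.
        return index_arg(record) or None
    return index_arg  # A string literal applies to every record.


record = {"body": "mybody", "index": "index123"}
print(resolve_index(record, lambda r: r.get("index")))   # index123
print(resolve_index(record, "index-from-function-arg"))  # index-from-function-arg
```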

If you are using batching and you want to route your data to different indexes, see Optimize performance for sending to Splunk Enterprise.

Example 8: The event's timestamp field is a string of numbers.

DSP Event:

Event{sourcetype="mysourcetype", timestamp="123456789012", body="mybody"}

HEC event JSON:

{"event":"mybody","sourcetype":"mysourcetype","time":"1566245454.551", "fields":{"timestamp":"123456789012"}}

Explanation: Data Stream Processor timestamps are of type long and represent milliseconds since the Unix epoch. If your timestamp has some other type, such as string, it is treated as an unknown field and put into the fields JSON object, and the time in the HEC event JSON is set to the event processing time.

Example 9: The event's body is a byte array.

DSP Event:

Event{sourcetype="mysourcetype", body=java.nio.HeapByteBuffer[], timestamp=1234}

HEC event JSON:

{"event":"dGVzdC1ib2R5", "sourcetype":"mysourcetype", "time":"1.234"}

Explanation: If the value of body is a byte array, it is base64-encoded in the HEC event JSON and indexed in Splunk Enterprise in that encoded form ("dGVzdC1ib2R5" is the base64 encoding of body as bytes). Use the to-string function to convert your byte arrays to strings before sending your data to Splunk Enterprise for indexing.
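The effect of converting bytes to a string first can be seen with Python's standard base64 module. This sketch assumes that the byte array in this example holds the bytes of test-body, which is what dGVzdC1ib2R5 decodes to. Decoding with UTF-8 stands in for the to-string conversion and is an assumption about your data's encoding.

```python
import base64

body = b"test-body"  # Assumed contents of the byte array in Example 9.

# Without conversion: the bytes are base64-encoded, and this string is what gets indexed.
encoded_event = base64.b64encode(body).decode("ascii")
print(encoded_event)  # dGVzdC1ib2R5

# With conversion to a string first (UTF-8 assumed), the readable text is indexed instead.
string_event = body.decode("utf-8")
print(string_event)  # test-body
```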


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.1.0
