Deep dive: Create a data ingest anomaly detection dashboard using ML-SPL commands

Your data ingest pipelines can be impacted by traffic spikes, network issues, misconfigurations, and bugs. These issues can cause unexpected downtime and negatively impact your organization. One way to get real-time insight into data ingestion is a Splunk platform dashboard. The data ingest anomaly detection dashboard uses familiar ML-SPL commands like fit and apply to monitor your data ingest volume.

Prerequisites

- You must have the MLTK app installed. MLTK is a free app available on Splunkbase. For detailed installation steps, see Install the Machine Learning Toolkit.

- You must have domain knowledge, SPL knowledge, and Splunk platform experience to make the required inputs and edits to the SPL queries provided.

Create the dashboard

Perform the following high-level steps to create a data ingest anomaly detection dashboard:

- Run an SPL search to fit the model.

- Run an SPL search to apply the model.

- Run an SPL search to calculate Z-scores.

- Create the Ingestion Insights dashboard.

- (Optional) Manually populate dashboard charts with historical data.

- Set up alerts.

Run an SPL search to fit the model

Perform the following steps to run the SPL search to fit the model:

- Open a new search. Apps > Search and Reporting.

- Input the following SPL to the Search field:

| tstats count where index=[insert your index here] sourcetype=[insert your sourcetype here] groupby _time span=1h | eval hour=strftime(_time,"%H") | eval minute=strftime(_time,"%M") | eval weekday=strftime(_time,"%a") | eval weekday_num=case(weekday=="Mon",1,weekday=="Tue",2,weekday=="Wed",3,weekday=="Thu",4,weekday=="Fri",5,weekday=="Sat",6,weekday=="Sun",7) | eval month_day=strftime(_time,"%m-%d") | eval is_holiday=case(month_day=="01-01",1,month_day=="01-18",1,month_day=="05-31",1,month_day=="07-05",1,month_day=="09-06",1,month_day=="11-11",1,month_day=="11-25",1,month_day=="12-24",1,month_day=="12-31",1,1=1,0) | fit RandomForestRegressor count from hour is_holiday weekday weekday_num into regr as predicted

- Replace the [insert your sourcetype here] parameter with the source type you want to monitor, and the [insert your index here] parameter with the corresponding index.

- Add any company/regional holidays in the format of [month_day=="mm-dd",1,] to the is_holiday case parameter. All US holidays are already included.

- Set the date and time range in the Advanced section as from -1h@h to -0h@h.

For the time range, assess against any seasonal patterns in your data feeds. For example, use a longer time range if your data varies month on month (MoM).

- Run the search.

- From the Save As menu, choose Report.

- Select Schedule Report and the Run on Cron Schedule option.

- Set Cron Schedule to 0 * * * *. This encodes "run on the 0th minute of every hour" for your scheduled searches.
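To see what the eval chain in the fit search feeds to the model, the following Python sketch derives the same time features for a single timestamp outside of Splunk. It is only an illustration: the function name time_features and the three-entry SAMPLE_HOLIDAYS set are assumptions made for this example and are not part of MLTK.

```python
from datetime import datetime

# Hypothetical sample of holiday dates in mm-dd form (not the full list).
SAMPLE_HOLIDAYS = {"01-01", "07-05", "11-25"}


def time_features(ts: datetime) -> dict:
    """Mirror the eval steps: hour, weekday, weekday_num, month_day, is_holiday."""
    month_day = ts.strftime("%m-%d")
    return {
        "hour": ts.strftime("%H"),
        "weekday": ts.strftime("%a"),
        # isoweekday() maps Mon=1 ... Sun=7, the same mapping as the case() call.
        "weekday_num": ts.isoweekday(),
        "month_day": month_day,
        # Equivalent to case(month_day=="mm-dd",1, ..., 1=1,0).
        "is_holiday": 1 if month_day in SAMPLE_HOLIDAYS else 0,
    }


if __name__ == "__main__":
    print(time_features(datetime(2021, 7, 5, 14, 0)))
```

Because the holiday check compares only the month and day, a date added for one year also matches the same calendar day in every other year.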

Run an SPL search to apply the model

Perform the following steps to run an SPL search that will apply the model created in the previous steps:

- Open a new search. Apps > Search and Reporting.

- Input the following SPL to the search field:

| tstats count where index=[insert your index here] sourcetype=[insert your sourcetype here] groupby _time span=1h | eval hour=strftime(_time,"%H") | eval minute=strftime(_time,"%M") | eval weekday=strftime(_time,"%a") | eval weekday_num=case(weekday=="Mon",1,weekday=="Tue",2,weekday=="Wed",3,weekday=="Thu",4,weekday=="Fri",5,weekday=="Sat",6,weekday=="Sun",7) | eval month_day=strftime(_time,"%m-%d") | eval is_holiday=case(month_day=="01-01",1,month_day=="01-18",1,month_day=="05-31",1,month_day=="07-05",1,month_day=="09-06",1,month_day=="11-11",1,month_day=="11-25",1,month_day=="12-24",1,month_day=="12-31",1,1=1,0) | apply regr as predicted | eval error=count-predicted | outputlookup anomaly_detection.csv append=true

- Replace the [insert your sourcetype here] parameter with the source type you want to monitor, and the [insert your index here] parameter with the corresponding index.

- Add any company/regional holidays using the format of [month_day=="mm-dd",1,] to the is_holiday case parameter. All US holidays are already included.

- Set the date and time range in the Advanced section as from -1h@h to -0h@h.

- Run the search.

- From the Save As menu, choose Report.

- Select Schedule Report and the Run on Cron Schedule option.

- Set Cron Schedule to 2 * * * *. This encodes "run on the 2nd minute of every hour" for your scheduled searches.
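The apply search writes with append=true, so each run adds rows to anomaly_detection.csv. Running it again by hand over an hour that is already in the lookup would therefore add a second row for that hour. As a rough sketch, assuming the lookup has been exported as CSV text with a _time column, the following Python helper (the name duplicated_hours is hypothetical) lists timestamps that appear more than once.

```python
import csv
import io
from collections import Counter


def duplicated_hours(csv_text: str) -> list:
    """Return the _time values that occur more than once in the CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(row["_time"] for row in reader)
    return sorted(t for t, n in counts.items() if n > 1)


if __name__ == "__main__":
    # Made-up sample rows; the second and third share the same hour.
    sample = (
        "_time,count,predicted,error\n"
        "1625490000,120,118,2\n"
        "1625493600,130,125,5\n"
        "1625493600,130,125,5\n"
    )
    print(duplicated_hours(sample))
```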

Run an SPL search to calculate Z-scores

Perform the following steps to run an SPL search that calculates Z-scores and expected ranges from the model predictions and the actual data:

- Open a new search. Apps > Search and Reporting.

- Input the following SPL to the Search field:

| inputlookup anomaly_detection.csv | eval error_sq=error*error | eval pct_error=abs(error)/count | eventstats avg(error_sq) as mean_error | eventstats stdev(error_sq) as sd_error | eval z_score=(error_sq-mean_error)/sd_error | eval upper_error_bound=predicted+sqrt(3*sd_error+mean_error) | eval lower_error_bound=predicted-sqrt(3*sd_error+mean_error) | outputlookup anomaly_detection.csv

- Run the search.

- From the Save As menu, choose Report.

- Select Schedule Report and the Run on Cron Schedule option.

- Set Cron Schedule to 3 * * * *. This encodes "run on the 3rd minute of every hour" for your scheduled searches.

To learn more about Z-scores, see https://www.statisticshowto.com/probability-and-statistics/z-score/
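To make the arithmetic in the Z-score search concrete, the following Python sketch reproduces the same formulas on made-up numbers. The function name score_rows and the sample data are assumptions for illustration. The sketch uses the sample standard deviation, which is what the SPL stdev function computes.

```python
from math import sqrt
from statistics import mean, stdev


def score_rows(rows: list) -> list:
    """Add error, z_score, and error bounds to rows holding count and predicted."""
    errors_sq = [(r["count"] - r["predicted"]) ** 2 for r in rows]
    mean_error = mean(errors_sq)   # eventstats avg(error_sq)
    sd_error = stdev(errors_sq)    # eventstats stdev(error_sq), sample stdev
    half_width = sqrt(3 * sd_error + mean_error)
    scored = []
    for row, err_sq in zip(rows, errors_sq):
        scored.append({
            **row,
            "error": row["count"] - row["predicted"],
            "z_score": (err_sq - mean_error) / sd_error,
            "upper_error_bound": row["predicted"] + half_width,
            "lower_error_bound": row["predicted"] - half_width,
        })
    return scored


if __name__ == "__main__":
    # 23 ordinary hours that miss the prediction by 5, then one spike.
    rows = [{"count": 105 if i % 2 else 95, "predicted": 100} for i in range(23)]
    rows.append({"count": 300, "predicted": 100})
    last = score_rows(rows)[-1]
    print(round(last["z_score"], 2), round(last["upper_error_bound"], 1))
```

The Z-score is computed on the squared error, so it measures how unusual the size of the miss is and says nothing about direction. The sign of error tells you whether volume was higher or lower than predicted.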

Create the Ingestion Insights dashboard

Perform the following steps to create the dashboard:

- From Apps > Search and Reporting, go to the Dashboards tab.

- Choose the Create New Dashboard button. Give the Dashboard a title and optionally add a description.

- On the resulting Edit dashboard page, toggle to the Source option.

- Paste the following XML into the Source page:

<dashboard theme="dark">
  <label>Ingestion Insights</label>
  <description>Aggregate ingest volume data, and analyze the data to find anomalies.</description>
  <row>
    <panel>
      <title>Anomaly History</title>
      <single>
        <title>Warnings and Alerts</title>
        <search>
          <query>| inputlookup anomaly_detection.csv | eval isWarning=if(3 &lt; z_score AND 6 &gt; z_score, 1, 0) | eval isAlert=if(6 &lt; z_score, 1, 0) | eventstats sum(isWarning) as Warnings sum(isAlert) as Alerts | fields _time, Warnings, Alerts</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
        </search>
        <option name="colorBy">trend</option>
        <option name="drilldown">none</option>
        <option name="height">174</option>
        <option name="numberPrecision">0</option>
        <option name="rangeColors">["0x53a051","0x0877a6","0xf8be34","0xf1813f","0xdc4e41"]</option>
        <option name="refresh.display">progressbar</option>
        <option name="showSparkline">0</option>
        <option name="showTrendIndicator">1</option>
        <option name="trellis.enabled">1</option>
        <option name="trellis.size">large</option>
        <option name="trellis.splitBy">_aggregation</option>
        <option name="trendDisplayMode">absolute</option>
        <option name="trendInterval">-24h</option>
        <option name="unitPosition">after</option>
        <option name="useColors">0</option>
        <option name="useThousandSeparators">0</option>
      </single>
    </panel>
    <panel>
      <title>Percent Prediction Errors</title>
      <single>
        <title>Data is:</title>
        <search>
          <query>| inputlookup anomaly_detection.csv | eval pct_error=round(pct_error*100, 0) | eval high_or_low=if(error&gt;0, "% higher than expected", "% lower than expected") | fields _time, pct_error, high_or_low | strcat pct_error high_or_low error_info | fields error_info</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
        </search>
        <option name="drilldown">none</option>
        <option name="height">158</option>
        <option name="rangeColors">["0x53a051","0x0877a6","0xf8be34","0xf1813f","0xdc4e41"]</option>
        <option name="refresh.display">progressbar</option>
        <option name="showTrendIndicator">0</option>
        <option name="useThousandSeparators">1</option>
      </single>
    </panel>
    <panel>
      <title>Current anomaly score</title>
      <chart>
        <title>Anomaly score from last time point</title>
        <search>
          <query>| inputlookup anomaly_detection.csv | stats latest(z_score) as z_score | eval z_score=if(z_score&gt;0, z_score, 0)</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
          <refresh>5m</refresh>
          <refreshType>delay</refreshType>
        </search>
        <option name="charting.chart">radialGauge</option>
        <option name="charting.chart.rangeValues">[0,3,6,10]</option>
        <option name="charting.chart.style">minimal</option>
        <option name="charting.gaugeColors">["0x53a051","0xf8be34","0xdc4e41"]</option>
        <option name="height">171</option>
        <option name="refresh.display">progressbar</option>
      </chart>
    </panel>
  </row>
  <row>
    <panel>
      <title>Ingest Anomaly Detection</title>
      <viz type="Splunk_ML_Toolkit.OutliersViz">
        <title>Live predictions and observations</title>
        <search>
          <query>| inputlookup anomaly_detection.csv | eval isOutlier=if(count&gt;upper_error_bound OR count&lt;lower_error_bound, 1, 0) | eval "Lower Error Bound"=if(lower_error_bound&lt;1, 1, lower_error_bound) | eval "Upper Error Bound"=upper_error_bound | fields _time, count, "Lower Error Bound", "Upper Error Bound"</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
          <refresh>5m</refresh>
          <refreshType>delay</refreshType>
        </search>
        <search type="annotation">
          <query>| inputlookup anomaly_detection.csv | where(z_score&gt;3) | eval annotation_label=if(z_score&gt;6, if(error &gt; 0, "High Volume Alert", "Low Volume Alert"), if(error &gt; 0, "High Volume Warning", "Low Volume Warning")) | eval annotation_color=if(z_score&gt;6, "#FF0000", "#ffff00")</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
        </search>
        <option name="drilldown">none</option>
      </viz>
    </panel>
  </row>
  <row>
    <panel>
      <title>Recent Large Prediction Errors</title>
      <chart>
        <title>Prediction errors greater than the mean error - last 48 hours</title>
        <search type="annotation">
          <query>| inputlookup anomaly_detection.csv | where(z_score&gt;3) | eval annotation_label=if(z_score&gt;6, if(error &gt; 0, "High Volume Alert", "Low Volume Alert"), if(error &gt; 0, "High Volume Warning", "Low Volume Warning")) | eval annotation_color=if(z_score&gt;6, "#FF0000", "#ffff00")</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
        </search>
        <search>
          <query>| inputlookup anomaly_detection.csv | eval "Z-Score"=if(z_score&lt;0, 0, z_score) | sort - _time | head 48 | fields _time, "Z-Score"</query>
          <earliest>-24h@h</earliest>
          <latest>now</latest>
          <refresh>5m</refresh>
          <refreshType>delay</refreshType>
        </search>
        <option name="charting.axisTitleX.visibility">visible</option>
        <option name="charting.axisTitleY.visibility">visible</option>
        <option name="charting.axisTitleY2.visibility">visible</option>
        <option name="charting.chart">column</option>
        <option name="charting.chart.showDataLabels">none</option>
        <option name="charting.chart.stackMode">default</option>
        <option name="charting.drilldown">all</option>
        <option name="charting.layout.splitSeries">0</option>
        <option name="charting.legend.labelStyle.overflowMode">ellipsisEnd</option>
        <option name="charting.legend.placement">none</option>
        <option name="charting.seriesColors">[0xdc4e41, #ff0000, #ff0000, #ff0000]</option>
        <option name="refresh.display">progressbar</option>
      </chart>
    </panel>
  </row>
</dashboard>

- Click Save.

A page reload is required. Click Refresh in the modal window. The dashboard populates over time, usually within about 24 hours.
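The gauge ranges [0,3,6,10] and the Warnings and Alerts panel both treat a Z-score above 3 as a warning and above 6 as an alert. A minimal Python sketch of that banding follows. The function name severity is hypothetical, and the handling of a score of exactly 6 is a choice made for this example: the panel query uses strict comparisons on both sides and counts that value as neither.

```python
def severity(z_score: float) -> str:
    """Classify a Z-score using the dashboard thresholds of 3 and 6."""
    if z_score > 6:
        return "alert"
    if z_score > 3:
        # A score of exactly 6 lands here in this sketch.
        return "warning"
    return "normal"


if __name__ == "__main__":
    for z in (0.4, 3.5, 7.2):
        print(z, severity(z))
```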

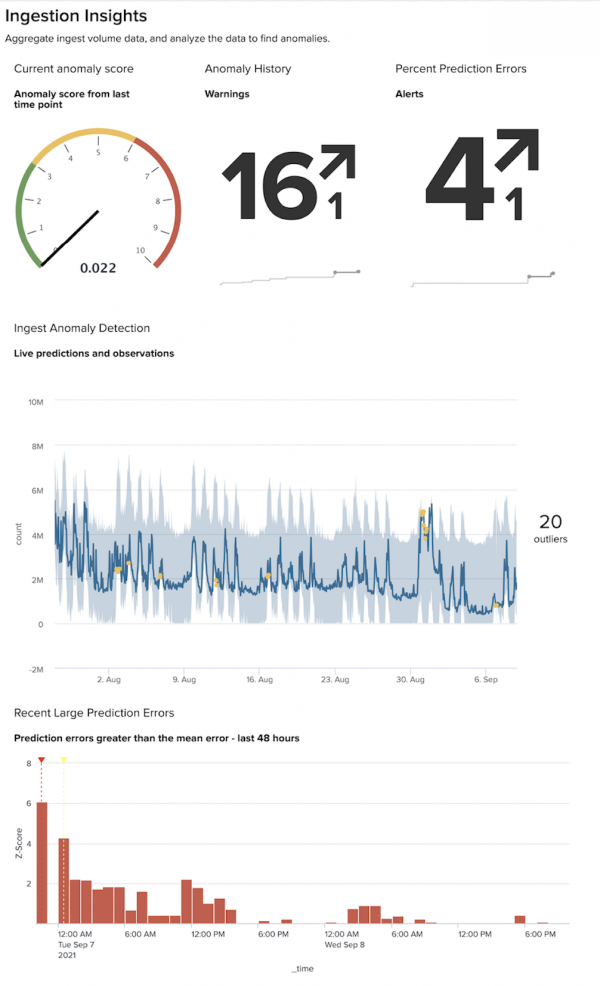

Example dashboard

The following image shows an example of how the dashboard might look once populated with data:

(Optional) Manually populate dashboard charts with historical data

Because the new dashboard will take about 24 hours to populate, you can manually add historical data to get a preview of the dashboard in action. To achieve this, run the apply searches on the past week of data.

Perform the following steps to manually populate the new dashboard:

- Open a new search. Apps > Search and Reporting.

- Input the following SPL into the Search field:

| tstats count where index=[insert your index here] sourcetype=[insert your sourcetype here] groupby _time span=1h | eval hour=strftime(_time,"%H") | eval minute=strftime(_time,"%M") | eval weekday=strftime(_time,"%a") | eval weekday_num=case(weekday=="Mon",1,weekday=="Tue",2,weekday=="Wed",3,weekday=="Thu",4,weekday=="Fri",5,weekday=="Sat",6,weekday=="Sun",7) | eval month_day=strftime(_time,"%m-%d") | eval is_holiday=case(month_day=="08-02",1,month_day=="07-07",1,month_day=="07-06",1,month_day=="07-05",1,month_day=="01-01",1,month_day=="01-18",1,month_day=="05-31",1,month_day=="09-06",1,month_day=="11-11",1,month_day=="11-25",1,month_day=="12-24",1,month_day=="12-31",1,1=1,0) | apply regr as predicted | eval error=count-predicted | outputlookup anomaly_detection.csv

- Set the date and time range as from -7d@d to -0h@h in the Advanced section.

- Run the search.

- Open another search and input the following SPL:

| inputlookup anomaly_detection.csv | eval error_sq=error*error | eval pct_error=abs(error)/count | eventstats avg(error_sq) as mean_error | eventstats stdev(error_sq) as sd_error | eval z_score=(error_sq-mean_error)/sd_error | eval upper_error_bound=predicted+sqrt(3*sd_error+mean_error) | eval lower_error_bound=predicted-sqrt(3*sd_error+mean_error) | outputlookup anomaly_detection.csv

- Run the search. This second search populates the dashboard with the past week of data.

Set up alerts

Perform the following steps to set up an alert:

- Open a new search. Apps > Search and Reporting.

- Input the following SPL to the Search field:

| inputlookup anomaly_detection.csv | eval isOutlier=if(count>upper_error_bound OR count<lower_error_bound, 1, 0) | eval "Lower Error Bound"=if(lower_error_bound<1, 1, lower_error_bound) | eval "Upper Error Bound"=upper_error_bound | search isOutlier=1

- Run the search.

- From the Save As menu, choose Alert.

- Under Alert Type, select Scheduled and the Run on Cron Schedule option.

- Set Cron Schedule to 4 * * * *. This encodes "run on the 4th minute of every hour" for your scheduled searches.

- Add any trigger conditions as you deem necessary.
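The alert search reads the whole lookup, so it returns every row ever flagged as an outlier, not only the newest one. When choosing trigger conditions, one possible refinement is to evaluate only the most recent row. The Python sketch below illustrates that idea on made-up rows. The function name latest_outlier is hypothetical, and the row fields follow the lookup columns used in the searches above.

```python
from typing import Optional


def latest_outlier(rows: list) -> Optional[dict]:
    """Return the most recent row if its count is outside the bounds, else None."""
    if not rows:
        return None
    latest = max(rows, key=lambda r: r["_time"])
    is_outlier = (
        latest["count"] > latest["upper_error_bound"]
        or latest["count"] < latest["lower_error_bound"]
    )
    return latest if is_outlier else None


if __name__ == "__main__":
    # Made-up history: an old outlier followed by a normal hour.
    history = [
        {"_time": 1, "count": 900, "upper_error_bound": 300, "lower_error_bound": 50},
        {"_time": 2, "count": 120, "upper_error_bound": 300, "lower_error_bound": 50},
    ]
    print(latest_outlier(history))
```

In this sample the older row is out of bounds but the newest row is not, so nothing is returned and no alert would be raised for the current hour.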

Learn more

To learn about implementing analytics and data science projects using Splunk platform statistics, machine learning, and built-in and custom visualization capabilities, see Splunk 8.0 for Analytics and Data Science.

To learn more about using Cron syntax, see Use cron expressions for alert scheduling in the Splunk Cloud Platform Alerting Manual.


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 4.5.0, 5.0.0, 5.1.0, 5.2.0, 5.2.1, 5.2.2, 5.3.0, 5.3.1, 5.3.3, 5.4.0, 5.4.1, 5.4.2, 5.5.0, 5.6.0, 5.6.1
