Deep dive: Using ML to identify user access anomalies

The goal of this deep dive is to identify unusual volumes of failed logins compared to the historical volume of failed logins in your environment.

Increases in failed logins can indicate potentially malicious activity, such as brute force or password spraying attacks. Alternatively, these failed logins can identify potential misconfigurations in service account permissions or misconfigured organizational unit (OU) groups for standard users.

Data sources

The following data sources are relevant to monitoring failed logins and can be used in this deep dive.

- wineventlog:security

- linux_secure

The deep dive leverages the wineventlog:security source type.

Algorithms

For best results, use the DensityFunction algorithm.

As an alternative approach, try stats or the DBSCAN algorithm.
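As a rough sketch of the stats-based alternative, the following search flags 5-minute intervals whose failed logon count exceeds the average for that hour of day by more than three standard deviations. It assumes the same index, source, and event code as the training search in this deep dive. The three-standard-deviation multiplier and the avg_count, stdev_count, and upper_bound field names are illustrative assumptions, not values from this deep dive. Adjust them to your data.

```spl
| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host
| eval HourOfDay=strftime(_time,"%H")
| eventstats avg(count) as avg_count stdev(count) as stdev_count by HourOfDay
| eval upper_bound=avg_count+(3*stdev_count)
| where count>upper_bound
```

Unlike the DensityFunction approach, this sketch recalculates the baseline on every run, so the search window needs to cover enough history to be representative.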

Train the model

Before you begin training the model, do the following things:

- Change the index for your environment if necessary.

- Pick a search window that has enough data to be representative of your environment. Search over at least 30 days for this analytic. The more data the better.

Enter the following search into the search bar of the app where you want the analytic in production:

| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host | eval HourOfDay=strftime(_time,"%H") | fit DensityFunction count by HourOfDay as outlier into app:logon_failures_outlier_detection_model

This search counts the number of failed logons per host over 5-minute time intervals, enriches the data with the hour of day, and then trains an anomaly detection model to detect unusual numbers of failed logons by the hour of day.

After you run this search and are confident that it is generating results, save it as a report and schedule the report to periodically retrain the model. As a best practice, train the model every week, and schedule training for a time when your Splunk platform instance has low utilization.

Model training with MLTK can use a high volume of resources.

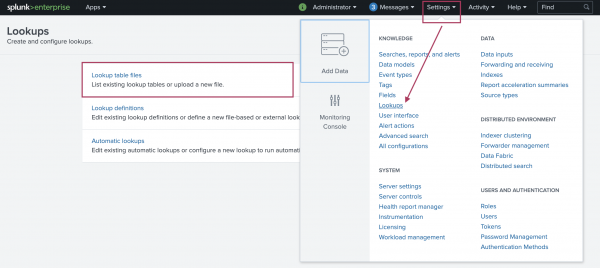

After training the model, you can select Settings in the top menu bar, then select Lookups, then select Lookup table files, and search for your trained model.

Make sure that the permissions for the model are correct. By default, models are private to the user who has trained them, but since you have used the app: prefix in your search, the model is visible to all users who have access to the app the model was trained in.
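To check the trained model from the search bar, one option is the MLTK summary command, which returns the parameters stored for a model. The following is a minimal sketch that assumes the model name resolves without the app: prefix, as it does in the apply search in this deep dive. The exact fields returned depend on your MLTK version.

```spl
| summary logon_failures_outlier_detection_model
```

If the search returns no results or an error, the model might not be visible to your user in the current app, which points back to the permissions check described above.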

Apply the model

Now that you have set up the model training cycle and have an accessible model, you can start applying the model to data as it is coming into the Splunk platform. Use the following search to apply the model to data:

| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host | eval HourOfDay=strftime(_time,"%H") | apply logon_failures_outlier_detection_model

This search can be used to populate a dashboard panel or can be used to generate an alert.

To flag outliers as alerts, append | search outlier=1 to the search so that the results show only the intervals identified as outliers. You can save this search as an alert that runs on a schedule, such as hourly, and triggers when the number of results is greater than 0.
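As one possible shape for the alert search, the following sketch keeps only the outlier intervals and then summarizes them per host, so that each alert result represents a single host. The outlier_intervals and failed_logons field names are hypothetical, and the per-host summary is an assumption about how you might want to triage results rather than part of this deep dive.

```spl
| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host
| eval HourOfDay=strftime(_time,"%H")
| apply logon_failures_outlier_detection_model
| search outlier=1
| stats count as outlier_intervals sum(count) as failed_logons by host
```

If you schedule the alert hourly, a search time range that covers only the previous hour helps avoid alerting on the same interval twice.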

Tune the model

When training and applying your model, you might find that the number of outliers being identified is not proportionate to the data, with the model flagging either too many or too few outliers. The DensityFunction algorithm has a number of parameters that can be tuned to your data, creating a more manageable set of alerts.

The DensityFunction algorithm has a threshold option that is set at 0.01 by default, which means it identifies the least likely 1% of the data as outliers. You can increase or decrease this threshold at the apply stage, depending on your tolerance for outliers. A lower threshold flags fewer outliers and a higher threshold flags more, as shown in the following search:

| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host | eval HourOfDay=strftime(_time,"%H") | apply logon_failures_outlier_detection_model threshold=0.005 | search outlier=1

Additional fields can also be extracted and used during the fit and apply stages. For example, if your data has hourly and daily variance, such as significantly more errors during working hours on a weekday, you can include the hour of the day and the day of the week in the by clause to more finely tune your model to your data, as shown in the following search:

| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host | eval HourOfDay=strftime(_time,"%H"), DayOfWeek=strftime(_time,"%a") | fit DensityFunction count by "HourOfDay,DayOfWeek" as outlier into app:logon_failures_outlier_detection_model
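Because this version of the model is trained on both HourOfDay and DayOfWeek, the apply search needs to create both fields before it calls apply. The following is a minimal sketch, assuming the retrained model keeps the same name and that you filter to outliers as in the earlier alert search:

```spl
| tstats count WHERE index=windows_logs source="wineventlog:security" TERM(EventCode=4625) BY _time span=5m host
| eval HourOfDay=strftime(_time,"%H"), DayOfWeek=strftime(_time,"%a")
| apply logon_failures_outlier_detection_model
| search outlier=1
```

Splitting by more fields leaves fewer data points in each group, so a longer training window might be needed for the groups to stay representative.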

Learn more

For help using this deep dive, see Troubleshooting the deep dives.

See the following customer use cases from the Splunk .conf archives:

- How Israel's Ministry of Energy applies Machine Learning to protect their Critical Infrastructure and OT Operations

- Augment your Security Monitoring Use Cases with MLTK's Machine Learning

- Anomaly Detection, Sealed with a KISS

See the following Splunk blog posts on outlier detection:

- Cyclical Statistical Forecasts and Anomalies - Part 1

- Cyclical Statistical Forecasts and Anomalies - Part 4

- Cyclical Statistical Forecasts and Anomalies - Part 5

- Building Machine Learning Models with DensityFunction

- Anomalies Are Like a Gallon of Neapolitan Ice Cream - Part 1

- Anomalies Are Like a Gallon of Neapolitan Ice Cream - Part 2


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 4.5.0, 5.0.0, 5.1.0, 5.2.0, 5.2.1, 5.2.2, 5.3.0, 5.3.1, 5.3.3, 5.4.0, 5.4.1, 5.4.2, 5.5.0, 5.6.0, 5.6.1
