Getting AWS data into the Splunk platform

When selecting a solution for gathering data from AWS, it is important to take into account the trade-offs involved. Topics covered in this Splunk Validated Architecture (SVA) apply to Splunk Cloud Platform and Splunk Enterprise products. Where applicable, limitations of platform availability are noted.

AWS has more than 200 services, and an exhaustive treatment of each service is outside the scope of this document. This SVA is intended to show the most common data ingestion paths for the majority of customers who ingest AWS data into the Splunk platform. As a general rule, Data Manager is the recommended method of data ingestion for Splunk Cloud Platform customers wherever it supports the data source. See Overview of source types for Data Manager in the Data Manager User Manual. Data Manager reduces the time to configure cloud data sources from hours to minutes, while providing a centralized experience for data ingestion management, monitoring, and troubleshooting.

This document walks users through the options available to ingest AWS data sources, taking into account customer architecture, data sources, volume, and velocity.

General overview

Push vs. pull

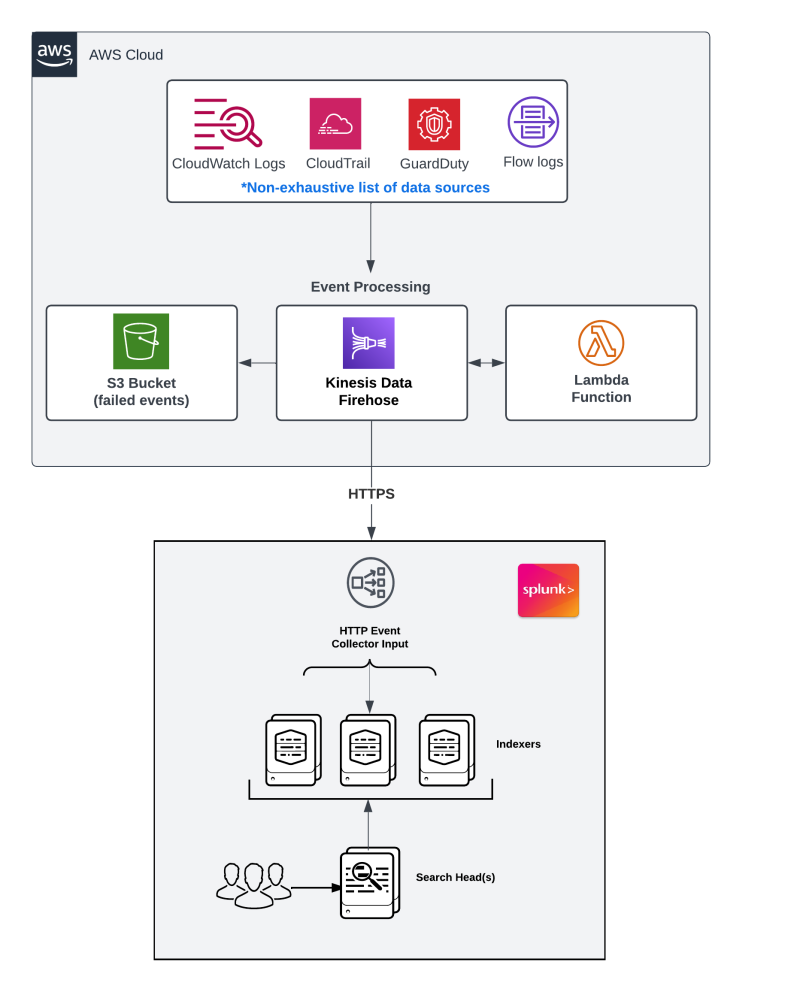

There are two common approaches to ingesting data from AWS: push and pull. For the purposes of this document, unless otherwise noted, push refers to streaming data from Kinesis Data Firehose to a Splunk HEC endpoint. Likewise, pull refers to the Splunk Add-on for AWS fetching data from AWS services.
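To make the push side concrete, the following minimal sketch shows roughly what a single push to an HEC endpoint looks like at the HTTP level. It only builds the request and does not send it. The host name, token, and sample event are placeholders, `build_hec_request` is a hypothetical helper, and in the architecture described here Kinesis Data Firehose performs this delivery for you.

```python
import json
import urllib.request


def build_hec_request(base_url: str, token: str, event: dict,
                      sourcetype: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the HEC JSON event endpoint."""
    payload = {"event": event, "sourcetype": sourcetype}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/services/collector/event",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # HEC authenticates with the "Splunk <token>" scheme.
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and token, for illustration only.
req = build_hec_request(
    "https://hec.example.com:8088",
    "00000000-0000-0000-0000-000000000000",
    {"message": "hello from AWS"},
    "aws:cloudtrail",
)
print(req.get_method(), req.full_url)
```

Sending the request (for example with `urllib.request.urlopen(req)`) is left out on purpose, because it requires a reachable endpoint and a valid token.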

Further details are outlined in the dedicated push and pull sections of this document, but these are the high-level benefits and limitations of each approach.

- Push benefits

  - Higher scalability.

  - Utilizes managed services, requiring less management and maintenance overhead.

- Push limitations

  - Requires a publicly available endpoint.

  - Cost is typically notably higher for lower volume (less than approximately 1 TB per day).

- Pull benefits

  - Simple configuration.

  - Doesn't require a public network to ingest data.

- Pull limitations

  - At higher volumes, scaling requires additional hosts and can become more complicated.

  - Requires management of AWS security keys on a Splunk Cloud Platform hosted IDM or search head.
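The trade-offs above can be summarized as a rough rule of thumb. The sketch below is only an illustration of this document's guidance, not an official sizing tool: the function name, its parameters, and the exact ordering of the checks are assumptions, and the 1 TB per day threshold is approximate.

```python
def suggest_ingestion_method(daily_tb: float,
                             public_endpoint_ok: bool,
                             splunk_cloud_on_aws: bool = False,
                             data_manager_supports_source: bool = False) -> str:
    """Return "data_manager", "push", or "pull" as a starting point."""
    # Data Manager is the recommended path where it is available
    # and supports the data source.
    if splunk_cloud_on_aws and data_manager_supports_source:
        return "data_manager"
    # Push requires a publicly available HEC endpoint.
    if not public_endpoint_ok:
        return "pull"
    # Below roughly 1 TB per day, pull is usually more cost effective.
    if daily_tb < 1.0:
        return "pull"
    return "push"


print(suggest_ingestion_method(0.2, public_endpoint_ok=True))
print(suggest_ingestion_method(5.0, public_endpoint_ok=True))
```

Treat the result as a first suggestion only. Real decisions also depend on the data sources involved, operational skills, and cost estimates.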

Push method

Benefits

Splunk and Amazon have implemented a serverless (no infrastructure to manage) integration between Kinesis and the Splunk HTTP Event Collector (HEC) that enables you to stream data from AWS directly to a HEC endpoint, configurable via your AWS console or CLI. This solution is well suited for high volume as it automatically scales resources (autoscaling) needed for storing and processing events.

This architecture supports automation. You can use the Splunk Admin Config Service (ACS) API to create and manage HEC tokens for your Splunk Cloud Platform deployment programmatically. Combined with a CI/CD platform that deploys infrastructure as code (IaC), this lets you manage all aspects of your infrastructure using an automated approach.
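As an illustration of that automation, the following sketch builds an ACS request to create an HEC token, without sending it. The endpoint path and body field names (`name`, `defaultIndex`, `allowedIndexes`) are assumptions to verify against the ACS API reference for your deployment. The stack name, bearer token, and index names are placeholders, and `build_acs_hec_token_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_acs_hec_token_request(stack: str, bearer_token: str, name: str,
                                default_index: str,
                                allowed_indexes: list) -> urllib.request.Request:
    """Build (but do not send) an ACS request that creates an HEC token."""
    # Assumed ACS endpoint layout; confirm against the ACS API reference.
    url = (f"https://admin.splunk.com/{stack}"
           "/adminconfig/v2/inputs/http-event-collectors")
    body = {
        "name": name,
        "defaultIndex": default_index,
        "allowedIndexes": allowed_indexes,
    }
    return urllib.request.Request(
        url=url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values, for illustration only.
acs_req = build_acs_hec_token_request(
    "example-stack", "placeholder-jwt", "aws-firehose", "aws", ["aws"])
print(acs_req.get_method(), acs_req.full_url)
```

A CI/CD job could send a request like this before deploying the AWS side, then pass the returned token to the IaC template as a secret parameter.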

- Suited for high volume.

- Autoscaling.

- Serverless.

- Tight integration between AWS services.

- Built-in retry mechanisms via Kinesis Data Firehose (configurable).

- Load balanced (Splunk Cloud Platform).

- Deployable via automation.
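The configurable retry behavior mentioned above is set on the Firehose delivery stream's Splunk destination. The sketch below assembles such a configuration as a plain dictionary, using the field names of the Firehose `SplunkDestinationConfiguration` structure as understood at the time of writing. The helper name, default values, ARNs, endpoint, and token are illustrative assumptions, not recommended settings.

```python
def splunk_destination_config(hec_endpoint: str, hec_token: str,
                              role_arn: str, backup_bucket_arn: str,
                              retry_seconds: int = 300,
                              ack_timeout_seconds: int = 180) -> dict:
    """Assemble a Splunk destination configuration for a Firehose stream."""
    return {
        "HECEndpoint": hec_endpoint,
        "HECEndpointType": "Event",
        "HECToken": hec_token,
        # How long Firehose waits for an HEC acknowledgment.
        "HECAcknowledgmentTimeoutInSeconds": ack_timeout_seconds,
        # How long Firehose keeps retrying a failed delivery.
        "RetryOptions": {"DurationInSeconds": retry_seconds},
        # Events that still fail after retries are written to S3.
        "S3BackupMode": "FailedEventsOnly",
        "S3Configuration": {
            "RoleARN": role_arn,
            "BucketARN": backup_bucket_arn,
        },
    }


# Placeholder values, for illustration only.
firehose_config = splunk_destination_config(
    "https://hec.example.com:443",
    "00000000-0000-0000-0000-000000000000",
    "arn:aws:iam::123456789012:role/example-firehose-role",
    "arn:aws:s3:::example-firehose-backup",
    retry_seconds=600,
)
print(firehose_config["RetryOptions"])
```

With the AWS SDK for Python, a dictionary like this would typically be passed as the `SplunkDestinationConfiguration` argument of the Firehose `create_delivery_stream` call. In practice, the same settings are more often expressed in a CloudFormation or Terraform template.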

Limitations

- Customer-managed HEC endpoints must use an SSL certificate issued by a public certificate authority.

- High level of knowledge needed to deploy AWS infrastructure.

  - Use infrastructure as code (IaC), such as CloudFormation or Terraform, to deploy infrastructure, especially if you manage multiple AWS accounts.

- Custom (non-AWS) data source types may require additional Lambda code and field extractions in Splunk.

- AWS services incur cost.

  - Use the AWS Pricing Calculator for estimates.

  - Also see Understand and Optimize AWS Data Transfer Charges for Splunk Cloud on AWS Ingestion on the AWS Partner Network (APN) Blog.

Pull method

Benefits

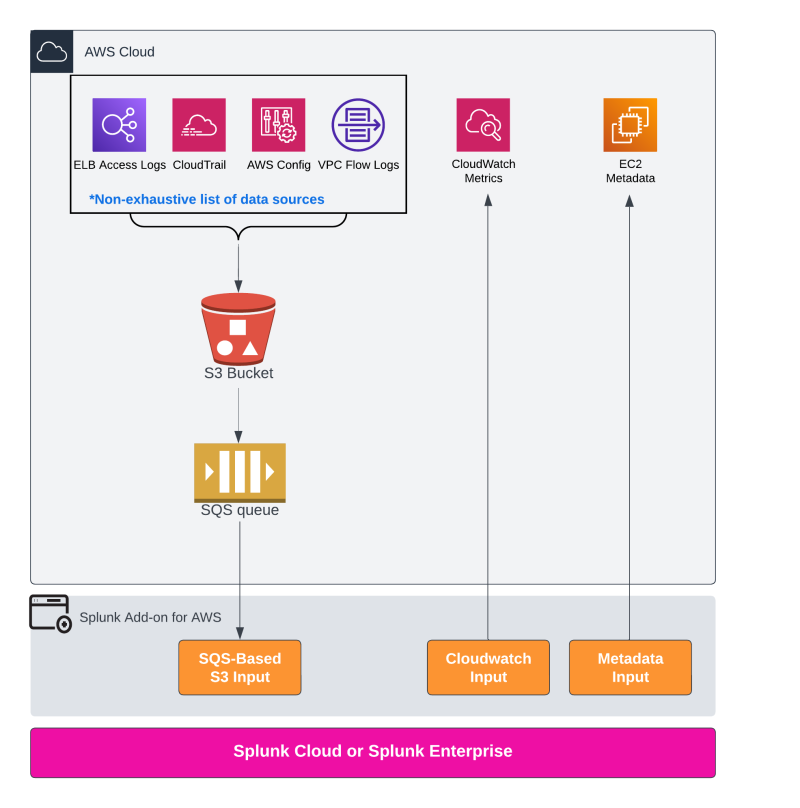

Since the original release in 2014, the Splunk Add-on for AWS has been an essential tool for Splunk Administrators seeking to collect AWS data. In addition to the data collection itself, the Splunk Add-on for AWS also contains a host of pre-built sourcetype definitions for many AWS services.

In addition to pulling data from AWS S3 buckets, two other commonly gathered source types require a separate input on the Splunk Add-on for AWS: metadata and CloudWatch metrics. The metadata input gathers information about many commonly deployed infrastructure types such as EC2, RDS, Lambda, IAM, and so on. The CloudWatch metrics input gathers metric information for similar commonly deployed infrastructure such as EC2, Lambda, SQS, SNS, and so on.

Splunk Cloud Platform customers configure these inputs on either their IDM (Classic Experience) or on their search head (Victoria Experience). If your environment has an IDM, installation of the Splunk Add-on for AWS requires a support request to Splunk Cloud Platform Support. For customers using Splunk Enterprise, the Splunk Add-on for AWS is typically installed and configured on a heavy forwarder.

- Straightforward configuration that requires an S3 bucket and SQS queue.

- Ingestion failures are typically much easier to recover from due to SQS queues and checkpointing.

- Capable of utilizing private networks or VPC endpoints to retrieve data.

- Ingesting less than 1 TB per day is almost always considerably more cost-effective than the push method.

- The Splunk Add-on for AWS provides an API for automating account and input creation. See API reference for the Splunk Add-on for AWS in the Splunk Add-on for AWS manual.
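To illustrate the S3 bucket and SQS queue pattern listed above: S3 publishes an event notification for each new object, the notification lands in an SQS queue, and a consumer reads the queue to learn which objects to fetch. The following sketch parses such a notification. It is not the add-on's implementation, and `objects_from_sqs_body` and the sample message are hypothetical.

```python
import json
from typing import List, Tuple
from urllib.parse import unquote_plus


def objects_from_sqs_body(body: str) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for created objects in one SQS message body."""
    doc = json.loads(body)
    # When S3 notifies an SNS topic that feeds the queue, the S3 event
    # is nested as a JSON string in the "Message" field.
    if "Records" not in doc and "Message" in doc:
        doc = json.loads(doc["Message"])
    found = []
    for record in doc.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        s3 = record["s3"]
        # Object keys are URL-encoded in S3 event notifications.
        found.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return found


# Hypothetical notification, trimmed to the fields used above.
sample_body = json.dumps({
    "Records": [{
        "eventName": "ObjectCreated:Put",
        "s3": {
            "bucket": {"name": "example-log-bucket"},
            "object": {"key": "AWSLogs/123456789012/my+file.json.gz"},
        },
    }]
})
sample_objects = objects_from_sqs_body(sample_body)
print(sample_objects)
```

Because the queue retains a message until a consumer deletes it, a consumer that fails partway through can pick up the same message again, which is part of why recovery from ingestion failures is simpler with this pattern.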

Limitations

- Can be CPU-intensive or Splunk Virtual Compute (SVC)-intensive when using many inputs.

- Not recommended for ingesting more than 1 TB per day per node.

- Scaling on Splunk Enterprise for higher volumes requires additional resources (nodes/VM) and management overhead.

- For Splunk Cloud Platform, the limits described in the Experience designations section of the Splunk Cloud Platform Service Details apply.

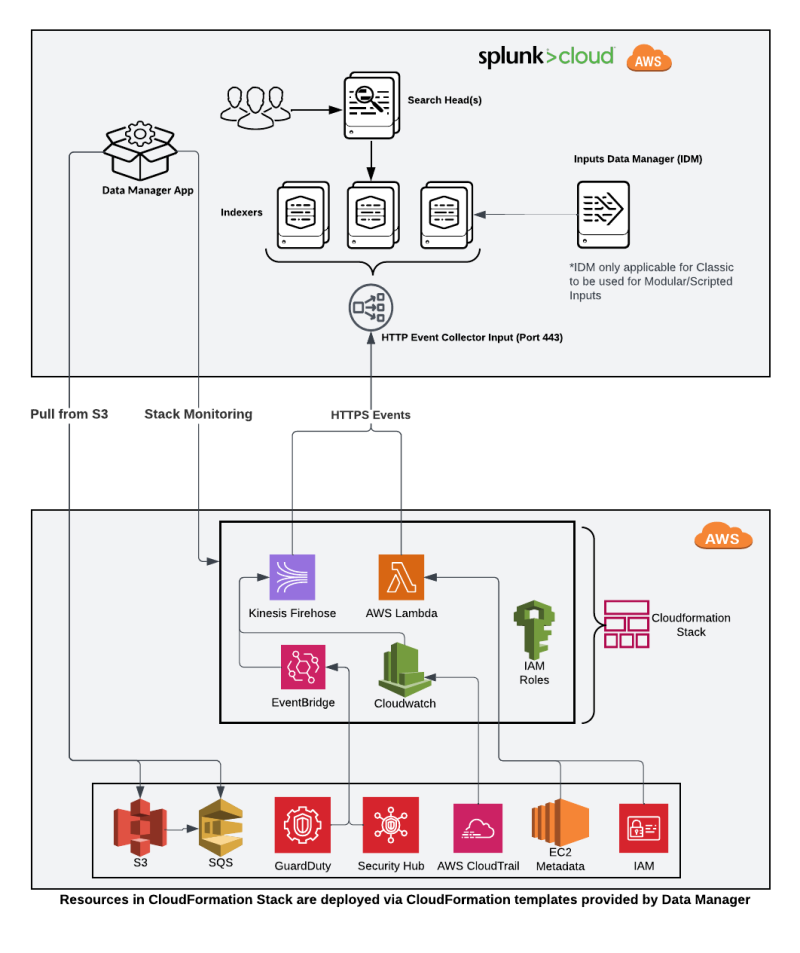

Data Manager method

Data Manager is a Splunk-provided way to configure the best method of ingestion for multiple sources from all three major cloud providers. It is available as a pre-installed app on all AWS Splunk Cloud Platform regions (except GovCloud) as of version 8.2.2104.1. For supported services, Data Manager provides you with a prebuilt CloudFormation template to deploy in a customer-managed cloud environment. This template configures resources for a push or pull configuration (depending on the service) in your account(s) and region(s).

Benefits

- Fully supported method built by Splunk for getting data in (GDI) from a cloud provider using best practices.

- Gives the ability to utilize push methodology without having to build and maintain custom cloud infrastructure.

- Built-in methods to monitor push-based infrastructure in your AWS environment within the Data Manager app in Splunk Cloud Platform.

- Lowers resource utilization on Splunk Cloud Platform as compared to pull methodologies or the AWS TA.

Limitations

- Requires resources to be deployed in a customer's AWS environment. This can lead to higher costs than using the AWS TA.

- Does not support all data sources in AWS or custom data sources.

- Only available on Splunk Cloud Platform hosted on AWS.

- Not available on IL5/FedRAMP stacks.

- Requires installation of the Splunk Add-on for AWS (AWS TA) to correctly parse data from AWS data sources.


This documentation applies to the following versions of Splunk® Validated Architectures: current
