Edge Processor Validated Architecture

The Edge Processor solution is a data processing engine that works at the edge of your network. Use the Edge Processor solution to filter, mask, and transform your data close to its source before routing the processed data to Splunk and S3. Edge Processors are hosted on your infrastructure so data doesn't leave the edge until you want it to.

Overall Benefits

The Edge Processor solution:

- Provides users the capability to process and/or route data at arbitrary boundaries.

- Enables users to apply real-time processing pipelines to data en-route.

- Uses SPL2 to author processing pipelines.

- Can scale both vertically and horizontally to meet processing demand.

- Can be deployed in a highly available and load-balanced configuration as part of your data availability strategy.

- Provides flexibility in scaling data and infrastructure.

Use cases and enablers:

- Organizational requirement for centralized data egress

- Routing, forking, or cloning events to multiple Splunk deployments and/or Amazon S3

- Masking, transforming, enriching, or otherwise modifying data in motion

- Reducing certificate or security complexity

- Removing or reducing ingestion load on the indexing tier by offloading initial data processing, such as line breaking.

- Relocating data processing away from the indexer to reduce the need for rolling restarts and other availability disruptions.

Architecture and topology

For a high-level view of the components of an Edge Processor deployment, refer to the latest system architecture in the Splunk docs. Several key points about the default topology are critical to understand for a successful Edge Processor solution deployment:

- The term Edge Processor refers to a logical grouping of instances that share the same configuration.

- All pipelines deployed to an Edge Processor will run concurrently on all instances in that Edge Processor.

- Edge Processor instances are not aware of one another. There is no inter-instance communication, synchronization, balancing, or other behavior.

- All Edge Processor instances are managed by the Splunk Cloud control plane.

- Edge Processors collect and send telemetry and analytics data to Splunk Cloud separately from the processed data flow.

- Raw data is processed and routed only as described by each Edge Processor's deployed configuration; customer data isn't included in any telemetry.

Edges and domains

An edge, in the context of Edge Processor and Splunk data routing, is any step between the source and the destination where event control is required. Some common edges are data centers, clouds and cloud availability zones, network segments, VLANs, and infrastructure that manages regulated or compliance-related data (PII, HIPAA, PCI, GDPR, etc.). Data domains represent the relationships between the data source and the edge. When data traverses an edge, the data has left the originating data domain.

In Splunk terms, edges generally correlate with other "intermediate forwarding" concepts. You may often see edges discussed alongside Edge Processor, heavy and universal forwarders, and OTEL, all of which can serve as intermediate forwarders and effect change on events.

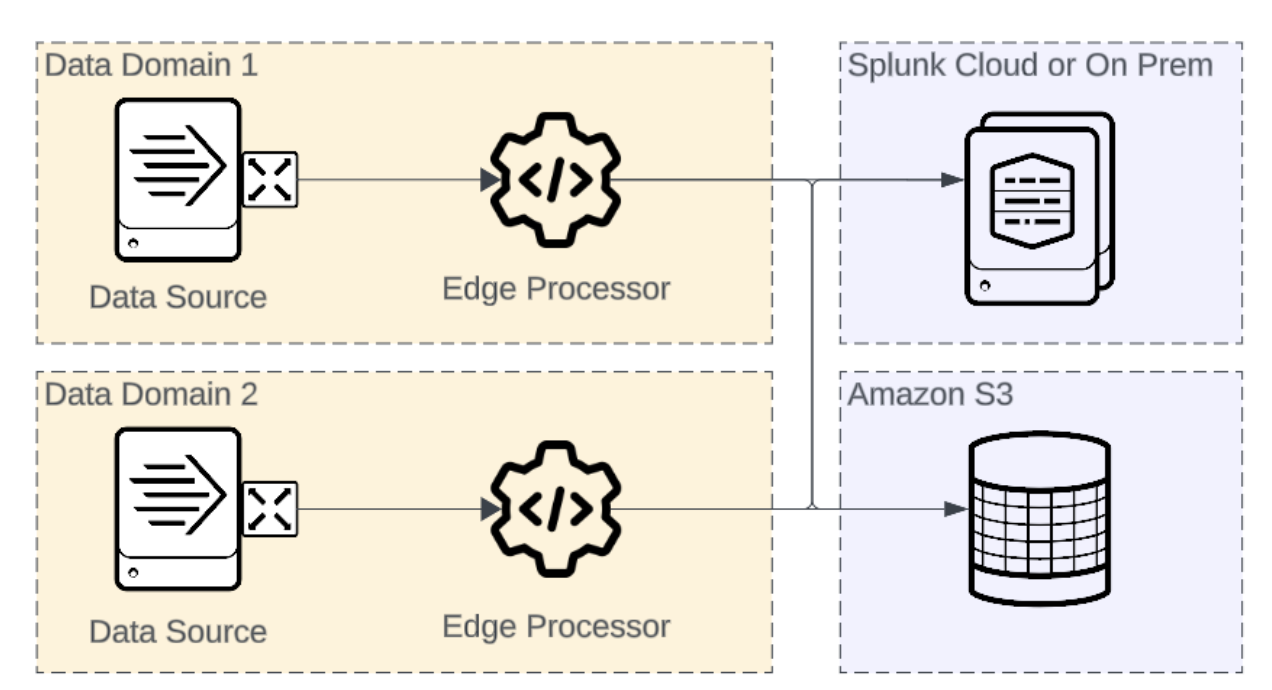

Intermediate Routing - close to source

Edge Processors can be deployed close to the source, logically within the same data domain as the data source.

| Benefits | Limitations |

|

|

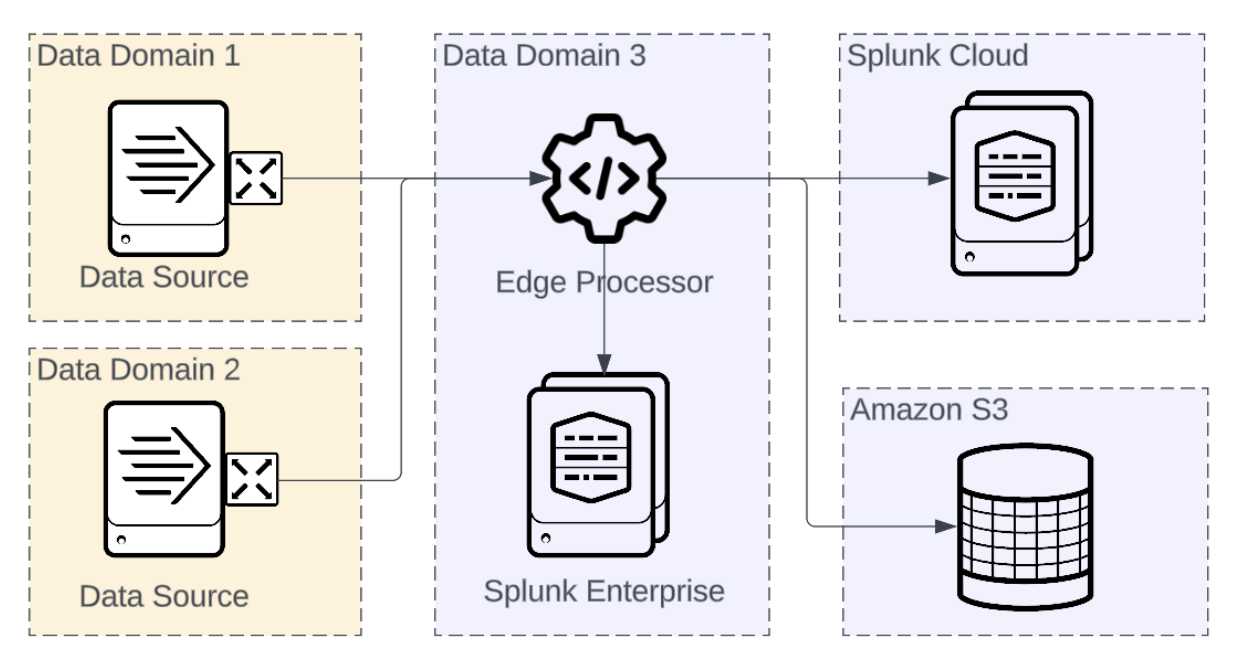

Intermediate routing - close to destination

Edge Processors can be deployed close to the destination, logically in a different data domain than the data source, often within the same data domain as the destination.

| Benefits | Limitations |

|

|

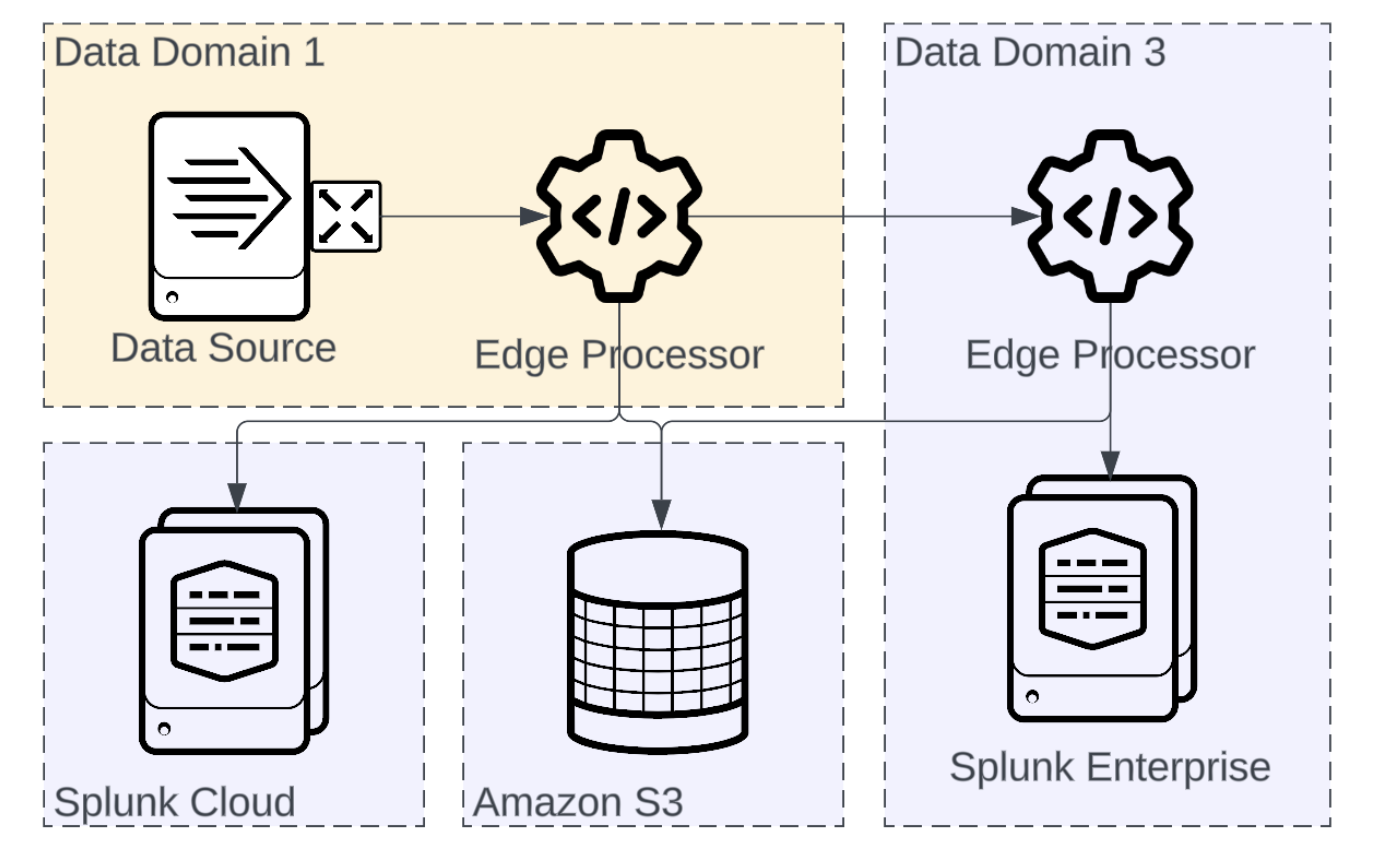

Multi-hop

There is no restriction on the number of Edge Processors that events can travel through. In situations where it is desirable to have event processing near the data as well as near the destination, Edge Processors can send to and receive from one another.

| Benefits | Limitations |

|

|

When data spans multiple hops as shown, it can be helpful to include markers in the data to indicate the systems that have been traversed. For example, adding the following SPL2 to your pipeline will build a list of traversed Edge Processors into a field called "hops":

| eval hops=hops+"[your own marker here]"
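The effect of that eval can be modeled outside of a pipeline. The following Python sketch is an illustration only: the function name `append_hop`, the bracketed marker format, and the marker names are assumptions and not part of Edge Processor. It shows how each hop appends its own marker so that the final value lists every traversed system in order. The sketch treats a missing `hops` field as an empty string, which is a choice made for this example; verify how your own pipeline handles events that arrive without the field.

```python
def append_hop(event: dict, marker: str) -> dict:
    """Return a copy of the event with this hop's marker appended to 'hops'."""
    updated = dict(event)
    # Treat a missing field as empty so the first hop starts the list.
    updated["hops"] = updated.get("hops", "") + f"[{marker}]"
    return updated


event = {"_raw": "example event"}
for marker in ("ep-branch-office", "ep-datacenter"):  # hypothetical marker names
    event = append_hop(event, marker)

print(event["hops"])  # [ep-branch-office][ep-datacenter]
```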

Management Strategy

Once you have decided where Edge Processor instances will be provisioned, you have to decide how you want to manage the deployment of pipelines to those instances.

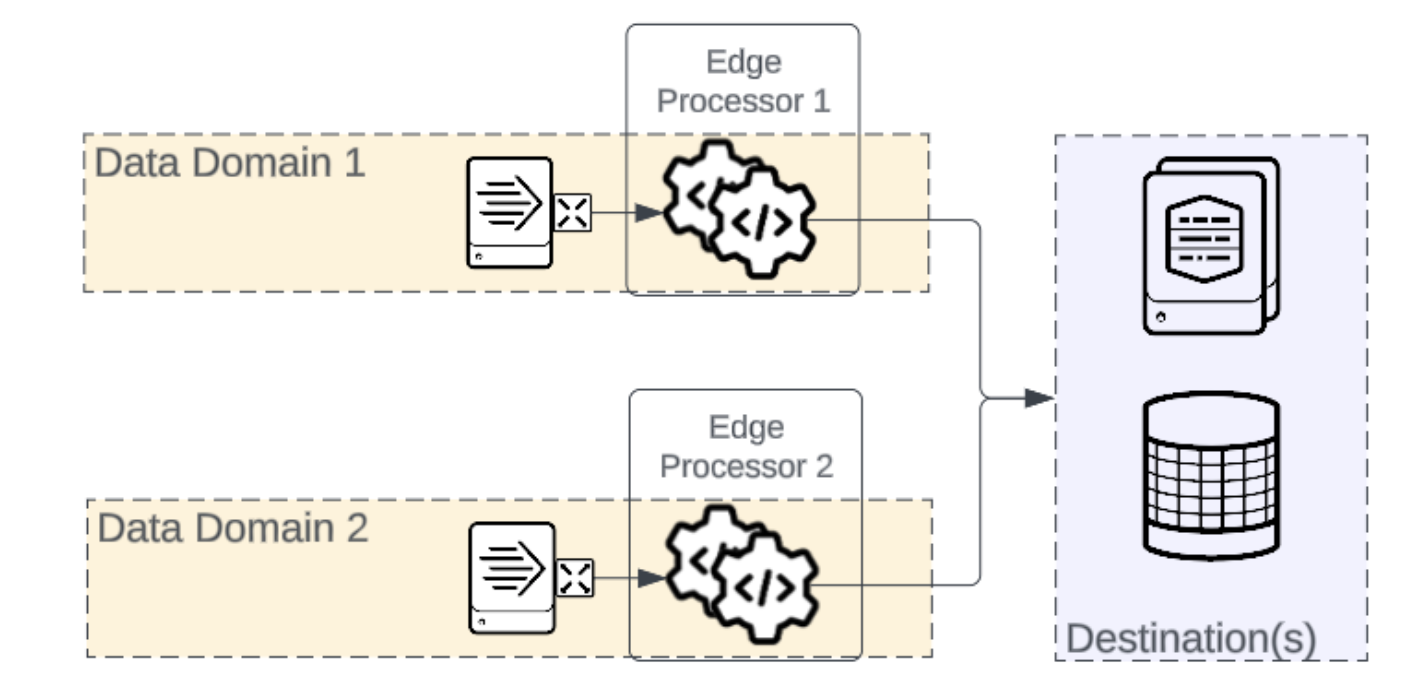

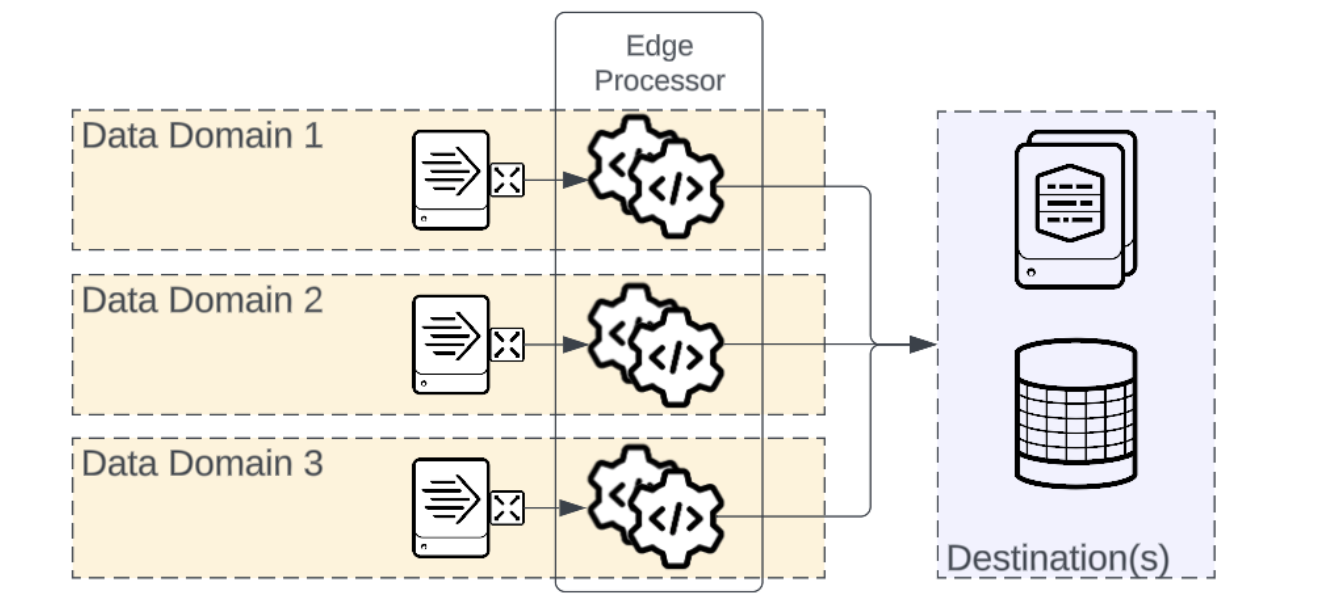

Per Domain Configuration

In most cases, each data domain that requires event processing will have an Edge Processor, and the instances belonging to that Edge Processor will be specific to that data domain. Organizing in this manner generally results in easy-to-understand naming and data flow, and offers the best reporting fidelity. When organized this way, all instances in each Edge Processor belong to the same Splunk output group for purposes of load balancing.

| Benefits | Limitations |

|

|

Stretched Configuration

When event processing requirements, data source types, ports, and destinations are the same across more than one data domain, all of the instances can belong to the same Edge Processor. There is no requirement that each data domain must have its own Edge Processor.

| Benefits | Limitations |

|

|

Input Load Balancing

Edge Processor supports three input types: Splunk (S2S), HTTP Event Collector (HEC), and syslog via UDP and TCP. Edge Processor has no specific configuration or support for load balancing these protocols, so follow the best practices for each protocol, using the Edge Processor instances as targets. High-level strategies and architectures for each input type are covered here, but specific protocol optimization and tuning are out of scope for this validated architecture.

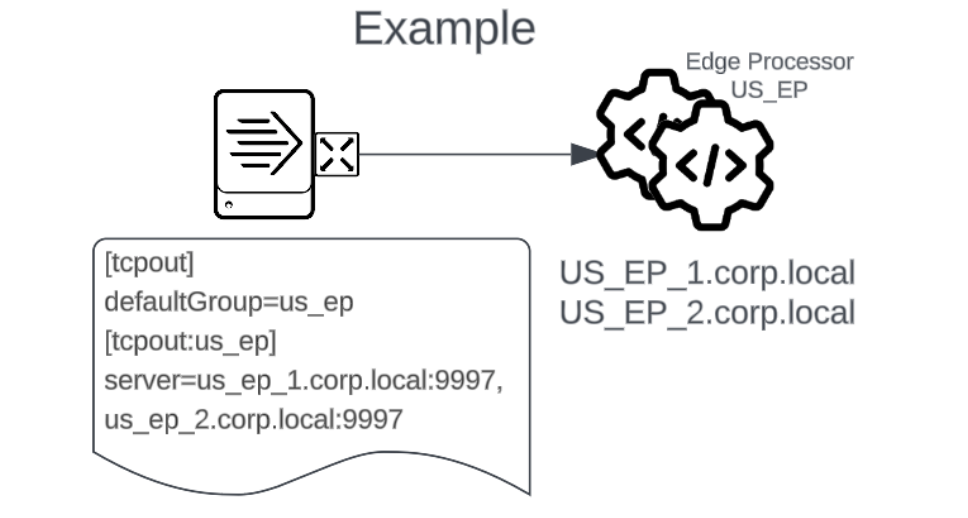

Splunk

Splunk universal and heavy forwarders should continue to use outputs.conf with the list of Edge Processor instances as a server group to enable output load balancing. Forwarders behave the same way with Edge Processors as output targets as they do with indexers or other forwarders as output targets.
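As an illustration, the sketch below uses Python to render a minimal outputs.conf target group from a list of Edge Processor instances. The group name, host names, and port are placeholders assumed for this example; substitute the receiver addresses configured for your own Edge Processor. Only the standard `[tcpout]` `defaultGroup` setting and the `[tcpout:<group>]` `server` setting are used.

```python
def render_outputs_conf(group: str, instances: list[str]) -> str:
    """Render a tcpout target group that lists every Edge Processor instance."""
    if not instances:
        raise ValueError("at least one instance is required")
    lines = [
        "[tcpout]",
        f"defaultGroup = {group}",
        "",
        f"[tcpout:{group}]",
        # A comma-separated server list lets the forwarder load balance
        # across all of the listed instances.
        f"server = {', '.join(instances)}",
    ]
    return "\n".join(lines) + "\n"


conf = render_outputs_conf(
    "edge_processors",  # hypothetical group name
    ["ep1.example.com:9997", "ep2.example.com:9997"],  # placeholder hosts and port
)
print(conf)
```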

| Benefits | Limitations |

|

|

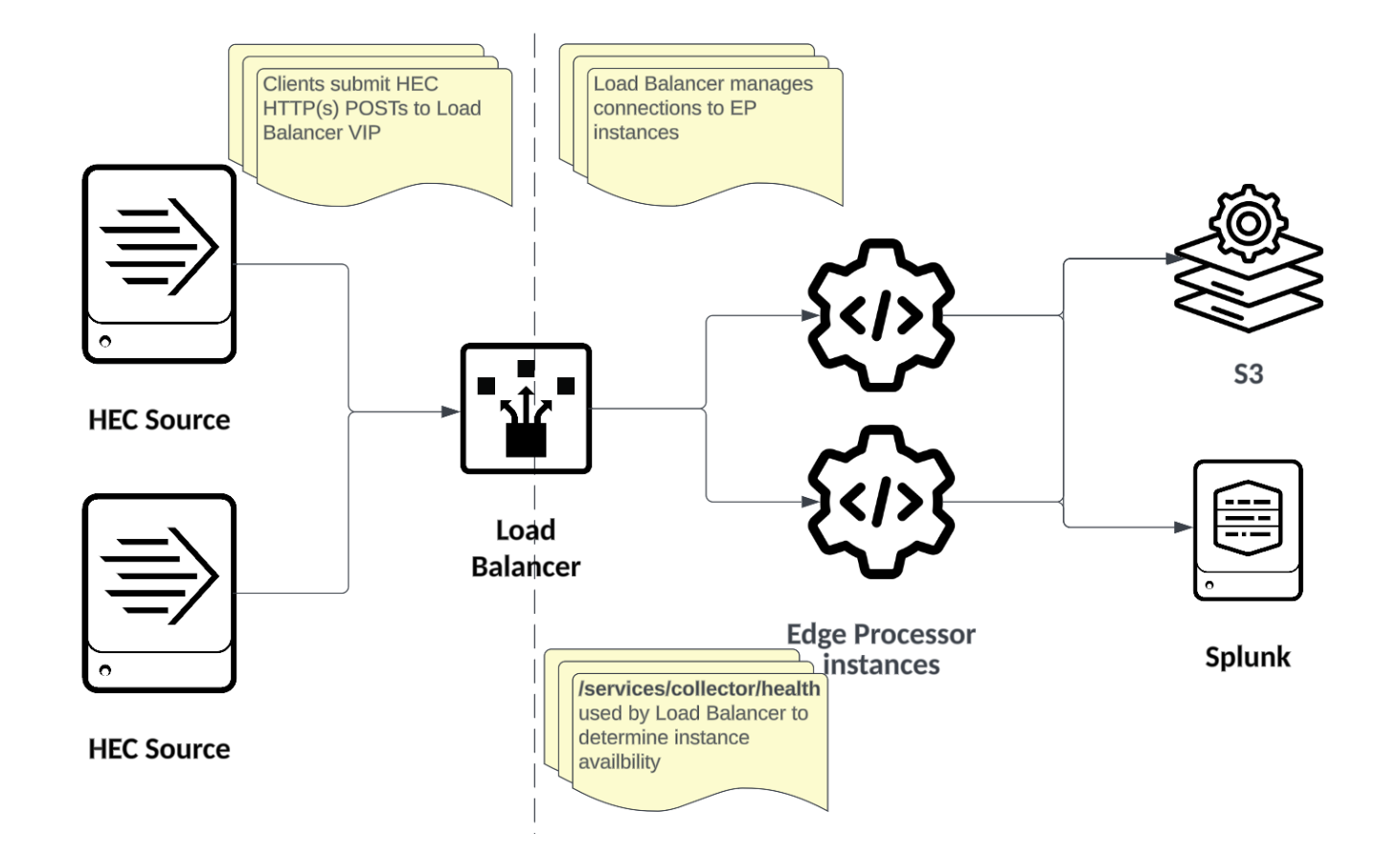

HTTP Event Collector (HEC)

Where more than one Edge Processor instance is configured to receive HEC events, an independent load balancing mechanism must be used. Edge Processor has no mechanism of its own for load balancing HEC traffic.

Some examples of load balancing HEC traffic to Edge Processor:

Network or Application load balancers

The most common approach to load balancing HEC is the same technology used to balance most other HTTP traffic: classic network load balancers or application load balancers. Purpose-built load balancers offer a managed solution for providing a single endpoint that can be used to intelligently distribute HEC traffic to one or more Edge Processors. You can see a simple example here.

| Benefits | Limitations |

|

|

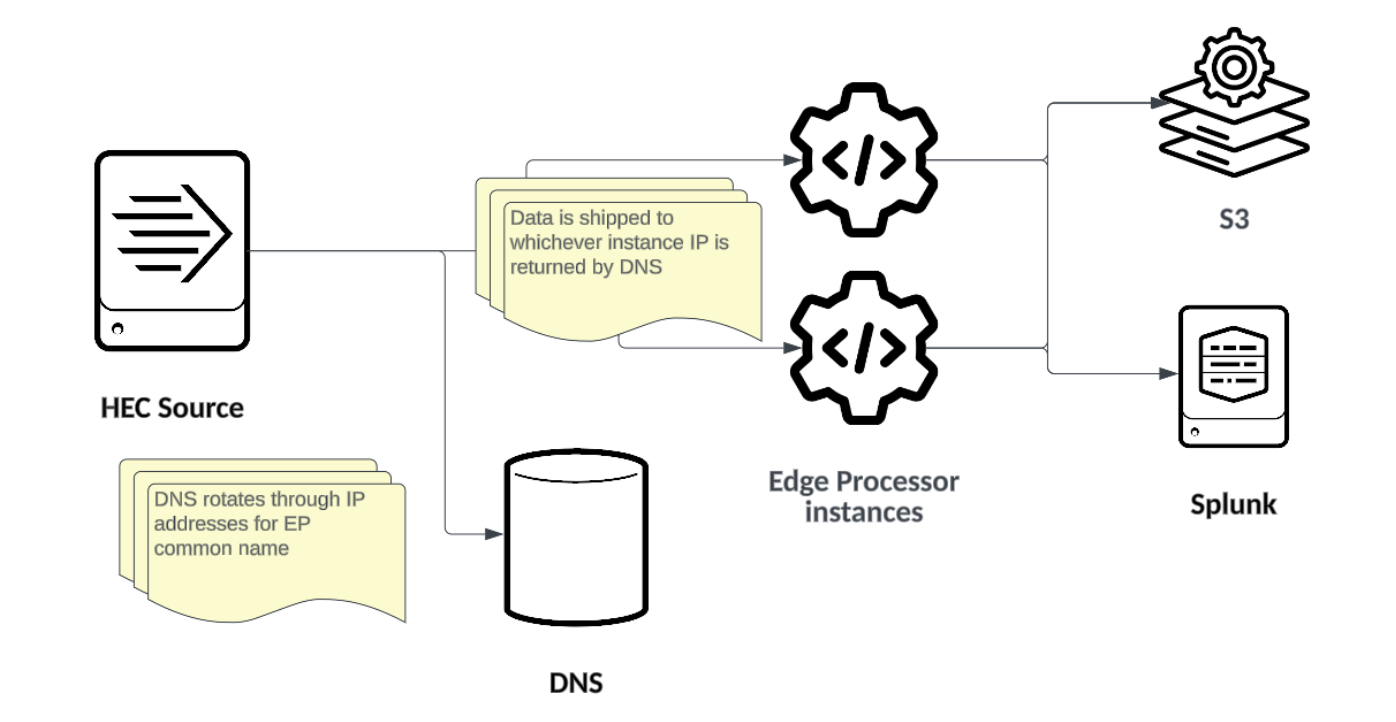

DNS Round Robin

DNS Round Robin is a simple load balancing method used to distribute network traffic across multiple servers. In this approach, DNS is configured to rotate through a list of Edge Processor IP addresses associated with a single domain name. When a HEC source makes a request to the domain name, the DNS server responds with one of the IP addresses from the list.
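The rotation can be illustrated with a small simulation. This Python sketch does not perform real DNS queries; the `RoundRobinResolver` class, the domain name, and the addresses are hypothetical and only model a server that answers each lookup with the next address in its list.

```python
from itertools import cycle


class RoundRobinResolver:
    """Toy model of a DNS server rotating through the addresses for a name."""

    def __init__(self, records: dict[str, list[str]]):
        # One endless iterator per name, so each lookup yields the next address.
        self._cycles = {name: cycle(ips) for name, ips in records.items()}

    def resolve(self, name: str) -> str:
        return next(self._cycles[name])


resolver = RoundRobinResolver({
    "hec.edge.example.com": ["10.0.0.11", "10.0.0.12", "10.0.0.13"],
})
answers = [resolver.resolve("hec.edge.example.com") for _ in range(4)]
print(answers)  # ['10.0.0.11', '10.0.0.12', '10.0.0.13', '10.0.0.11']
```

Nothing in this rotation checks whether the returned address belongs to a healthy instance, so senders still need to handle errors and timeouts.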

| Benefits | Limitations |

|

|

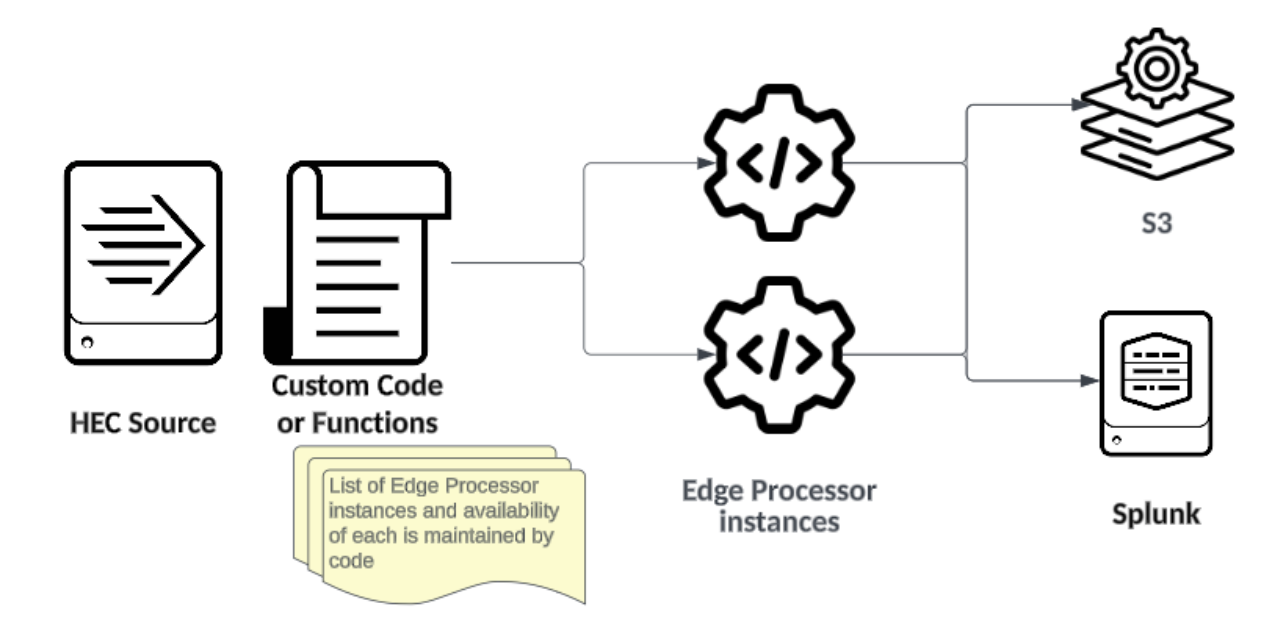

Client-side load balancing

In scenarios where HEC integration is scripted or access to the code is otherwise available, the responsibility for load balancing, queuing, retrying, and monitoring availability can be managed by the sending client or mechanism. This design decentralizes the complexity from the central network infrastructure to the individual sending entities. Each sender can dynamically adjust to downstream Edge Processor instance availability by implementing retry mechanisms that respond to errors and timeouts. The client implementation can be made as reliable as required.
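A minimal sketch of this idea in Python follows. It assumes that the caller supplies its own `send` function that raises an exception on errors or timeouts; the function name `send_with_failover`, the endpoint URLs, and the retry policy are all hypothetical choices for illustration, not a prescribed client design. Queuing and monitoring are left out to keep the example short.

```python
import random
from typing import Callable, Sequence


def send_with_failover(
    payload: bytes,
    endpoints: Sequence[str],
    send: Callable[[str, bytes], None],
    max_rounds: int = 2,
) -> str:
    """Try endpoints in random order until one accepts the payload.

    `send` must raise an exception on errors or timeouts.
    Returns the endpoint that accepted the payload.
    """
    last_error = None
    for _ in range(max_rounds):
        # Shuffle on every round so load spreads across healthy instances.
        for endpoint in random.sample(list(endpoints), k=len(endpoints)):
            try:
                send(endpoint, payload)
                return endpoint
            except Exception as exc:  # a real client would catch narrower errors
                last_error = exc
    raise RuntimeError("no Edge Processor instance accepted the payload") from last_error


# Usage with a fake transport in which one instance is unavailable.
def fake_send(endpoint: str, payload: bytes) -> None:
    if endpoint == "https://ep1.example.com:8088":
        raise ConnectionError("instance unavailable")


accepted_by = send_with_failover(
    b'{"event": "hello"}',
    ["https://ep1.example.com:8088", "https://ep2.example.com:8088"],
    fake_send,
)
print(accepted_by)  # https://ep2.example.com:8088
```

Passing the transport in as a function keeps the failover logic independent of any particular HTTP library and makes it easy to test.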

| Benefits | Limitations |

|

|

Syslog

In many cases, systems that use syslog can only specify a single server name or IP in their syslog configuration. There are several approaches to distributing or load balancing the syslog traffic among the Edge Processors to avoid a single point of failure. The guidance provided in this document as it relates to load balancing the syslog protocol is not specific to Edge Processor and is intentionally brief. Refer to About Splunk Validated Architectures or Splunk Connect for Syslog for more information. See https://datatracker.ietf.org/doc/html/rfc5424 for a relevant syslog request for comments (RFC).

DNS Round Robin

DNS Round Robin uses a single friendly name to represent a list of possible listeners. The same considerations as with DNS Round Robin for HEC apply to syslog. In particular, caching of DNS records can result in stalled data even when healthy instances are available.

Network Load Balancer

The same considerations as with Network load balancers for HEC apply to syslog with some notable differences:

- Syslog often relies on the sender's IP address, which is typically lost with a load balancer. If the sending IP address is needed, special configuration on the load balancer must be made.

- There is no specific syslog health check that can be used by a load balancer.

- BGP or other layer 3 load balancing strategies may be considered for very large or sensitive syslog environments. Consult with a Splunk Architect or Professional Services in this case.

For further guidance and best practices for load balancing syslog traffic, refer to the Syslog Validated Architecture.

Port Mapping

Similar to Splunk Heavy Forwarders using TCP or UDP listeners to receive syslog data, Edge Processor can be configured to open one or more arbitrary ports on which syslog data is received. RFC compliance, source, and sourcetype are assigned to each port and to each event arriving on that port. The data sources, use cases, pipeline structure, and destinations all need to be considered when choosing a syslog port assignment strategy as port assignment can directly affect pipeline structure and performance.

Considerations

There are several configuration patterns to consider when building your Edge Processor syslog topology.

While the following examples are the most common implementations, it's important to keep in mind that these port and pipeline configurations are not mutually exclusive and in practice the actual topology tends to evolve over time.

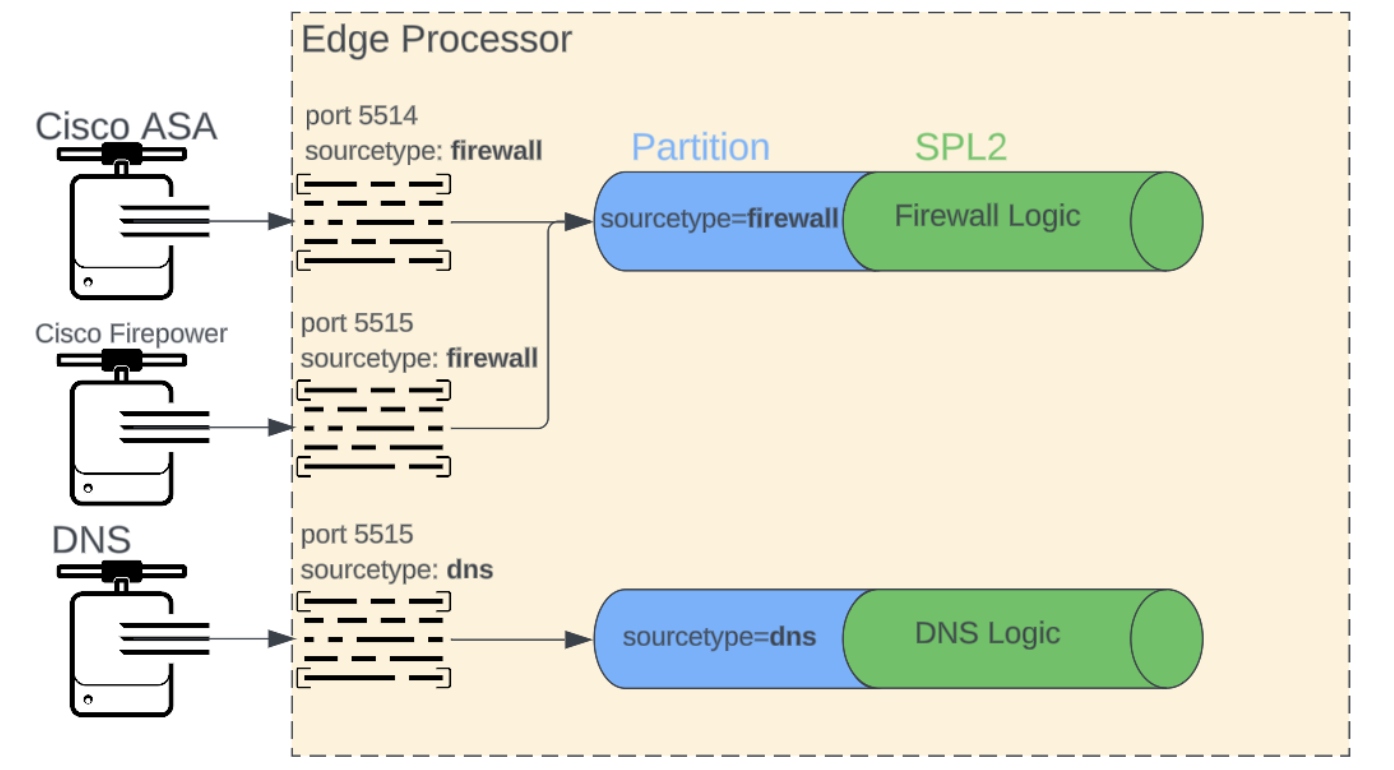

In this configuration, each unique device and sourcetype is assigned a specific port and each sourcetype is processed by a unique pipeline. This results in one pipeline per sourcetype, and multiple ports may supply data to the same pipeline when assigning the same sourcetype.

| Benefits | Limitations |

|

|

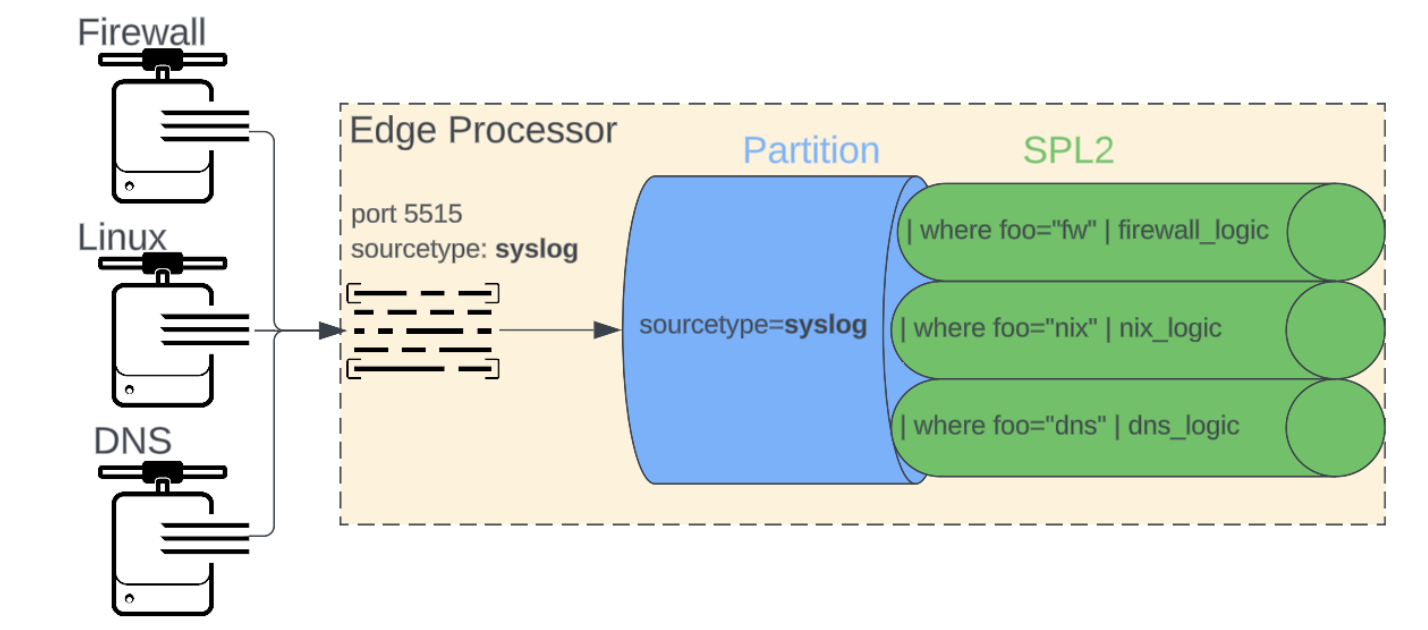

In this configuration, one or more syslog sources share a single sourcetype and a single pipeline is responsible for processing that single sourcetype into distinct sourcetype events.

| Benefits | Limitations |

|

|

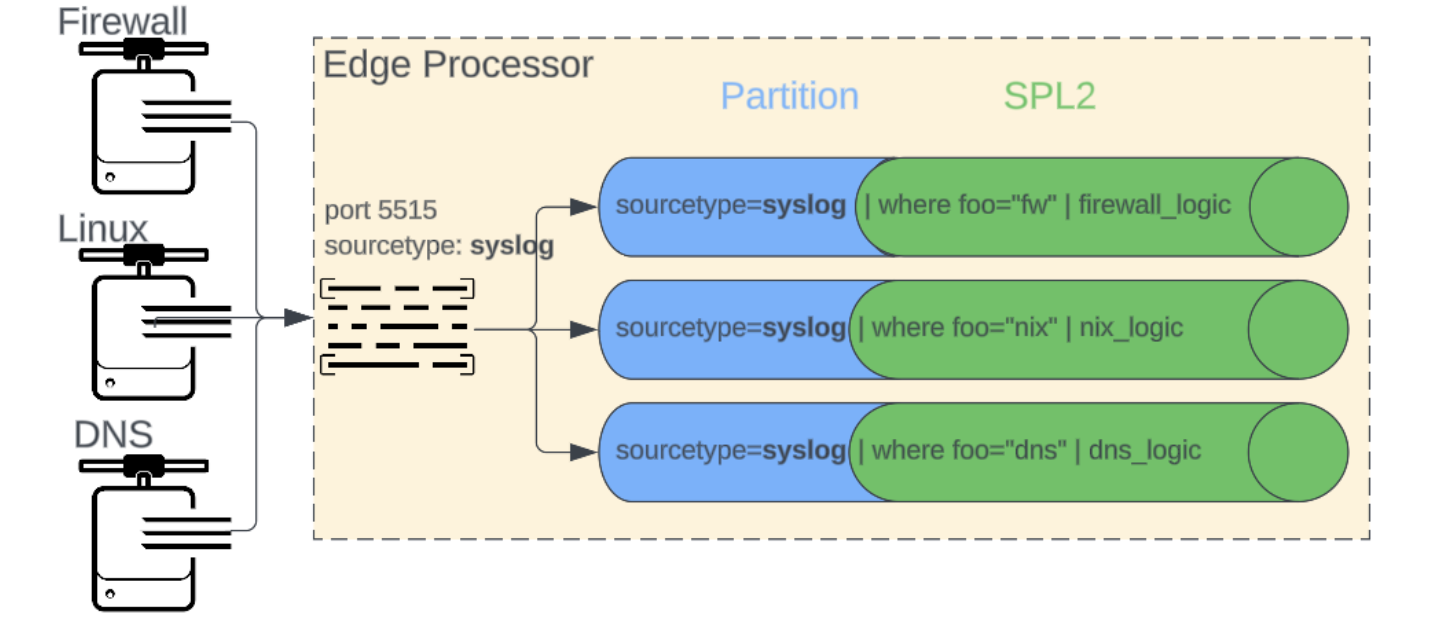

As with the prior configuration, one or more syslog sources share a single sourcetype, and the pipelines are responsible for detecting and processing distinct sourcetype events. However, in this configuration, a distinct pipeline is used for each unique sourcetype: the initial partition is the generic sourcetype, and an initial filter selects the events that belong to the specific sourcetype.
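The partition-then-filter selection described above can be modeled in a few lines. The Python sketch below is a conceptual illustration only and does not reflect how Edge Processor evaluates pipelines internally; the pipeline names, the generic sourcetype name, and the regular expressions are hypothetical.

```python
import re

# Hypothetical pipelines: each partitions on the generic sourcetype assigned
# to the shared port, then filters for one specific kind of event.
PIPELINES = {
    "firewall_pipeline": {
        "partition": "syslog_generic",
        "filter": re.compile(r"%ASA-\d-\d+"),
    },
    "sshd_pipeline": {
        "partition": "syslog_generic",
        "filter": re.compile(r"\bsshd\[\d+\]"),
    },
}


def matching_pipelines(sourcetype: str, raw: str) -> list[str]:
    """Return the names of pipelines whose partition and filter both match."""
    return [
        name
        for name, spec in PIPELINES.items()
        if spec["partition"] == sourcetype and spec["filter"].search(raw)
    ]


print(matching_pipelines("syslog_generic", "<166>%ASA-6-302013: Built outbound TCP connection"))
print(matching_pipelines("syslog_generic", "<38>host sshd[1234]: Accepted password for admin"))
```

In this model, an event that matches no filter is not selected by any pipeline, which is worth accounting for when designing the filters.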

| Benefits | Limitations |

|

|

Splunk Destination

When sending events to Splunk, both S2S and HEC are validated and supported protocols. The S2S protocol is the default and is configured as part of the first-time Edge Processor setup.

S2S - Splunk To Splunk

| Benefits | Limitations |

|

|

HEC - HTTP Event Collector

| Benefits | Limitations |

|

|

Asynchronous Load Balancing From Splunk Agents

To learn more about Splunk asynchronous load balancing see the Splunkd intermediate forwarding validated architecture.

Asynchronous load balancing spreads events more evenly across all available servers in the tcp output group of a forwarder. Traditionally the output servers are indexers, but the configuration is also valid when the output servers are Edge Processors. When configuring outputs from high-volume forwarders to Edge Processors, configuring asynchronous load balancing can improve throughput.

There are no specific asynchronous load balancing settings related to the output of Edge Processors being sent to Splunk.

Funnel with Caution/Indexer Starvation

To learn more about indexer starvation see the intermediate forwarding validated architecture.

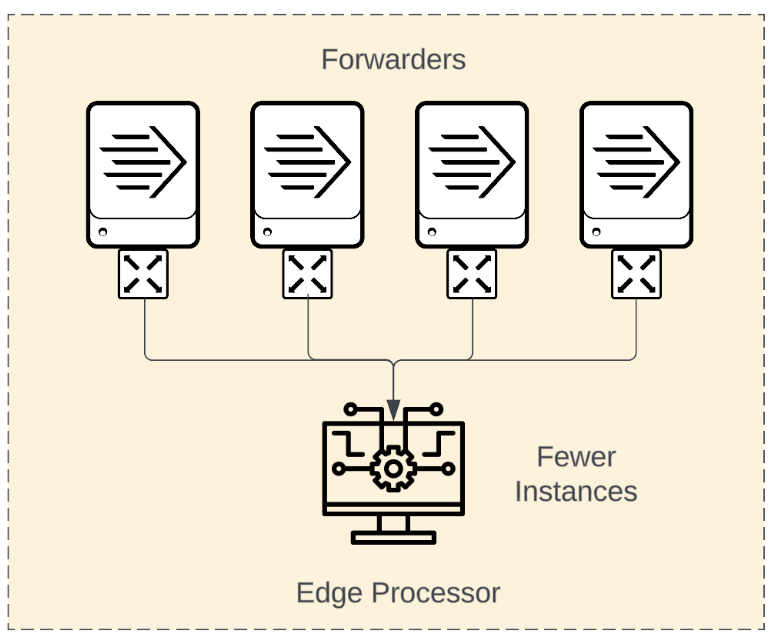

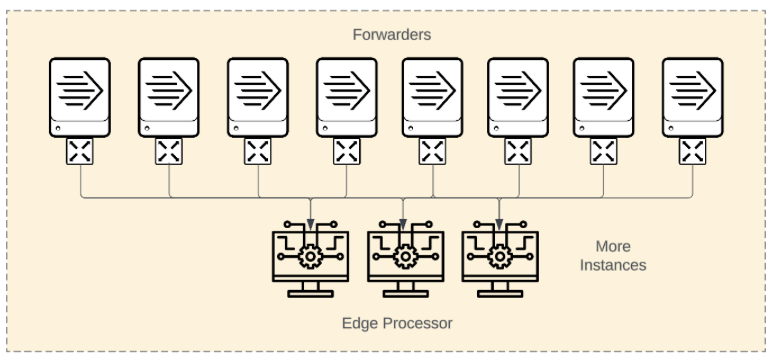

The use of Edge Processors introduces the same funneling concerns as when heavy forwarders are used as intermediate forwarders. Consolidation of forwarder connections and events into a small intermediate tier can introduce bottlenecks and performance degradation when compared to environments where intermediate forwarding is not used.

When building architectures that have large numbers of agents and indexers, consider scaling your Edge Processor infrastructure horizontally as a primary approach. Vertical scaling of the Edge Processor infrastructure will not result in a wider funnel.

Resiliency And Queueing

Pipelines, queuing, and data loss

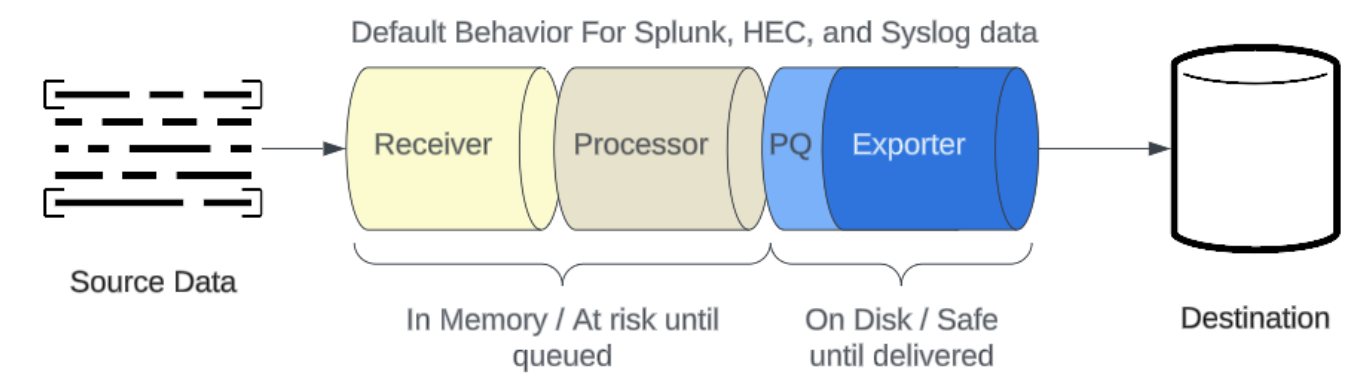

Data received by an Edge Processor is stored in memory while it passes through the processing pipeline. Once an event has left the processor and is delivered to the exporter, it is queued on disk. The event remains in the disk-backed queue until the exporter successfully sends it to the destination.

Data from a source is only ever processed by a single Edge Processor instance. Even in scenarios where events are eligible for multiple pipelines, any given event is only processed by a single Edge Processor instance. Edge Processor instances are unaware of other Edge Processor instances, and data is never synchronized or otherwise reconciled between instances. Because of this, the scope of potential data loss is the amount of unprocessed data held in memory on any given instance.

HTTP Event Collector Acknowledgement

The HEC data input for Edge Processor supports acknowledgement of events. From the client perspective, this feature operates the same as HEC Acknowledgement on Splunk. However, whereas the Splunk implementation of HEC Ack can monitor the true indexing status, Edge Processor will consider the event acknowledged successfully once the event has been received by the instance's exporter queue. It may be some time between the delivery of the event to the queue and the receipt of the event by the destination index, so sending agents may register the event as delivered before the data is indexed or searchable.
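From the client side, checking acknowledgement status comes down to reading a status map keyed by ack ID. The Python sketch below assumes the response shape used by Splunk HEC acknowledgement queries, a JSON object with an `acks` map of ack ID to boolean; the function name `unacknowledged` and the sample body are hypothetical. With Edge Processor, a `true` status means only that the event reached the exporter queue, not that it has been indexed.

```python
import json


def unacknowledged(ack_response_body: str, requested_ids: list[int]) -> list[int]:
    """Return the ack IDs that the receiver has not yet confirmed."""
    statuses = json.loads(ack_response_body).get("acks", {})
    # JSON object keys are strings, so look up each ID by its string form.
    return [ack_id for ack_id in requested_ids if not statuses.get(str(ack_id), False)]


body = '{"acks": {"0": true, "1": false, "2": true}}'  # sample response body
print(unacknowledged(body, [0, 1, 2]))  # [1]
```

A client would typically poll again for the IDs that are returned and resend the corresponding events if they stay unacknowledged past its own timeout.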

| Benefits | Limitations |

|

|

Size and scaling

Monitoring

There are many dimensions available for monitoring an Edge Processor and its pipelines. You can review all of the various metrics available using mcatalog. The list of metrics will grow over time so it's best to review all available metrics and dimensions in your environment:

| mcatalog values(metric_name) WHERE index=_metrics AND sourcetype="edge-metrics"

No single metric can tell you when it's time to scale up or down. Instead, monitor key metrics across your Edge Processors to establish a baseline of expected usage. In particular:

- Throughput in and out

- Event counts in and out

- Exporter queue size

- CPU and memory consumption

- Unique sourcetypes and agents sending data

Additionally, consider measuring event lag by comparing index time to event time as a general practice for monitoring the health of getting data in (GDI), irrespective of the Edge Processor.
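The lag calculation itself is a simple subtraction. The Python sketch below uses hypothetical field names `index_time` and `event_time` and made-up epoch timestamps; in Splunk searches, the corresponding fields are `_indextime` and `_time`.

```python
from statistics import median


def lag_seconds(events: list[dict]) -> list[float]:
    """Return index time minus event time, in seconds, for each event."""
    return [e["index_time"] - e["event_time"] for e in events]


# Made-up sample timestamps in epoch seconds.
sample = [
    {"event_time": 1700000000.0, "index_time": 1700000002.5},
    {"event_time": 1700000010.0, "index_time": 1700000011.0},
    {"event_time": 1700000020.0, "index_time": 1700000050.0},
]
lags = lag_seconds(sample)
print(median(lags), max(lags))  # 2.5 30.0
```

Tracking both a typical value, such as the median, and the maximum helps separate steady-state latency from occasional backlog.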

Scale up/down

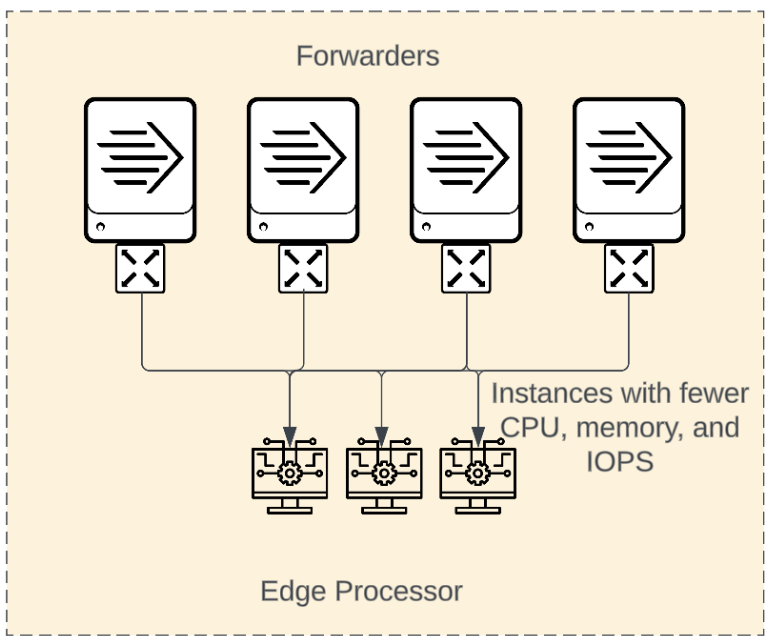

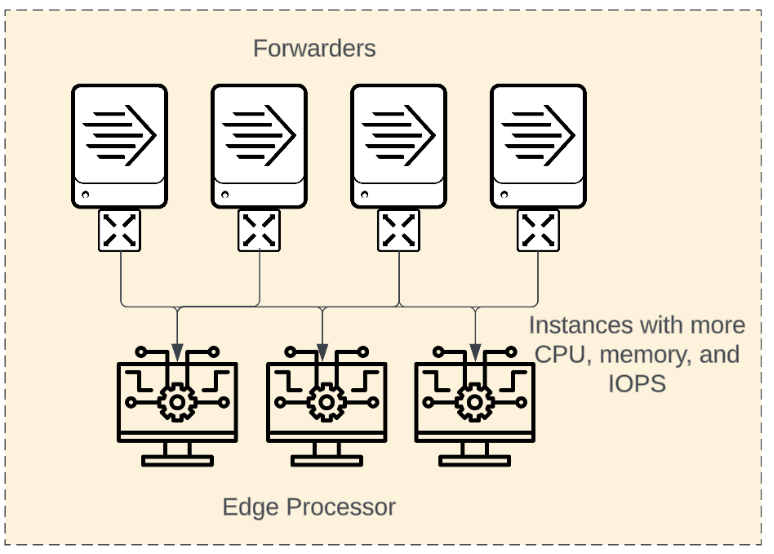

As is the case with most technology, Edge Processor instances scale both vertically and horizontally depending on the circumstances, and scaling one way versus the other can lead to different outcomes.

As data processing requirements change, you'll have to decide whether to scale by altering the available resources for your Edge Processor instances or by altering the number of instances doing the processing. The following are some common scenarios and the most common scale result:

| Scenario Examples | Scale Example |
| --- | --- |
| Number of data sending clients increases. Need to improve indexer event distribution and avoid funneling. Spread out persistent queues and require less disk space per instance. Improve resiliency and reduce the impact of instance failures. Can address data resiliency and reporting requirements. | Scale out |
| Data pipeline complexity increases, such as more complex regular expressions, multi-value evals and mvexpand, branched pipelines, or more destinations. Significant event size or event volume increases. Long persistent queue requirements. | Scale up |

For most purposes, consider any substantial change to any of the following as cause to evaluate scale:

- Event volume, both the number and size of events.

- Number of forwarders or data sources.

- Number and complexity of pipelines.

- Change in target destinations.

- Risk tolerance.

Any change to these factors will play a role in the overall resource consumption and processing speed of Edge Processor instances.


This documentation applies to the following versions of Splunk® Validated Architectures: current
