Ingest Actions for Splunk platform

This Splunk validated architecture (SVA) applies to Splunk Cloud Platform and Splunk Enterprise products.

Initial publication: March 23, 2023

Last reviewed: April 9, 2025

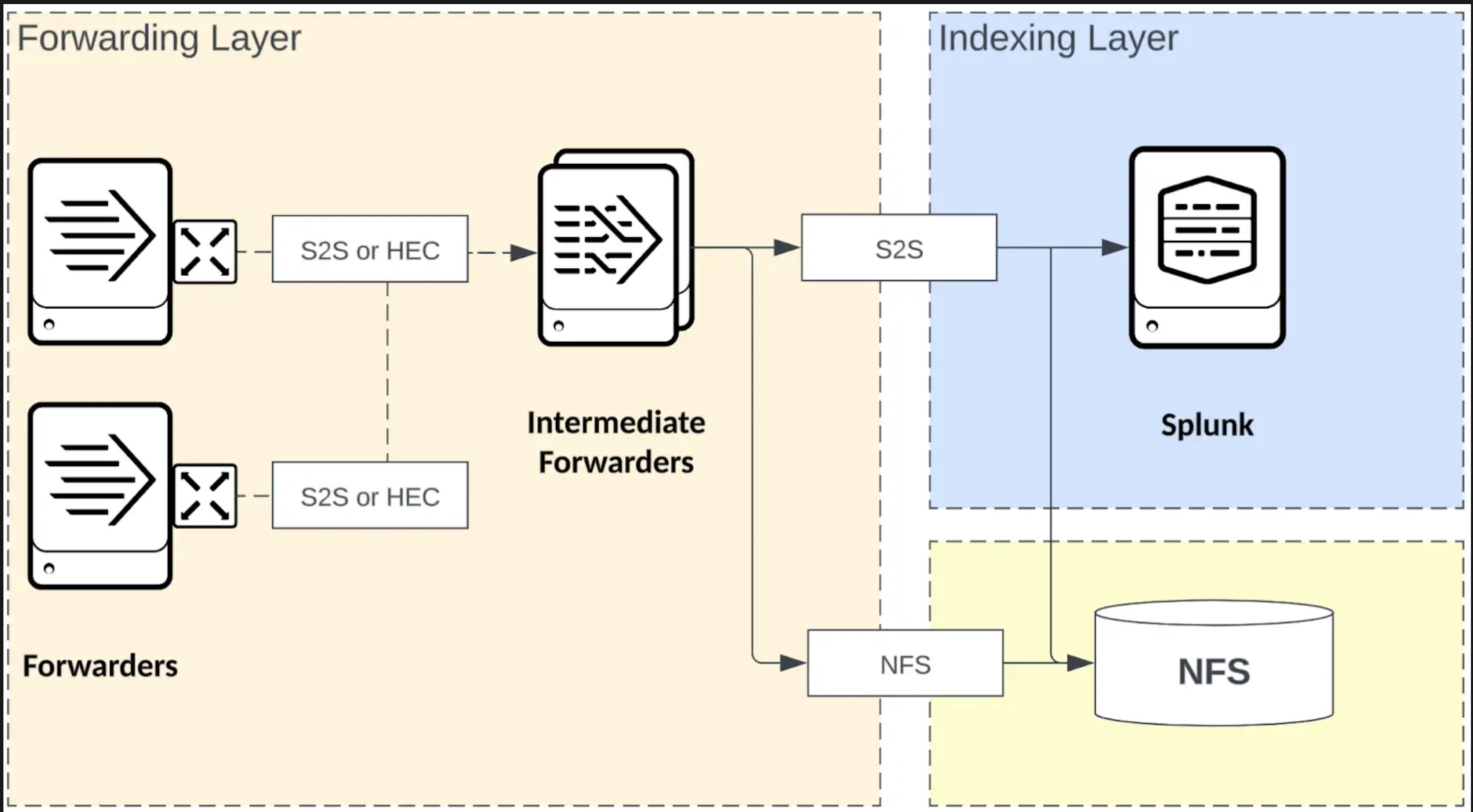

Architecture diagram

Benefits

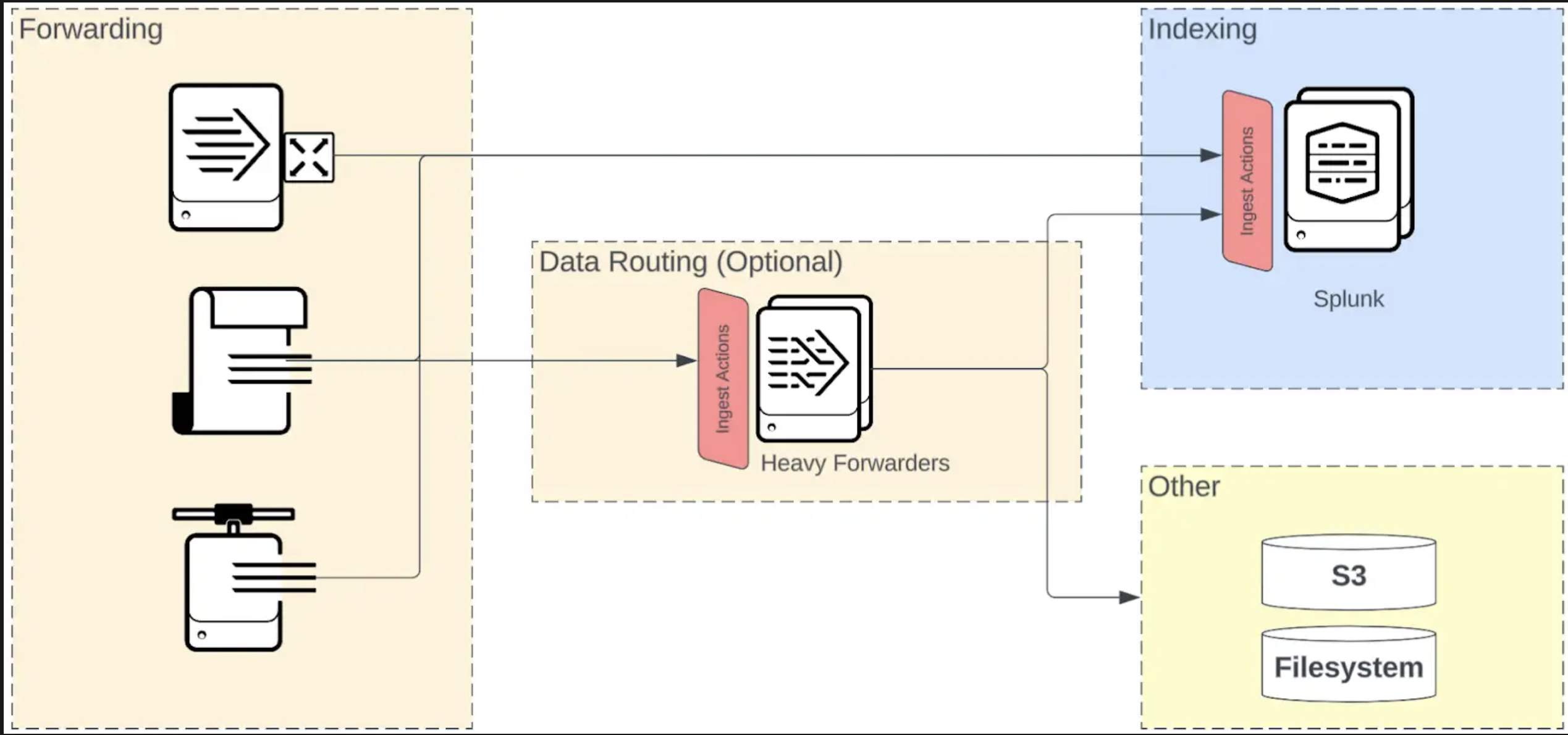

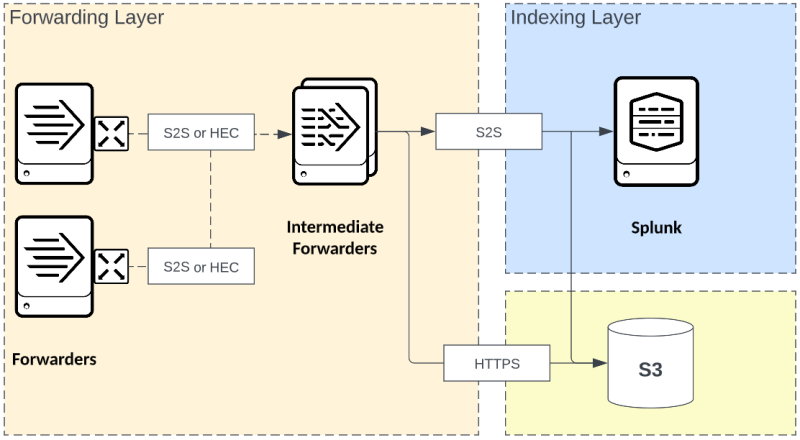

Ingest actions represents a number of capabilities related to pre-index event processing and data routing. You can use ingest actions both at the Splunk platform indexing layer and at the intermediate forwarding layer when heavyweight forwarders are used.

This document describes three primary features of ingest actions: rulesets, routing to S3, and the ingest actions user interface. Each feature has its own advantages, but the overall benefit is more control and flexibility over data before it is permanently stored. Customers might want to exercise this additional control to do the following:

- Mask, redact, remove, or otherwise change raw data before indexing

- Tag, add, lookup, or otherwise augment raw data before indexing

- Filter entire events from being indexed

- Have tighter control over which indexes data is sent to

- Send some or all data to third-party storage, either independently of or concurrently with data going into the Splunk platform

While the use of pre-index event processing has implementation and search-time considerations, the data routing topologies documented throughout the validated architectures remain largely the same with or without ingest actions. This includes high availability and scale decisions. Caveats and considerations for these topologies are described in the following sections.

The configuration of ingest actions uses the same props.conf and transforms.conf files described in the Splunk product documentation and in other validated architectures. However, how those configurations are distributed varies with the specific implementation. Those distribution methods are described in the following sections.

Limitations

- There are specific restrictions and requirements when ingest actions is used to route data to S3 depending on whether those ingest actions are executed in Splunk Cloud Platform versus a customer-managed environment.

- The addition of event processing can add significant overhead to the processing pipelines both on heavyweight forwarders and indexers. Take care to implement efficient and concise rules, especially with high volume data.

- Modifying data before indexing can break add-ons and other knowledge objects that rely on data matching specific shapes or patterns. Evaluate search-time knowledge for any sourcetypes that you intend to modify with ingest actions to ensure consistency. This might require modifying search-time knowledge to work with the new data formats that ingest actions produce.

- Management, maintenance, and distribution of ingest actions depend specifically on where in your topology those actions are implemented.

- Ingest actions rulesets are processed differently from traditional transforms, and you need to understand that difference for rules to execute as intended.

Each of the main functions of ingest actions has specific benefits, limitations, and concepts worth exploring and understanding. Those details are provided in the following sections.

Rulesets

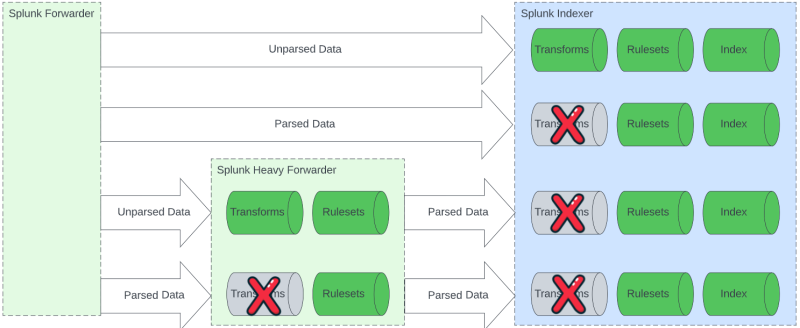

Ingest actions adds RULESET processing to the Splunk heavy forwarder and indexer processing pipelines. Rulesets add a layer of capability to the pipeline so that data that has already been parsed can be re-parsed. TRANSFORMS can only affect unparsed data and are processed at most once per pipeline, whereas RULESETS can be used at each stage of processing. In most other ways, RULESETS function like TRANSFORMS. Administrators use TRANSFORMS and RULESETS to apply business logic to data before indexing.

It's important to understand how TRANSFORMS and RULESETS work together and their order of operations. TRANSFORMS can be processed at most one time per event and RULESETS can process data at any point along the path.
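The processing rule above can be modeled in a few lines. The following Python sketch is a simplified, hypothetical model, not Splunk code: each instance applies its TRANSFORMS only to events that have not been parsed yet, so transforms act at most once per event, while its RULESETS run on every instance the event passes through. It also assumes that, within one instance, transforms run before rulesets, which is consistent with the original sourcetype behavior described later on this page. The `Event`, `Instance`, and `tag` names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Event:
    raw: str
    sourcetype: str
    parsed: bool = False  # set by the first instance that parses the event
    history: List[str] = field(default_factory=list)  # which rules touched it


Rule = Callable[[Event], None]


@dataclass
class Instance:
    """Hypothetical Splunk instance (heavy forwarder or indexer)."""
    name: str
    transforms: List[Rule]
    rulesets: List[Rule]

    def process(self, event: Event) -> Event:
        # TRANSFORMS only affect unparsed data, so they run at most once per event.
        if not event.parsed:
            for transform in self.transforms:
                transform(event)
            event.parsed = True
        # RULESETS run at every instance, whether or not the event is parsed.
        for ruleset in self.rulesets:
            ruleset(event)
        return event


def tag(label: str) -> Rule:
    """Build a rule that records that it ran."""
    def rule(event: Event) -> None:
        event.history.append(label)
    return rule


heavy_forwarder = Instance("hf", [tag("hf:transform")], [tag("hf:ruleset")])
indexer = Instance("idx", [tag("idx:transform")], [tag("idx:ruleset")])

event = Event(raw="user=alice action=login", sourcetype="foo")
for instance in (heavy_forwarder, indexer):
    instance.process(event)

print(event.history)  # ['hf:transform', 'hf:ruleset', 'idx:ruleset']
```

In this model the indexer's transform never runs because the heavy forwarder already parsed the event, while the rulesets on both tiers run.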

Benefits

- Support for event processing throughout the data routing pipeline. Different processing can be applied to events at multiple stages of data routing. The ability to modify data repeatedly is useful when tagging data or protecting data before it leaves specific data domains.

- Can execute event processing on parsed data. Transforms are limited to processing on unparsed data only. Rulesets allow for processing both unparsed and parsed data.

- Rulesets are defined in props.conf and transforms.conf and are compatible with existing configuration file management and distribution.

- Gives Splunk administrators more control over data before it is indexed. Rulesets can act as a traffic cop that controls whether specific data is sent to specific indexes.

Limitations

- Understanding transforms and ruleset processing order and precedence is critical to ensuring data is modified as intended and in the right order.

- Before modifying data using either rulesets or transforms, you must ensure that search-time artifacts are compatible with the format of data resulting from pre-index transformations. Modifying source data without evaluating search-time artifacts can result in broken dashboards, reports, alerts, and searches. This is also true for Splunkbase add-ons and apps.

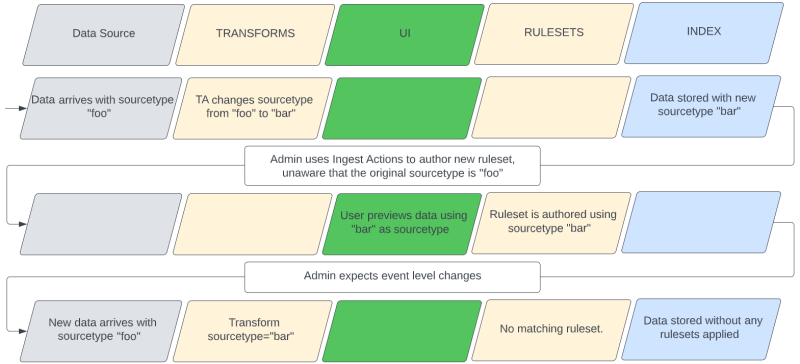

- The scope of ingest actions rulesets depends on the original sourcetype. If a transform has modified the original sourcetype, rulesets must be scoped using the original sourcetype, not the sourcetype produced by the transform.

Understanding the original sourcetype limitation

The order of operations noted previously is important for understanding how a transform can affect the implementation of a ruleset. A transform can modify a sourcetype so that, when you preview data for ruleset authoring, the resulting events carry a sourcetype that differs from the original data. This can cause confusion when authoring rulesets in the UI, because the ruleset must be authored using the original sourcetype while the preview shows the resultant sourcetype.

In the example in the preceding image, the ruleset should use the original "foo" sourcetype. The UI previews data that has already been affected by the prior transform rather than the original data, which is the actual target.

You can mitigate this situation in several ways:

- Use live preview to capture real-time original events. Live previewed events will show event data before any transforms have been applied. This requires that data is actively being received by the Ingest Actions host.

- Preview and author the rulesets using the resultant sourcetype, but modify the configuration produced by ingest actions to use the original sourcetype.

- Upload a file containing the sample events and explicitly select the original sourcetype, rather than using search-based event sampling.

- Implement the ruleset on a different processing tier. The sourcetype restriction applies only if the transforms and rulesets run on the same Splunk platform instance. For example, an intermediate tier could change the original sourcetype and then send the data to another tier, where a final ruleset targets the sourcetype produced by the intermediate transform.
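To make the limitation and the last mitigation concrete, the following Python sketch models one instance as: resolve the ruleset scope from the sourcetype the event arrived with, apply transforms, then apply the matching ruleset. This is a simplified, hypothetical model rather than Splunk's implementation; `rename_foo_to_bar`, `drop_debug`, and `run_instance` are illustrative names, and "foo" and "bar" follow the example described above.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Event:
    raw: str
    sourcetype: str
    dropped: bool = False


Ruleset = Callable[[Event], None]


def rename_foo_to_bar(event: Event) -> None:
    """Hypothetical index-time transform that rewrites the sourcetype."""
    if event.sourcetype == "foo":
        event.sourcetype = "bar"


def drop_debug(event: Event) -> None:
    """Hypothetical ruleset action that filters DEBUG events."""
    if "DEBUG" in event.raw:
        event.dropped = True


def run_instance(event: Event, rulesets: Dict[str, Ruleset], apply_transform: bool) -> Event:
    # The ruleset scope is resolved from the sourcetype the event arrived with,
    # before this instance's transforms can change it.
    arrival_sourcetype = event.sourcetype
    if apply_transform:
        rename_foo_to_bar(event)
    ruleset = rulesets.get(arrival_sourcetype)
    if ruleset is not None:
        ruleset(event)
    return event


# Same instance, scoped to the resultant sourcetype shown in preview: no match.
wrong = run_instance(Event("DEBUG cache miss", "foo"), {"bar": drop_debug}, apply_transform=True)
# Same instance, scoped to the original sourcetype: match.
right = run_instance(Event("DEBUG cache miss", "foo"), {"foo": drop_debug}, apply_transform=True)
# Two tiers: the intermediate tier renames; the next tier's ruleset targets "bar".
intermediate = run_instance(Event("DEBUG cache miss", "foo"), {}, apply_transform=True)
final = run_instance(intermediate, {"bar": drop_debug}, apply_transform=False)

print(wrong.dropped, right.dropped, final.dropped)  # False True True
```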

Routing data to S3

Routing data to S3 is similar to routing data to syslog or TCP. Whereas those methods are fairly straightforward, an S3 connection has more configuration options to consider:

- Network distance from S3, and the latency it adds, limits overall throughput, which can cause the output queue to fill.

- In the case of a Splunk Cloud Platform deployment, S3 buckets must be in the same region as the deployment.

- You must choose between the IAM and secret key authentication methods.

- Objects written to S3 can use either raw JSON or compressed formats.

- You can use size-based or time-based batching. Optimal settings depend on the shape of the data.

The default settings attempt to strike a balance between performance and risk. Batches that are too large or too small can result in poor performance, and data that is held in the queue for too long is at risk of being lost.
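The trade-off between batch size and batch age can be sketched as a buffer that flushes on whichever threshold is reached first. The following Python class is a hypothetical illustration, not the Splunk RFS output implementation; `max_bytes` and `max_age_seconds` are illustrative names, not Splunk settings. An injectable clock keeps the example deterministic.

```python
import time
from typing import Callable, List, Optional


class Batcher:
    """Hypothetical batcher that flushes on a size or age threshold."""

    def __init__(self, max_bytes: int, max_age_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_bytes = max_bytes
        self.max_age_seconds = max_age_seconds
        self.clock = clock
        self.buffer: List[bytes] = []
        self.size = 0
        self.opened_at: Optional[float] = None
        self.flushed: List[List[bytes]] = []  # stands in for uploaded objects

    def add(self, event: bytes) -> None:
        if self.opened_at is None:
            self.opened_at = self.clock()  # a new batch starts with its first event
        self.buffer.append(event)
        self.size += len(event)
        self._maybe_flush()

    def tick(self) -> None:
        # Call periodically so a quiet source still flushes on age.
        self._maybe_flush()

    def _maybe_flush(self) -> None:
        if not self.buffer or self.opened_at is None:
            return
        too_big = self.size >= self.max_bytes
        too_old = self.clock() - self.opened_at >= self.max_age_seconds
        if too_big or too_old:
            self.flushed.append(self.buffer)
            self.buffer, self.size, self.opened_at = [], 0, None


now = [0.0]
batcher = Batcher(max_bytes=10, max_age_seconds=30, clock=lambda: now[0])
batcher.add(b"12345")  # 5 bytes, below both thresholds
batcher.add(b"67890")  # 10 bytes, size flush
batcher.add(b"abc")    # opens a new batch at t=0
now[0] = 31.0
batcher.tick()         # age flush
print([len(batch) for batch in batcher.flushed])  # [2, 1]
```

A size threshold alone can leave a low-volume source's data sitting in the buffer indefinitely, which is the loss risk described above; the age threshold bounds how long any event waits.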

Sizing and throughput considerations

If there is sufficient local CPU, memory, and disk performance, any given Amazon S3 RFS output thread can reach write speeds of 90MBps, which is roughly 7.5TB per day. Put another way, when Amazon S3 performance is the limiting factor, 90MBps is the peak output per thread. When the S3 destination is not Amazon S3, such as a customer-managed one, that infrastructure dictates overall performance. If there are sufficient local resources, you can use multiple RFS output threads to increase overall throughput. See rfs.provider.max_workers in limits.conf. By default there are 4 threads per pipeline.
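The per-thread figures above can be checked with simple arithmetic. The following Python sketch converts a sustained rate to daily volume in decimal units (1 TB = 1,000,000 MB): 90MBps is about 7.8 TB per day, and if MB is read as MiB the result is about 7.4 TiB, so both readings bracket the "roughly 7.5TB" figure. It treats 90MBps as a per-thread ceiling and assumes threads scale linearly, which holds only when local resources and the destination keep up; `daily_volume_tb` and `threads_needed` are hypothetical helpers.

```python
import math

SECONDS_PER_DAY = 86_400
MB_PER_TB = 1_000_000  # decimal units


def daily_volume_tb(mb_per_second: float, threads: int = 1) -> float:
    """Daily volume for a sustained rate, assuming threads scale linearly."""
    return mb_per_second * threads * SECONDS_PER_DAY / MB_PER_TB


def threads_needed(target_tb_per_day: float, peak_mb_per_second: float = 90.0) -> int:
    """Smallest thread count whose combined peak rate covers the target volume."""
    return math.ceil(target_tb_per_day / daily_volume_tb(peak_mb_per_second))


print(round(daily_volume_tb(90), 3))             # 7.776
print(round(daily_volume_tb(90, threads=4), 3))  # 31.104
print(threads_needed(20))                        # 3
```

With the default of 4 threads per pipeline, this gives a theoretical ceiling of about 31 TB per day, reachable only when nothing else is the bottleneck.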

Routing to NFS

Configuring filesystem outputs that are NFS mounts adds complexity that can affect data ingestion. See Configuring file system destinations with ingest actions for more information.

- Latency: NFS read/write performance can be affected by network latency, which impacts data access times. Ensure that network paths between the Splunk instances and NFS servers are optimized for minimal latency.

- Performance: The network and hardware performance of the NFS are the primary limiting factors for how much data can be ingested and sent to a given destination. Monitoring and managing this throughput is crucial to avoid queueing and data latency. Manage blocked outputs for each Splunk instance configured to send to multiple destinations.

- Consider enabling dropEventsOnUploadError for any non-critical outputs, so that upload errors cause events to be dropped rather than blocking the output. You can enable it in the user interface or manually in outputs.conf.

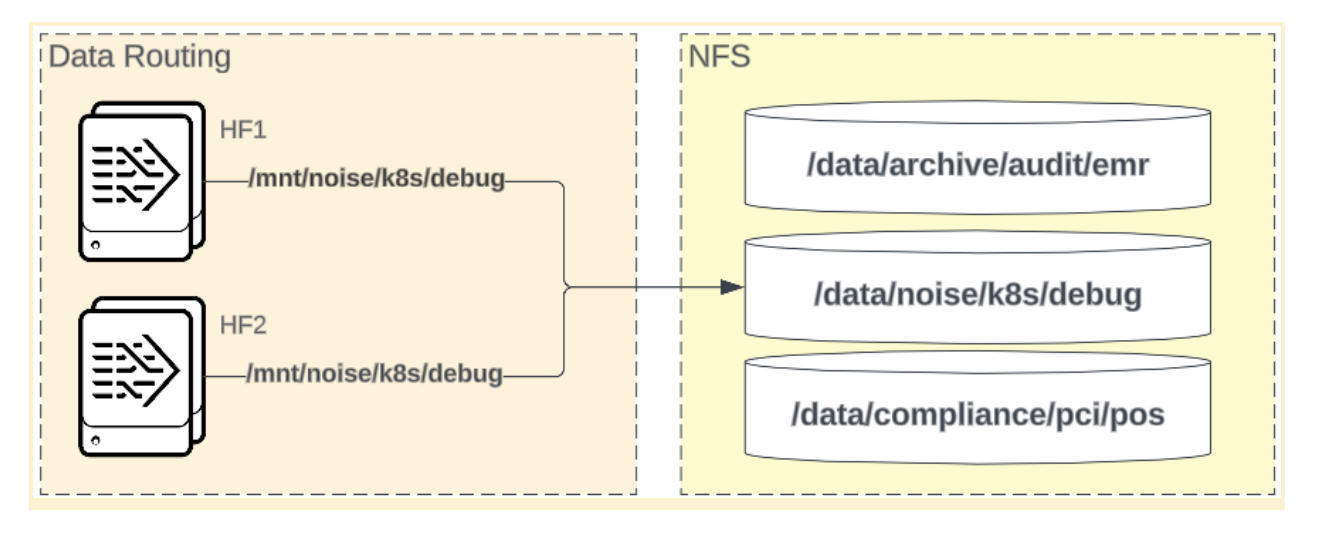

- NFS architecture model: Deciding between shared and dedicated NFS volumes is a foundational consideration. Each approach has its own set of benefits and limitations, which are listed further down this page.

- Data Format and Partitioning: Choose the format and partitioning of data stored on the NFS according to its intended use cases, to reduce later integration and reingestion effort. See an example in Using file system destinations with file system as a buffer.

Sizing and throughput considerations

As with S3 destinations that are customer managed or otherwise not hosted by Amazon, the total possible throughput to NFS destinations is slower than to other local or remote resources. If the Splunk server can process data faster than the NFS can accept it, the Splunk server eventually queues events and can block or drop them. If the NFS can accept data faster than the Splunk server sends it, you can scale and manage the number of NFS output threads with the same rfs.provider.max_workers setting in limits.conf, as with S3 destinations.
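The relationship between ingest rate, thread count, and NFS capacity can be sketched with a simple rate model. The following Python example treats output as one queue that fills at the difference between the ingest rate and the drain rate, where the drain rate is the per-thread rate multiplied by the thread count but capped by what the NFS server can absorb. All rates, the queue size, and the helper names are hypothetical, and real Splunk queueing and blocking behavior is more involved.

```python
from typing import Optional


def drain_rate(per_thread_mbps: float, threads: int, nfs_limit_mbps: float) -> float:
    """Output rate: thread capacity, capped by what the NFS server can absorb."""
    return min(per_thread_mbps * threads, nfs_limit_mbps)


def seconds_until_queue_full(ingest_mbps: float, drain_mbps: float,
                             queue_mb: float) -> Optional[float]:
    """Time until the output queue fills, or None if output keeps up with input."""
    growth = ingest_mbps - drain_mbps
    if growth <= 0:
        return None
    return queue_mb / growth


# NFS has headroom: adding threads stops queue growth.
print(seconds_until_queue_full(100, drain_rate(20, 4, nfs_limit_mbps=200), 500))  # 25.0
print(seconds_until_queue_full(100, drain_rate(20, 5, nfs_limit_mbps=200), 500))  # None
# NFS is the bottleneck: more threads do not help.
print(seconds_until_queue_full(100, drain_rate(20, 8, nfs_limit_mbps=60), 500))   # 12.5
```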

NFS architecture models

See the following NFS architecture models.

Shared NFS Volume Model

In the shared NFS volume model, multiple Splunk instances are configured to store their data on a single NFS volume, consolidating storage management and simplifying certain operational tasks.

Benefits:

- Simplified Management: Centralized storage can improve backup and disaster recovery processes.

- Resource Efficiency: Reduces the need to manage multiple storage volumes.

Limitations:

- Resource Contention: Increased risk of performance bottlenecks when multiple instances access the volume simultaneously.

- Data Integrity Risks: Configure carefully to prevent data corruption and ensure reliability. See partitioning guidance on this page.

Considerations:

- Evaluate the NFS server's capacity to handle concurrent access and implement access controls.

- Plan for potential growth in data volume and its impact on performance.

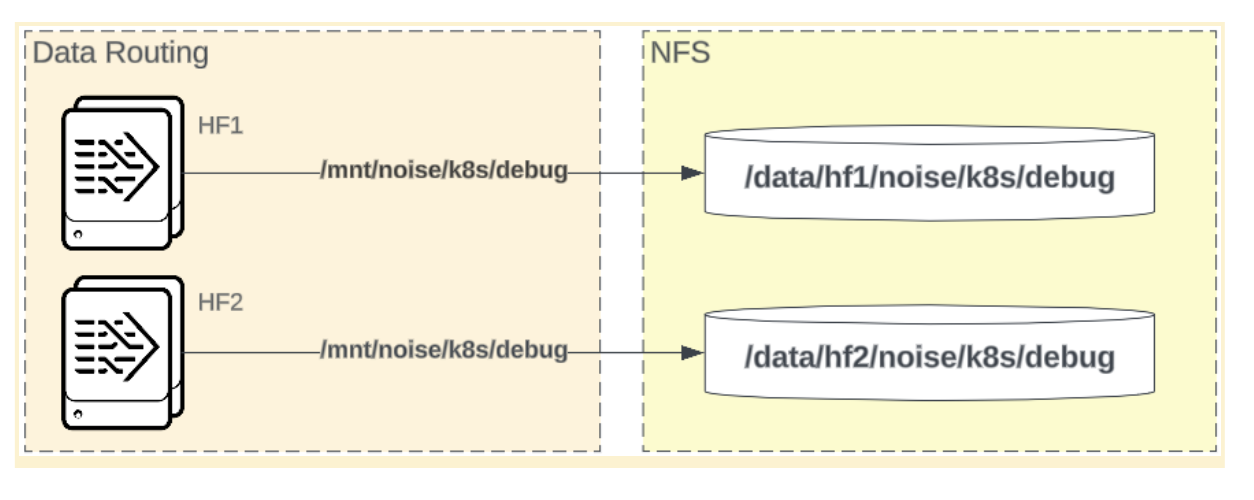

Dedicated NFS Volume Model

In the dedicated NFS volume model, each Splunk instance is allocated its own NFS volume, which is designed to optimize performance and minimize contention.

Benefits:

- Performance Optimization: It reduces the risk of resource contention, improving data access speeds.

- Tailored Configuration: It allows for specific performance tuning for each instance or class of instance.

Limitations:

- Complex Management: Managing a separate volume for each instance increases operational complexity.

- Resource Allocation: There is a higher demand on infrastructure resources to maintain multiple volumes.

Considerations:

- Assess infrastructure requirements and ensure that sufficient resources are available.

- Develop a management strategy to handle multiple volumes and their associated configurations.

Partitioning and format considerations (S3 and Filesystem/NFS)

Partitioning is a critical component of data management whether you use Splunk, S3, or NFS. Effective partitioning strategies help prevent conflicts and improve data retrieval efficiency. When sending events to S3 or NFS, consider the subsequent use case of the stored data when choosing the stored format and partitioning scheme.

Collision prevention

All stored files will include a unique identifier (peer_guid) to prevent filename collisions:

/year={YYYY}/month={MM}/day={DD}/events_{lt}_{et}_{field_create_epoch}_{32b_seq_num}_{peer_guid}
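To see why peer_guid matters, the following Python sketch fills in the template above for two instances and parses the `key=value` partition segments back out, as a downstream reader might. The placeholder values are hypothetical, and the sketch makes no assumption about what lt, et, or field_create_epoch contain beyond passing them through.

```python
from datetime import datetime, timezone
from typing import Dict


def object_key(day: datetime, lt: str, et: str, field_create_epoch: str,
               seq_num: int, peer_guid: str) -> str:
    """Fill the documented path template; field values are passed through as-is."""
    return (f"/year={day:%Y}/month={day:%m}/day={day:%d}/"
            f"events_{lt}_{et}_{field_create_epoch}_{seq_num}_{peer_guid}")


def partitions(key: str) -> Dict[str, str]:
    """Read key=value directory segments from an object key."""
    return dict(seg.split("=", 1) for seg in key.strip("/").split("/") if "=" in seg)


day = datetime(2025, 4, 9, tzinfo=timezone.utc)
# Two instances writing the same time window with the same sequence number.
key_a = object_key(day, "1744200000", "1744199940", "1744200001", 7, "PEER-GUID-A")
key_b = object_key(day, "1744200000", "1744199940", "1744200001", 7, "PEER-GUID-B")

print(key_a != key_b)     # True
print(partitions(key_a))  # {'year': '2025', 'month': '04', 'day': '09'}
```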

Sourcetype partition

In most cases, including the sourcetype as a partition key is recommended, particularly when the stored files will be used with Federated Search for S3. It is also recommended any time events might be retrieved and replayed, because sourcetyping is integral to Splunk data management.

Sourcetype partitioning is not available as an option for NFS.

Format

Data stored remotely can be formatted as raw, ndjson, or json. Subsequent systems that interact with this data might have specific requirements for the stored format. For example, Federated Search for S3 requires stored data to be in ndjson (newline-delimited JSON) to return results properly. Carefully evaluate and test these formats.
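The difference between ndjson and a single JSON document is easy to see in code. The following Python sketch serializes the same hypothetical events both ways using only the standard `json` module; the field names are illustrative and are not the schema that ingest actions writes.

```python
import json
from typing import Any, Dict, List

Record = Dict[str, Any]


def to_ndjson(events: List[Record]) -> str:
    """One compact JSON object per line (newline-delimited JSON)."""
    return "".join(json.dumps(e, separators=(",", ":")) + "\n" for e in events)


def to_json_document(events: List[Record]) -> str:
    """A single JSON array; a reader must parse the whole file at once."""
    return json.dumps(events, separators=(",", ":"))


def read_ndjson(text: str) -> List[Record]:
    """Parse each non-empty line independently."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


events = [{"sourcetype": "foo", "raw": "a=1"}, {"sourcetype": "foo", "raw": "a=2"}]
ndjson_text = to_ndjson(events)
print(ndjson_text, end="")
# {"sourcetype":"foo","raw":"a=1"}
# {"sourcetype":"foo","raw":"a=2"}
print(read_ndjson(ndjson_text) == events)  # True
print(to_json_document(events))  # [{"sourcetype":"foo","raw":"a=1"},{"sourcetype":"foo","raw":"a=2"}]
```

Because each ndjson line stands alone, a line-oriented reader can process records one at a time, whereas the JSON array form must be parsed as a whole.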

User interface and management topology

A major component of ingest actions is a user interface for crafting event processing rules. The interface provides a mechanism for filtering, masking, and routing data. While ingest actions rulesets provide a distinct processing pipeline element, the ingest actions interface is an assistive feature that helps administrators author common but potentially complex configurations.

Benefits

- Enables users to implement logic using a UI rather than only configuration files

- Live preview of ruleset logic and effect on data, leading to faster iteration and implementation time

- Easier to interpret data transformation workflow

- Provides copy/paste configuration

- Compatible with all Splunk platform topologies

Limitations

- Data preview and ruleset authoring are limited to sourcetype-based configuration

- Data preview and authoring tools might provide an incongruent view between live data and indexed data (see Understanding the original sourcetype limitation)

- Only a subset of capabilities are represented in the user interface: routing, masking, and filtering. Configuration files can be used for more complex event processing.

- The UI is not case sensitive when previewing data, but the resultant configuration files are case sensitive. It's critical to author ingest actions rules in the UI using the proper case.

- All rulesets created by the UI live in the same Splunk app. Any modification of rulesets within add-ons or other apps must be done manually by configuration file.
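The mismatch between case-insensitive preview and case-sensitive configuration can be caught before deployment. The following Python sketch models the two comparisons described above and lists observed sourcetypes that a preview would match but the generated configuration would not. The helper names and sourcetype values are hypothetical.

```python
from typing import List


def preview_matches(sourcetype: str, scoped: str) -> bool:
    """Models UI preview: comparison ignores case."""
    return sourcetype.casefold() == scoped.casefold()


def config_matches(sourcetype: str, scoped: str) -> bool:
    """Models the generated configuration: comparison is exact."""
    return sourcetype == scoped


def case_only_mismatches(observed: List[str], scoped: str) -> List[str]:
    """Sourcetypes the preview would match but the configuration would not."""
    return [st for st in observed
            if preview_matches(st, scoped) and not config_matches(st, scoped)]


observed = ["acme:firewall", "Acme:Firewall", "acme:proxy"]
print(case_only_mismatches(observed, "acme:firewall"))  # ['Acme:Firewall']
```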

Management techniques

As ingest actions functionality exists within the ecosystem of Splunk architecture, common best practices for managing configuration continue to apply. Rulesets are defined within the props and transforms constructs. If you use third-party configuration management tools, rulesets live in the same files those tools already manage. You might need to re-evaluate whether restarting the Splunk platform is required for changes to take effect.

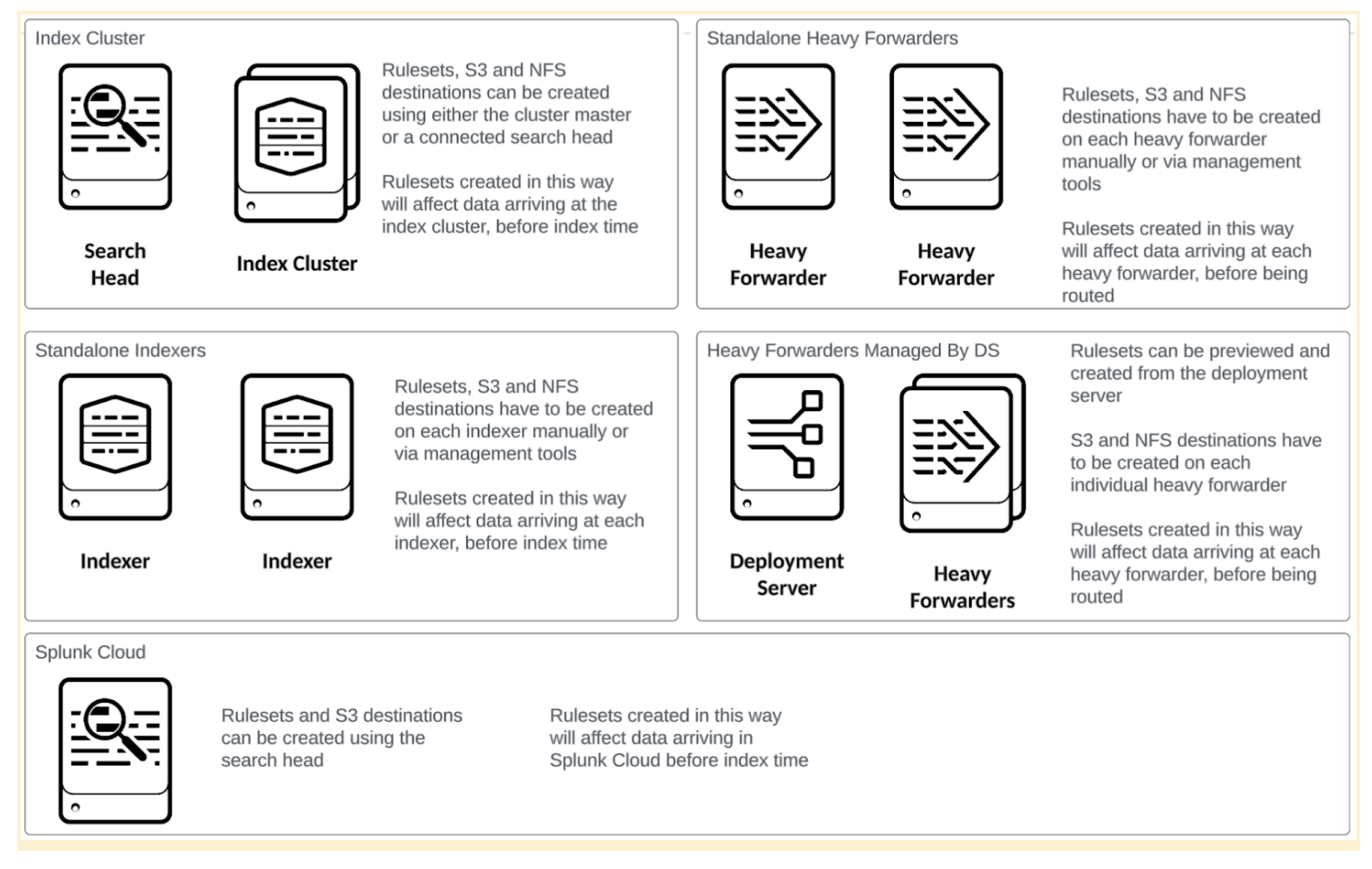

The following image shows the most common deployment topologies and the effect on management and use:

Dedicated deployment server

When you use the deployment server to manage rulesets on heavy forwarders, you must use dedicated deployment servers for those heavy forwarders rather than shared infrastructure. The Ingest Actions page on the deployment server automatically creates the IngestAction_AutoGenerated server class and assigns that class to the forwarders. This is not usually desirable on deployment servers that are servicing other server classes.

Decoupled ruleset authoring and management

It is possible to decouple ruleset authoring from ruleset deployment to some extent. The Ingest Actions UI can display the props and transforms configuration settings produced by a ruleset preview session. You can integrate those configurations into your existing knowledge object, configuration, and change management processes. In this case, a server is dedicated to authoring rulesets but is not used to manage them.
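When authoring and deployment are decoupled, a lightweight check in your change management pipeline can catch configuration that references transforms that were not copied over. The following Python sketch uses the standard `configparser` module to read props.conf and transforms.conf text and report RULESET- entries that name a transforms stanza that does not exist. It assumes RULESET- values are comma-separated transforms stanza names, as with TRANSFORMS- settings; the stanza names are hypothetical, and `configparser` does not handle every Splunk .conf feature (for example, backslash line continuation).

```python
import configparser
from typing import Dict, List


def load_conf(text: str) -> configparser.ConfigParser:
    # Only "=" separates keys from values; ":" often appears in stanza values.
    # interpolation=None leaves "%" in regexes alone; optionxform=str keeps key case.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None, strict=False)
    parser.optionxform = str
    parser.read_string(text)
    return parser


def unresolved_rulesets(props_text: str, transforms_text: str) -> Dict[str, List[str]]:
    """Map each props.conf stanza to the RULESET- targets missing from transforms.conf."""
    props = load_conf(props_text)
    transforms = load_conf(transforms_text)
    missing: Dict[str, List[str]] = {}
    for stanza in props.sections():
        for key, value in props.items(stanza):
            if not key.startswith("RULESET-"):
                continue
            # Assumption: the value is a comma-separated list of transforms stanzas.
            for name in (part.strip() for part in value.split(",")):
                if name and not transforms.has_section(name):
                    missing.setdefault(stanza, []).append(name)
    return missing


props_text = """
[foo]
RULESET-foo_rules = mask_ssn, drop_debug
"""
transforms_text = """
[drop_debug]
REGEX = DEBUG
DEST_KEY = queue
FORMAT = nullQueue
"""

print(unresolved_rulesets(props_text, transforms_text))  # {'foo': ['mask_ssn']}
```

Whether a given transforms setting is valid in a ruleset context is not checked here; the sketch only verifies that referenced stanzas exist.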

Evaluation of pillars

| # | Design principle / best practice | Rationale | Availability | Performance | Scalability | Security | Management |
|---|---|---|---|---|---|---|---|
| #1 | Meet your event-level business requirements by using rulesets | Filtering, masking, and routing are key elements in optimizing your data ingestion. Rulesets address those use cases directly. | X | X | | | |
| #2 | Ensure adequate resources when routing data to S3 to avoid blocking queues | Each S3 output thread has a peak throughput. Add output threads with rfs.provider.max_workers or scale horizontally as needed. | X | X | | | |
| #3 | Choose a management technique compatible with your deployment | Management options differ from topology to topology. | X | X | | | |
| #4 | Understand which sourcetypes your rulesets are targeting and which TAs are also modifying those sourcetypes | Ensure that your rulesets are applied and that downstream results are compatible with other knowledge objects. | X | X | | | |
| #5 | Use proper case in your sourcetypes when building rulesets | Good practice that reduces troubleshooting. | X | | | | |
| #6 | Minimize latency to S3 | The faster data reaches S3, the faster queues empty, which keeps overall event delivery latency low for all destinations. | X | X | | | |
| #7 | Use the UI to accelerate ruleset creation and understand the impact of changes | The interface offers many advantages over creating rulesets manually, including fewer errors and less troubleshooting. | X | X | | | |


This documentation applies to the following versions of Splunk® Validated Architectures: current
