Intermediate data routing using universal and heavy forwarders

Guidance in this document is specific to routing data using the Splunk platform and splunkd-based universal and heavy forwarders, in particular. There are other intermediate routing options, including both OpenTelemetry and Edge Processor, which are not covered here. This document outlines a variety of Splunk options for routing data that address both technical and business requirements.

Overall benefits

Using splunkd intermediate data routing offers the following overall benefits:

- Data routing variety provides flexibility and capability for users working with data that must be addressed or processed at arbitrary data boundaries.

- Specific configuration and sizing are tuned according to customer requirements. The details in this document aim to clarify these configuration choices.

- You can deploy intermediate routing in a highly available and load-balanced configuration, and use it as part of your enterprise data availability strategy.

- The routing strategies described in this document enable flexibility for reliably processing data at scale.

- Intermediate routing can improve security both at the event level and for data in transit.

The following is a list of use cases and enablers for splunkd intermediate data routing:

- Providing a central data egress point, for example, from on-premises to Splunk Cloud.

- Routing, forking, or cloning events to multiple Splunk deployments, S3, Syslog, and such systems or environments.

- Masking, transforming, enriching, or otherwise modifying data in motion.

- Reducing certificate or security complexity.

- Removing or reducing ingestion load on indexing tier.

- Separating configuration changes from the indexing tier to reduce the need for rolling restarts and other availability disruptions.

Routing architectures

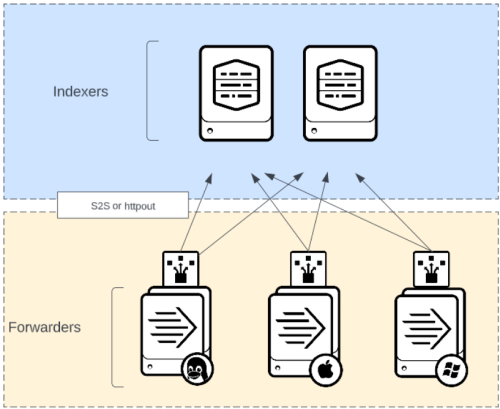

Direct forwarding

In the most direct scenario, each universal forwarder (UF) sends data directly to Splunk.

Benefits

- Minimal complexity.

- Indexing is optimized to handle many connections. Forwarders balance over time.

- Fastest time to index, with the lowest number of hops.

- Proxy compatible using httpout (S2S encapsulated in HTTP).

Limitations

- Direct network access to indexers may not be possible.

- Network bandwidth or capability may be limited at egress.

- Splunk Cloud compatibility limited to specific forwarder versions.

- Event level processing would generally be executed on the indexing tier.

- With the Splunk Cloud forwarder certificates and app, you cannot revoke keys for a single business unit whose ingest increases unexpectedly, so the additional load affects the indexers.

- There is a performance impact on clients if you enable event processing.

- PCI, PII, FedRAMP, and other sensitive environments often require IP allow lists, which may limit the number of UFs that can feasibly send data directly.

When the UF is the correct choice for data collection, the most straightforward approach is to send data directly to Splunk from the forwarder. When the indexing tier is customer-managed, network routing is often straightforward, and the S2S protocol is optimal. When the forwarder must send across the internet via an HTTP proxy, when deep packet inspection is required, or when hardware load balancing is preferred, you can configure the forwarder to send data in HEC format. If guaranteed delivery is a requirement, see About HTTP Event Collector Indexer Acknowledgement.
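As a rough illustration of the two transport choices described above, the following outputs.conf sketch shows a native S2S output and, commented out, an httpout alternative. The group name, host names, ports, and token placeholder are assumptions for illustration only. Verify the setting names against the outputs.conf specification for your forwarder version.

```ini
# outputs.conf on the universal forwarder (illustrative sketch only)

# Option A: native S2S to customer-managed indexers.
# "primary_indexers" and the host names are hypothetical.
[tcpout]
defaultGroup = primary_indexers

[tcpout:primary_indexers]
server = idx1.example.com:9997, idx2.example.com:9997

# Option B: S2S encapsulated in HTTP (httpout), for example through a
# hardware load balancer. Use only one of the two options on a forwarder.
# [httpout]
# httpEventCollectorToken = <your-HEC-token>
# uri = https://hec.example.com:8088
```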

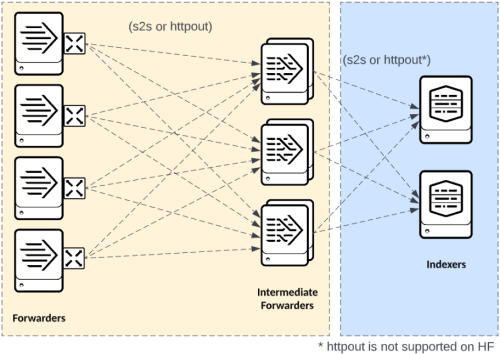

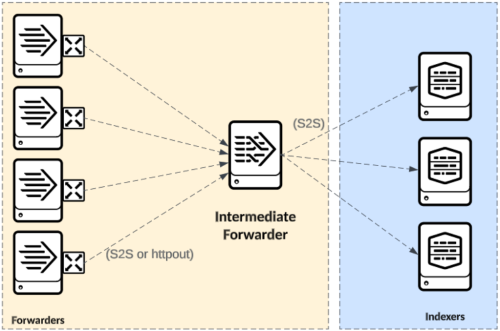

Intermediate forwarding

In some situations, intermediate forwarders (IFs) are needed for data forwarding. IFs receive log streams from Splunk forwarders (or other supported sources) at a data edge and forward to an indexing tier and other supported destinations. IFs require careful design to avoid potentially negative impacts on a Splunk environment. Most prominently, intermediate forwarding can concentrate connections from many forwarders to just a few Splunk Indexers. If configured with default settings, this can affect the data distribution across the indexing tier because only a subset of indexers will receive traffic at any given point in time. Also, IFs add an additional architecture tier to your deployment, which can complicate management and troubleshooting, and may add latency to your data ingest path.
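A minimal sketch of the receive-and-forward role described above might look like the following. It combines fragments from two files, inputs.conf and outputs.conf, on the intermediate forwarder. The port, group name, and host names are hypothetical, and the load balancing settings are left at their defaults here, which is exactly the case the paragraph above warns about.

```ini
# inputs.conf on the intermediate forwarder: listen for S2S traffic
# from upstream forwarders (port 9997 is an assumption).
[splunktcp://9997]
disabled = 0

# outputs.conf on the intermediate forwarder: forward to the indexing tier.
# "indexing_tier" and the host names are hypothetical.
[tcpout]
defaultGroup = indexing_tier

[tcpout:indexing_tier]
server = idx1.example.com:9997, idx2.example.com:9997, idx3.example.com:9997
```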

Conditions that favor using an intermediate tier

- Strict security policies that do not allow for direct connections between data sources and indexers, such as multi-zone networks or cloud-based indexers.

- Bandwidth constraints between endpoints and indexers that require a significant subset of events to be filtered.

- Routing, forking, or cloning events to multiple Splunk deployments, S3, Syslog, or other supported destinations.

- Masking, transforming, enriching, or otherwise modifying data in motion.

- Reducing certificate or security complexity.

- Removing or reducing ingestion load on indexing tier.

Limitations

- Requires dedicated and appropriately scaled infrastructure.

- Increases configuration and troubleshooting complexity.

- Can be a bottleneck if improperly sized or scaled. See Funnel with caution/indexer starvation below.

- Can add complexity to indexer and search implementation or optimization.

Heavy and universal intermediate forwarders

Intermediate heavy forwarder

Using heavy forwarders (HFs) as intermediates is the default implementation of a splunkd-based intermediate forwarding tier. However, you should consider both heavy and universal options to find the best solution for your needs.

HFs offer the most predictable and reliable intermediate forwarding, can be tuned to optimize performance even in lightweight scenarios, and offer in-place flexibility to adapt to more advanced event-processing use cases.

Benefits

- The HF parses the data, which can offload significant processing workload from indexers.

- Enables event-level processing by default using props and transforms.

- Supports HTTP Event Collector inputs and can act as HEC tier.

- Can reprocess cooked data using RULESETS.

- Supports more output destinations, including syslog and S3.

- Appropriate for useAck scenarios.

Limitations

- Likely requires content and knowledge management for the intermediate tier.

- The data payload is larger than with a UF. Take care not to unnecessarily increase event size.

- Using httpout rather than Splunk protocol can result in throughput bottlenecks and should be carefully considered before implementing. Consult with Splunk professional services or architects before using httpout with intermediate heavy forwarders.

Implementation considerations

| Detail | Consideration |
|---|---|
| Event processing | EVENT_BREAKER is not used on heavy forwarders. You can execute Ingest Actions and bespoke props/transforms-based processing on this tier. To fully understand how Splunk parses data to prevent downstream processing problems, see parsing in the Splexicon. |
| useAck | If S2S or HEC ack is enabled, you should enable it on both the inputs and outputs of the IF to decrease the risk of duplicate events and runaway queue growth. You should always review the splunkclouduf.spl TA that Splunk Cloud provides before use, as useAck is one of the default settings. |
| Volume-based load balancing | You should set proper output autoLBVolume and connectionTTL for Splunk Enterprise and Splunk Cloud outputs to ensure that the heavy forwarders smoothly distribute events to indexers. Carefully read the Asynchronous load balancing section below. |
| Time-based load balancing | Volume-based load balancing is advised over time-based balancing (forceTimebasedAutoLB). |
| TA/Knowledge | TAs that contain event-processing knowledge such as line-breaking, time-stamping, field extractions, and sourcetype renaming must be installed on heavy intermediate tiers. |
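To make the props/transforms-based processing mentioned in the table more concrete, here is a hedged sketch of masking and routing on an intermediate heavy forwarder. The sourcetype name, the regular expressions, and the "secondary" output group are hypothetical. The sketch assumes that a matching [tcpout:secondary] stanza exists in outputs.conf and that the data arrives unparsed from UFs.

```ini
# props.conf on the intermediate heavy forwarder
# "acme:app" is a hypothetical sourcetype.
[acme:app]
# Mask anything shaped like a US Social Security number before forwarding.
SEDCMD-mask_ssn = s/\d{3}-\d{2}-\d{4}/XXX-XX-XXXX/g
# Apply the routing transform defined below.
TRANSFORMS-route_audit = route_audit_to_secondary

# transforms.conf on the intermediate heavy forwarder
[route_audit_to_secondary]
# Events containing "audit" are routed to the "secondary" tcpout group.
REGEX = audit
DEST_KEY = _TCP_ROUTING
FORMAT = secondary
```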

Intermediate universal forwarder

Only use UFs as IFs in very basic forwarding scenarios.

While UFs may offer a somewhat lighter-weight footprint than heavy forwarders, attempting to use the UF in any advanced scenario can lead to duplicate or missing data and generally unpredictable results.

Benefits

- The UF does not parse data by default. It passes along compression savings and optimizations from the source.

- The UF default configuration consumes fewer system resources than a heavy forwarder. In simple routing scenarios, UFs may outperform HFs as IFs and result in less overall network traffic.

- Proxy compatible using httpout protocol (S2S encapsulated in HTTP).

Limitations

- Data scenarios requiring useAck or event-level processing should not use UF.

- Ensuring timezone consistency requires special consideration.

- S2S and httpout are mutually exclusive.

- Large events may be truncated or lost.

- Complex configurations can result in runaway queuing and lost data.

Implementation considerations

| Detail | Consideration |
|---|---|
| Event processing | A UF intermediate tier and any upstream UFs should have EVENT_BREAKER configured on all sourcetypes to safely split streams across multiple receivers. Other than EVENT_BREAKER, UFs should not be used to do any event processing. |
| useAck | You should not employ useAck with UF as intermediate, and if using as part of the data path for Splunk Cloud Victoria, you should review the provided splunkclouduf.spl TA, as useAck is enabled in the default settings. |
| Volume-based load balancing | Same as guidance for heavy forwarders. Note that you should not use volume-based load balancing on client UFs, only intermediate UFs. Using volume-based load balancing on a large number of clients can lead to port exhaustion on the indexing tier as well as internal log bloat. |
| Time-based load balancing | Volume-based load balancing is generally preferred over time-based balancing (forceTimebasedAutoLB). |
| TA/Knowledge | UFs should not have any event processing TAs installed. |
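The EVENT_BREAKER guidance in the table can be sketched as follows. The sourcetype name is hypothetical, and the pattern assumes one event per line. Multi-line sourcetypes need a pattern that matches their actual event boundary.

```ini
# props.conf on the intermediate UF and on upstream UFs
# "acme:app" is a hypothetical single-line sourcetype.
[acme:app]
EVENT_BREAKER_ENABLE = true
# The first capture group marks the boundary between events,
# so the forwarder only switches receivers between whole events.
EVENT_BREAKER = ([\r\n]+)
```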

General intermediate considerations

Intermediate size and scale

Intermediate forwarding tiers can scale both horizontally and vertically. For vertical scaling, you must tune the number of processing pipelines to utilize unused system resources. By increasing the available processing on a single node and increasing data throughput per node, you can achieve outcomes similar to horizontal scaling. However, reducing the number of physical nodes increases the amount of data affected by the loss of any given intermediate node.

Consider sizing and configuration needs for any intermediary forwarding tier to ensure its availability, provide sufficient processing capacity to handle all traffic, and support good event distribution across indexers.

The IF tier has the following requirements:

- A sufficient number of data processing pipelines.

- A properly tuned Splunk load balancing configuration.

- The general guideline suggests having twice as many IF processing pipelines as indexers in the indexing tier. If your indexers are behind a load balancer, as is the case with Splunk Cloud Victoria Experience, you do not need to follow this guideline. However, you do still need to provide sufficient processing pipelines and resources.

A processing pipeline does not equate to a physical IF server. Provided sufficient system resources (CPU cores, memory, and NIC bandwidth) are available, you can configure a single IF with multiple processing pipelines.

Follow asynchronous forwarding best practices and ensure (# of indexers * 2) pipelines between intermediate tier and indexers for even distribution of events across the indexing tier. You must tune parallelIngestionPipelines for the capabilities of the IF hardware. While 2 is a typical value, consult with Splunk professional services or architects to determine an appropriate value.
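As a worked illustration of the pipeline guideline, the following server.conf fragment uses hypothetical numbers. Whether a given host can sustain two or more pipelines depends on its CPU, memory, and network capacity, so treat this as a starting point rather than a recommendation.

```ini
# server.conf on each intermediate forwarder
# Worked example with hypothetical numbers:
#   8 indexers x 2                  = 16 pipelines needed across the IF tier
#   16 pipelines / 2 pipelines each = 8 intermediate forwarders
[general]
parallelIngestionPipelines = 2
```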

The Splunk Cloud Migration Assistant can provide interactive guidance for hardware sizing of IFs.

Funnel with caution/indexer starvation

Proper configuration and scaling of resources is critical when the number of forwarders is significantly greater than the number of IFs. The following risks are associated with having many forwarders send to a few intermediates:

- Large amounts of data from many endpoints flowing through a single or unoptimized pipe can exhaust system and network resources.

- Insufficient failover targets for the forwarders in the case of IF failures.

- Indexer hotspots and uneven data distribution due to fewer indexer connections.

When sizing an intermediate tier, understand the peak data volume and the number of sending forwarders. Use parallel ingestion pipelines and horizontal scaling to ensure adequate resources for your forwarders and to ensure even event distribution. See Asynchronous load balancing.

Condensing many inputs to a smaller number of IFs can lead to indexer starvation and uneven event distribution. The following is a strategy for mitigating these side-effects:

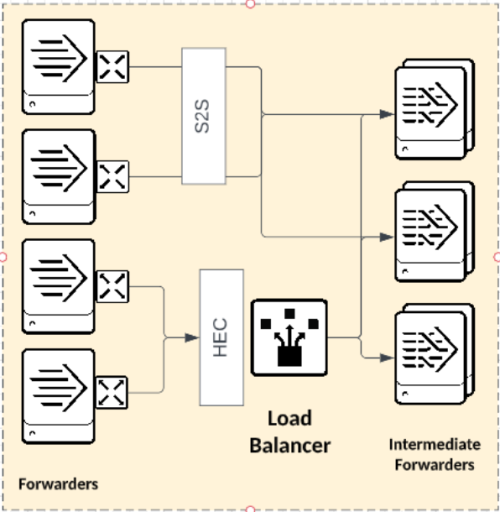

Asynchronous load balancing

When determining the proper scale of an intermediate tier, the ability to evenly distribute data streams to the indexers is a critical consideration for customer-managed indexer deployments. With a larger number of intermediate parallel processes writing events to indexers, the balance of data is spread across the indexers, which increases both search and index performance.

One approach to increasing intermediate indexing parallelism is to increase the number of actual forwarders. However, as outlined in the funneling section, vertical scaling may be preferred over horizontal. In the case where you desire fewer IFs, you must optimize S2S output such that the IFs are appropriately using new connections to new indexers.

The following are technical considerations for outputs.conf when ACK is enabled:

- forceTimebasedAutoLB = false

- autoLBVolume = <average_bps/ingest pipeline>

- maxQueueSize = (5 or more) x autoLBVolume

- connectionTTL = 300

The following are technical considerations for outputs.conf when ACK is disabled:

- forceTimebasedAutoLB = false

- autoLBVolume = <average_bps/ingest pipeline>

- maxQueueSize = (5 or more) x autoLBVolume

- connectionTTL = 75 (less than the receiver shutdown timeout, which is 90 by default)

You can use the following SPL to estimate an appropriate autoLBVolume value:

index=_internal sourcetype=splunkd component=metrics earliest=-24h group=per_index_thruput host=<one intermediate HWF> | stats sum(kb) as kbps | eval kbps=kbps/86400

In particular, using a connection TTL allows the output processor to move on to the next destination for sending data, which results in asynchronous load balancing.
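The following outputs.conf fragment sketches how the settings above might be filled in for the ACK-enabled case. The measurement, group name, and host names are hypothetical. The sketch assumes that autoLBVolume is expressed in bytes and that a bare integer for maxQueueSize would be read as an event count, so check both against the outputs.conf specification for your version.

```ini
# outputs.conf on an intermediate forwarder (ACK enabled)
# Hypothetical measurement: the search above returns 4096 KB/s for this IF,
# which runs 2 ingestion pipelines -> 2048 KB/s per pipeline.
[tcpout:indexing_tier]
server = idx1.example.com:9997, idx2.example.com:9997, idx3.example.com:9997
useACK = true
forceTimebasedAutoLB = false
# 2048 KB x 1024 = 2097152 bytes
autoLBVolume = 2097152
# 5 x autoLBVolume = 10485760 bytes, written with a unit suffix
maxQueueSize = 10MB
connectionTTL = 300
```

With ACK disabled, the same sketch would use connectionTTL = 75 and omit useACK.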

Caveats

- The above technical considerations are only applicable with version 9.0 or greater.

- When indexers are behind an NLB, as in the Splunk Cloud Victoria Experience, asynchronous load balancing is less effective, as the NLB distributes events to the indexers. In this condition, you should use the following settings (as of Splunk Enterprise 9.0.4):

  - dnsResolutionInterval = 3000000000

  - connectionsPerTarget = 2 * <approximate number of indexers>

  - heartbeatFrequency = 10

- You should only enable asynchronous load balancing on IFs and not on UFs deployed on clients.

- You should not enable asynchronous load balancing when there are a large number of IFs, as it can lead to indexer connection starvation. Please work with Splunk professional services to determine optimal configuration at very large scale.

For additional information, see Splunk Forwarders and Forced Time Based Load Balancing in the Splunk community forum.

Resiliency and queueing

As with all data routing infrastructure, you must understand and optimize failure thresholds and failure behavior to reduce the risk of data loss. There are two primary considerations when building a strategy for IF resiliency: infrastructure and queuing.

Infrastructure resiliency

Whenever possible, IFs should only function to receive, optionally process, and send data. They should not serve other functions like data collection nodes (DCNs), as DCNs often use checkpoints and other stateful configurations that require special consideration for persistence. When purely functioning in an intermediate capacity, these forwarders are stateless other than any persistent or memory-based queues. As such, with a proper deployment and configuration strategy, replacing a node is relatively easy. Observe the following recommendations and considerations:

- Use automation to manage the scale and deployment of the intermediate tier. There are many ways to approach this, with Kubernetes, Docker, and Ansible being some of the most common.

- You may use persistent input queues, provided that the $SPLUNK_HOME/var is retained.

- You need to reconfigure forwarders that send to the intermediate tier via S2S unless you use consistent intermediate naming.

- If the IFs are acting as HEC listeners, you may need to reconfigure the load balancer.

- The unexpected failure of an intermediate node without persistent queues configured results in the loss of whatever data was in memory at the time of the failure.

Queues

In the context of resilience, queues are used to manage data that has been received but not yet sent. You must configure queues to align with your threshold for data loss and compatibility with deployment and recovery techniques. Each upstream device sending data has its own failure mode, and you should make considerations for what happens when all available queues on the intermediate tier become full and upstream data is rejected.

The following diagram is a significantly simplified view of the queues involved in data routing. More details about all of the queues can be found in the Splunk documentation.

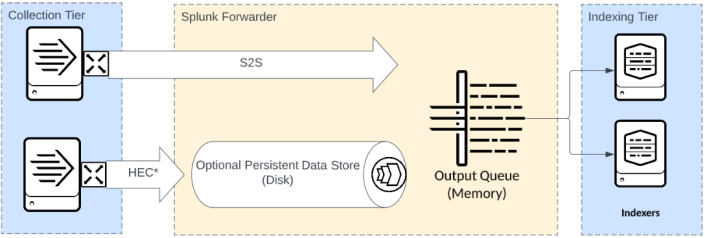

Output queues

Output queues are memory-based and are lost if a node unexpectedly fails. In general, you should optimize the size of this queue such that as little data is stored in memory as possible while providing an adequate buffer for normal processing delays. All data moving through a forwarder is processed through this queue.

Input queues

Persistent input queues are disk-based queues available for TCP, HEC, and several other input types. Persistent queues provide an additional buffer available for the forwarder to retain data prior to processing and sending. Using persistent queues allows the forwarder to continue to accept data during output downtime considerably longer than using the memory-based queue. Persistent queues begin to fill once the output queue is full.

Importantly, persistent queues are not available for S2S. The S2S protocol shifts the queuing burden as far upstream as possible.
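Because persistent queues do not apply to S2S inputs, the following sketch uses a raw TCP input instead. The port, sourcetype, and sizes are hypothetical and should be derived from your own peak volume and the outage duration you need to absorb.

```ini
# inputs.conf on the intermediate forwarder
# A raw TCP input (for example, syslog) with a disk-backed persistent queue.
[tcp://1514]
sourcetype = syslog
# In-memory input queue.
queueSize = 10MB
# On-disk queue that buffers data once the in-memory queues are full.
persistentQueueSize = 5GB
```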

Management

As with other splunkd-based forwarding infrastructure, you can use the Deployment Server to manage the configuration of any given intermediate tier, and it is the recommended default in most scenarios. There are specific validated architectures available for the Deployment Server.

Note that Ingest Actions require a dedicated Deployment Server for managing rulesets on intermediate heavy forwarders. If you use your intermediate tier for event processing with Ingest Actions, carefully review the guidance and options for Ingest Actions management.

You should configure IFs at scale identically, and their configuration should not drift. CI pipelines can work in lieu of Deployment Servers to manage intermediate forwarding infrastructure, and you can combine them with frameworks like Ansible and Kubernetes to further automate and operationalize deployments.

Splunk data routing and blocked outputs

A common cause of blocked queues is a configured output destination becoming unavailable. When a configured destination is unavailable, its output queue fills up. Then, if configured, the persistent input queue begins to fill until it is also full and the inputs stop accepting data.

When the IF has multiple output destinations configured and any of those destinations becomes unavailable, the forwarder blocks all queues, which can lead to data loss. You can configure the forwarder to drop new events on blocked outputs, which allows data to be delivered to other functioning outputs, but new data destined for the full queue is permanently lost.

For specific options, see the dropEventsOnQueueFull entry in the outputs.conf documentation.
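As a hedged sketch of that option, the following outputs.conf fragment clones data to two hypothetical output groups and lets the secondary group drop new events rather than block the primary one. The group names, host names, and the 30-second value are assumptions. Dropped events are not recoverable, so apply this only to destinations where loss is acceptable.

```ini
# outputs.conf on the intermediate forwarder
[tcpout]
# Listing two groups clones every event to both.
defaultGroup = indexing_tier, archive_site

[tcpout:indexing_tier]
server = idx1.example.com:9997, idx2.example.com:9997

[tcpout:archive_site]
server = archive.example.com:9997
# Once this group's queue has been full for 30 seconds, drop new events
# for this group instead of blocking. A value of -1 or 0 never drops.
dropEventsOnQueueFull = 30
```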

Using httpout for forwarding

Splunk's S2S protocol is the default and recommended protocol for sending data between forwarders and indexers. All Splunk instances support that protocol in both unparsed and parsed formats, and it is the most performant and supportable option. However, there are some cases when S2S is not possible, as HTTP proxies and/or load balancers are required in the data flow. In those cases, Splunk UFs support using S2S over HTTP (httpout) as an alternative to S2S for outbound communication. httpout traffic is standard HTTP and can be load-balanced or proxied like other HTTP traffic. It's important to note that httpout, while HTTP, contains an S2S payload and is only intended for use with the Splunk S2S HEC endpoint.

Evaluation of pillars

| # | Design principles / best practices | Pillars (Availability, Performance, Scalability, Security, Management) |
|---|---|---|
| #1 | Use HF to forward data by default. | X, X |
| #2 | Ensure an adequate number of forwarding pipelines to indexers. | X, X, X |
| #3 | Secure traffic behind SSL. | X |
| #4 | Use Splunk native LB for S2S, network or application load balancing for HEC. | X, X, X |
| #5 | Ensure configuration of volume-based load balancing for intermediate routing tiers, and optimize outputs for the destination. | X, X, X |
| #6 | Optimize persistent and output queues for data throughput. | X, X |
| #7 | Have a plan for what happens when queues fill. | X, X |
| #8 | Monitor queues to ensure proper sizing of intermediate tier. | X, X |
| #9 | Use distinct DS management tier if IFs are used for Ingest Actions. | X |
| #10 | Have a plan for adding, removing, or rebuilding individual IFs. | X, X |
| #11 | Do not mix DCN and IF workloads. | X, X, X |


This documentation applies to the following versions of Splunk® Validated Architectures: current
