Federated Search for Amazon S3

Federated search for Amazon S3 lets you search data in your Amazon S3 buckets from your Splunk Cloud Platform deployment.

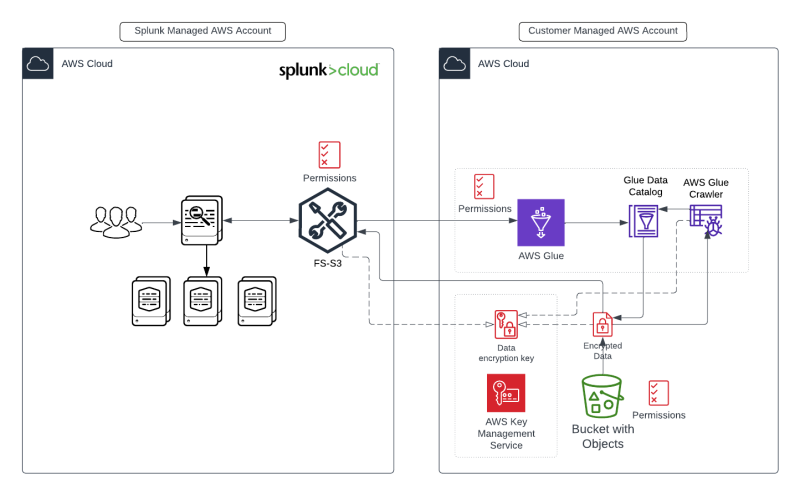

Architecture diagram

Benefits

Federated search for Amazon S3 lets you search data in your Amazon S3 buckets from your Splunk Cloud Platform deployment without needing to ingest or index it into the Splunk platform first.

With Federated Search for Amazon S3, you run aggregation searches over AWS Glue Data Catalog tables that represent the data in your Amazon S3 buckets.

See About Federated Search for Amazon S3 in the Splunk Cloud Platform Federated Search manual for detailed documentation.

Customers can leverage this feature to gain access to cloud datasets in situations when:

- Data ingestion is not an option

- Data has been generated independently of Splunk and stored in Amazon S3

- Data has been routed to Amazon S3 through Splunk Ingest Actions or Splunk Edge Processor

To access S3 data, customers use a new SPL command, sdselect, which follows a SQL-like syntax. See sdselect command overview in the Splunk Cloud Platform Federated Search manual.

Common use cases for this feature are low-frequency, ad hoc searches of non-mission-critical data, where the data has historically resided in Amazon S3 or where Splunk data ingestion is not desirable because of time, cost, or volume constraints. Our recommendation is to use traditional Splunk indexing for mission-critical use cases and complement it with Federated Search for Amazon S3 for infrequent searches, data enrichment, data exploration, and break-glass scenarios.

Use Cases

The following are scenarios that benefit from using Federated Search for Amazon S3:

- Security Investigations Over Historical Data: Security users can run infrequent investigation searches over data in Amazon S3, which may contain multiple years' worth of logs. This use case is an improvement over a process that would typically require ad hoc data rehydration, which is lengthy and expensive and increases the customer's time to detection. Data can be searched with Federated Search for Amazon S3 and summarized with the collect command to reduce the consumption of Data Scan Units (DSUs).

- Statistical Analysis Over Historical Data: Splunk users can run infrequent statistical analysis over data in Amazon S3 that may contain multiple years' worth of logs to obtain key insights based on properties of their logs. Without this feature, customers would typically use external tools to run statistics or aggregate searches.

- Data Enrichment: Splunk users can enrich their existing data with additional context and information by creating lookups from data in S3. When trying to understand more about your data, manually looking up additional information in Amazon S3 takes time. You can enrich Splunk indexed events and automatically add information from Amazon S3, accelerating the overall process.

- Data Exploration: Customers would typically use external tools to explore their Amazon S3 datasets, or take a conservative approach with new datasets to minimize Splunk costs. Splunk admins can now explore new datasets stored in Amazon S3 to determine the usefulness of the data. After this analysis, customers can plan to ingest business-critical subsets of the data from Amazon S3 into Splunk. Customers can also cross-reference their existing Splunk events with new datasets stored in Amazon S3, either through lookups or by joining datasets on common fields. For example, WAF or VPC flow logs can be checked against IP addresses with known issues.

- Archive Scale Management: Customers need to keep a historical record of data for compliance purposes. They want to achieve that using Amazon S3 while still leveraging Splunk search in case investigations are needed. Customers can use Dynamic Data Active Archive (DDAA) to manage their Splunk Cloud Platform data, but they are conscious of the costs and delays of restoring that data if they ever need to search it.

- Manage Infrequently Accessed Data: Customers try to store as much data as they can in case they need to search it due to regulation, compliance or an investigation. However, this often results in storage of redundant data, or datasets that are not frequently searched.

Limitations

To use this feature, a customer must meet all of the following requirements:

- Existing or new Splunk Cloud Platform AWS region stack.

- Splunk Cloud Platform stack on release 9.0.2305.200 or higher. Check the Splunk Cloud Platform version before attempting to activate this feature. Splunk Cloud Platform AWS region customers on either Classic Experience or Victoria Experience can use Federated Search for Amazon S3.

- This feature is not available for Splunk Enterprise (on-premises or BYOL), Splunk Cloud Platform GCP regions, or FedRAMP High and DoD IL5 stacks.

- Purchase the Splunk "Data Scan Units" license (Splunk Federated Search for 3rd party cloud object stores). Purchase order processing of this license will trigger the activation of the feature on the corresponding Splunk Cloud Platform stack. Activating this feature does not require restarting the Splunk Cloud Platform stack.

- An AWS Glue Data Catalog database and tables in the same AWS region as the Splunk Cloud Platform stack. The Amazon S3 bucket can be in any available region. See the section, Available regions and region differences, in Splunk Cloud Platform Service Description.

- Federated Search for Amazon S3 is not intended for high frequency and real-time searches. Customers should not replace their existing search scenarios involving high frequency searches or real-time latency requirements. Customers attempting to use this feature for real-time searches will perceive slower performance and reduced search functionality when compared with indexed Splunk searches on ingested data. Customers attempting to use this feature for high frequency searches will likely incur higher costs than natively ingesting and searching in the Splunk platform.

Requirements

To use this feature, customers need to create an AWS Glue Data Catalog database and tables in the same AWS region as their Splunk Cloud Platform stack. Note that Amazon S3 buckets can be in regions outside of the Splunk Cloud Platform region. An AWS Glue Data Catalog database with tables is required for each bucket that Federated Search for Amazon S3 needs to read.

To create an AWS Glue table, Splunk or AWS administrators can use the AWS Glue Crawler to generate a catalog. Because crawler results can be unreliable in practice for complex structures such as JSON, it is recommended to create the definition manually in the Glue console, with the CreateTable operation in the AWS Glue API, or through AWS Athena DDL (in addition to other methods supported by AWS). Many well-known source and service formats have predefined Glue definitions available either from AWS or in the public domain. Splunk recommends using Athena DDL to create Glue table definitions in production.
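As a rough illustration of the CreateTable route, the following Python sketch builds a TableInput for newline-delimited JSON and passes it to the Glue client in boto3. The table name, bucket path, columns, and partition keys are hypothetical, and the choice of the OpenX JSON SerDe is an assumption that suits newline-delimited JSON; adjust it to your data. The create_table helper needs boto3 and AWS credentials and is not called here.

```python
import json


def build_table_input(name, location, columns, partition_keys):
    """Build a TableInput dict for the AWS Glue CreateTable operation.

    Assumes newline-delimited JSON objects stored under `location`.
    `columns` and `partition_keys` are lists of (name, type) pairs.
    """
    return {
        "Name": name,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": {"classification": "json"},
        # Partition keys are declared separately from the data columns.
        "PartitionKeys": [{"Name": n, "Type": t} for n, t in partition_keys],
        "StorageDescriptor": {
            "Columns": [{"Name": n, "Type": t} for n, t in columns],
            "Location": location,
            "InputFormat": "org.apache.hadoop.mapred.TextInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat",
            "SerdeInfo": {
                "SerializationLibrary": "org.openx.data.jsonserde.JsonSerDe"
            },
        },
    }


def create_table(database, table_input, region):
    """Register the table in the Glue Data Catalog (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without it

    glue = boto3.client("glue", region_name=region)
    glue.create_table(DatabaseName=database, TableInput=table_input)


# Hypothetical names, for illustration only.
table_input = build_table_input(
    name="waf_logs",
    location="s3://example-log-bucket/waf/",
    columns=[("timestamp", "bigint"), ("action", "string"), ("clientip", "string")],
    partition_keys=[("year", "string"), ("month", "string"), ("day", "string")],
)
print(json.dumps(table_input, indent=2))
```

Keeping the definition in a plain dictionary makes it easy to review or version before it is registered. The region passed to create_table should match the region of the Splunk Cloud Platform stack.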

During the Glue table definition process, it is important to configure table partitioning. S3 buckets, especially those populated by AWS services, very likely already have prefixes (partitions) defined by account, service name, and year/month/day. These partitions should still be included in the AWS Glue table definition so that searches can be "pruned" to restrict the amount of data scanned per search, which improves both performance and cost. Date partitioning also allows the Splunk search time selector to narrow a search to specific partitions. This is critical for buckets that are larger than 10 TB.
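To make the effect of date partitioning concrete, the following Python sketch lists the year/month/day prefixes that a time-bounded search would need to touch. It only illustrates the idea of pruning and is not how Splunk or AWS Glue implements it; the bucket path and account ID are hypothetical.

```python
from datetime import date, timedelta


def partition_prefixes(base_prefix, start, end):
    """Return the year/month/day prefixes covering start..end, inclusive."""
    if end < start:
        raise ValueError("end must not be earlier than start")
    prefixes = []
    current = start
    while current <= end:
        # One prefix per day, in the YYYY/MM/DD layout described above.
        prefixes.append(f"{base_prefix}{current:%Y/%m/%d}/")
        current += timedelta(days=1)
    return prefixes


# Hypothetical bucket layout, for illustration only.
prefixes = partition_prefixes(
    "s3://example-log-bucket/AWSLogs/123456789012/vpcflowlogs/",
    date(2023, 12, 30),
    date(2024, 1, 2),
)
for prefix in prefixes:
    print(prefix)
```

A four-day search window maps to four daily prefixes, so objects under every other date prefix never need to be scanned.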

Any existing Amazon S3 bucket with data can be searched if the data is in CSV, TSV, JSON (as newline-delimited JSON or .ndjson), or text files. Open-source formats such as ORC, Avro, and Parquet are also supported. Federated Search for Amazon S3 also supports compressed data in Snappy, Zlib, LZO, and GZIP formats (only for archives containing a single object), as well as reading data encrypted with SSE-KMS. You can improve performance and reduce your costs by compressing, partitioning, and using columnar formats.

Federated Search for Amazon S3 can be used with data processed by a Splunk Edge Processor or Splunk Ingest Actions, routed to AWS S3 buckets.

Setting up and configuring this feature requires permissions to modify access policies for Amazon S3 buckets and for AWS Glue Data Catalog tables and databases. The required settings are defined and provided by the Federated Search for Amazon S3 setup UI.

Security

Federated Search for Amazon S3 works with data encrypted in the customer's AWS account using AWS SSE-KMS (Key Management Service) and SSE-S3. Encryption and decryption happen in the customer's account, so Splunk does not need any permissions; it only needs to know which key the data is encrypted with.

When creating federated indexes, use role-based access control (RBAC) to control which Splunk Cloud Platform users can access the data stored in the S3 buckets.

Splunk uses AWS cross-account authentication with application-level access to the customer's S3 buckets.

Licensing

Customers need to acquire additional licensing to enable Federated Search for Amazon S3. The license is based on "Data Scan Units" (DSUs), which measure the total amount of data that all searches using Federated Search for Amazon S3 have scanned in the customer's Amazon S3 buckets. This licensing SKU is independent of Splunk Virtual Compute (SVC) and any ingest-based licenses that customers may have acquired. Data volume is based on "size on disk", so compressing objects in the S3 bucket can provide savings. Note that DSUs operate on a "use it or lose it" model over the course of a year.

Using |loadjob, |collect, |outputlookup, and summary indexes with sdselect search results can help you avoid re-running "expensive" searches.

Performance considerations

Customers should not expect to leverage Federated Search for Amazon S3 as a solution to improve their current search performance in Splunk.

While it is impossible to generalize the expected search performance, a good rule of thumb is that every 1 TB of data scanned can take approximately 100 seconds to search, process and retrieve results. Splunk users should also expect every search to take 3 seconds at the minimum. Other aspects that impact search performance are the following:

- Amount of data searched in Amazon S3.

- Number of individual files in Amazon S3 inside a bucket or location.

- Region separation between Amazon S3 bucket and Splunk stack.

- Search query complexity.

- Data compression.
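The rule of thumb above can be turned into a back-of-the-envelope estimate. The following Python sketch applies only the two figures stated in the text (about 100 seconds per TB scanned and a 3-second minimum) and ignores the other factors listed, so treat the result as an order-of-magnitude guide. The function and constant names are illustrative.

```python
SECONDS_PER_TB = 100.0    # rule of thumb from the text
MIN_SEARCH_SECONDS = 3.0  # minimum search time from the text


def estimate_search_seconds(scanned_tb):
    """Estimate search duration from the amount of data scanned, in TB."""
    if scanned_tb < 0:
        raise ValueError("scanned_tb must not be negative")
    return max(MIN_SEARCH_SECONDS, scanned_tb * SECONDS_PER_TB)


for tb in (0.01, 2.5, 10.0):
    print(f"{tb} TB scanned: about {estimate_search_seconds(tb):.0f} seconds")
```

Under these assumptions, a search scanning 2.5 TB takes roughly 250 seconds, and a search at the 10 TB ceiling takes roughly 1,000 seconds.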

These are a few things customers can use to improve their search performance:

- Searches should apply filtering in the sdselect command as much as possible.

- Splunk/AWS Admins should use AWS Glue partitioning, and configure partition pruning in Federated Search for Amazon S3. This can significantly reduce the volume of data being scanned by a search. See Work with partitioned data in AWS Glue on the AWS website.

- Splunk/AWS Admins should configure time field filtering in their federated indexes that target Amazon S3 buckets. This may require processing existing data in Amazon S3, but in many cases (such as AWS logs) the buckets already use a YYYY/MM/DD prefix format that can be used for time filters in the search.

- Note that if you use partition projection, the "range" or "list" (enum) of partition values in the Glue definition restricts the search to those values. For example, if 2023 is the only value in the projection for year, an all-time search only ever searches the "2023" partition, even though the bucket may contain other years. The same applies to lists, such as regions for AWS logs: if only one region is specified in the projection enum, Splunk searches only that region.

- If Amazon S3 buckets contain small files (generally less than 128 MB), Splunk Admins should merge them into larger files. The ideal file size depends on the search scenarios and expected filtering, but objects larger than 128 MB are considered optimal.

- While there isn't a specific limit on the amount of data in Amazon S3 buckets, Federated Search for Amazon S3 is currently optimized for a maximum of 10 TB of data scanned per search. To search buckets or datasets that are larger than this, customers need to leverage AWS Glue partitioning or subdivide their searches within the same bucket. A configurable limit on data scanned per search is set to 10 TB (see System guardrails).

- Use |loadjob for searches that use the same base datasets.

- Use |collect to store the results for further analysis.
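The partition projection caveat in the list above is easy to overlook, so here is a minimal Python sketch of the behavior it describes: only partition values present in the projection are reachable, whatever the search time range asks for. This is a conceptual model, not Splunk or AWS Glue code, and the years and function name are hypothetical.

```python
def searched_partitions(projected_values, requested_values):
    """Return the requested partition values that the projection allows."""
    projected = set(projected_values)
    return [value for value in requested_values if value in projected]


# Hypothetical bucket holding four years of data, with only 2023 projected.
years_in_bucket = ["2021", "2022", "2023", "2024"]
projected_years = ["2023"]

# An all-time search asks for every year, but only projected years are searched.
print(searched_partitions(projected_years, years_in_bucket))
```

If searches return less data than expected, checking the projection range or enum values in the Glue table definition is a reasonable first step.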

System guardrails

The following mechanisms are in place to avoid unintentional consumption of Data Scan Units:

- Every search in Federated Search for Amazon S3 requires a LIMIT clause that determines the number of events returned. If no LIMIT clause is explicitly provided, the system enforces an implicit default of 100,000 events. This default can be configured by Splunk customer support in the limits.conf file, and overridden in a search by setting a LIMIT value in the query.

- Each search has an independent maximum data scan size of 10 TB, measured as the total size of all S3 objects on disk rather than their expanded size. This limit can be increased by contacting Splunk customer support. An attempt to scan more than 10 TB results in the search failing with a corresponding error message; no scan cost is applied.
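The two guardrails can be modeled in a few lines. The following Python sketch mirrors the behavior described above (a default LIMIT of 100,000 events and a 10 TB scan ceiling that fails the search); it is a conceptual model, not the Splunk implementation, and all names in it are hypothetical.

```python
DEFAULT_LIMIT = 100_000  # implicit LIMIT described in the text
MAX_SCAN_TB = 10.0       # default per-search scan ceiling described in the text


class ScanLimitExceeded(Exception):
    """Raised when a search would scan more data than the ceiling allows."""


def apply_guardrails(estimated_scan_tb, limit=None, max_scan_tb=MAX_SCAN_TB):
    """Return the effective LIMIT, or raise if the scan exceeds the ceiling."""
    if estimated_scan_tb > max_scan_tb:
        # The search fails before any data is scanned, so no scan cost applies.
        raise ScanLimitExceeded(
            f"search would scan {estimated_scan_tb} TB; limit is {max_scan_tb} TB"
        )
    # An explicit LIMIT in the query overrides the implicit default.
    return DEFAULT_LIMIT if limit is None else limit


print(apply_guardrails(2.0))
print(apply_guardrails(2.0, limit=500))
try:
    apply_guardrails(12.0)
except ScanLimitExceeded as err:
    print(f"search rejected: {err}")
```

Setting an explicit LIMIT in each query keeps result sizes predictable, while the scan ceiling protects against accidentally searching an unpartitioned bucket.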


This documentation applies to the following versions of Splunk® Validated Architectures: current
