SmartStore for Splunk platform

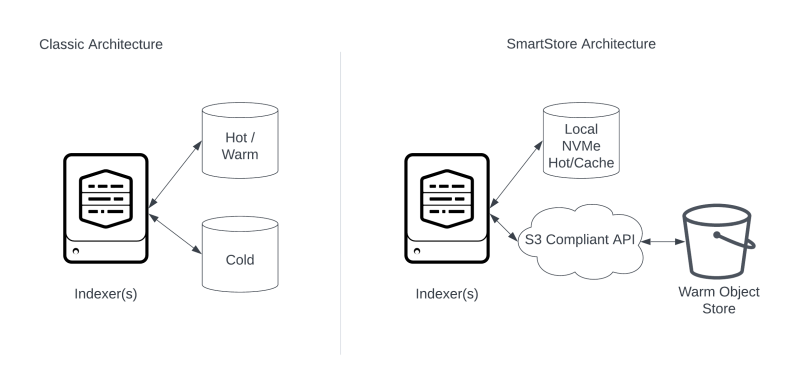

The following diagram shows a classic architecture compared to SmartStore. In the case of SmartStore, one or more indexers connect to a single S3-compliant object store.

Overview

The Splunk platform is a distributed, map-reduce platform that stores data on disk and then searches that data on disk. As a result, careful consideration of the underlying storage is the most critical step in designing a scalable, stable Splunk architecture.

Splunk SmartStore Architecture was created primarily to decouple compute and storage on the indexing tier, enabling a more elastic indexing tier deployment. Elasticity allows you to add compute as needed, for example to satisfy higher search workload demands, without also having to add storage. Similarly, adding storage to extend your data retention interval does not incur additional infrastructure cost for compute.

In the case of Classic Splunk Architecture deployments, all searchable buckets are either in the "Hot / Warm" volume or in the "Cold" volume. These volumes reside on mounted local or network attached file systems. Splunk also stores copies of buckets on these volumes to meet the desired replication factor.

SmartStore is a significantly different architecture from Classic. A local, mounted file system, which we refer to as "Hot / Cache", holds hot buckets and caches any other buckets. Hot buckets, which typically have a life of one day or less, behave exactly like hot buckets in Classic Architecture, and Splunk replicates them to other indexers to meet the desired replication factor.

To roll from hot to warm, a bucket is uploaded to a remote object store. This object store, such as AWS S3 or Azure Blob Storage, is not a mounted file system and is accessible only through HTTP API calls such as PUT and GET. Once the object store acknowledges receipt of the bucket, it becomes responsible for the resiliency of that bucket, so any copies currently on indexers are eligible for eviction. Non-primary copies of warm buckets are evicted first, leaving a searchable warm primary copy in the cache until the space is required and that copy is evicted as well.

Splunk can search only localized (cached) buckets and cannot search the object store directly. When a search requires buckets that are not in the cache, the cache manager must first download those buckets from the object store and write them to the local cache.
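The bucket lifecycle described above can be illustrated with a toy Python model of a single indexer's cache. This is a minimal sketch under simplifying assumptions, not Splunk code: the `CacheSketch` and `Bucket` names are hypothetical, the "object store" is just a dictionary, and eviction uses only two rules taken from the text (non-primary warm copies first, then the least recently used primary). The real cache manager considers more factors.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass
class Bucket:
    bucket_id: str
    size_mb: int
    primary: bool = True  # True if this indexer holds the primary (searchable) copy


class CacheSketch:
    """Toy model of one indexer's SmartStore cache; hypothetical, not Splunk code."""

    def __init__(self, capacity_mb: int):
        self.capacity_mb = capacity_mb
        self.hot = {}              # bucket_id -> Bucket; hot buckets are never evicted
        self.warm = OrderedDict()  # bucket_id -> Bucket; least recently used first
        self.remote = {}           # bucket_id -> size_mb; stands in for the object store
        self.downloads = 0

    def used_mb(self) -> int:
        return sum(b.size_mb for b in self.hot.values()) + sum(
            b.size_mb for b in self.warm.values()
        )

    def add_hot(self, bucket: Bucket) -> None:
        self._make_room(bucket.size_mb)
        self.hot[bucket.bucket_id] = bucket

    def roll_to_warm(self, bucket_id: str) -> None:
        # Rolling to warm means uploading to the object store. Once the upload
        # is acknowledged, the local copy is only an evictable cached copy.
        bucket = self.hot.pop(bucket_id)
        self.remote[bucket_id] = bucket.size_mb
        self.warm[bucket_id] = bucket

    def search(self, bucket_id: str) -> None:
        # Only local buckets are searchable, so a cache miss triggers a download.
        if bucket_id in self.hot:
            return
        if bucket_id in self.warm:
            self.warm.move_to_end(bucket_id)  # mark as most recently used
            return
        size_mb = self.remote[bucket_id]
        self._make_room(size_mb)
        self.downloads += 1
        self.warm[bucket_id] = Bucket(bucket_id, size_mb, primary=True)

    def _make_room(self, needed_mb: int) -> None:
        while self.used_mb() + needed_mb > self.capacity_mb:
            # Evict non-primary warm copies first, then the least recently used primary.
            victim = next((k for k, b in self.warm.items() if not b.primary), None)
            if victim is None:
                if not self.warm:
                    raise RuntimeError("cache is full of hot buckets")
                victim = next(iter(self.warm))
            del self.warm[victim]


cache = CacheSketch(capacity_mb=2200)
cache.add_hot(Bucket("b1", 750))                  # this indexer is primary for b1
cache.roll_to_warm("b1")
cache.add_hot(Bucket("b2", 750, primary=False))  # replicated copy; primary lives elsewhere
cache.roll_to_warm("b2")
cache.add_hot(Bucket("b3", 750))  # cache full: the non-primary copy b2 is evicted first
cache.roll_to_warm("b3")
cache.add_hot(Bucket("b4", 750))  # full again: the least recently used primary, b1, is evicted
cache.search("b1")                # miss: b3 is evicted and b1 is downloaded again
```

After this sequence, only `b1` (re-downloaded) remains in the warm cache and `b4` is still hot, which mirrors the point that an evicted bucket costs a download the next time a search needs it.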

Benefits

The most tangible benefit is reduced storage cost in a cloud computing environment. AWS S3 storage is far more economical than Amazon Elastic Block Store (EBS). In a classic, non-SmartStore architecture on AWS, EBS typically accounts for the bulk of the infrastructure cost. SmartStore changes that equation, drastically reducing overall infrastructure cost. The savings grow with the length of the searchable retention period.
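Why the savings grow with retention can be sketched with two linear cost functions. This is a simplified model under stated assumptions: classic keeps one copy per replication factor on block storage, SmartStore keeps one copy in the object store (which handles resiliency), and the local cache is treated as instance storage and left out. The prices are placeholders in arbitrary units, not real AWS prices, and the function names are hypothetical.

```python
def classic_monthly_cost(daily_gb: float, retention_days: int,
                         replication_factor: int, block_price: float) -> float:
    # Classic: every replicated copy of every retained bucket sits on block storage.
    return daily_gb * retention_days * replication_factor * block_price


def smartstore_monthly_cost(daily_gb: float, retention_days: int,
                            object_price: float) -> float:
    # SmartStore: one retained copy in the object store, which handles resiliency.
    # The local cache is assumed to be instance storage and is not counted.
    return daily_gb * retention_days * object_price


# Placeholder prices in arbitrary units per GB-month, NOT real AWS list prices.
BLOCK_PRICE, OBJECT_PRICE = 5.0, 1.0

for days in (30, 90, 365):
    classic = classic_monthly_cost(100, days, 2, BLOCK_PRICE)
    smart = smartstore_monthly_cost(100, days, OBJECT_PRICE)
    print(f"{days:>3} days: classic={classic:,.0f} smartstore={smart:,.0f} "
          f"savings={classic - smart:,.0f}")
```

Because both costs scale linearly with retention but at different rates, the absolute difference widens as retention lengthens, whatever the actual prices are.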

SmartStore was originally written for Splunk Cloud Platform running on AWS, before the feature was made available for Splunk Enterprise on-premises or BYOL deployments. The best SmartStore architectures mimic SmartStore on AWS: indexers with local NVMe or SAS SSDs, dedicated bandwidth per indexer as the architecture scales out, and an object store that can deliver uncached buckets to the indexers quickly enough to meet search expectations and avoid search time-outs.

When a search in a SmartStore deployment needs uncached buckets, those buckets must first be downloaded to their assigned indexers and written to the cache before they can be read like other local buckets. By default, each indexer runs 8 parallel download processes that issue GET requests to the S3 bucket. For example, in a deployment with 30 indexers, a historical search of uncached data generates 240 (8 x 30) simultaneous download requests to S3 and sustains them for the life of the search, until all required uncached data is written to the cache.
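The concurrency arithmetic above is simple, but it is worth tabulating because the load on the object store scales with the number of indexers. A minimal sketch: the function name is hypothetical, and the default of 8 comes from the text. We believe this default corresponds to the `max_concurrent_downloads` setting in the `[cachemanager]` stanza of server.conf, but verify that against the server.conf spec for your version.

```python
def concurrent_get_requests(indexer_count: int, downloads_per_indexer: int = 8) -> int:
    """Simultaneous GET requests the object store must serve during a historical
    search of uncached data, with every indexer downloading in parallel."""
    return indexer_count * downloads_per_indexer


for indexers in (3, 10, 30, 60):
    print(indexers, "indexers ->", concurrent_get_requests(indexers), "concurrent GETs")
```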

When deploying SmartStore, the documented index setting for maxDataSize is "auto", which results in buckets of about 750 MB. The recommended AWS instance types are i3en, i3, or i4i, which can sustain writes of over 1000 MB/s. Each indexer connects to S3 over 10 Gigabit Ethernet, which allows downloads at over 1000 MB/s per indexer. A single GET request from S3 can sustain about 90 MB/s; multiplied by 8 parallel downloads, this yields about 720 MB/s per indexer to download and write uncached buckets. Downloading one 750 MB bucket therefore takes about 1 second (750 MB / 720 MB/s), and because indexers download in parallel, the additional search time is roughly 1 second per bucket divided by the number of indexers. This is a reasonably balanced architecture that optimizes both performance and infrastructure cost.
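The estimate above can be sketched as a small calculator. The per-indexer localization rate is taken as the slowest of three limits from the text: aggregate GET throughput, the network link, and cache write speed. This is a minimal sketch under assumptions: the function names are hypothetical, uncached buckets are assumed to be spread evenly across indexers, and protocol overhead is ignored.

```python
import math


def per_indexer_download_mb_s(per_get_mb_s: float = 90.0,
                              parallel_downloads: int = 8,
                              nic_gbit_s: float = 10.0,
                              cache_write_mb_s: float = 1000.0) -> float:
    """Effective rate at which one indexer can localize buckets: the slowest of
    aggregate GET throughput, the network link, and local cache writes."""
    nic_mb_s = nic_gbit_s * 1000 / 8  # 10 Gbit/s is about 1250 MB/s before overhead
    return min(per_get_mb_s * parallel_downloads, nic_mb_s, cache_write_mb_s)


def added_search_seconds(uncached_buckets: int, indexer_count: int,
                         bucket_mb: float = 750.0, **rates) -> float:
    """Extra time a search spends downloading, assuming uncached buckets are
    spread evenly across indexers and every indexer downloads in parallel."""
    buckets_per_indexer = math.ceil(uncached_buckets / indexer_count)
    return buckets_per_indexer * bucket_mb / per_indexer_download_mb_s(**rates)


print(per_indexer_download_mb_s())    # 720.0, limited by 8 x 90 MB/s GETs
print(added_search_seconds(1, 1))     # about 1.04 s for one 750 MB bucket
print(added_search_seconds(300, 30))  # about 10.4 s: 10 buckets per indexer
```

Raising `parallel_downloads` alone does not help indefinitely: with 16 downloads, the 1000 MB/s cache write limit becomes the bottleneck.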

Limitations

While SmartStore is a great fit for most common Splunk usage patterns, there are situations where it might not be appropriate. A full list of restrictions is available in the SmartStore documentation. See Current restrictions on SmartStore use in the Splunk Enterprise Managing Indexers and Clusters of Indexers manual.

Significant All Time Searches

Splunk software can't read data from S3 directly, so all buckets must be localized to the cache before they are searchable. Searches that require a significant number of buckets, such as all-time or wildcard searches, can undermine the intended behavior of the cache: more recent, heavily used data is evicted to make room for the data from the all-time search. This can slow searches of recent data until the cache is repopulated. Options to mitigate this behavior include the lruk algorithm for the cache manager, the srchTimeWin attribute for a user role, a rule in Workload Management, or storing the data that requires all-time searches in a much smaller summary index or in a summary index outside of SmartStore. However, if all-time searches make up a significant portion of the workload, the deployment might not be a good fit for SmartStore.
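The cache-flushing effect, and why a frequency-aware policy helps, can be demonstrated with a small simulation comparing plain LRU to textbook LRU-K with K=2. This is an illustration of the general technique only; we assume Splunk's lruk option is in the LRU-K family, but its actual implementation details may differ. The function names and the workload are hypothetical.

```python
from collections import OrderedDict, defaultdict


def lru_hits(capacity, accesses):
    """Cache hits under plain LRU eviction."""
    cache, hits = OrderedDict(), 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # most recently used goes to the end
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict least recently used
            cache[key] = True
    return hits


def lru2_hits(capacity, accesses):
    """Cache hits under textbook LRU-K with K=2: evict the entry whose
    second-most-recent access is oldest; entries referenced only once are
    treated as infinitely old, with ties broken by plain LRU."""
    history = defaultdict(list)  # key -> times of its last two accesses
    cache, hits = set(), 0

    def backward_2(key):
        times = history[key]
        return (times[-2] if len(times) >= 2 else float("-inf"), times[-1])

    for now, key in enumerate(accesses):
        if key in cache:
            hits += 1
        else:
            if len(cache) >= capacity:
                cache.remove(min(cache, key=backward_2))
            cache.add(key)
        history[key] = (history[key] + [now])[-2:]
    return hits


# Four recent buckets searched repeatedly, then one all-time scan of 20 old
# buckets, then the recent buckets are searched again.
recent = ["r0", "r1", "r2", "r3"]
workload = recent * 3 + [f"o{i}" for i in range(20)] + recent
print("LRU hits:  ", lru_hits(6, workload))   # 8: the scan flushed the recent buckets
print("LRU-2 hits:", lru2_hits(6, workload))  # 12: the recent buckets survived the scan
```

Buckets touched only once by the scan never build up a second reference, so LRU-2 evicts them before the repeatedly searched recent buckets.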

Stringent Hardware Requirements

For on-premises deployments, existing server and storage hardware is very unlikely to meet the performance requirements. Meeting them almost always requires a significant investment in new hardware, so SmartStore is best adopted during a hardware refresh. This challenge applies primarily to on-premises deployments.

Data Integrity Control

SmartStore-enabled indexes are not compatible with the data integrity control feature available for traditional indexes, as documented in About SmartStore in the Splunk Enterprise Managing Indexers and Clusters of Indexers manual. One way to provide similar functionality for a SmartStore-enabled index is to enable versioning in AWS S3 and to capture S3 access logs. With versioning enabled, overwriting an S3 object that belongs to a Splunk bucket creates a new version, and deleting it adds a delete marker; in both cases the previous versions are preserved. Customers using this method can maintain data integrity. S3 versioning is fully supported by Splunk Enterprise and is enabled by default for all Splunk Cloud Platform deployments.
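Enabling S3 versioning and server access logging can be scripted with boto3. The sketch below uses the real boto3 S3 client methods `put_bucket_versioning` and `put_bucket_logging`; the helper name and all bucket names and prefixes are hypothetical placeholders. The client is passed in so the helper can be exercised without AWS credentials. Note that the log target bucket must also grant the S3 logging service permission to write to it, which this sketch does not configure.

```python
def enable_versioning_and_access_logs(s3_client, bucket: str,
                                      log_bucket: str, log_prefix: str) -> None:
    """Turn on Object Versioning and server access logging for the S3 bucket
    that backs a SmartStore volume.

    `s3_client` is expected to behave like boto3.client("s3"), for example:
        enable_versioning_and_access_logs(
            boto3.client("s3"), "example-smartstore", "example-s3-logs", "smartstore/")
    """
    # Preserve prior versions of objects when they are overwritten or deleted.
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    # Record requests made against the bucket in a separate log bucket.
    s3_client.put_bucket_logging(
        Bucket=bucket,
        BucketLoggingStatus={
            "LoggingEnabled": {"TargetBucket": log_bucket, "TargetPrefix": log_prefix}
        },
    )
```

Keeping the access logs in a separate bucket means an actor who can modify the SmartStore bucket does not automatically control the audit trail of those modifications.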

Restoring Frozen Buckets

Frozen buckets cannot be restored to SmartStore enabled indexes. They must be restored to a classic architecture index, which can be on the same indexers hosting SmartStore indexes, or on a completely separate cluster.

Topology Options

Cloud Service Provider (CSP), Customer Managed, Single-Site

This deployment option is colloquially known as "Bring Your Own License" (BYOL) combined with the provider name, for example AWS BYOL, GCP BYOL, or Azure BYOL. It is by far the best platform for SmartStore and is relatively easy to implement and scale successfully. The three main cloud service providers satisfy the networking and object store throughput requirements by default. The only key architectural considerations are choosing the right instance types and sizing the deployment and cache properly for a given workload.

Cloud Service Provider (CSP), Customer Managed, Multi-Site

Multi-site clusters using SmartStore on AWS are only supported across different Availability Zones (AZs) within the same region. Clusters that span multiple regions are not supported.

On-Premises, Single-Site

Deploying SmartStore on-premises is much more challenging than with a cloud service provider. Because of the throughput requirements, it's rarely possible to reuse existing hardware. This topology consists of a local object store from a Splunk-validated vendor and a cluster of indexers with local SSDs. Using on-premises indexers with an object store hosted in the cloud, such as AWS S3, is not supported.

On-Premises, Multi-Site

This deployment choice requires an object store at each site. The object store is responsible for near-synchronous, bi-directional replication between the two sites.

Requirements

Additional requirements are detailed in the SmartStore documentation. See SmartStore system requirements in the Splunk Enterprise Managing Indexers and Clusters of Indexers manual.

On-Premises

- An S3-compliant object store, certified by Splunk, deployed in the same data center as the indexers. Contact your sales team to discuss which vendors and platforms are validated.

- Indexers with local (direct attached) SAS or NVMe Solid State Drives for the cache, capable of writing at 700 MB/s per indexer.

- A network connection to the object store of 10 gigabits per second per indexer.

- The ability of the object store to deliver read throughput of 700 MB/s per indexer concurrently.

- A modular object store that scales out as more indexers are added. A ratio of indexers to object store servers should be established to maintain the desired 700 MB/s per indexer of read throughput.

- If the deployment is multi-site, an object store is required in each data center, and the object store is responsible for near-synchronous, bi-directional replication. AWS Cross-Region Replication (CRR) is not supported at this time.

- SmartStore expects exactly one object store endpoint per index. This can be defined globally for all indexes or per index. To manage the single endpoint in a multi-site deployment, a Global Server Load Balancer (GSLB) is required. The GSLB provides a single endpoint, and manages the resolution of that endpoint to the local object store for a given site.
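The per-indexer minimums in the list above can be turned into a simple acceptance check for measured hardware. This is a minimal sketch: the `IndexerMeasurement` record and `unmet_requirements` function are hypothetical, and how you obtain the measurements (for example, a concurrent read test against the object store) is left to your own benchmarking tools.

```python
from dataclasses import dataclass

# Per-indexer minimums from the on-premises requirements list.
MIN_CACHE_WRITE_MB_S = 700
MIN_LINK_GBIT_S = 10
MIN_OBJECT_STORE_READ_MB_S = 700


@dataclass
class IndexerMeasurement:
    """Hypothetical record of values measured for one indexer."""
    cache_write_mb_s: float        # sustained write rate of the local SSD cache
    link_gbit_s: float             # network link to the object store
    object_store_read_mb_s: float  # read rate observed while all indexers download at once


def unmet_requirements(m: IndexerMeasurement) -> list:
    """Return a human-readable list of requirements this indexer misses."""
    problems = []
    if m.cache_write_mb_s < MIN_CACHE_WRITE_MB_S:
        problems.append(f"cache writes {m.cache_write_mb_s} MB/s < {MIN_CACHE_WRITE_MB_S} MB/s")
    if m.link_gbit_s < MIN_LINK_GBIT_S:
        problems.append(f"network link {m.link_gbit_s} Gbit/s < {MIN_LINK_GBIT_S} Gbit/s")
    if m.object_store_read_mb_s < MIN_OBJECT_STORE_READ_MB_S:
        problems.append(
            f"object store reads {m.object_store_read_mb_s} MB/s < {MIN_OBJECT_STORE_READ_MB_S} MB/s"
        )
    return problems


print(unmet_requirements(IndexerMeasurement(1000, 10, 720)))  # []
print(unmet_requirements(IndexerMeasurement(450, 25, 800)))   # cache write problem only
```

Measuring object store reads while all indexers download at once matters because the requirement is 700 MB/s per indexer concurrently, not for a single indexer in isolation.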

Customer Managed Platform (CMP) AWS

- i3, i3en, or i4i instance types. These instance types offer local NVMe storage.

- Multisite clusters can span multiple Availability Zones, but all sites must be within the same region. Cross-region replication for S3 is not currently supported.

Customer Managed Platform (CMP) Azure

- VM types with local NVMe storage, such as the Lsv3 series. The Standard_L32s_v3, with 32 vCPUs (16 full CPU cores), is the smallest VM type that meets the minimum indexer specification in the Reference hardware section of the Splunk Enterprise Capacity Planning Manual.

Customer Managed Platform (CMP) GCP

- N1 high-mem or N2 high-mem (n2-highmem-*) instance types with local SSD. GCP allows you to define the number of local disks; currently, the maximum is 24, providing 9 TB of cache per indexer.

Single-Site

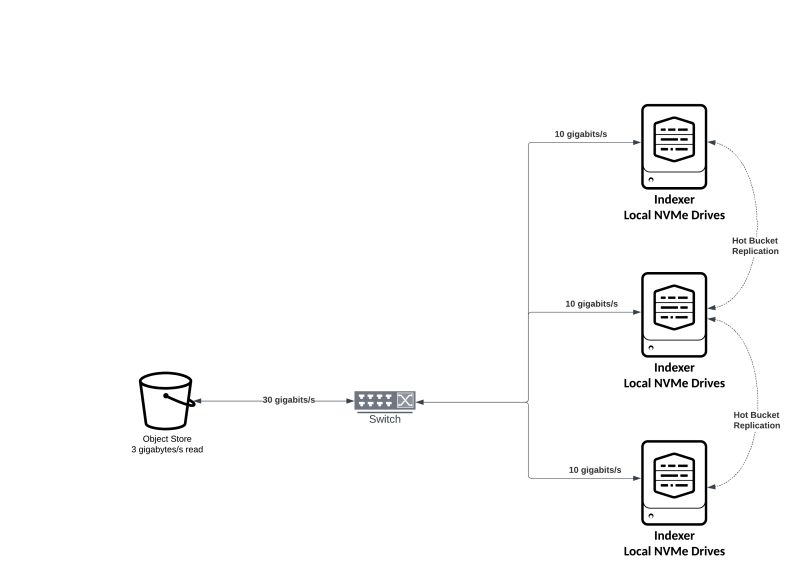

The diagram below shows a simple single-site deployment with three indexers.

- Each indexer has local NVMe or SAS solid state drives (SSD).

- Required bandwidth per indexer is 10 gigabits per second. Note: TCP window and OS tuning will be required.

- The object store should support an aggregate read throughput of 30 gigabits per second (10 gigabits per second for each of the three indexers).

- Any switch infrastructure between the object store and the indexers should also support 30 gigabits per second of throughput. This requirement includes all switches in the data path, potentially including rack switches, end-of-row switches, and aggregation switches.
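Checking every hop in the data path against the aggregate requirement can be sketched as follows. The function names are hypothetical, and the hop capacities in the example are made-up values chosen only to show how a single undersized aggregation switch would cap the whole deployment.

```python
def aggregate_gbit_s(indexer_count: int, per_indexer_gbit_s: float = 10.0) -> float:
    """Throughput every hop between the indexers and the object store must carry."""
    return indexer_count * per_indexer_gbit_s


def bottlenecks(indexer_count: int, hop_capacity_gbit_s: dict,
                per_indexer_gbit_s: float = 10.0) -> list:
    """Names of hops whose capacity is below the aggregate requirement."""
    needed = aggregate_gbit_s(indexer_count, per_indexer_gbit_s)
    return [hop for hop, capacity in hop_capacity_gbit_s.items() if capacity < needed]


# Hypothetical capacities for the three-indexer example.
path = {
    "object store read": 40,
    "rack switch uplink": 40,
    "end-of-row switch": 40,
    "aggregation switch": 20,
}
print(aggregate_gbit_s(3))   # 30.0
print(bottlenecks(3, path))  # ['aggregation switch']
```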

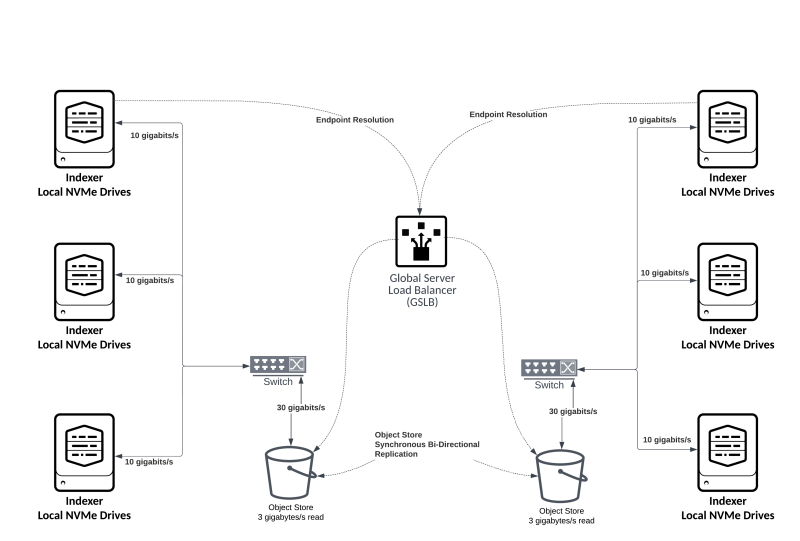

Multi-Site

The diagram below depicts a multi-site architecture, consisting of two sites.

- The throughput requirement of 10 gigabits per second per indexer must be maintained between the indexers and the object store at each site.

- The Global Server Load Balancer (GSLB) is responsible for name resolution from each indexer to the object store such that there is only one endpoint presented to the indexers. Behind the scenes, the GSLB should point indexers to the object store at their local site. If there is an outage of the object store at one site, the GSLB should point indexers to the surviving site.

- While search heads are not shown in this diagram, they must be configured with no site affinity so that they can receive results from indexers at either their local or remote site.

- Site to site, bi-directional replication is handled by the object store vendor, not the Splunk platform.
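The GSLB behavior described above (one name for all indexers, resolved to the local site's object store, with failover to a surviving site) can be sketched as a resolution function. This only models the decision logic under our assumptions; a real GSLB implements it in DNS with its own health checks. The function name, site names, and addresses (taken from documentation-only IP ranges) are hypothetical.

```python
def resolve_object_store(indexer_site: str, site_endpoints: dict, healthy_sites: set) -> str:
    """Return the address a GSLB-like resolver would hand an indexer for the
    single object store endpoint configured in SmartStore."""
    if indexer_site in healthy_sites:
        return site_endpoints[indexer_site]  # normal case: stay on the local site
    for site in sorted(site_endpoints):      # local store down: fail over to a survivor
        if site in healthy_sites:
            return site_endpoints[site]
    raise RuntimeError("no object store site is available")


# Hypothetical sites and documentation-range addresses.
endpoints = {"site1": "192.0.2.10", "site2": "198.51.100.10"}
print(resolve_object_store("site1", endpoints, {"site1", "site2"}))  # 192.0.2.10
print(resolve_object_store("site1", endpoints, {"site2"}))           # 198.51.100.10
```

Failover works without any Splunk configuration change only because the object store vendor keeps both sites' stores replicated, so the surviving site already holds the same buckets.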

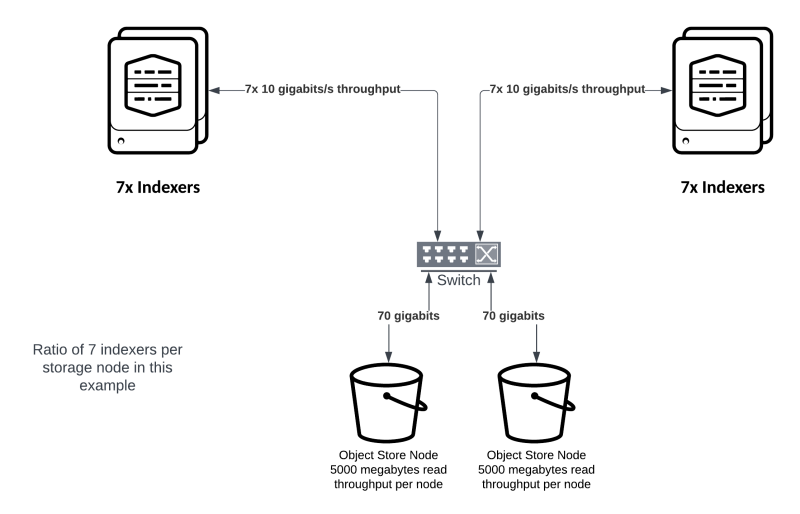

Modular Object Store Scaling

The diagram below illustrates the desired modular architecture of an object store.

- Select an object store composed of smaller, modular components.

- Establish the read throughput of a single node of the object store.

- Based on the read throughput requirement of 700 megabytes per second per indexer, establish a ratio of indexers to object store nodes. In this example, each object store node supports 5000 megabytes per second. Dividing 5000 by 700 yields about 7.1, which rounds down to 7 indexers per object store node.

- As more indexers are added, continue to add object store nodes to support the ratio defined in the previous bullet point.
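The ratio calculation and the resulting node count can be sketched as follows. The function names are hypothetical; the 700 MB/s per indexer and 5000 MB/s per node figures come from the example above. Rounding the ratio down guarantees that every indexer keeps its full read share, and rounding the node count up adds a node as soon as the ratio is exceeded.

```python
import math


def indexers_per_node(node_read_mb_s: int, per_indexer_mb_s: int = 700) -> int:
    # Round down so every indexer still gets its full per-indexer read throughput.
    return node_read_mb_s // per_indexer_mb_s


def object_store_nodes_needed(indexer_count: int, node_read_mb_s: int,
                              per_indexer_mb_s: int = 700) -> int:
    ratio = indexers_per_node(node_read_mb_s, per_indexer_mb_s)
    if ratio < 1:
        raise ValueError("a single node cannot serve even one indexer")
    return math.ceil(indexer_count / ratio)


print(indexers_per_node(5000))  # 7
for indexers in (7, 8, 30):
    print(indexers, "indexers ->", object_store_nodes_needed(indexers, 5000), "nodes")
# 7 -> 1, 8 -> 2, 30 -> 5
```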

Resources

To use the SmartStore indexer architecture, you need an S3 API-compliant object store. This is readily available in AWS, but might not be available in other cloud and on-premises environments. This object store must provide availability equal to or better than that of your indexing tier.

For details on how to implement SmartStore, see SmartStore system requirements in the Splunk Enterprise Managing Indexers and Clusters of Indexers manual. For a list of current SmartStore restrictions, see Current restrictions on SmartStore use.

Important: If you intend to deploy SmartStore on-premises with an S3-compliant object store in a multi-site deployment spanning data centers, make sure that you understand the specific requirements for the object store documented in the On premises hosted, across data centers section of the "Deploy multisite indexer clusters with SmartStore" topic in the Splunk Enterprise Managing Indexers and Clusters of Indexers manual.


This documentation applies to the following versions of Splunk® Validated Architectures: current
