Splunk Validated Architecture for Splunk AI and ML

Splunk provides Artificial Intelligence (AI) and Machine Learning (ML) capabilities throughout the entire product portfolio. For example, Splunk has developed and embedded pre-built AI/ML features directly within premium products, such as Splunk Enterprise Security (ES) and Splunk IT Service Intelligence (ITSI). These features have been designed to apply AI/ML to specific, high-demand use cases. In addition, Splunk's flexible data platform allows users to build custom AI/ML solutions to achieve outcomes and address bespoke AI/ML requirements, beyond what is provided out-of-the-box.

What is in scope for this Splunk Validated Architecture?

In this Splunk Validated Architecture (SVA), we focus exclusively on the architectural considerations needed to leverage Splunk's flexible data platform to build custom AI/ML solutions. Specifically, this SVA covers the technical fundamentals of Splunk's AI and ML capabilities across Splunk Enterprise and Splunk Cloud, including Splunk's Search Processing Language (SPL), the Splunk Machine Learning Toolkit (MLTK), and the Splunk App for Data Science and Deep Learning (DSDL).

Architectures described in this document include details on infrastructure, communication requirements, and example use cases. Each architecture also lists its advantages and limitations and, where appropriate, includes sample code and SPL along with links to relevant Splunk documentation.

What is out of scope for this Splunk Validated Architecture?

This SVA does not cover architectural considerations needed to leverage pre-built AI/ML features embedded within premium products such as Splunk Enterprise Security (ES) or Splunk IT Service Intelligence (ITSI). Additionally, this SVA does not cover architectural considerations for Splunk's generative AI products, such as Splunk AI Assistant for SPL.

For more information on deployment and architectural considerations for these pre-built features, see the appropriate Splunk product installation and deployment documentation.

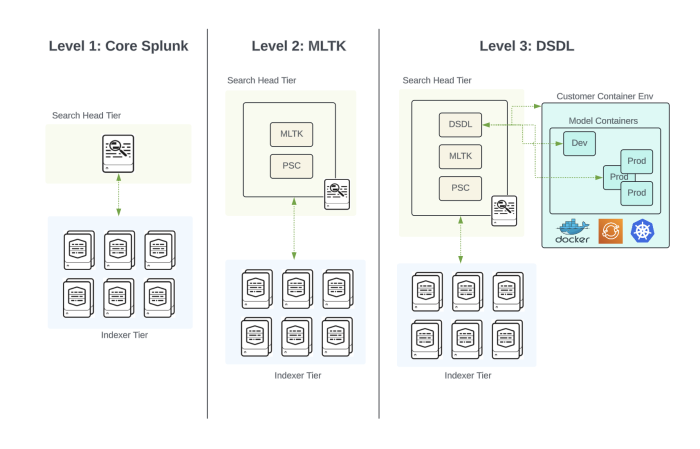

AI and ML in Splunk: A 3-Tiered Capability Model

While Splunk products embed AI/ML capabilities as use cases (e.g. domain generation algorithm (DGA) detection), users can also build and configure their own AI/ML use cases on their own data. Splunk Cloud and Splunk Enterprise offer 3 user-configurable AI/ML development tiers, summarized in the following table and diagram:

| Tier | Description |
|---|---|
| Tier 1: Getting Started with Core Splunk | The first tier uses Core Splunk and some embedded commands that provide statistical and base functions for ML, as well as predefined ML commands for clustering, anomaly detection, and prediction and trending. |
| Tier 2: Using MLTK to Advance ML Use Cases | The second tier uses the Splunk Machine Learning Toolkit (MLTK), which requires installing the Python for Scientific Computing (PSC) add-on. This tier provides access to traditional ML algorithms through examples and guided workflows (Showcase), the ability to manage multiple models (Experiments), and support for ONNX models (Models) that can be trained on external or third-party systems and then imported into MLTK. |
| Tier 3: Custom Use Cases with DSDL | The third tier uses the Splunk App for Data Science and Deep Learning (DSDL), which requires installing PSC and MLTK, and leverages a separate container environment that connects to DSDL. In this container environment, users can develop new AI/ML models with any open source libraries within JupyterLab, and also manage production container models. DSDL allows leveraging of multi-CPU and multi-GPU computing for advanced model building (e.g. deep learning). |

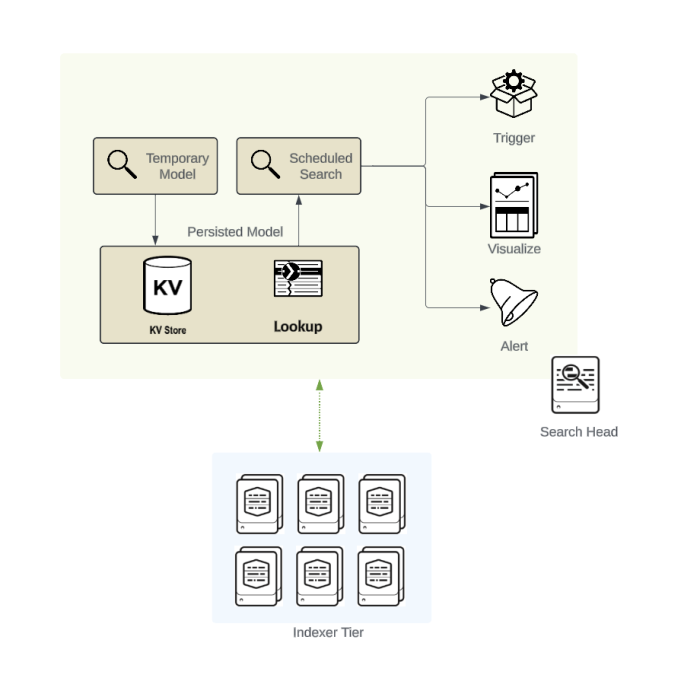

Tier 1: Getting Started with Core Splunk

Core Splunk functions provide versatile, scalable analytics capabilities, without requiring additional application installation (e.g. MLTK). While MLTK offers additional tooling for applying ML, implementing certain ML workflows within Splunk is possible without using MLTK.

This section outlines the benefits, use cases, requirements, and limitations of applying ML using Splunk's core functionalities, without using MLTK.

Benefits

Benefits of core Splunk functions include data management, and data preprocessing and feature engineering.

Data Management

Splunk's core capabilities for data management are scalable and flexible, using optimized data stores such as time-series indexes (tsidx), metrics, key value stores, and lookups. These features facilitate efficient data retrieval and storage, providing a solid foundation for ML-based workflows.

| Data management benefit | Description |
|---|---|
| Data transformation | SPL enables complex data transformation tasks, preparing raw data for analysis. This includes "schema on read," which allows for more flexibility and agility in data processing, as new data can be ingested into Splunk without requiring a pre-defined schema. |
| Normalization | Data from diverse sources can be normalized to a consistent format, making it easier to analyze and compare. |
| Data cleaning | SPL supports data cleaning operations, removing noise and correcting inconsistencies to improve data quality. |
| Enrichment | Data can be enriched by adding contextual information, enhancing the data's value for subsequent analysis. |
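
As a minimal sketch of the cleaning, normalization, and enrichment steps in the table above, the following search could be a starting point. The index name `web`, the `access_combined` sourcetype, and the `clientip`, `status`, and `uri_path` fields are assumptions for illustration; substitute your own data.

```spl
index=web sourcetype=access_combined
| where isnotnull(clientip) AND isnotnull(status)
| eval uri_path=lower(uri_path)
| eval status_class=case(status>=500, "server_error", status>=400, "client_error", true(), "ok")
| iplocation clientip
| table _time, clientip, Country, uri_path, status, status_class
```

In this sketch, `where` drops incomplete events (cleaning), the two `eval` commands normalize the URI path and derive a consistent status category (normalization), and `iplocation` adds geographic fields such as `Country` based on the IP address (enrichment). A lookup could serve the same enrichment purpose for organization-specific context.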

Data Preprocessing and Feature Engineering

Data preprocessing and feature engineering are crucial for ML model creation, and SPL provides scalable tools to handle a variety of tasks.

| Data preprocessing task | Description |
|---|---|
| Feature extraction | Identifies and extracts relevant features from raw data for use in ML models. |
| Scaling | Uses bin, discretize, and makecontinuous commands to create bucketed or continuous variables. |
| Handling missing values | Manages incomplete data to prevent model bias, errors, and inaccuracies. Uses replace, rare, fillnull, and filldown commands. |
| Handling outlier values | Removes outlying numerical values. |
| Sampling | Allows users to reduce data volumes either by picking a custom value or by preset values (by 1/10, 1/100, up to 1/100,000) and is available in the Splunk search bar user interface (UI). |
| Filtering data | Reduces data volumes for model training with fields, where, and dedup commands. |
| Combining datasets | Uses append, format, and join commands. |
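
Several of the preprocessing tasks above can be chained in a single search. The following is a sketch only: the index, sourcetype, and field names are assumed for illustration, and the filter on `status` is an arbitrary example of reducing the training data.

```spl
index=web sourcetype=access_combined
| fields _time, clientip, status, bytes
| fillnull value=0 bytes
| where status<500
| dedup _time clientip
| bin _time span=5m
| stats count AS requests, sum(bytes) AS total_bytes, dc(status) AS distinct_statuses BY _time, clientip
```

Here `fields` and `where` reduce the data volume, `fillnull` handles missing values, `dedup` removes duplicate events, and `bin` buckets timestamps into 5-minute intervals so that `stats` can produce one feature row per client and time bucket.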

Use Cases

Splunk's search and reporting capabilities can support various ML-related use cases including statistics, correlation, finding anomalies, and prediction and trending.

| Use case | Description |
|---|---|
| Statistics | Splunk provides a range of statistical commands that are essential for data analysis and model preparation. See Reports commands. |
| Correlation | Correlation analysis helps in understanding relationships between different data fields, which is critical for feature selection and hypothesis testing. See Correlation commands. |
| Finding Anomalies | Detecting anomalies is a key ML application in Splunk and is used for identifying unusual patterns or outliers in data. |
| Prediction and Trending | Predictive analytics and trend analysis can be performed using SPL commands designed for forecasting. |
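
To make the anomaly and forecasting use cases more concrete, here are two short sketches. The index, sourcetype, and the 3-standard-deviation threshold are assumptions chosen for illustration, not recommendations from this SVA. The first search flags hourly request counts that deviate strongly from the overall mean:

```spl
index=web sourcetype=access_combined
| timechart span=1h count AS requests
| eventstats avg(requests) AS mean_requests, stdev(requests) AS stdev_requests
| eval is_anomaly=if(abs(requests - mean_requests) > 3 * stdev_requests, 1, 0)
```

The second search uses the `predict` command to forecast the same hourly series 24 time steps into the future:

```spl
index=web sourcetype=access_combined
| timechart span=1h count AS requests
| predict requests AS forecast future_timespan=24
```

Both searches first aggregate events with `timechart`, so the anomaly logic and the forecast operate on a compact time series rather than on raw events.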

Requirements

To implement ML within Splunk without using MLTK, the following requirements must be met.

A Splunk Enterprise or Splunk Cloud instance, including:

- Single Server Deployment (S1)

- Distributed Non-Clustered Deployment (D1 / D11)

- Distributed Clustered Deployment - Single Site (C1 / C11)

- Distributed Clustered Deployment + SHC - Single Site (C3 / C13)

- Distributed Clustered Deployment - Multi-Site (M2 / M12)

- Distributed Clustered Deployment + SHC - Multi-Site (M3 / M13)

- Distributed Clustered Deployment + SHC - Multi-Site (M4 / M14)

Knowledge of SPL:

- Proficiency in SPL is essential for performing data transformations, feature engineering, and running analytical searches.

- Knowledge of whether commands scale across indexers or whether the search head constrains computations provides guidance on the efficiency and scalability of functions used.

Limitations

Applying ML within Splunk without MLTK has the following limitations:

| Limitation | Description |
|---|---|
| Specified Commands Set in SPL | Splunk's SPL commands have predefined parameters and functionalities. Users are limited to using these available options to control command behavior without access to the underlying source code or implementation details. This limitation might restrict the customization and flexibility required for certain advanced ML tasks. Scaling and other preprocessing algorithms are also available in MLTK, which augment the SPL commands. See Preprocessing (Prepare Data) in the MLTK User Guide. |
| Performance Considerations | Parallel execution: Certain streaming search commands can execute in parallel at the indexer level, enhancing performance. See Types of commands. Search head execution: Most reporting search commands are executed on the search head, which can become a performance bottleneck, especially in cases where search heads are heavily used. Resource constraints: The runtime cost of individual commands might lead to out-of-memory issues or prolonged execution times, especially with large datasets or complex searches. It is important to monitor searches and to test them on reduced data using commands such as head or tail, or Splunk's native search sampling. Starting with a reduced dataset limits computation time and helps confirm that the model being built performs well in terms of both computation time and model performance metrics. |
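
As a sketch of the reduced-dataset approach described under resource constraints, a search can be capped with `head` while it is being developed. The index, sourcetype, field names, and the limit of 10,000 events are assumptions for illustration.

```spl
index=web sourcetype=access_combined earliest=-7d
| fields _time, clientip, status, bytes
| head 10000
| stats count AS requests, avg(bytes) AS avg_bytes BY status
```

Note that `head` returns the first results in search order, which for event searches is typically the most recent events, so this is not a random sample. Where a representative sample matters, the event sampling option in the search bar UI is likely the better fit.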

In conclusion, while applying ML within Splunk without MLTK has some limitations, it provides a range of benefits and use cases that can be effectively leveraged using SPL and Splunk's core functionalities. Understanding the requirements and constraints can help design efficient and scalable ML workflows within the Splunk environment.

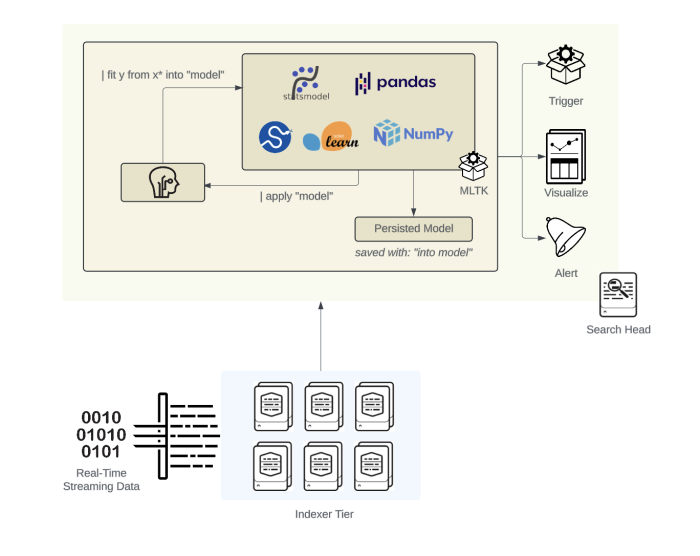

Tier 2: Using MLTK to Advance ML Use Cases

MLTK extends Splunk's powerful data analytics capabilities by providing advanced ML features. MLTK integrates with Splunk to offer guided workflows, experiment management, and pre-configured use case patterns. This section outlines the benefits, use cases, requirements, and limitations of using MLTK to enhance ML capabilities within Splunk.

Benefits

Using MLTK for your ML use cases includes the following benefits:

| MLTK benefit | Description |
|---|---|
| Guided Workflows via the Showcase | MLTK's Showcase provides guided workflows that help users get started with ML in Splunk. These workflows include step-by-step instructions and pre-built examples, facilitating user understanding and implementation of ML models. This tooling is primarily provided for citizen data scientists and Splunk users to explore their data interactively to operationalize business/IT insights. |
| Experiment Management via the Experiments Page | The Experiments page in MLTK allows users to manage their ML experiments efficiently. It provides a centralized interface to track different experiments, compare results, fine-tune model parameters, and manage versions of ML models. |
| Use Case Patterns in MLTK's Deep Dives | MLTK offers deep dive examples for various use cases, providing users with detailed patterns and best practices for implementing ML solutions. These patterns help in identifying relevant algorithms, understanding data preparation steps, implementing feature engineering techniques, and interpreting model results. |
| Model Stacking in SPL | MLTK can stack models, allowing users to run multiple fit or apply commands in a chain within SPL. This capability facilitates the creation of more complex and sophisticated ML workflows by combining different models and techniques. MLTK allows administrators to configure compute resources from the search head available to MLTK. For both Splunk Enterprise and Splunk Cloud, the default is set to 1 CPU core to maintain search head performance, but can be configured to provide necessary resources for larger ML workloads. MLTK configuration settings allow administrators to set limits for the performance cost associated with individual ML models developed by Splunk users. See Configure algorithm performance costs. |
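
Model stacking can be sketched as a chain of `fit` commands, followed by a matching chain of `apply` commands at inference time. This example assumes the `iris.csv` sample lookup that ships with MLTK; the model names `iris_scaler`, `iris_pca`, and `iris_clusters` are hypothetical.

```spl
| inputlookup iris.csv
| fit StandardScaler petal_length petal_width sepal_length sepal_width into iris_scaler
| fit PCA "SS_*" k=2 into iris_pca
| fit KMeans "PC_*" k=3 into iris_clusters
```

Each stage consumes the fields produced by the previous one: StandardScaler writes scaled fields prefixed with `SS_`, PCA writes components prefixed with `PC_`, and KMeans adds a `cluster` field. The saved models can then be applied to new data in the same order:

```spl
| inputlookup iris.csv
| apply iris_scaler
| apply iris_pca
| apply iris_clusters
```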

Use Cases

MLTK includes more than 40 algorithms from Scikit-learn, covering a broad range of traditional ML techniques including:

- Classification

- Regression

- Clustering

- Dimensionality reduction

- Anomaly detection

- Feature selection

These algorithms can be used for various purposes, including predictive maintenance, fraud detection, customer segmentation, and more. See Algorithms in the Splunk Machine Learning Toolkit.
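
As a minimal classification sketch, the following search trains a model on the `iris.csv` sample lookup that ships with MLTK. The model name `iris_species_model` is hypothetical, and the choice of RandomForestClassifier is only one of several classifiers that could be used here.

```spl
| inputlookup iris.csv
| fit RandomForestClassifier species from petal_length petal_width sepal_length sepal_width into iris_species_model
```

Once saved, the model can be applied to other data with the same feature fields. The prediction is written to a field named `predicted(species)`:

```spl
| inputlookup iris.csv
| apply iris_species_model
| table species, "predicted(species)"
```

In practice, training and inference would run on separate data, for example by splitting the dataset before `fit` and evaluating on the held-out portion.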

Requirements

To use MLTK, the following requirements must be met on the Splunk search head, using either Splunk Enterprise or Splunk Cloud.

A Splunk Enterprise or Splunk Cloud instance, including:

- Single Server Deployment (S1)

- Distributed Non-Clustered Deployment (D1 / D11)

- Distributed Clustered Deployment - Single Site (C1 / C11)

- Distributed Clustered Deployment + SHC - Single Site (C3 / C13)

- Distributed Clustered Deployment - Multi-Site (M2 / M12)

- Distributed Clustered Deployment + SHC - Multi-Site (M3 / M13)

- Distributed Clustered Deployment + SHC - Multi-Site (M4 / M14)

Python for Scientific Computing (PSC) add-on:

- Provides the necessary Python runtime and libraries required for executing ML algorithms within Splunk.

Limitations

Using MLTK to enhance ML capabilities within Splunk has the following limitations:

| Limitation | Description |
|---|---|
| Search head computation | MLTK's computation is limited to the search head where MLTK is running. This means MLTK does not leverage Splunk indexers' scalable search and compute capabilities on fit and apply commands, including subsequent SPL commands. This potentially creates a bottleneck for resource-intensive tasks such as training models on larger historical datasets. |
| Impact on search performance | If the search head is not dedicated to MLTK, training large models can impact overall search performance. Where MLTK operations heavily use a search head, it is advisable to use a dedicated search head for MLTK operations to mitigate performance impacts or to apply other controls or restrictions to maintain search workload performance. For setting limits, see Configure algorithm performance costs. Transitioning to a dedicated search head might be necessary when the time required for initially training ML models is long. This is also true for situations involving numerous inference models that require high-frequency searches and repeated re-training. To move a model between a search head and a search head for MLTK, an administrator can migrate all settings of the search head or migrate the .ml files at <SPLUNK_HOME>/etc/apps/Splunk_ML_Toolkit/. Migrating to a dedicated MLTK search head allows for offloading the MLTK-related workloads to a dedicated search head, while the indexing tier is then used like in any other search workload. See Migrate settings from a standalone search head to a search head cluster. |
| CPU core utilization | By default, only 1 CPU core is used for search jobs and MLTK operations, including model training and inference. This can limit the performance and speed of ML tasks, especially when dealing with large datasets or complex models. MLTK search head sizing can vary widely depending on training/inference load for compute and memory. A standard sized search head will accommodate typical MLTK workloads. You can also configure Workload Management rules for the prioritization of ML-based workloads. See Workload Management overview. |
| Search head scalability | Although the search head layer can be horizontally scaled through search head clustering to run multiple MLTK models in parallel, there are still constraints regarding the scalability of individual ML tasks. Each node in the cluster must be capable of handling the specific ML workloads assigned to it. |
| Model size limitations | MLTK models have recommended or restricted size limits, particularly in search head clusters. By default, the maximum model size is 15 MB for both MLTK models and MLTK supported ONNX (Open Neural Network Exchange) models, but can be configured through the mlspl.conf file. This ensures that model sizes remain manageable and do not adversely affect system performance, especially regarding knowledge bundle replications in a search head cluster. See Upload and inference pre-trained ONNX models in MLTK and Configure algorithm performance costs. |
| Algorithm settings and restrictions | MLTK algorithms have specific settings and limitations that can be configured and tuned. However, users must be aware of inherent restrictions, such as the maximum number of events for training or the maximum runtime for training. See Configure algorithm performance costs. |
| Limited and extendable algorithm set | The set of available algorithms in MLTK is limited but extendable. Users can integrate additional algorithms by leveraging the PSC add-on, although this comes with its complexities and limitations. In addition, users can also deploy their own or pre-built open source models based on the ONNX format. See Upload and inference pre-trained ONNX models in MLTK. |

In conclusion, MLTK provides powerful tools and capabilities to advance ML use cases within Splunk. Users can move beyond core Splunk and leverage MLTK to build sophisticated ML solutions and drive insights from their data by understanding MLTK's benefits, use cases, requirements, and limitations.

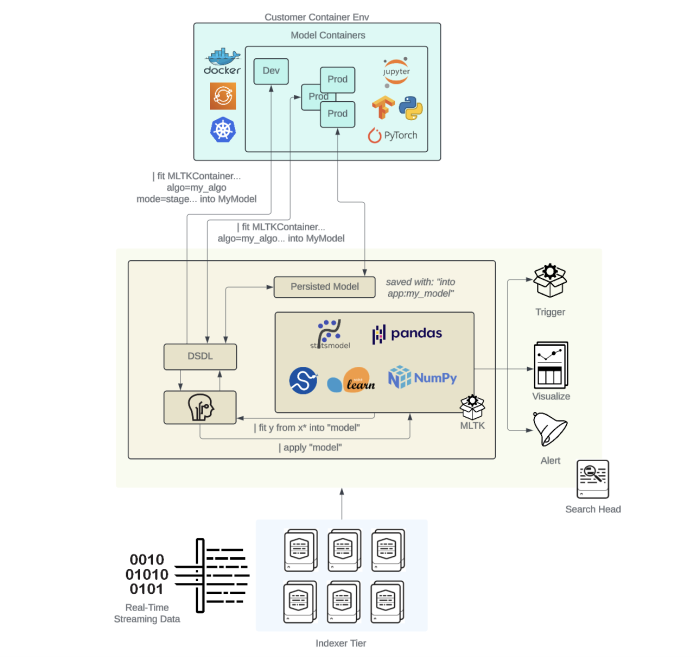

Tier 3: Custom Use Cases with DSDL

What is the Splunk App for Data Science and Deep Learning?

The Splunk App for Data Science and Deep Learning (DSDL) enhances Splunk's ML capabilities by allowing data scientists and developers to create custom ML models. DSDL extends the functionality of MLTK by connecting to a containerized JupyterLab environment. After installation, DSDL resides on the Splunk search head and enables containers based on Splunk-provided container images to run in a separate container environment. These containers connect directly to the Splunk search head, enabling data transfer for model building and experimentation.

This section outlines the benefits, use cases, requirements, and limitations of using DSDL for custom use cases.

Benefits

Custom use cases with DSDL offer the following benefits.

JupyterLab Environment for Python Development

Splunk provides DSDL-compliant container images that connect to Splunk. These images include a JupyterLab environment, a powerful and interactive development interface widely used by data scientists:

| Benefit | Description |
|---|---|
| Access to public Python libraries | Users can leverage a wide array of publicly available Python libraries, such as NumPy, Pandas, Scikit-learn, TensorFlow, and PyTorch, for data analysis, preprocessing, and model development. |
| Interactive development | JupyterLab supports interactive coding, allowing users to write, test, and iterate on their code in real time. This iterative approach is beneficial for data exploration and model experimentation. |
| Visualization tools | The environment includes robust visualization tools, such as Matplotlib and Seaborn, to create detailed plots and graphs that can help you understand data patterns and model performance. |

Offloading Compute and Training to a Separate Environment

DSDL allows for offloading computationally intensive tasks from the Splunk search head to a dedicated container environment:

| Benefit | Description |
|---|---|
| Dedicated compute resources | Use separate container environments equipped with dedicated CPUs and attached GPUs, which are required for training complex deep learning models. |
| Enhanced performance | The Splunk search head avoids performance bottlenecks associated with heavy computational loads by offloading compute tasks. |

Model Management (MLOps) Capabilities

DSDL integrates with several tools to provide robust model management capabilities from within the Splunk search head:

| Benefit | Description |
|---|---|
| MLflow integration | Facilitates the tracking of experiments, packaging of code into reproducible runs, and sharing and deploying models, allowing data scientists to manage the entire ML lifecycle. |
| TensorBoard integration | Provides visualization and tooling support to track and understand model training, including metrics like loss and accuracy, and visualization of the model graph. |
| DSDL app management | DSDL offers features for managing models, including version control (e.g. Git), deployment, and monitoring. Model management ensures that models are not only developed, but also maintained and updated effectively. |
| Logging | Each DSDL container can be instrumented at the code runtime level and the infrastructure level. This ensures full visibility into AI/ML workloads and provides valuable operational insights for troubleshooting and resource optimization. |

Use Cases

DSDL is ideal for implementing the following advanced analytics, machine learning, and deep learning use cases:

| Use case | Description |
|---|---|
| Generative AI | Applying state-of-the-art open-source Large Language Models (LLMs) for text summarization, classification, and text generation. |
| General data science tasks | Applying freeform Python-based iterative data science approaches or using specialized libraries for exploratory data analysis or data mining tasks. Practical for anomaly detection or deriving insights from datasets. |
| Natural Language Processing (NLP) | Applying models like transformers for sentiment analysis, text summarization, and named entity recognition. |
| Time Series Forecasting | Using recurrent neural networks (RNNs) and long short-term memory (LSTM) networks to predict future data points in a time series. |
| Anomaly Detection | Detecting unusual patterns in data for use cases like fraud detection, network security, and predictive maintenance. |
| Clustering | Applying advanced clustering and dimensionality reduction techniques to complex datasets to discover patterns of similarities or spot anomalies. |
| Predictive Analytics | Building models to predict future trends and behaviors based on historical data. Useful in finance, marketing, and operations. |
| Graph Analytics | Using graph modeling and graph algorithms to derive insights from connected entities. Useful for fraud use cases, behavioral analytics, or asset intelligence applications. |

Requirements

To use DSDL the following requirements must be met:

Splunk Enterprise or Splunk Cloud instance, including:

- Single Server Deployment (S1)

- Distributed Non-Clustered Deployment (D1 / D11)

- Distributed Clustered Deployment - Single Site (C1 / C11)

- Distributed Clustered Deployment + SHC - Single Site (C3 / C13)

Python for Scientific Computing (PSC) add-on:

- Provides the Python runtime and libraries required for executing ML algorithms within Splunk.

Splunk Machine Learning Toolkit (MLTK):

- Must be installed on the Splunk search head or search head cluster to leverage its functionalities.

Container Environments:

- Splunk Cloud requires an internet-connected Docker, Kubernetes, or OpenShift environment.

- Splunk Enterprise supports the same container environments as Splunk Cloud, but the container environment only needs to reach the Splunk Enterprise instance over a local network, or over the internet when the containers run in a cloud environment.

Limitations

Consider the following architecture and deployment limitations when using DSDL.

Supported Environments

DSDL architecture supports Docker, Kubernetes, Amazon Elastic Kubernetes Service (EKS), or OpenShift environments.

| Limitation | Description |
|---|---|
| Data processing location | All data is retrieved from indexers, processed by the search head, and sent via REST API to the container environment. |
| Data transfer limitations | The amount of data sent between the search head and the container environment depends on the physical connection between servers. Running within a local container environment can also cause limitations. |
| Data staging | Typically limited to transferring data samples via the Splunk REST API. Although larger datasets (several GBs) can be staged, this is more limited in interactive Jupyter SPL searches due to REST data transfer constraints. For more information, see the next section on Performance Considerations, which compares transfer throughputs using mode=stage and the interactive pull data command. |

Performance Considerations

| Limitation | Description |
|---|---|
| Search head performance | Offloading compute tasks helps maintain search head performance, but large models can still impact performance if the search head is not dedicated to DSDL operations. |
| Search head resource constraints | Search head operations use 1 CPU core per search job, including MLTK operations. Dedicated search head resources are necessary for optimal performance. Therefore, data preparation operations running on the search head for the containerized environment can lead to performance bottlenecks, even with appropriate containerized resources. |
| Indexing performance | Offloading model inference and training can reduce demands on the search head tier. On the indexing tier the demand will be similar to other search workloads that retrieve the results dataset before being sent to the container environment. |
| Data transfer throughput | The recommended method for large datasets is to push the data into a container using mode=stage in the fit command. This can transfer several gigabytes of data within minutes. Performance of the Splunk REST API constrains the use of the interactive pull data command within the JupyterLab environment, which can be used for smaller datasets in the range of hundreds of megabytes. Additional information regarding search and staging performance is found in DSDL under Operations > Runtime Performance. |
| Container environment sizing | For large ML workloads (e.g. deep neural networks with many layers/parameters, ensemble models, high-throughput with low latency inference with near real-time applications, or long training times) an NVIDIA-compatible GPU is recommended. |
| Smaller ML workloads | Traditional algorithms (e.g. decision trees, K-means) can use standard container workload-sizing specifications. |
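
The staging approach described under data transfer throughput can be sketched as follows. This assumes the `iris.csv` sample lookup from MLTK and the `barebone_template` notebook name used as both the `algo` and the model name; treat these names as illustrative and check them against your DSDL installation.

```spl
| inputlookup iris.csv
| fit MLTKContainer mode=stage algo=barebone_template petal_length petal_width sepal_length sepal_width into app:barebone_template
```

With `mode=stage`, the search pushes the selected fields into the container so that they are available for interactive development in JupyterLab, rather than running a full training pass. Replacing the `inputlookup` with an indexed search over a larger time range would follow the same pattern, with the search head and network connection as the likely constraints.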

Security Management

| Limitation | Description |
|---|---|
| Data security | Data is sent over HTTPS between the container environment and the search head. Administrators must apply standard container and network security practices to ensure data protection. |
| Container-model mapping | There is a 1:1 mapping of containers and models. |
| Model constraints | Model names must not contain whitespace, which can restrict naming conventions and organizational standards. |
| Container certificates | A certificate is generated when a DSDL container image is built. Therefore, all images downloaded from Docker Hub use the same certificate. If a custom image is built, a new certificate is generated. |
| New certificate creation | A user must first create their own certificate, deploy the root certificate to DSDL, then deploy the service certificate to Docker for an encrypted and secure connection. This is completed in DSDL under Container Setup > Certificate Settings. |
| Ports exposed | Production containers only expose the single API URL port, whereas development containers expose each of the service ports (i.e. JupyterLab, MLflow, TensorBoard, Spark, API URL). |
| Shared volumes | Models are shared across all containers within the containerized environment in a shared volume. |
| Allowlisting | An administrator must allowlist a specified IP within the Splunk Admin Config Service and configure a security group within Amazon EKS or a similar environment for an allowlisted IP address range from the search head. |


This documentation applies to the following versions of Splunk® Validated Architectures: current
