Syslog data collection

Syslog serves as a universal protocol for transmitting log data across an enterprise. Its simplicity, extensibility, and compatibility with various devices and applications make it a key component of scalable and reliable enterprise data collection frameworks.

Multiple architecture topologies exist to consolidate syslog data into Splunk, catering to diverse IT environments and requirements. These range from direct ingestion methods to more complex setups involving intermediary forwarding and aggregation layers, and each topology offers distinct attributes suited to specific scalability, reliability, and performance needs. Similarly, the choice of implementation, whether through native Splunk features, third-party tools, or custom solutions, is guided by factors such as the volume, velocity, and variety of data, as well as the specific analytical goals of the Splunk deployment.

Core syslog concepts

A number of core concepts guide architectural decision-making. This section introduces several concepts relevant to the architectures presented in subsequent sections.

Request for Comments

Multiple Requests for Comments (RFCs) describe message formats used by the syslog protocol, most notably RFC 3164 (the legacy BSD syslog format) and RFC 5424. Because multiple message format standards exist, evaluate every component of your syslog architecture to ensure compatibility. See https://www.rfc-editor.org/search/rfc_search_detail.php?title=syslog for more information.
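The two most common formats differ mainly in the header. The following minimal Python sketch is not a complete parser. A hypothetical `detect_format` helper uses simplified regular expressions to tell the two headers apart, and it assumes the headers are well formed. An RFC 5424 header carries a version number immediately after the priority. An RFC 3164 header starts with a `Mmm dd hh:mm:ss` timestamp.

```python
import re

# Simplified patterns (an assumption): they check the header shape only and do
# not validate every field the RFCs define.
_PRI = r"<(?P<pri>\d{1,3})>"

# RFC 5424: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID ...
RFC5424_HEADER = re.compile(
    _PRI + r"(?P<version>[1-9]\d{0,2}) (?P<timestamp>\S+) (?P<hostname>\S+) "
    r"(?P<app_name>\S+) (?P<procid>\S+) (?P<msgid>\S+) "
)

# RFC 3164 (BSD syslog): <PRI>Mmm dd hh:mm:ss HOSTNAME MSG
RFC3164_HEADER = re.compile(
    _PRI + r"(?P<timestamp>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) (?P<hostname>\S+) "
)


def detect_format(message: str) -> str:
    """Return 'rfc5424', 'rfc3164', or 'unknown' for a single syslog message."""
    if RFC5424_HEADER.match(message):
        return "rfc5424"
    if RFC3164_HEADER.match(message):
        return "rfc3164"
    return "unknown"


if __name__ == "__main__":
    print(detect_format("<34>1 2003-10-11T22:14:15.003Z mymachine.example.com su - ID47 - 'su root' failed"))
    print(detect_format("<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick"))
```

Some messages match neither pattern, such as vendor formats that omit the header. This is one reason every component in the pipeline needs to agree on the formats it accepts.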

Transmission Control Protocol versus User Datagram Protocol

Syslog messages can be transmitted over both Transmission Control Protocol (TCP) and User Datagram Protocol (UDP), each with its pros and cons:

- TCP: Offers a reliable, connection-oriented service that delivers messages in order and without loss at the transport layer. While TCP reduces the risk of lost messages, it introduces more overhead and complexity due to connection management.

- UDP: Traditionally, syslog used UDP for its simplicity and low overhead. UDP does not guarantee delivery, making it faster but less reliable; in high-volume environments, this can lead to lost messages. UDP is more commonly used when the syslog sources and the receiver are on the same subnet.
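The transport also changes how messages are delimited. The minimal Python sketch below assumes a receiver at a hypothetical `host` listening on port 514 for both transports, so adjust these values to your environment.

- Over UDP, each datagram carries exactly one message.
- Over TCP, the sketch uses the octet-counting framing from RFC 6587, which prefixes each message with its length in bytes.

Note that `send_udp` returns without any confirmation that the message arrived.

```python
import socket


def frame_for_udp(message: str) -> bytes:
    # One syslog message per datagram; no extra framing is added.
    return message.encode("utf-8")


def frame_for_tcp(message: str) -> bytes:
    # RFC 6587 octet counting: "<length in bytes> <message>".
    payload = message.encode("utf-8")
    return str(len(payload)).encode("ascii") + b" " + payload


def send_udp(message: str, host: str, port: int = 514) -> None:
    # Fire-and-forget: sendto() gives no indication that the message was received.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(frame_for_udp(message), (host, port))


def send_tcp(message: str, host: str, port: int = 514) -> None:
    # Connection setup and teardown are part of the overhead TCP introduces.
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(frame_for_tcp(message))
```

RFC 6587 also describes non-transparent framing, which ends each message with a trailer character such as LF. With that method, a message that itself contains an LF is split in two.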

Data identification and categorization

Identifying and categorizing syslog data is important for several reasons. First, it directly impacts system performance. Properly sorted and identified data streamlines processing and analysis, allowing quicker insights and responses. Misidentified or unsorted data can clog analysis pipelines, leading to inefficiencies and delays. Manageability is another key consideration. Syslog data comes from a variety of sources, each potentially using different formats or standards. Without clear identification and organization, managing this data becomes cumbersome. Administrators must constantly adjust filters, parsers, and analysis tools to cope with the incoming data, which takes resources and attention away from more strategic tasks.

Compliance presents a further challenge. Regulations regarding data handling, storage, and analysis govern many industries. Identifying syslog data is essential to ensure that sensitive information is treated appropriately and audit trails are complete and accurate. Failure to comply due to mismanaged data can result in hefty penalties and loss of trust.

Lastly, syslog data formats are subject to change, often without notice. Devices and applications are updated, logging levels are adjusted, and new types of events are logged. Each change can alter the data format, potentially rendering existing parsers and analysis tools ineffective. These format changes may not be immediately identified, leading to gaps in data analysis and potential security risks.

Strategies for syslog data management

Effective management of syslog data requires consideration of strategies to optimize the collection, identification, and processing of syslog data, focusing on distributing responsibility and employing advanced data triage techniques.

Distributing responsibility

One foundational strategy is to diversify syslog entry points by establishing different entry points tailored to specific data types or sources. This shifts the responsibility for sending the correct data to the appropriate destination onto the data owners. This approach has several advantages:

- Reduced processing load: The workload on Splunk administrators and the central processing system is significantly reduced by ensuring that data is pre-sorted at the source. It allows for more efficient data handling and analysis within Splunk.

- Enhanced data accuracy: With data sources configured to send data to specific entry points, the likelihood of misclassification and misidentification decreases, leading to higher data accuracy and reliability.

This strategy is not without challenges. Technical limitations of syslog data sources or the environmental context may not always permit such a configuration. Some devices may lack the capability to direct logs to different targets based on content or type, limiting the effectiveness of this approach.

Data categorization

Categorizing and sorting incoming data helps simplify syslog data management. Categorization is commonly achieved through the following techniques:

- Reception port differentiation: Assigning different ports for different types of syslog data can serve as a simple yet effective separation method, allowing for preliminary sorting at the network level.

- IP source analysis: Filtering or categorizing data based on the source IP address enables administrators to identify the data's origin, facilitating more granular management and analysis.

- Host identification: Utilizing the syslog metadata or reverse DNS lookup to identify the host sending the data can help classify logs more accurately.

- Syslog header and content analysis: Deep analysis of syslog headers and content through pattern matching or keyword detection allows for sophisticated sorting and categorization of logs.

Solutions like Splunk Connect for Syslog (SC4S) simplify this process. SC4S is a pre-processing layer that efficiently manages syslog data before it reaches Splunk, packaging and categorizing logs according to predefined rules and configurations. This not only eases the burden on Splunk administrators but also optimizes the performance and Total Cost of Ownership (TCO) of the Splunk environment.
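The four categorization techniques described above can be combined into a single decision function. The Python sketch below is hypothetical. The port numbers, subnet, hostname pattern, content pattern, and sourcetype names are illustrative placeholders. Evaluating the cheapest checks first is a design assumption, not a rule from any product.

```python
import ipaddress
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class SyslogEvent:
    port: int        # port the message was received on
    source_ip: str   # sender address as seen by the receiver
    hostname: str    # host from the syslog header or reverse DNS
    message: str     # full message text


# Hypothetical rule tables; every value here is a placeholder.
PORT_RULES = {5514: "example:firewall", 5515: "example:router"}
SUBNET_RULES = [(ipaddress.ip_network("10.20.0.0/16"), "example:switch")]
HOST_RULES = [(re.compile(r"^dc\d+\."), "example:windows")]
CONTENT_RULES = [(re.compile(r"%ASA-\d-\d{6}"), "example:asa")]


def categorize(event: SyslogEvent, default: Optional[str] = None) -> Optional[str]:
    # 1. Reception port: the cheapest check, decided at the network level.
    if event.port in PORT_RULES:
        return PORT_RULES[event.port]
    # 2. Source IP: match the sender against known subnets.
    address = ipaddress.ip_address(event.source_ip)
    for network, sourcetype in SUBNET_RULES:
        if address in network:
            return sourcetype
    # 3. Host identification: match the reported hostname.
    for pattern, sourcetype in HOST_RULES:
        if pattern.search(event.hostname):
            return sourcetype
    # 4. Header and content analysis: the most expensive check, so it runs last.
    for pattern, sourcetype in CONTENT_RULES:
        if pattern.search(event.message):
            return sourcetype
    return default
```

When no rule matches, `categorize` returns `None`, which keeps unclassified data visible instead of silently assigning it a generic sourcetype. Whether to supply a `default` is a policy decision.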

Syslog data format

Syslog data can vary significantly in format, with some logs represented as single-line entries and others spanning multiple lines. This variability presents unique challenges:

- Single-line entries: Generally straightforward to process and analyze, single-line entries must be accurately timestamped and categorized to ensure they are correctly interpreted within their operational context.

- Multi-line entries: Pose a greater challenge for log management systems. Ensuring that related lines are correctly grouped and processed as a single log entry requires advanced parsing techniques.
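One common heuristic for grouping multi-line entries treats any line that does not start a new event as a continuation of the previous one. The Python sketch below assumes, hypothetically, that every new event begins with a syslog priority field such as `<11>`. Real sources may need a different start-of-event pattern.

```python
import re
from typing import Iterable, List

# Assumption: a new event always starts with a "<PRI>" field.
EVENT_START = re.compile(r"^<\d{1,3}>")


def group_events(lines: Iterable[str]) -> List[str]:
    """Join continuation lines onto the event that precedes them."""
    events: List[str] = []
    current: List[str] = []
    for line in lines:
        # A start-of-event line closes the event collected so far.
        if EVENT_START.match(line) and current:
            events.append("\n".join(current))
            current = []
        # A leading continuation line with no prior event starts its own event.
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events
```

With this grouping, a Java stack trace sent as indented continuation lines stays attached to the exception message that introduced it.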

Preserving original log format

A fundamental principle in syslog data management is preserving the original format of the log data as much as possible, with two primary considerations:

- Syslog header: The syslog protocol, especially in its more modern form (RFC 5424), mandates a structured header. This header provides essential context, including the priority value (which encodes the facility and severity), the timestamp, and the hostname. Preserving and parsing this header is crucial for accurate log analysis.

- Splunk metadata: When syslog data is ingested into Splunk, it's important to define and assign appropriate metadata, such as index and sourcetype. This metadata facilitates efficient data organization, retrieval, and analysis within Splunk, allowing users to glean insights more effectively.
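The priority value at the start of the header packs two fields into one number: PRI = facility × 8 + severity. For example, `<34>` decodes to facility 4 (security/authorization) and severity 2 (critical), because 34 = 4 × 8 + 2. The Python sketch below decodes the priority and keeps the original message untouched under a `raw` key. The function name and dictionary layout are hypothetical.

```python
import re
from typing import Dict, Union

# Severity names indexed by severity code 0-7, as defined in RFC 5424.
SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]

PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def decode_priority(message: str) -> Dict[str, Union[int, str]]:
    """Decode facility and severity without modifying the original message."""
    match = PRI_PATTERN.match(message)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(match.group(1))
    if pri > 191:  # highest facility (23) * 8 + highest severity (7)
        raise ValueError(f"PRI value out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return {
        "facility": facility,
        "severity": SEVERITIES[severity],
        "raw": message,  # preserve the original event, header included
    }
```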

Syslog data collection implementations

The following sections describe supported architectures using Splunk Forwarders and Splunk Connect for Syslog. The Edge Processor SVA describes high-level architectures suitable for syslog ingestion.

- Direct TCP/UDP input with Universal Forwarder (UF)/Heavy Forwarder (HF): Splunk is configured to listen on a TCP or UDP port (by default, UDP 514) and to accept syslog traffic directly. Splunk strongly discourages this practice in any production environment. Direct TCP/UDP input is not supported in Splunk Cloud.

- Rsyslog or Syslog NG combined with Universal Forwarder (UF)/Heavy Forwarder (HF): Use a Splunk UF or HF to monitor and ingest files written out by a syslog server, such as rsyslog or syslog-ng. This solution is widely used by customers with existing syslog infrastructure.

- Splunk Connect for Syslog (SC4S): SC4S provides a Splunk-supported turn-key solution and utilizes the HTTP Event Collector to send syslog data to Splunk for indexing. It scales well and addresses the shortcomings of other methods. If you have existing syslog infrastructure and very specific requirements, you might prefer one of the alternative solutions.

- Edge Processor with syslog: Edge Processor supports receiving syslog directly. This is convenient for customers with data transformation requirements. However, ensure that the syslog configuration you plan to implement is supported in the current version.

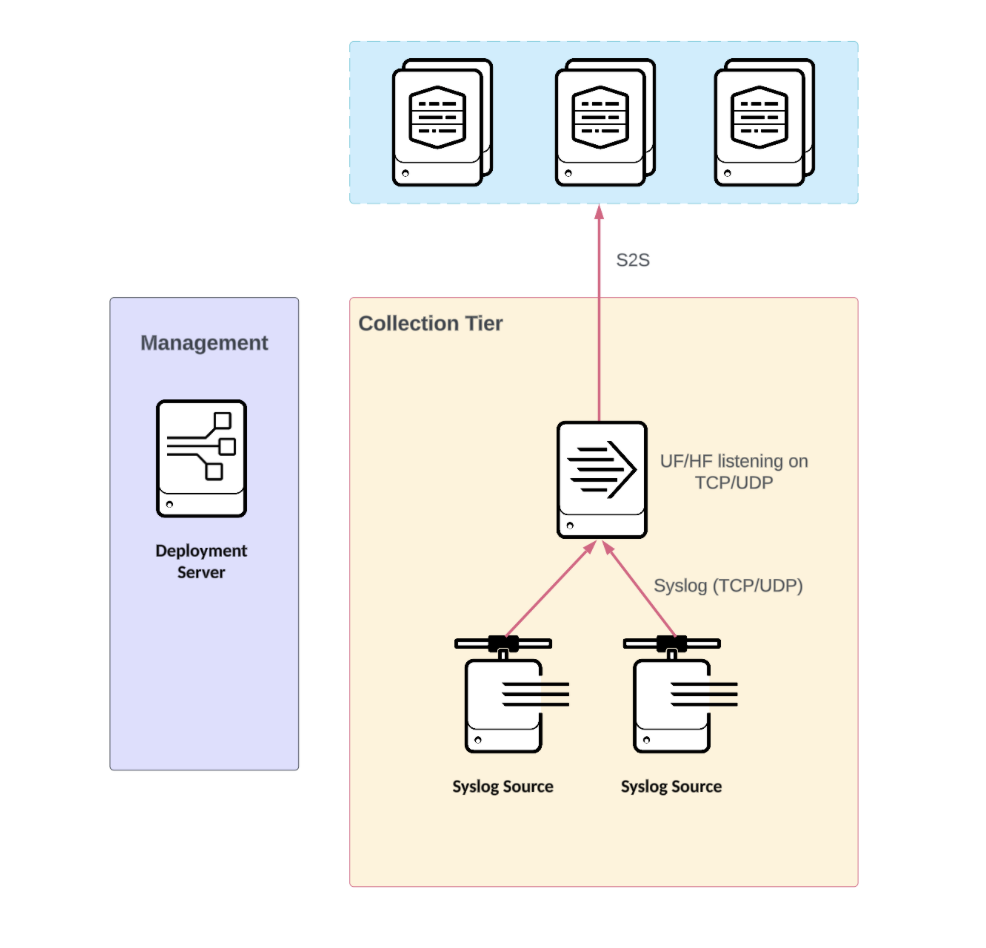

Direct TCP/UDP input with Universal Forwarder (UF)/Heavy Forwarder (HF)

Benefits

- Easily deployed for non-production environments

- Sourcetype assignment by port

- Splunk AutoLB ensures even data distribution

- Deployment server available to centrally manage the UF configuration

- Encryption through S2S protocol

- Indexer acknowledgement support for S2S, both on-premises and in Splunk Cloud

- Supports deployment scenarios with proxy requirements

- If you are using a HF, you can leverage Ingest Actions to route and clone to an S3 or NFS compatible filesystem

- If you are using a HF, you can clone all or a subset of data to a 3rd party syslog server

Limitations

- Syslog port restrictions and admin privileges need to be considered

- The UF/HF cannot continue ingesting syslog when it is in a backpressure situation, resulting in a risk of data loss. You can mitigate this with queue tuning and persistent queues on the output. Persistent queues are especially useful when cloning data, and they require very recent versions of Splunk.

- Updates requiring a reload or restart might result in a loss of data in the time taken to restart the Splunk forwarding service.

- There is no automatic sourcetype recognition, but it can be achieved through custom props and transforms.

- It has a single point of failure.

- It is not highly available.

- There is a long recovery time in case of disaster.
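The backpressure risk arises because a syslog sender cannot be told to slow down, particularly over UDP. Anything the forwarder cannot queue is therefore lost. The hypothetical Python sketch below models the queue-based mitigation in simplified form. A bounded in-memory queue spills to a disk file when full, standing in for a persistent queue. Messages are dropped only when both the memory queue and the disk file are exhausted. The class, limits, and file layout are illustrative assumptions and do not reflect how Splunk implements its queues.

```python
import tempfile
from collections import deque
from pathlib import Path


class SpillingBuffer:
    """Hypothetical output buffer: memory first, then disk, then drop."""

    def __init__(self, memory_limit: int, spill_file: Path, disk_limit: int) -> None:
        self.memory: deque = deque()
        self.memory_limit = memory_limit
        self.spill_file = spill_file
        self.disk_limit = disk_limit
        self.spilled = 0
        self.dropped = 0

    def offer(self, message: str) -> str:
        """Accept one message while the downstream output is blocked."""
        if len(self.memory) < self.memory_limit:
            self.memory.append(message)
            return "memory"
        if self.spilled < self.disk_limit:
            # One message per line; assumes messages contain no newlines.
            with self.spill_file.open("a", encoding="utf-8") as handle:
                handle.write(message + "\n")
            self.spilled += 1
            return "disk"
        self.dropped += 1  # nowhere left to put it: this is the data loss
        return "dropped"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        buffer = SpillingBuffer(memory_limit=2, spill_file=Path(tmp) / "spill.log", disk_limit=1)
        print([buffer.offer(f"event {i}") for i in range(4)])
```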

It is highly recommended not to send syslog directly to indexers, whether distributed or clustered, because this is unlikely to provide the required availability and data distribution. This applies to all Search and Indexing topologies, including Standalone (S1).

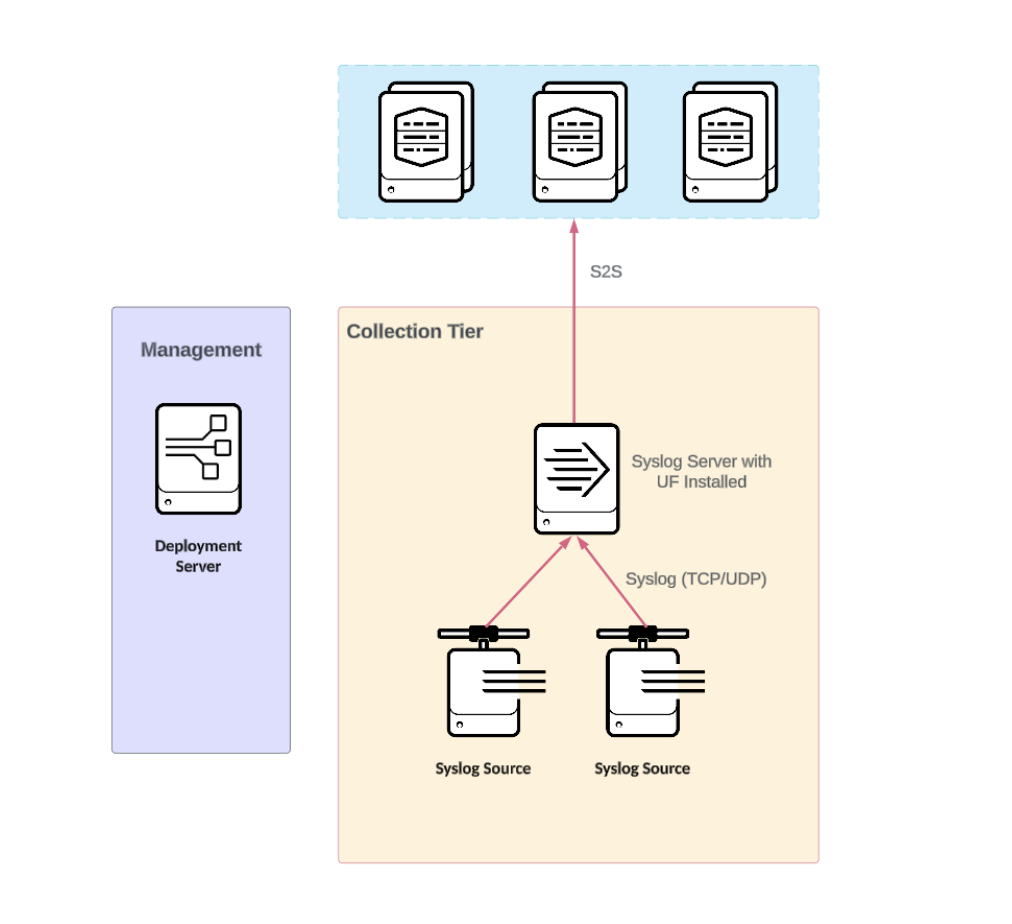

Rsyslog or Syslog NG combined with Universal Forwarder (UF)/Heavy Forwarder (HF)

This architecture uses a Splunk UF/HF to process and ingest syslog sources that a syslog server writes to disk on an endpoint. The most commonly encountered syslog servers, rsyslog and syslog-ng, are available in free and commercial editions. Both are scalable and simple to integrate and manage in small-scale to large-scale distributed environments.

This architecture supports data onboarding using standard inputs on UF/HFs and is widely used by customers with existing syslog infrastructure and heavy syslog customizations. The syslog server is configured to identify log types and write log events to files and directories where a Splunk forwarder can pick them up. Writing events to disk adds limited persistence to the syslog log stream, limiting exposure to data loss for messages sent over the unreliable UDP transport.
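The following Python sketch shows the file-per-source-type idea in simplified form. The directory layout (`<base>/<sourcetype>/<host>/<YYYY-MM-DD>.log`) and the helper names are hypothetical. In practice, rsyslog or syslog-ng templates produce the files and the forwarder only reads them.

```python
import re
import tempfile
from datetime import date
from pathlib import Path


def event_path(base: Path, sourcetype: str, host: str, day: date) -> Path:
    """Hypothetical layout: <base>/<sourcetype>/<host>/<YYYY-MM-DD>.log"""
    # Replace unexpected characters so a hostname cannot add path segments.
    safe_host = re.sub(r"[^A-Za-z0-9._-]", "_", host)
    if safe_host in {"", ".", ".."}:
        safe_host = "unknown"  # block traversal through "." or ".."
    return base / sourcetype / safe_host / f"{day.isoformat()}.log"


def write_event(base: Path, sourcetype: str, host: str, day: date, message: str) -> Path:
    """Append one event as a single line and return the file it was written to."""
    path = event_path(base, sourcetype, host, day)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as handle:
        handle.write(message.rstrip("\n") + "\n")
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_event(Path(tmp), "firewall", "fw1", date(2024, 1, 2), "<34>Jan  2 10:00:00 fw1 deny"))
```

In this layout the host name is a fixed path segment. A forwarder monitor input can therefore derive the host field from the path, for example with the `host_segment` setting in inputs.conf.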

Benefits

- Writing syslog events to separate files and directories for each source type simplifies management.

- Events persisted in files offer buffering, subject to infrastructure limitations.

- The Universal Forwarder monitors files and forwards data to the configured index.

- It supports sourcetype assignment by port.

- Splunk AutoLB ensures even data distribution.

- Deployment server is available to centrally manage the UF configuration.

- It has encryption through S2S protocol.

- It supports indexer acknowledgement for S2S, both on-premises and in Splunk Cloud.

- It supports deployment scenarios with proxy requirements.

- Splunk Support covers the forwarders (UF/HF), and an enterprise support contract for rsyslog or syslog-ng is recommended.

- If you are using a Heavy Forwarder, you can leverage Ingest Actions to route and clone to S3 or NFS compatible filesystems.

- Data can be cloned fully or as a subset at the syslog server level to a 3rd party, which may facilitate deployments in some environments as data sent to 3rd party never flows through a Splunk component, helping meet some supportability requirements.

Limitations

- A single syslog server and forwarder configuration has limited capacity, and that capacity can only be increased through vertical scaling.

- There is no automatic sourcetype recognition, but it can be achieved via custom props and transforms.

- Support contract(s) might be necessary for third-party syslog servers.

- Updates requiring a reload or restart might result in a loss of data in the time taken to restart the service.

- It has a single point of failure.

- It is not highly available.

- There is a long recovery time in case of disaster.

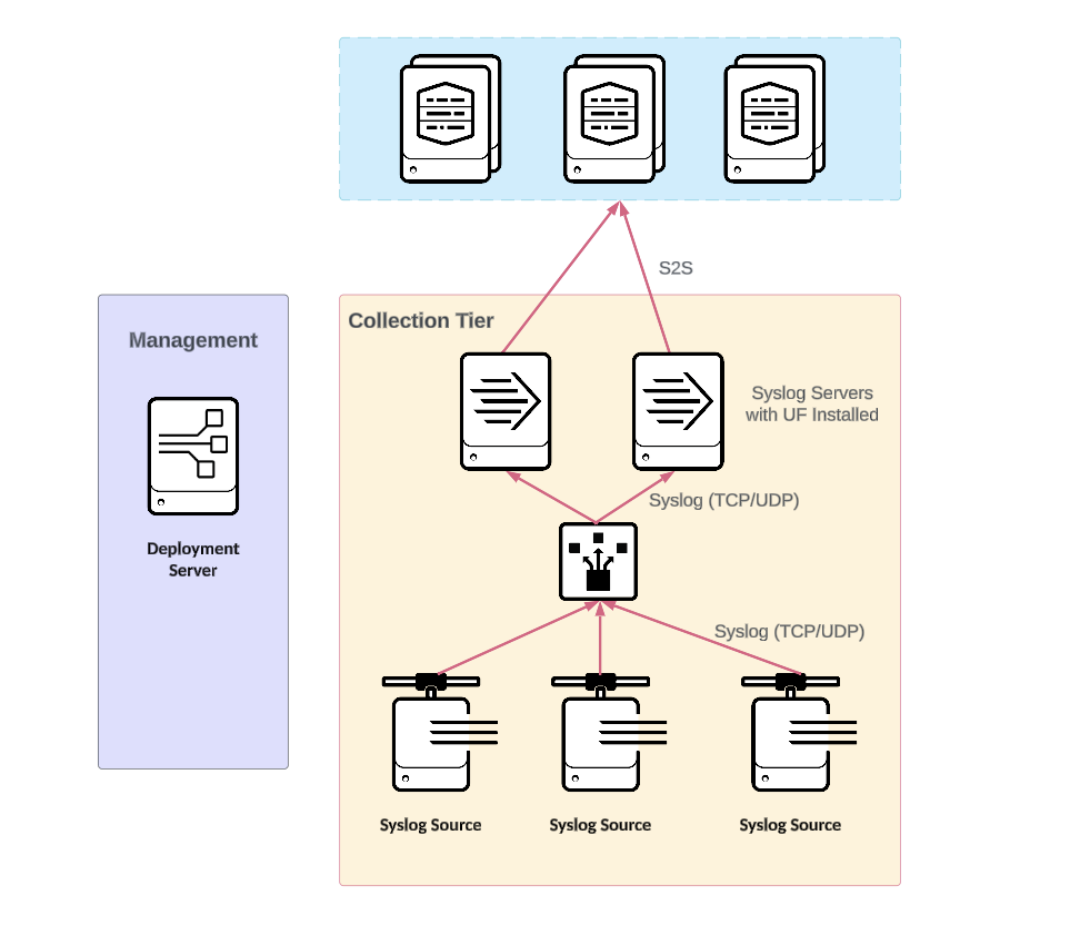

Rsyslog or Syslog NG combined with Universal Forwarder (UF)/Heavy Forwarder (HF) high availability deployments

Active or passive Splunk forwarder architectures are not recommended in most cases. In these architectures, syslog data is duplicated in one of three ways: by data sources sending twice, by load balancer configuration, or by storage replication. A failover then carries a high risk of data reingestion or duplication.

Universal Forwarder benefits

- It offers increased capacity through horizontal scaling.

- The use of multiple syslog servers with forwarders installed offers HA for the collection tier.

- It offers protection against data loss during maintenance operations.

- A single IP simplifies client configuration.

- It uses significantly fewer hardware resources than other Splunk products. See Benefits of the universal forwarder in the Forwarder manual for more information.

Universal Forwarder limitations

- Syslog collection is dependent on the availability of the load balancer.

- The load balancer might distribute data unevenly, because it cannot account for the volume each connection carries. It might also split events if session management is configured incorrectly.
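Unbalanced distribution occurs when a load balancer pins each long-lived syslog connection to one receiver and assigns connections round-robin, without regard to data volume. The Python sketch below uses hypothetical sources and daily event counts to show the effect. Both receivers get two connections each, but one ends up carrying most of the data.

```python
from itertools import cycle
from typing import Dict, List, Tuple


def round_robin_by_connection(connections: List[Tuple[str, int]],
                              receivers: List[str]) -> Dict[str, int]:
    """Assign each connection to the next receiver; return events/day per receiver."""
    load = {receiver: 0 for receiver in receivers}
    # The balancer sees connections, not volume, so every connection counts the same.
    for (_source, events_per_day), receiver in zip(connections, cycle(receivers)):
        load[receiver] += events_per_day
    return load


if __name__ == "__main__":
    # Hypothetical sources and daily volumes.
    connections = [("core-firewall", 9_000_000), ("switch-01", 50_000),
                   ("switch-02", 50_000), ("vpn", 900_000)]
    print(round_robin_by_connection(connections, ["rx1", "rx2"]))
```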

Heavy Forwarder benefits

- It has additional capacity through horizontal scaling.

- Multiple syslog servers with forwarders installed offer HA for the collection tier.

- It offers protection against data loss during maintenance operations.

- A single IP simplifies client configuration.

Heavy Forwarder limitations

- It uses significantly more hardware resources than Splunk Universal Forwarders. See Forwarder comparison in the Forwarder manual for more information.

- Syslog collection is dependent on the availability of the load balancer.

- The load balancer might distribute data unevenly, because it cannot account for the volume each connection carries. It might also split events if session management is configured incorrectly.

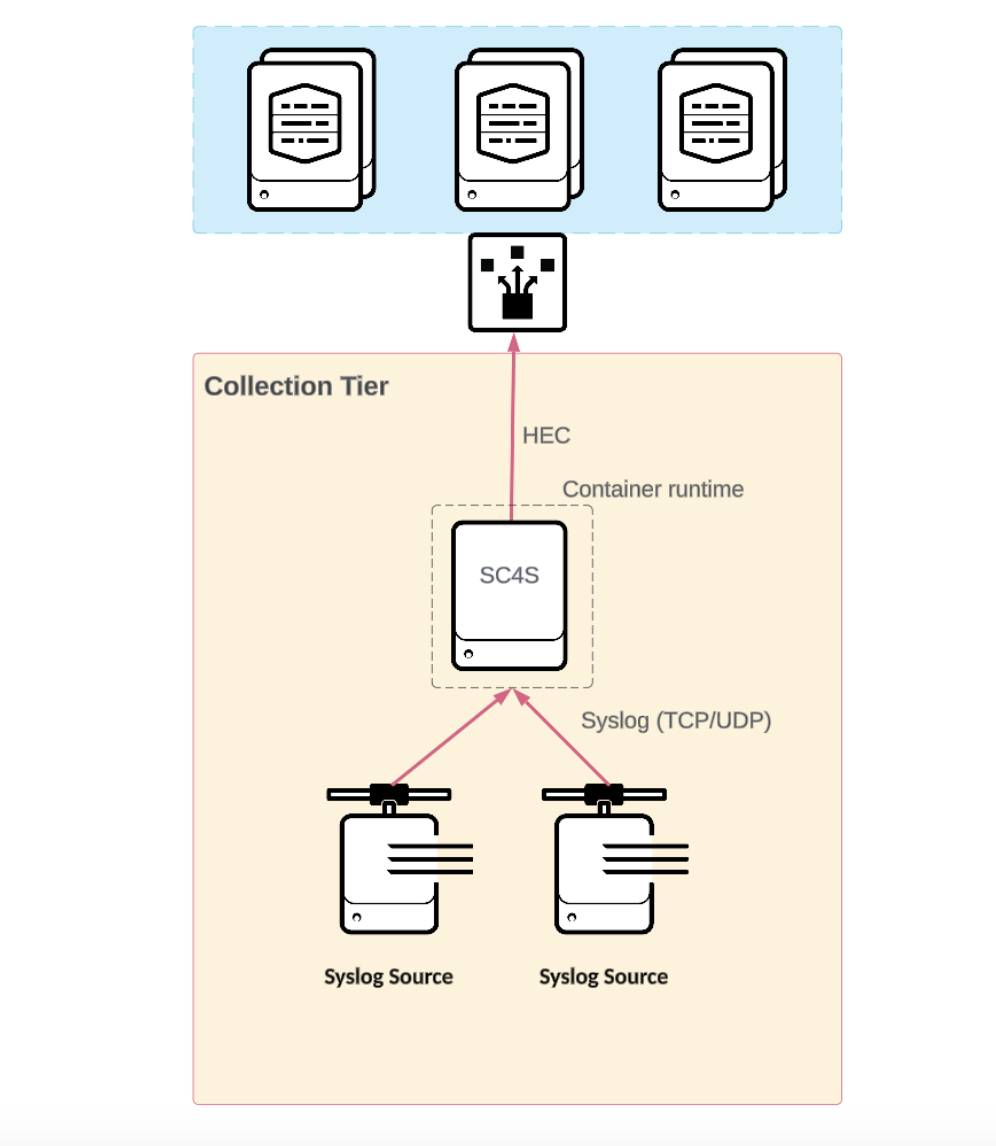

Splunk Connect for Syslog (SC4S)

Syslog data collection is complex at scale. To help customers address this complexity, Splunk developed Splunk Connect for Syslog (SC4S).

Splunk Connect for Syslog is offered as an OCI-compliant container and a "Bring Your Own Environment" (BYOE) option if you prefer not to use containers. Both options are based on Syslog-ng server software and encapsulate the same set of configurations, while differing on how Syslog-ng itself is instantiated. Splunk recommends the SC4S containerized solution for all customers who are able to adopt it. Refer to the SC4S Getting Started guide for supportability considerations for non-containerized SC4S deployments.

Events from a source with a 'filter' log path configuration will be identified, parsed, and formatted into JSON before being streamed to Splunk via HTTP/HTTPS. A network load balancer between SC4S and the Indexers helps achieve the best data distribution possible. The events hitting the Indexer are already parsed, so an add-on on the indexer(s) is not needed in most cases.

A lighter variant, SC4S Lite, is also available. It disables modules by default for improved performance and simplified diagnosability.
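SC4S streams parsed events to Splunk through the HTTP Event Collector (HEC). For illustration, the Python sketch below builds a payload for the HEC event endpoint, which accepts the following JSON keys:

- Metadata keys such as `time`, `host`, `source`, `sourcetype`, and `index`.
- The `event` key, which carries the event itself.
- An optional `fields` key for indexed fields.

This is not SC4S's actual output format. The helper names, the example host name, and the token are hypothetical placeholders. HEC listens on port 8088 by default and expects an `Authorization: Splunk <token>` header. The request is built but not sent.

```python
import json
import urllib.request
from typing import Dict, List, Optional


def build_hec_event(raw: str, epoch_seconds: float, host: str, sourcetype: str,
                    index: str, source: str = "syslog",
                    fields: Optional[Dict[str, str]] = None) -> str:
    """Serialize one already-parsed syslog event as a HEC JSON event."""
    payload: Dict[str, object] = {
        "time": epoch_seconds,
        "host": host,
        "source": source,
        "sourcetype": sourcetype,
        "index": index,
        "event": raw,
    }
    if fields:
        payload["fields"] = fields  # indexed fields: enrichment beyond default metadata
    return json.dumps(payload)


def build_hec_request(url: str, token: str, events: List[str]) -> urllib.request.Request:
    """Batch several JSON events into one POST request (built, not sent)."""
    body = "\n".join(events).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Splunk {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Each event arrives with its sourcetype and metadata already set. This is why the indexers usually do not need an add-on to parse it.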

Benefits

- It is a prescriptive Splunk solution for syslog.

- It is officially supported by Splunk if you use a supported deployment topology.

- It reduces or removes the need for add-on installation and management on indexers.

- A turnkey deployment is available via the SC4S container architecture.

- It has a custom deployment option through a "Bring Your Own Environment" distribution.

- Community-maintained data source filters reduce the level of implementation effort.

- It enhances data enrichment beyond the standard Splunk metadata of timestamp, host, source, and sourcetype.

- It supports custom filters for additional sourcetypes beyond those supported out of the box.

- Events are persisted to disk in the event of a target failure, subject to infrastructure limitations.

- It has the ability to send to multiple destinations. See destinations in the Splunk Connect for Syslog manual for more information.

- It has the ability to send through an HTTP proxy.

- It has the ability to archive in a JSON format.

- Performance tests and sizing are published in the full edition documentation for SC4S.

Limitations

- Indexer acknowledgement support for HEC is only offered in Splunk Enterprise.

- Updates requiring a reload or restart might result in data loss in the time taken to restart the service.

- Not all container topologies are currently documented, which can impact deployment and supportability.

- In a container mode deployment without an external load balancer, SC4S availability is de facto limited by the availability of the underlying infrastructure.

- Heavily customized syslog environments with a different logic than Splunk Connect for Syslog may not be suitable for conversion to SC4S without reengineering.

- Unexpected log format changes may impact the ability to parse logs.

- It is not currently possible to assign sourcetypes port by port with a default catch-all sourcetype. If a log is not correctly identified, it is not sent.

- App parsers have expanded their technology coverage over time, resulting in improved default detections. However, parsers can impact performance and make issues harder to identify.

- Advanced filtering with SC4S requires advanced syslog-ng syntax knowledge.

- SC4S Lite is currently in experimental status, and no specific performance tests are available for it. However, it is designed to perform better than the published SC4S full edition.

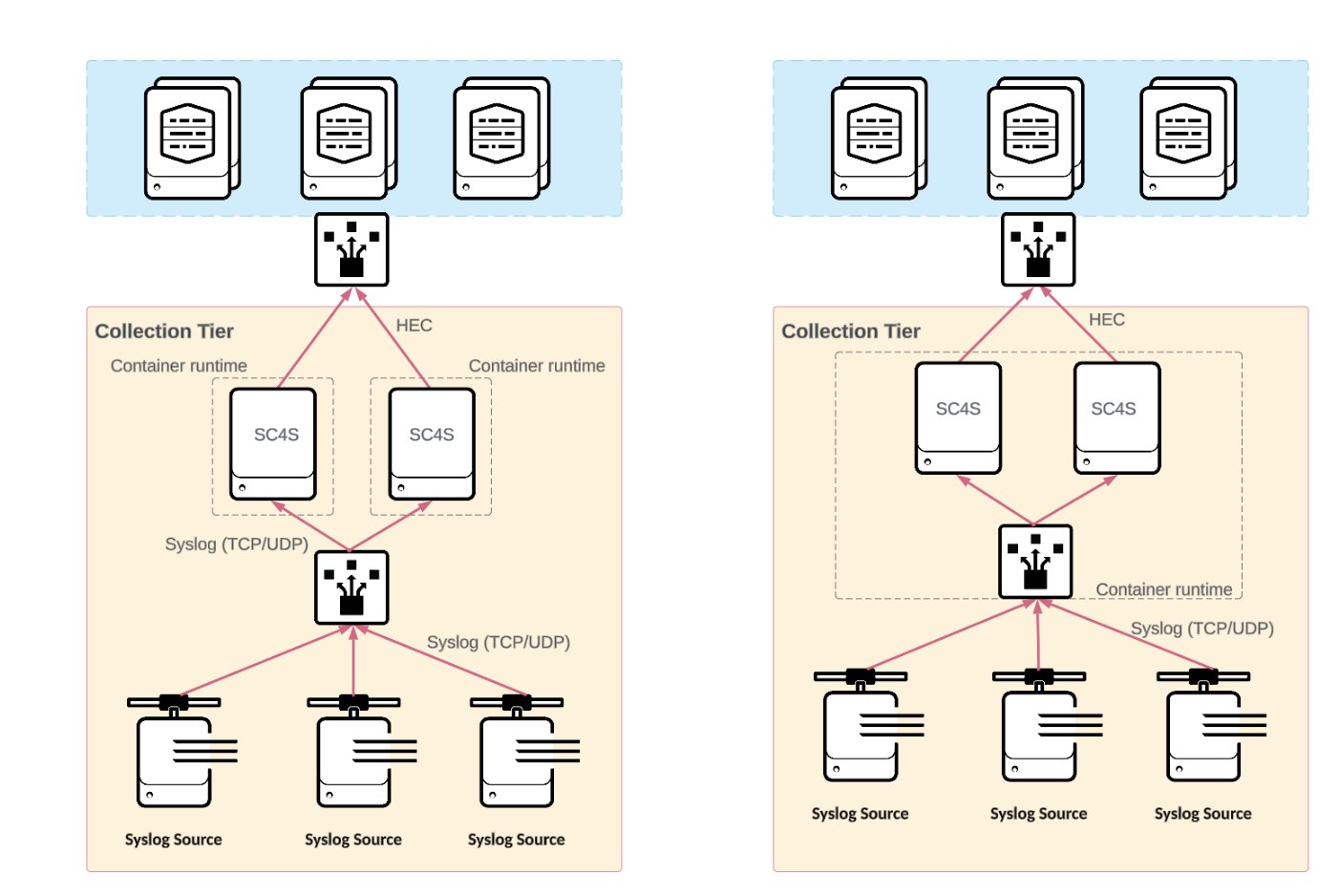

SC4S high availability deployments

Container runtimes and orchestration tools offer varying capabilities to support HA deployments of SC4S such as ensuring workload availability, scaling, and load balancing. Runtime specific guidance is available in the SC4S documentation. Although Splunk maintains container images, it doesn't directly support or otherwise provide resolutions for issues within the runtime environment.

Benefits

- Multiple servers increase the availability for the collection tier when it is implemented behind a load balancer.

- It offers additional capacity through horizontal scaling.

- It offers protection against data loss during maintenance operations.

- The single IP simplifies client configuration.

- It can be deployed as multiple independent SC4S containers behind an external load balancer, or through container runtimes that support built-in load balancing.

Limitations

- The load balancer might distribute data unevenly because it cannot account for the volume each connection carries.

- A load balancer is recommended in front of HEC for optimal distribution purposes, which might not be available in all environments.

- SC4S configurations might require synchronization through external configuration management tools.

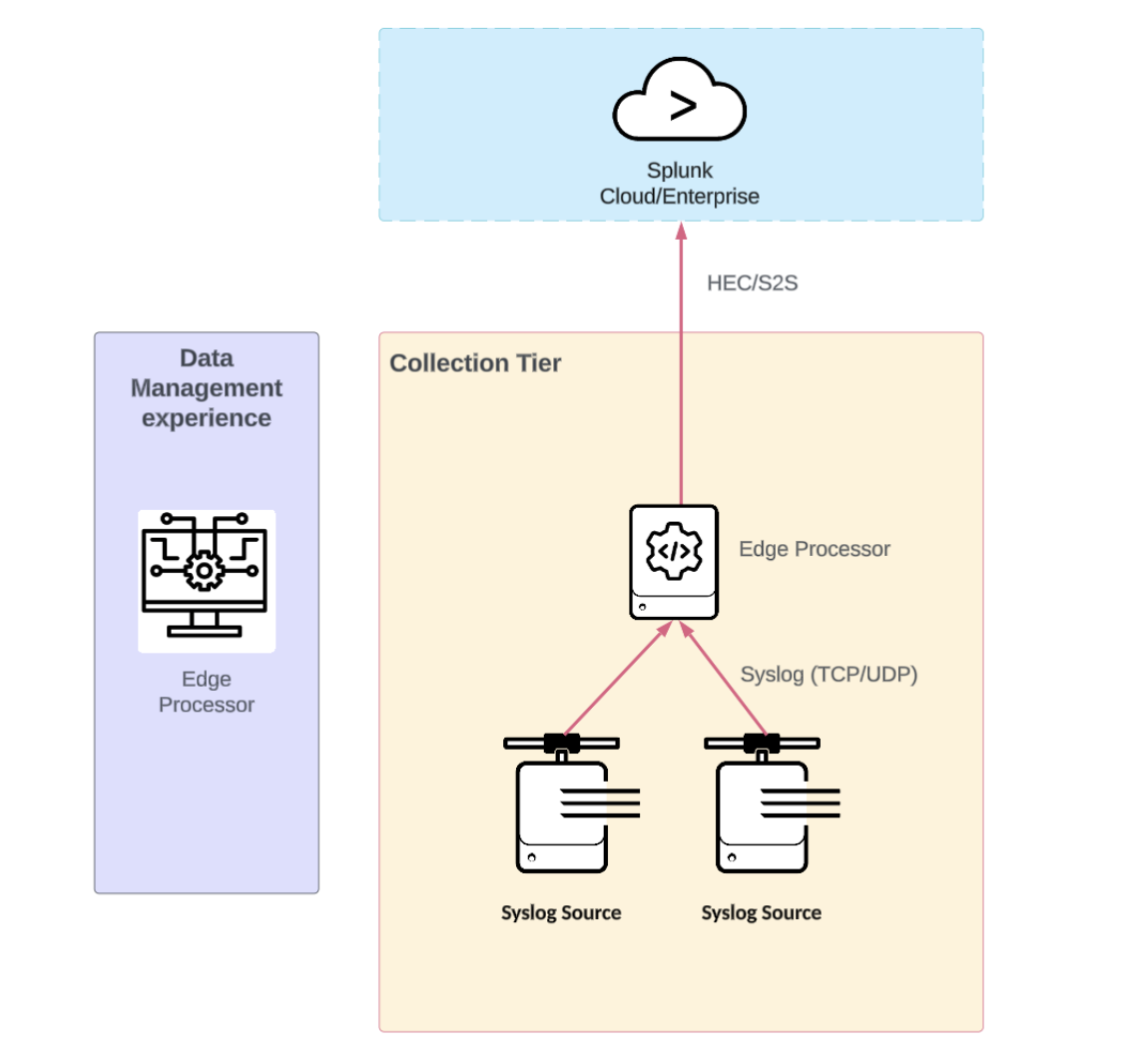

Splunk Edge Processor syslog receiver

Splunk Edge Processor supports accepting syslog traffic directly on Edge Processor nodes. Edge Processor uses Splunk's SPL2 to filter, route, mask, and otherwise transform data close to the source, before routing it to supported destinations.

Review the Edge Processor SVA for additional details on architecting Edge Processor deployments, which are outside the scope of this document.

Benefits

- It offers centralized node management through Splunk Cloud.

- It supports routing syslog data to Splunk Cloud, Splunk Enterprise, and Amazon S3.

- It increases capacity through horizontal and vertical scaling.

- Edge Processor nodes can process multiple protocols and are not limited to syslog.

- It has encryption in transit via HEC or S2S protocols.

- Events are persisted to the disk in the event of a target failure, but that is subject to infrastructure limitations.

- It supports sourcetype assignment by port.

Limitations

- It requires a Splunk Cloud Platform subscription.

- There are pitfalls in load balancing syslog over UDP and over TCP/TLS, and these apply irrespective of Edge Processor.

- There is no automatic sourcetype recognition.

- Applying updates and modifications requires a service restart. Although the restart is very fast, a very small amount of data could be lost in the time it takes.

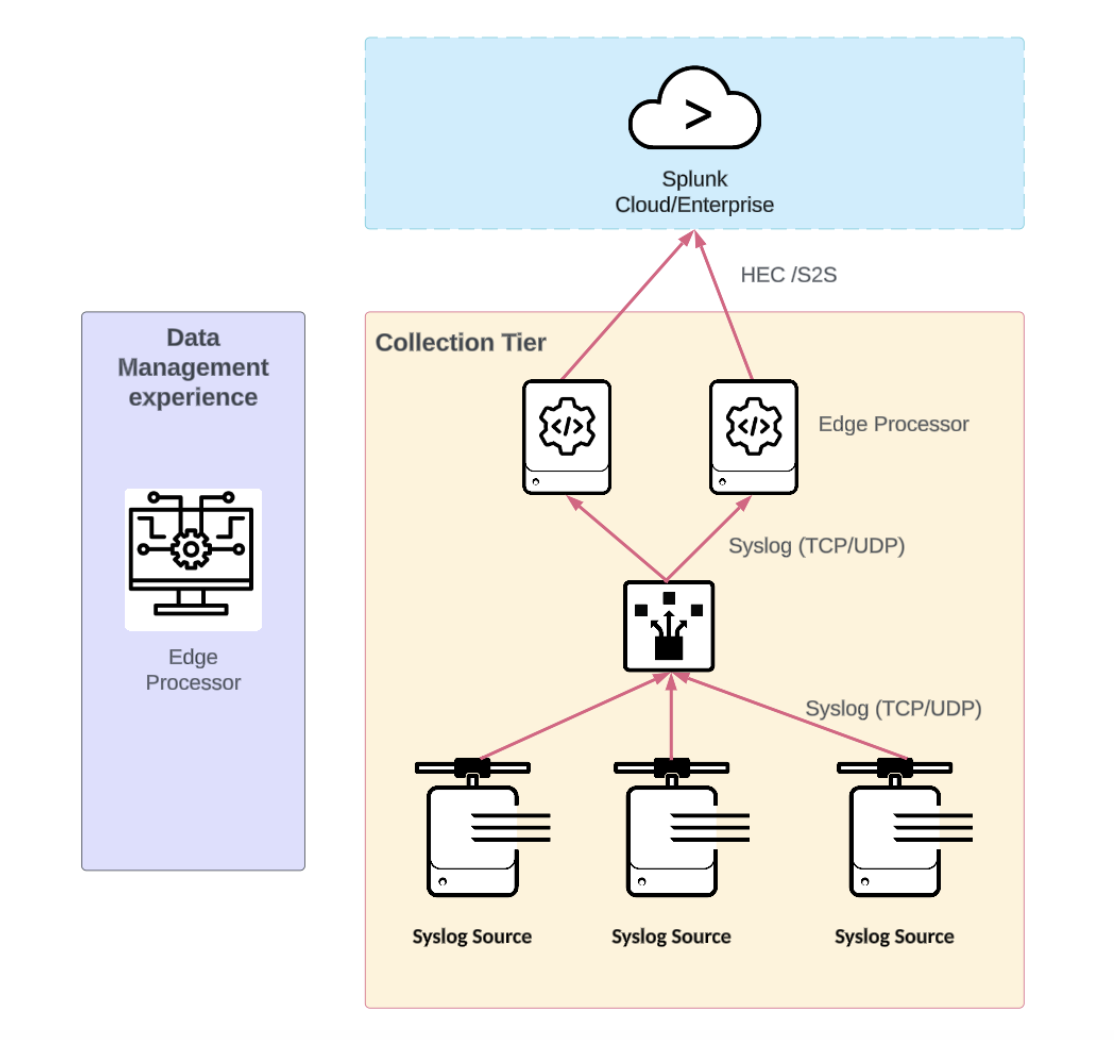

Splunk Edge Processor high availability syslog deployments

Benefits (in addition to non-HA)

- It has additional capacity through horizontal scaling.

- Multiple servers increase availability for the collection tier when implemented behind a load balancer.

- It offers protection against data loss during maintenance operations.

- The single IP simplifies client configuration.

Limitations

- A load balancer is recommended in front of HEC in Splunk Enterprise deployments, which may not be available in all environments.

- Syslog collection is dependent on the availability of the load balancer.

- The load balancer might distribute data unevenly because it cannot account for the volume each connection carries.

General syslog architecture topology descriptions

The topology chosen plays an important role in balancing the trade-offs between scalability, reliability, and cost in the design of a syslog infrastructure. This section describes the most common deployment topologies, along with the benefits and limitations of each. Combining a specific implementation from the previous section with a topology from this section yields attributes suited to common syslog requirements.

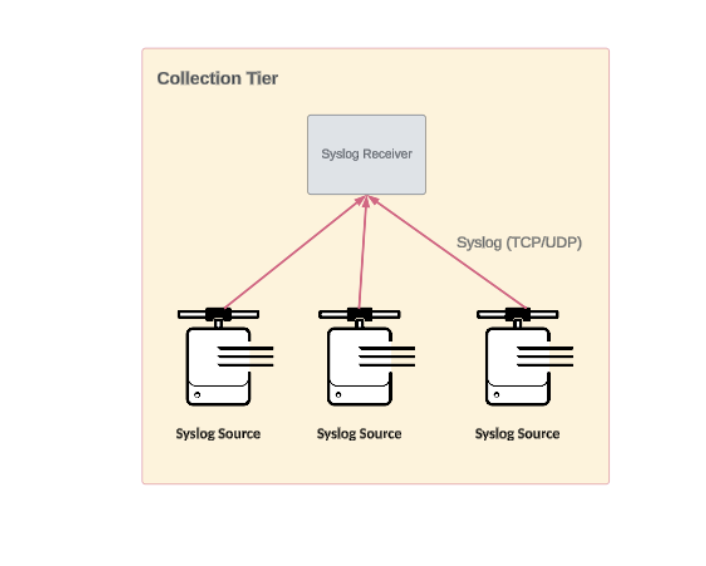

The diagrams and descriptions below use the term syslog receiver to generically represent a specific implementation described in the Syslog data collection implementations section.

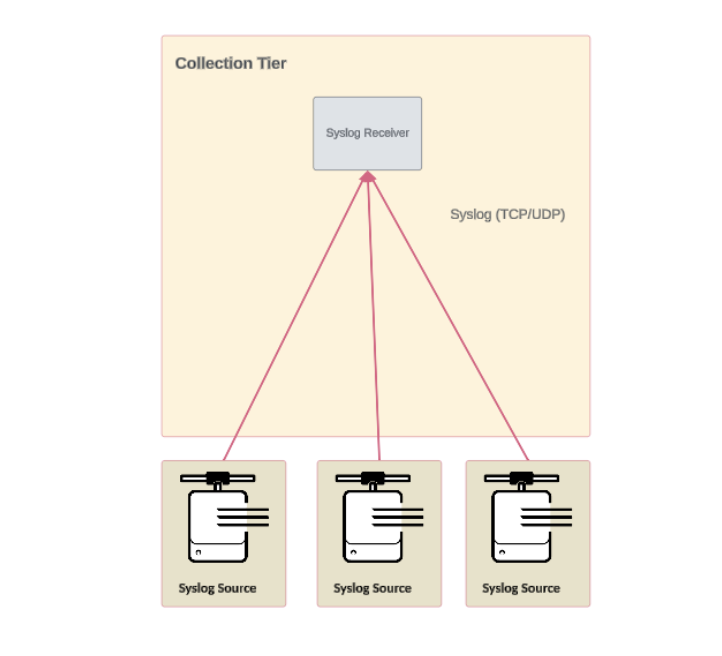

Basic deployment topology

The most basic syslog topology is a single site with a single syslog receiver. The syslog receiver accepts data from the syslog sources, might perform some data processing, and forwards the data for further processing and indexing.

Benefits

- It is cost-effective.

- It is easy to manage.

- It is suitable for smaller networks.

Limitations

- Its capacity is limited to what a single node can process, and it can only scale vertically.

- It has a single point of failure.

- It is not highly available.

- There is a long recovery time in case of disaster.

Distributed syslog sources with centralized syslog deployment topology

This topology provides a centralized log collection tier for distributed syslog sources. This approach simplifies the operation of the syslog infrastructure at the expense of potential data loss.

Benefits

- It is cost-effective.

- Its infrastructure is easy to manage.

- It is suitable for small to midsize organizations.

Limitations

- Its capacity is limited to what a single node can process, and it can only scale vertically.

- The risk of data loss increases with longer network paths between log sources and the central receiver. UDP protocol transmission is particularly susceptible to data loss.

- It has a single point of failure.

- It is not highly available.

- There is a long recovery time in case of disaster.
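One rough way to reason about the longer-path risk rests on a simplifying assumption: each network hop independently drops a UDP datagram with some probability p_i. The delivered fraction is then the product of (1 - p_i) over all hops, so loss compounds with path length. The per-hop loss rate and the message volume in the Python sketch below are hypothetical.

```python
import math
from typing import Sequence


def delivered_fraction(per_hop_loss: Sequence[float]) -> float:
    """Fraction of datagrams delivered, assuming independent loss at each hop."""
    return math.prod(1.0 - p for p in per_hop_loss)


def expected_lost(messages: int, per_hop_loss: Sequence[float]) -> float:
    """Expected number of messages lost across the whole path."""
    return messages * (1.0 - delivered_fraction(per_hop_loss))


if __name__ == "__main__":
    daily_messages = 10_000_000  # hypothetical volume
    for hops in (2, 8):
        lost = expected_lost(daily_messages, [0.001] * hops)  # hypothetical 0.1% loss per hop
        print(f"{hops} hops: ~{lost:,.0f} messages lost per day")
```

The independence assumption is a simplification. Real loss is often bursty and concentrated at congested links, which this model does not capture.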

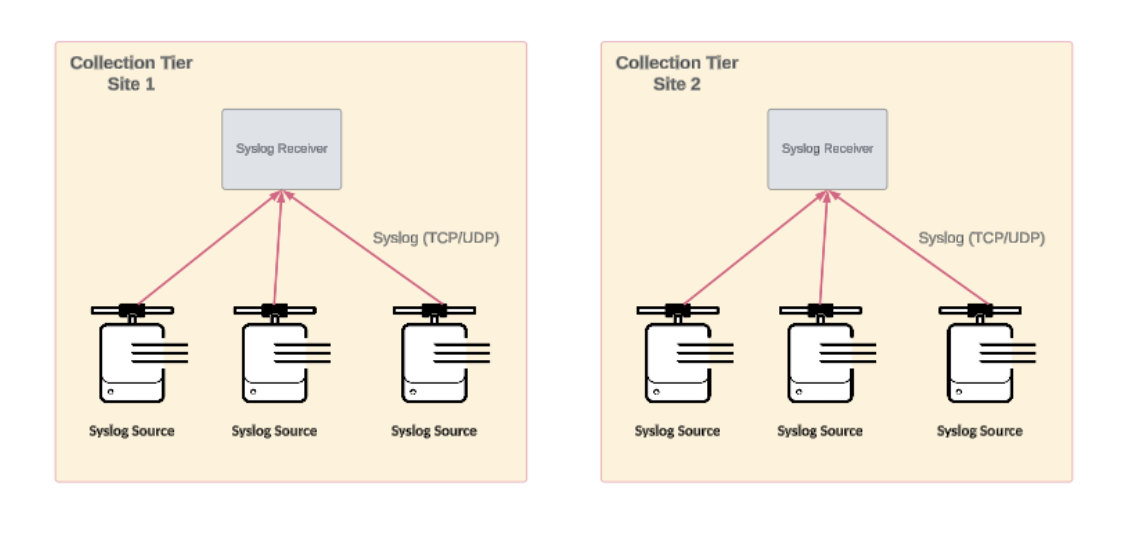

Distributed syslog sources and collection topology

A distributed syslog architecture scales horizontally and helps minimize potential bottlenecks. In such configurations, syslog receivers are strategically deployed across network segments or geographical locations closer to the log data sources. This proximity reduces the risk of data loss and latency, which is critical for environments generating high volumes of log data across dispersed locations. A distributed approach reduces the risk of data loss and improves log collection efficiency by employing a syslog collection tier in each logical or physical segment.

Benefits

- It is suitable for distributed or highly segmented environments.

- It reduces the risk of data loss by locating the collection tier near the data source.

Limitations

- Its capacity is limited to what a single node can process, and it can only scale vertically.

- The risk of data loss increases with longer network paths between log sources and the central receiver. UDP protocol transmission is particularly susceptible to data loss.

- There are higher initial setup costs.

- Support contract(s) might be necessary for third-party syslog servers.

- It has more complex management and maintenance requirements.

- It has a single point of failure.

- It is not highly available.

- There is a long recovery time in case of disaster.


This documentation applies to the following versions of Splunk® Validated Architectures: current
