AWS CloudWatch integration for Splunk On-Call

The Splunk On-Call (formerly VictorOps) integration with AWS CloudWatch forwards AWS CloudWatch alerts to Splunk On-Call so that the right on-call users are notified. Create on-call schedules, rotations, and escalation policies in Splunk On-Call, then route AWS alerts based on those parameters.

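When an event meets preconfigured monitoring criteria, AWS sends an alert notification. In the Splunk On-Call timeline, users can then route the important alert data and escalate it to the right people. With the integration in place, on-call responders can collaborate in real time around system data, reduce MTTA/MTTR, and resolve incidents faster.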

Requirements

This integration is compatible with the following versions of Splunk On-Call:

Starter

Growth

Enterprise

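This guide assumes that you have already configured CloudWatch to send alarms to an SNS topic and that you receive those alarms by email or some other method. For details on how to create a new alarm, see the official Amazon documentation.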

Note

This integration works only with Amazon CloudWatch. SNS messages from other Amazon services fail if you send them directly to the Splunk On-Call CloudWatch endpoint.

Turn on AWS CloudWatch in Splunk On-Call

In Splunk On-Call, select Integrations, then AWS CloudWatch.

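If the integration is not yet enabled, select Enable Integration to generate the endpoint URL. Replace the $routing_key section of the new endpoint with the routing key you want to use. If the endpoint URL does not include a routing key, the subscription is not confirmed. For details, see Create routing keys in Splunk On-Call.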
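The routing key substitution described above can be sketched as follows. This is a minimal illustration, not the product's own tooling: `ENDPOINT_TEMPLATE` uses a made-up host and API key, and `build_endpoint` is a hypothetical helper. Copy the real endpoint URL from the integration page in Splunk On-Call.

```python
# Hypothetical endpoint template. The host, path, and API key are placeholders;
# the real URL is generated by Splunk On-Call when the integration is enabled.
ENDPOINT_TEMPLATE = (
    "https://alerts.example.invalid/cloudwatch/EXAMPLE_API_KEY/$routing_key"
)

PLACEHOLDER = "$routing_key"


def build_endpoint(template: str, routing_key: str) -> str:
    """Return the endpoint URL with the $routing_key section filled in."""
    if PLACEHOLDER not in template:
        raise ValueError("template has no $routing_key section to replace")
    if not routing_key or any(ch in routing_key for ch in "/ $"):
        raise ValueError("routing key must be non-empty without '/', ' ' or '$'")
    return template.replace(PLACEHOLDER, routing_key)


if __name__ == "__main__":
    # "database-team" is an example routing key, not one from this guide.
    print(build_endpoint(ENDPOINT_TEMPLATE, "database-team"))
```

Rejecting an empty key up front mirrors the rule that a URL without a routing key never gets a confirmed subscription.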

In Splunk On-Call, you can point multiple SNS subscriptions at the same AWS CloudWatch endpoint. If a subscription hits a different endpoint, it is not confirmed.

Note

After you finish the configuration, AWS confirms the subscription automatically within a few minutes.

Link Splunk On-Call in AWS Simple Notification Service (SNS)

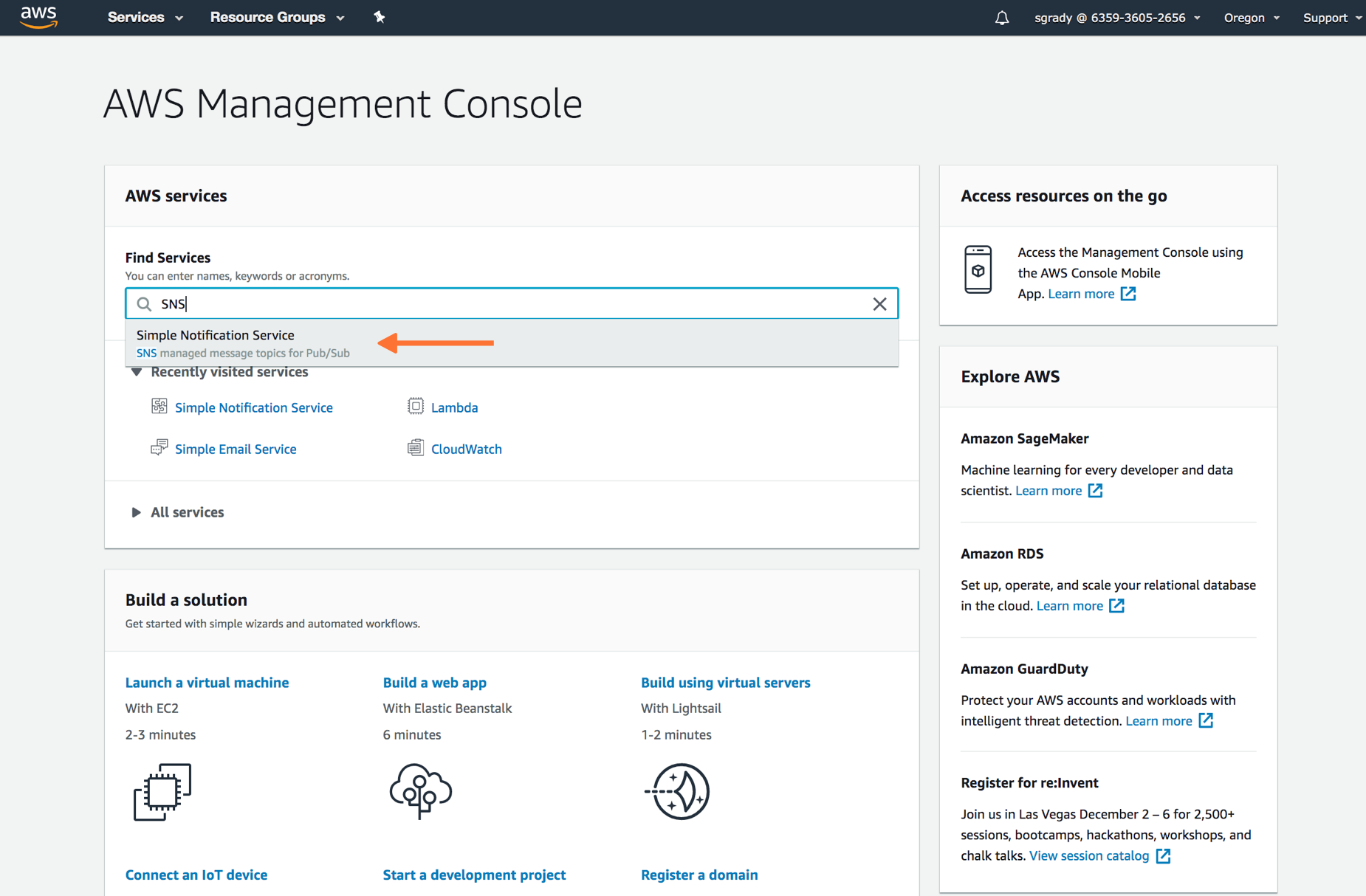

From the main AWS Management Console, go to the SNS control panel.

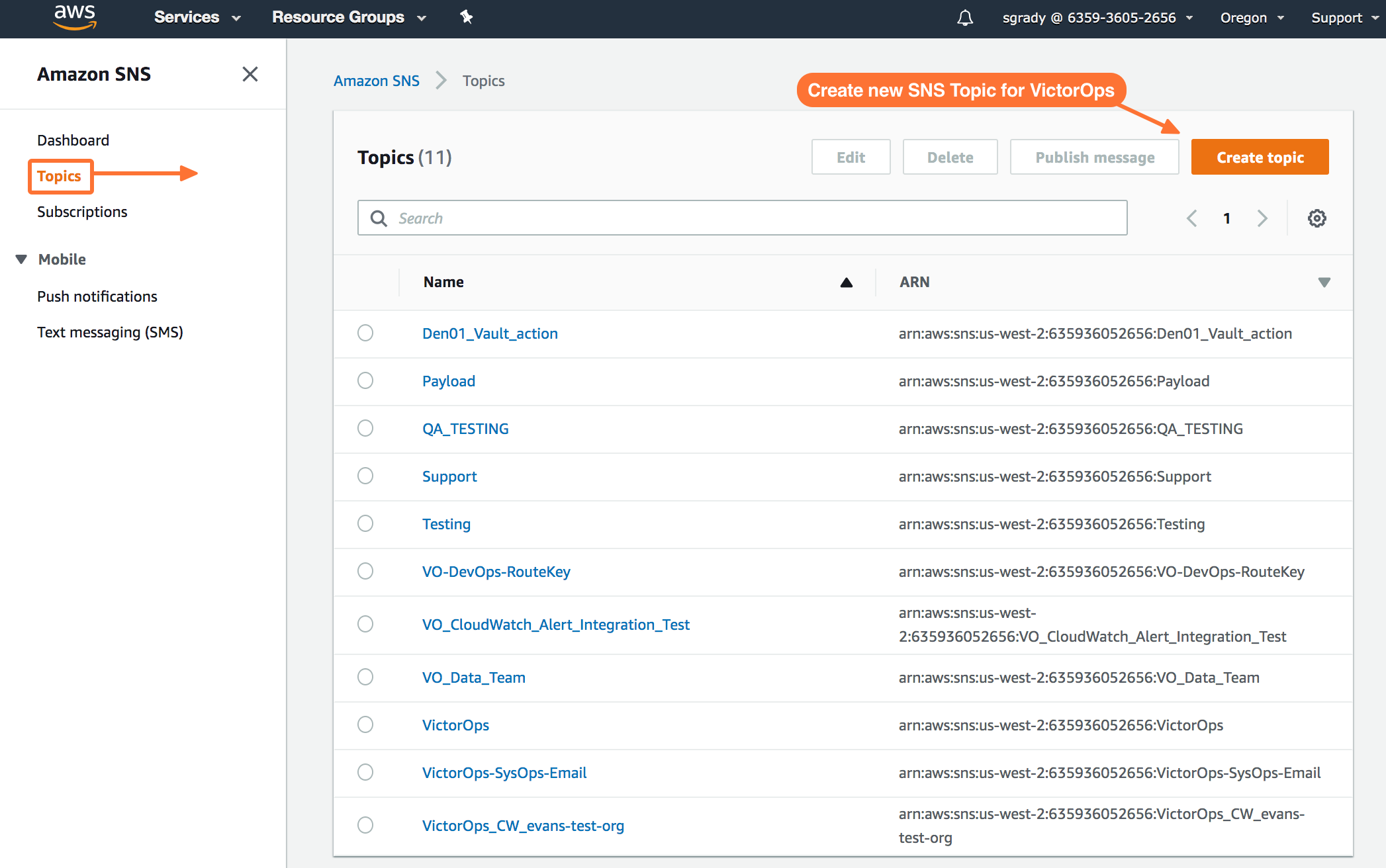

From the SNS dashboard, select Topics, then select Create topic.

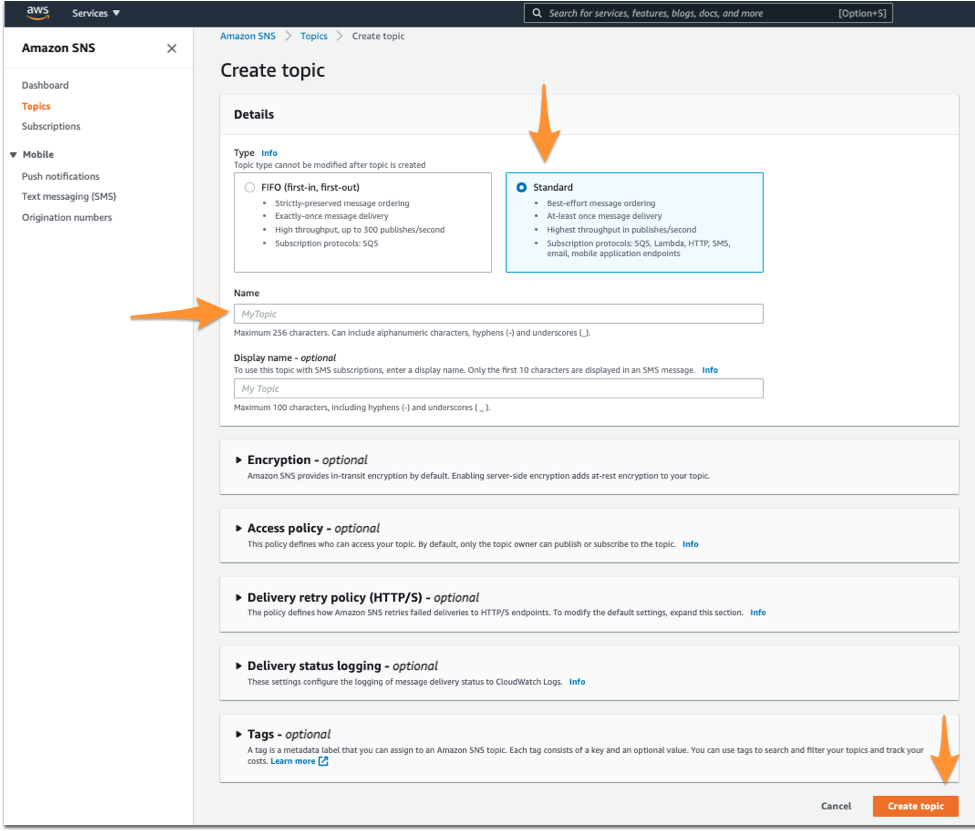

For the type, select Standard. Enter a Name for the topic, then select Create Topic.

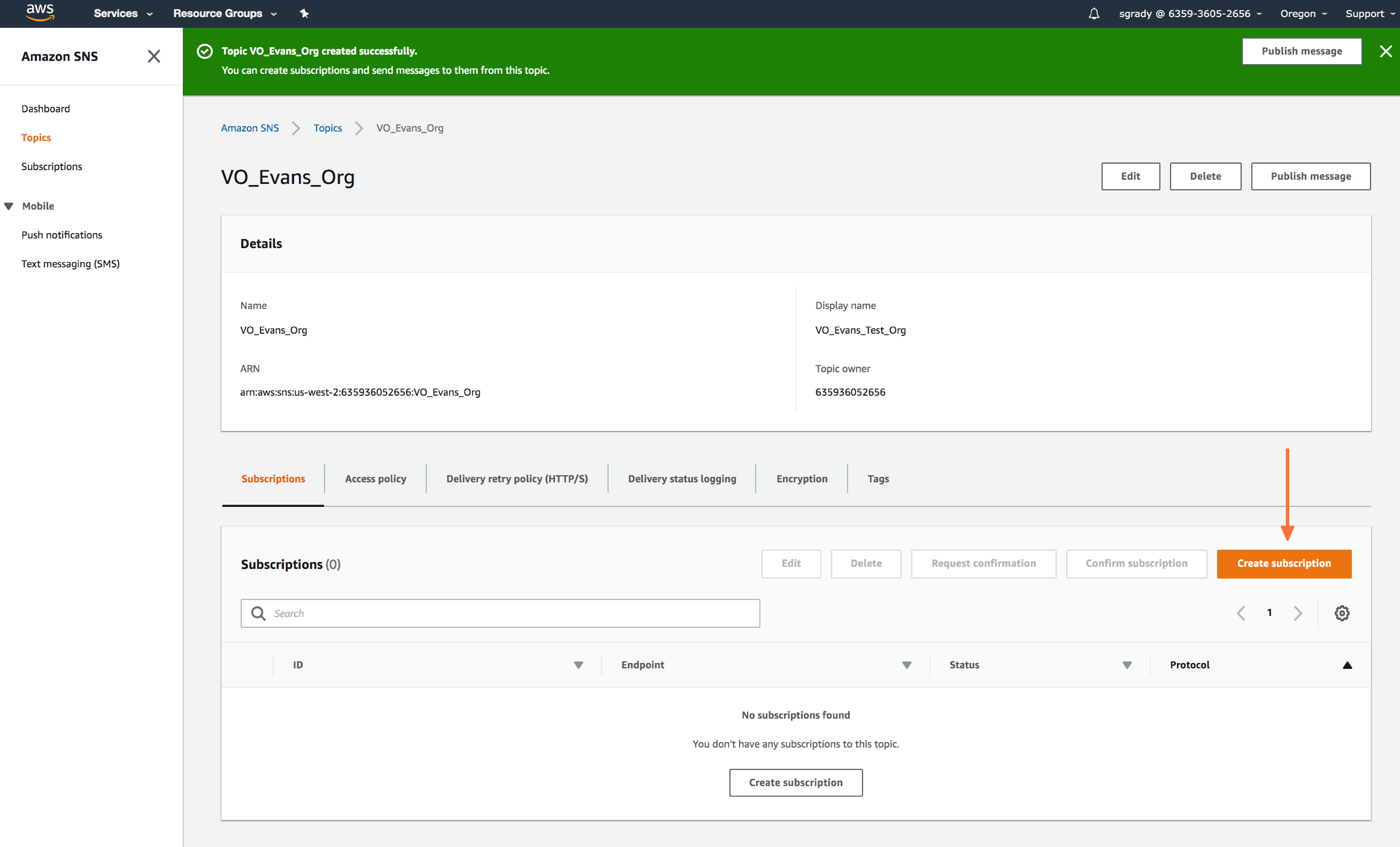

After you create the topic for Splunk On-Call, create a Subscription to the new topic.

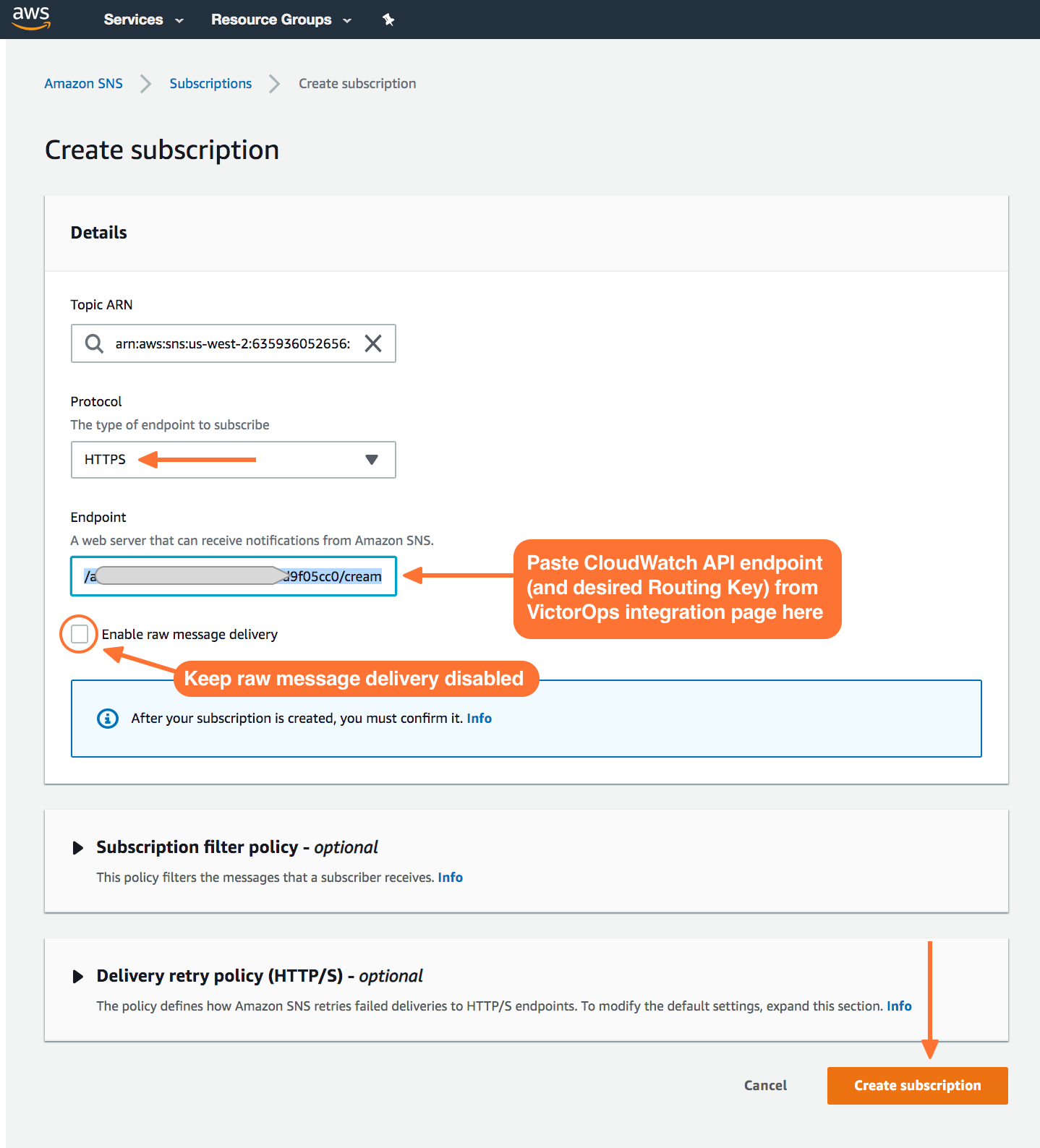

Set the protocol type to HTTPS and paste in your custom endpoint URL, which must include the routing key you want to use.
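For teams that script this step instead of using the console, the subscription can be sketched with the SNS `subscribe` call from boto3. This is a sketch under assumptions: `subscribe_endpoint` is a hypothetical helper, and `sns_client` is expected to be a client such as the one returned by `boto3.client("sns")`, created by the caller.

```python
def subscribe_endpoint(sns_client, topic_arn: str, endpoint_url: str) -> str:
    """Subscribe the Splunk On-Call endpoint to an SNS topic over HTTPS.

    sns_client is assumed to expose the SNS subscribe operation,
    for example the object returned by boto3.client("sns").
    """
    if not endpoint_url.startswith("https://"):
        raise ValueError("the endpoint must use the HTTPS protocol")
    if "$routing_key" in endpoint_url:
        # An unreplaced placeholder means no routing key was supplied.
        raise ValueError("replace $routing_key with a real routing key first")
    response = sns_client.subscribe(
        TopicArn=topic_arn,
        Protocol="https",
        Endpoint=endpoint_url,
    )
    # For HTTPS endpoints this is typically "pending confirmation" at first.
    return response["SubscriptionArn"]
```

The helper refuses a URL that still contains the `$routing_key` placeholder, because such a subscription would not be confirmed.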

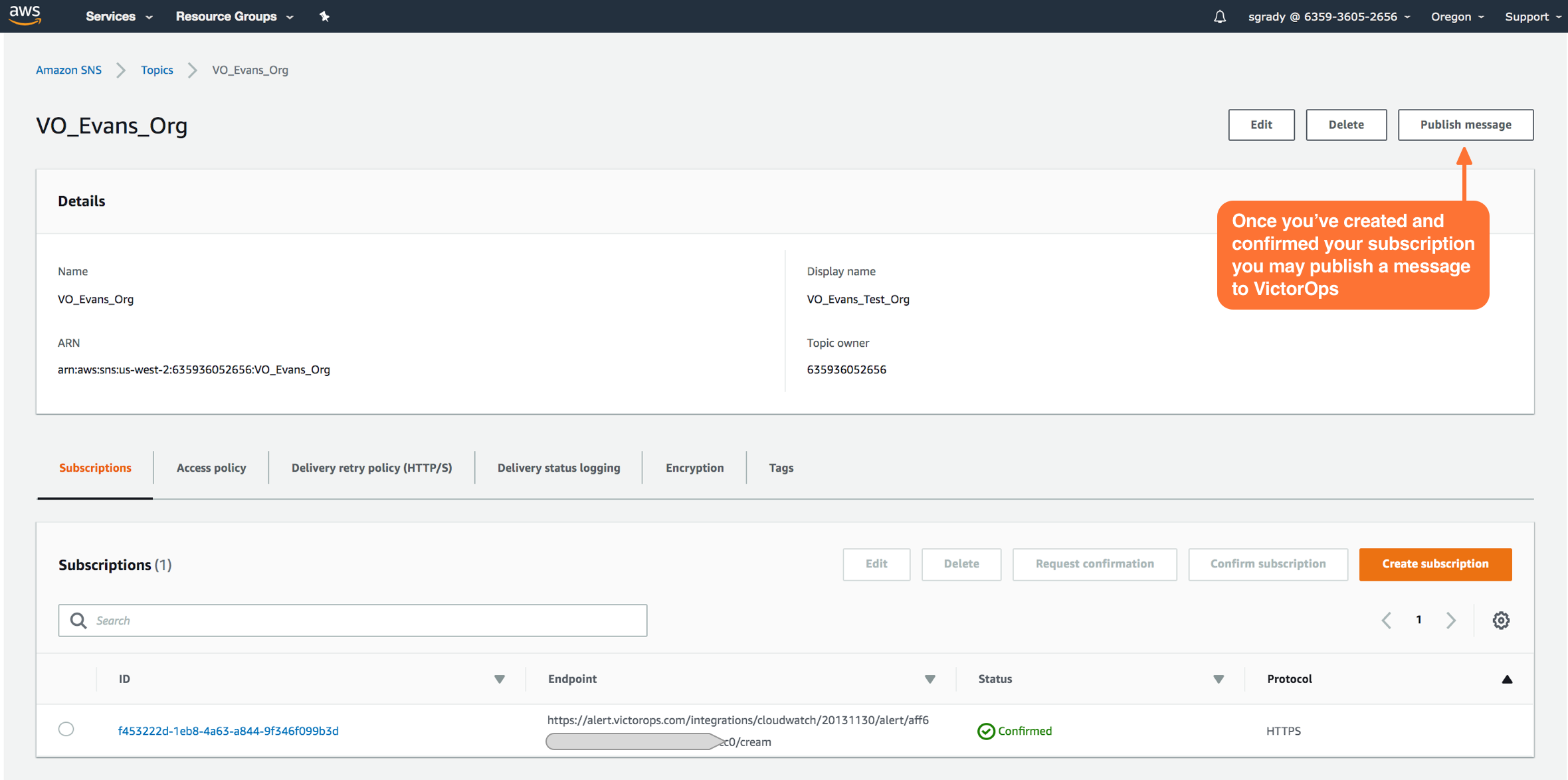

After the subscription is confirmed, select Publish Message.

Test the integration

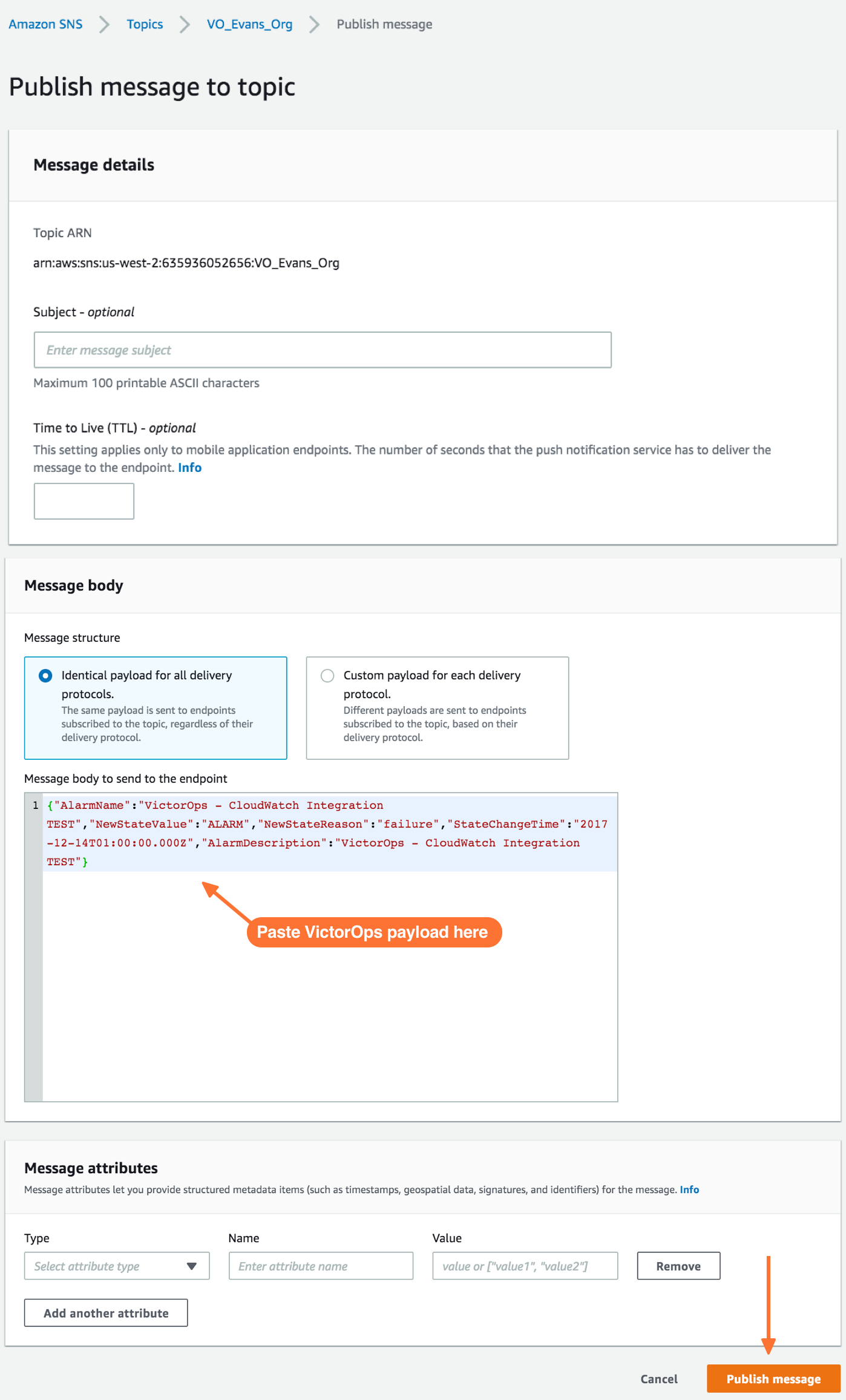

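On the Publish message page, add the following payload to the Message box. Do not change the format. Altering the payload, for example by changing the granularity of StateChangeTime to microseconds or nanoseconds, prevents CloudWatch incidents from being delivered to Splunk On-Call.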

{"AlarmName":"VictorOps - CloudWatch Integration TEST","NewStateValue":"ALARM","NewStateReason":"failure","StateChangeTime":"2017-12-14T01:00:00.000Z","AlarmDescription":"VictorOps - CloudWatch Integration TEST"}
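If you generate the test payload programmatically, the main risk is the timestamp granularity mentioned above. The sketch below builds the same fields and formats StateChangeTime with exactly three fractional digits. The function names are hypothetical, and the assumption that millisecond precision in UTC is the accepted shape comes only from the sample payload in this guide.

```python
import json
from datetime import datetime, timezone


def format_state_change_time(moment: datetime) -> str:
    """Format an aware datetime like the sample: 2017-12-14T01:00:00.000Z."""
    if moment.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    utc = moment.astimezone(timezone.utc)
    millis = utc.microsecond // 1000  # truncate to millisecond granularity
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}Z"


def build_test_payload(moment: datetime, state: str = "ALARM") -> str:
    """Return the test message body as a JSON string."""
    payload = {
        "AlarmName": "VictorOps - CloudWatch Integration TEST",
        "NewStateValue": state,
        "NewStateReason": "failure",
        "StateChangeTime": format_state_change_time(moment),
        "AlarmDescription": "VictorOps - CloudWatch Integration TEST",
    }
    return json.dumps(payload)


if __name__ == "__main__":
    print(build_test_payload(datetime.now(timezone.utc)))
```

Using `json.dumps` also guarantees straight double quotes, which a hand-edited payload copied through a word processor can lose.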

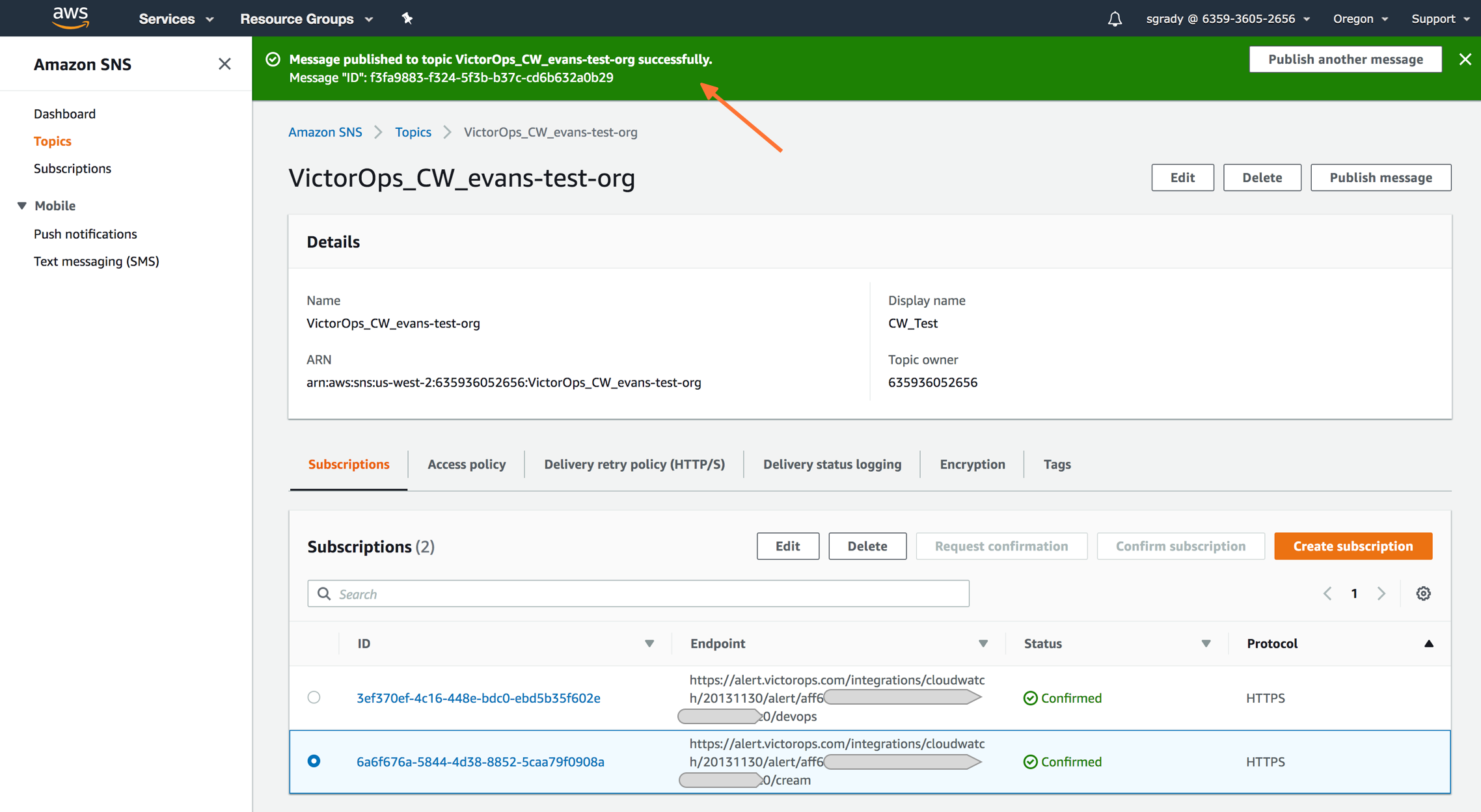

After you publish a message with the required Splunk On-Call payload to the topic, CloudWatch displays a success message in a green bar.

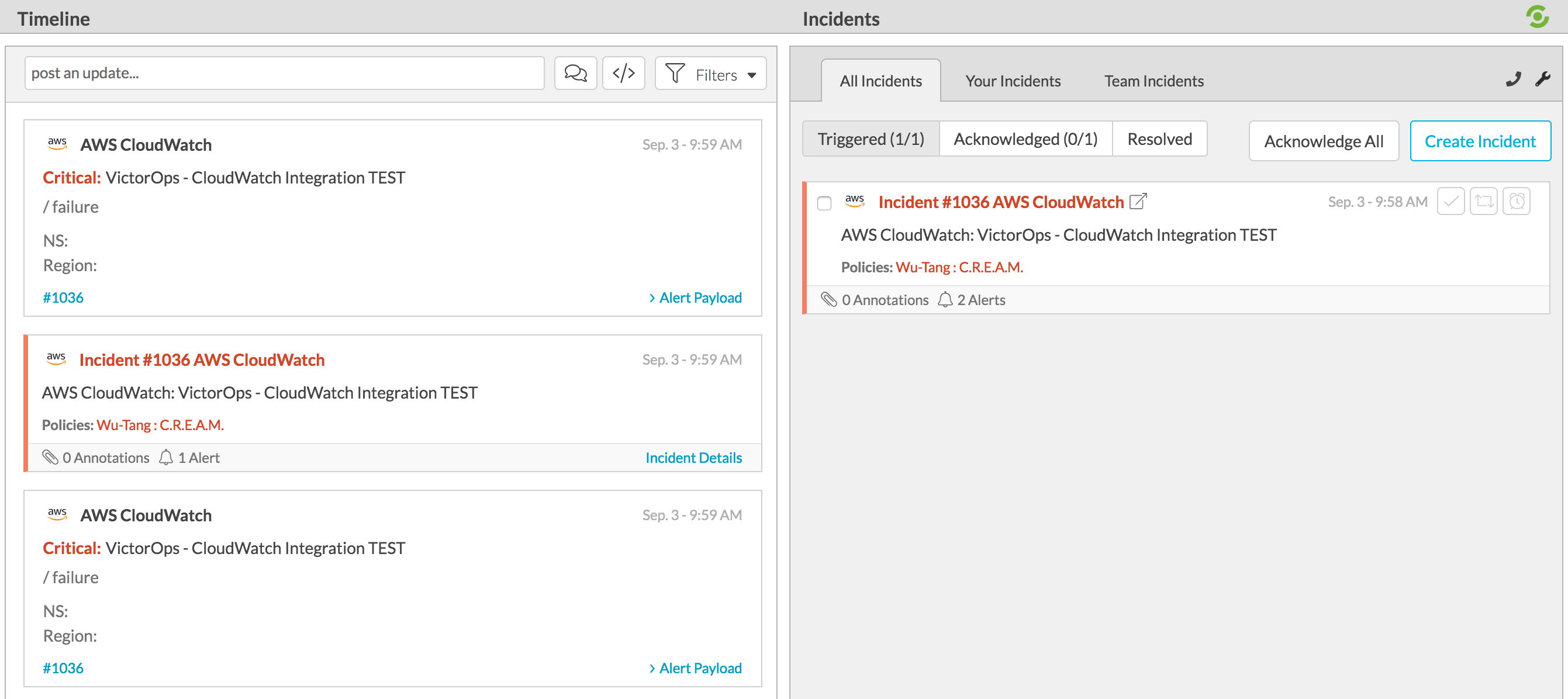

Return to Splunk On-Call and check for the newly created incident.

To send a RECOVERY to Splunk On-Call, replace the value ALARM in the NewStateValue field of the payload with OK, then publish the message again:

"NewStateValue":"OK"
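The trigger-then-recover test can also be scripted. The following sketch publishes the same payload twice, changing only NewStateValue, through the SNS `publish` operation. `with_state` and `publish_state` are hypothetical helpers, and `sns_client` is assumed to be a boto3 SNS client supplied by the caller.

```python
import json

# Same fields as the sample payload in this guide.
BASE_PAYLOAD = {
    "AlarmName": "VictorOps - CloudWatch Integration TEST",
    "NewStateValue": "ALARM",
    "NewStateReason": "failure",
    "StateChangeTime": "2017-12-14T01:00:00.000Z",
    "AlarmDescription": "VictorOps - CloudWatch Integration TEST",
}


def with_state(payload: dict, new_state: str) -> dict:
    """Return a copy of the payload with only NewStateValue changed."""
    if new_state not in ("ALARM", "OK"):
        raise ValueError("NewStateValue must be ALARM or OK")
    updated = dict(payload)
    updated["NewStateValue"] = new_state
    return updated


def publish_state(sns_client, topic_arn: str, payload: dict, new_state: str) -> str:
    """Publish the payload with the given state and return the message body."""
    message = json.dumps(with_state(payload, new_state))
    sns_client.publish(TopicArn=topic_arn, Message=message)
    return message
```

Calling `publish_state` with "ALARM" and then with "OK" reproduces the manual test. AlarmName stays the same in both messages, so the recovery refers to the same alarm as the trigger.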

Auto-recovery alarms from CloudWatch

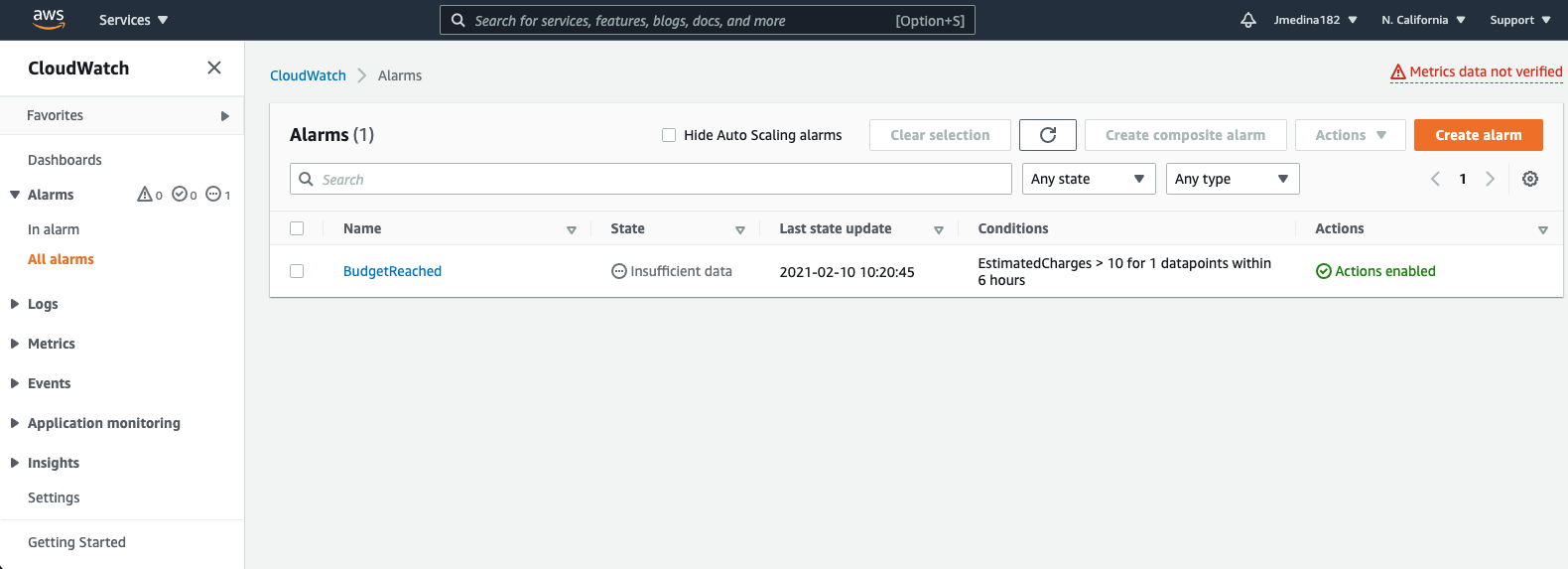

CloudWatch is where you configure the alerts that trigger events and send incidents to Splunk On-Call.

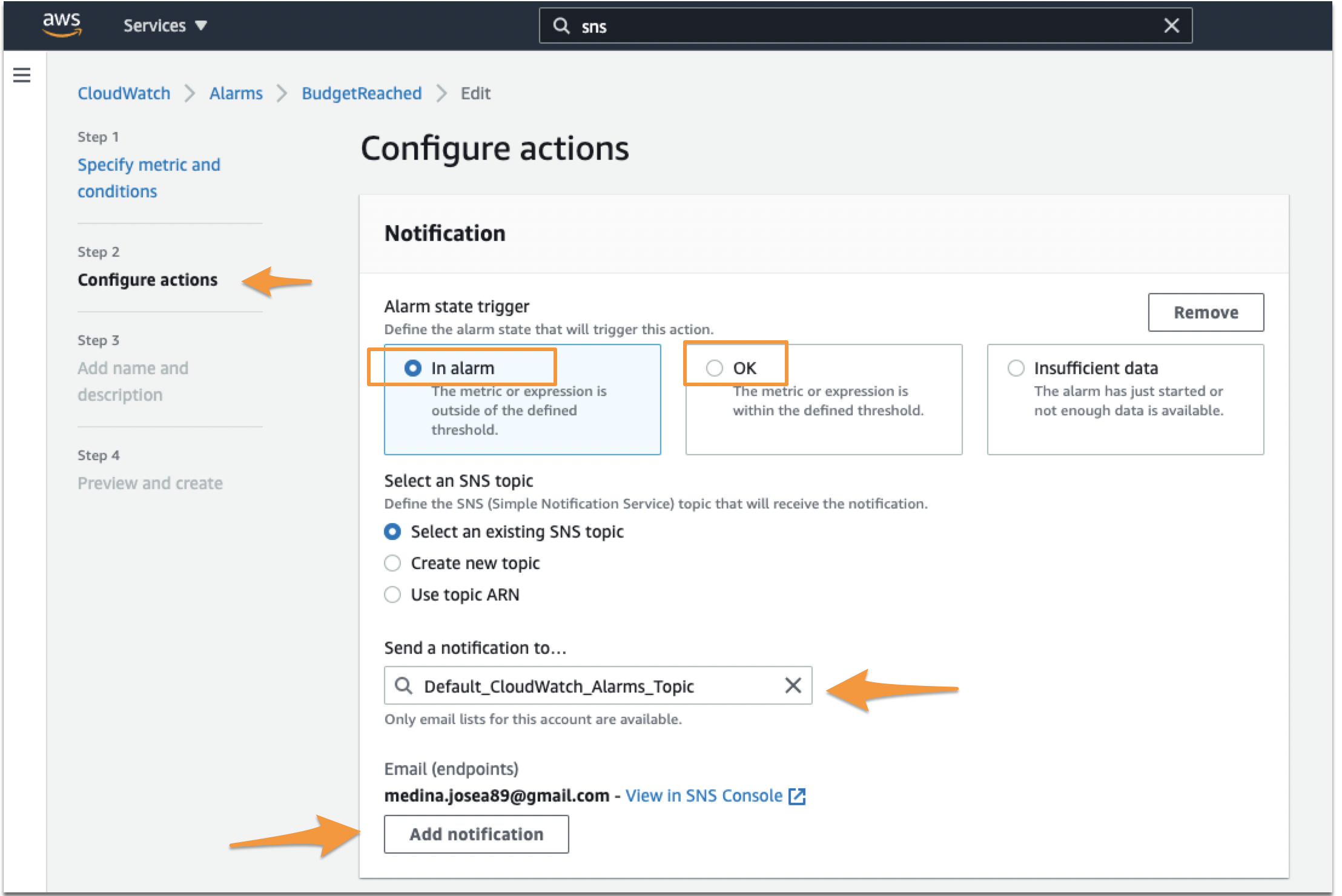

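When you set up a new alarm or edit an existing one, the second step is to configure the notification actions. Configure two separate notification triggers: In Alarm and OK.

Set the first notification to In Alarm, then associate it with the topic you created in SNS. After that, select Add Notification.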

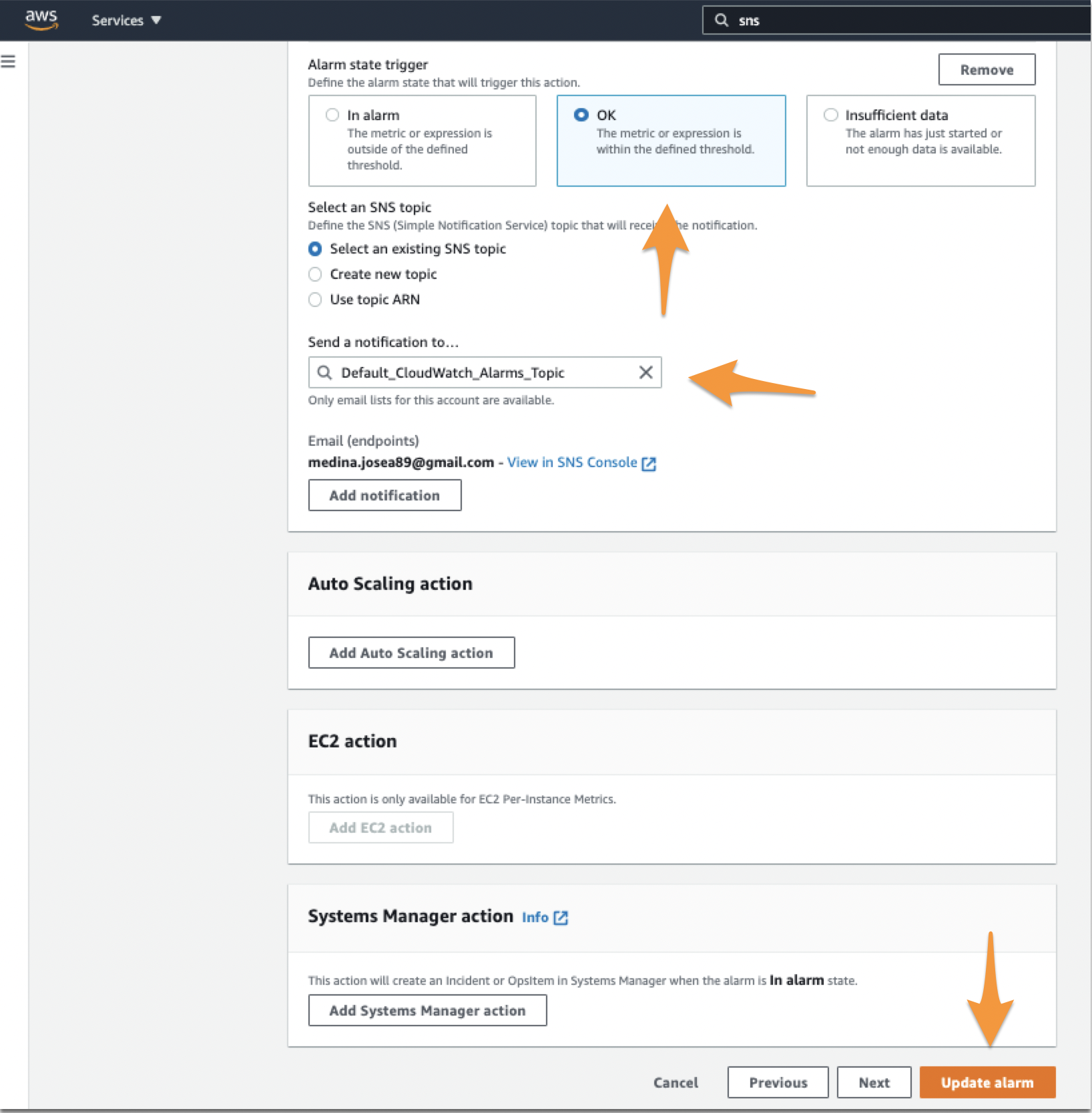

Set the second notification to OK. Check again that you selected the correct topic. At the bottom of the page, select Next or Update Alarm.

When an In Alarm event triggered in AWS resolves itself, an OK (recovery) alert is sent to Splunk On-Call and the incident is resolved there.
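The two notification triggers correspond to the `AlarmActions` and `OKActions` parameters of the CloudWatch `put_metric_alarm` operation. The sketch below only builds the request arguments. The metric, threshold, and period are illustrative assumptions rather than values from this guide, and `build_alarm_request` is a hypothetical helper.

```python
def build_alarm_request(alarm_name: str, topic_arn: str) -> dict:
    """Build put_metric_alarm arguments that notify on both ALARM and OK."""
    return {
        "AlarmName": alarm_name,
        # Illustrative metric settings; replace them with your own monitor.
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Statistic": "Average",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": 80.0,
        "ComparisonOperator": "GreaterThanThreshold",
        # In Alarm notification: opens the incident in Splunk On-Call.
        "AlarmActions": [topic_arn],
        # OK notification: resolves the incident when the alarm recovers.
        "OKActions": [topic_arn],
    }


# Usage, assuming a boto3 CloudWatch client created by the caller:
#   cloudwatch_client.put_metric_alarm(**build_alarm_request(name, arn))
```

Pointing both action lists at the same topic keeps the trigger and the recovery on one SNS subscription, which matches the console steps above.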

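Required fields and custom fields

Advanced users who want to customize the CloudWatch integration need to keep a few requirements in mind. Alerts that reach the CloudWatch alert endpoint must have a basic form. Messages sent from CloudWatch must contain the following three fields: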

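AlarmName: This field can be any string and maps to entity_id. Because entity_id is the field used to link different alerts together, keep a consistent naming convention for each incident.

NewStateValue: This field is populated by CloudWatch and is either ALARM, which triggers a critical incident, or OK, which resolves the incident.

StateChangeTime: This field is also populated by CloudWatch and maps to the timestamp used in Splunk On-Call.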

In addition, you can add custom fields to any message payload as long as the three required fields are present and valid.
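Before publishing a customized payload, a quick local check of the three required fields can catch mistakes early. This is a minimal sketch: `validate_alert` is a hypothetical helper, and it checks only what this guide states, not everything the Splunk On-Call endpoint may enforce.

```python
import json
from typing import List

REQUIRED_FIELDS = ("AlarmName", "NewStateValue", "StateChangeTime")
DOCUMENTED_STATES = ("ALARM", "OK")


def validate_alert(message: str) -> List[str]:
    """Return a list of problems found in the message; empty means it passed."""
    try:
        payload = json.loads(message)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return ["payload must be a JSON object"]

    problems = []
    for field in REQUIRED_FIELDS:
        if not payload.get(field):
            problems.append(f"missing required field: {field}")

    state = payload.get("NewStateValue")
    if state and state not in DOCUMENTED_STATES:
        problems.append(f"NewStateValue not described in this guide: {state}")
    # Any other keys are treated as custom fields and left alone.
    return problems
```

For example, a payload that adds a custom key such as "team" next to the three required fields returns an empty list, while one without AlarmName returns a single "missing required field" entry.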